Kubernetes Foundations:

Best Practices & Most Common Challenges/Solutions

Executive Summary

In the rapidly evolving cloud-native ecosystem, Kubernetes has solidified its position as the cornerstone technology for container orchestration. As organizations adopt Kubernetes, they experience transformative gains in scalability, operational efficiency, and developer agility. These advantages, however, are accompanied by a complex set of challenges that demand well-considered strategies and adherence to industry best practices for effective management and deployment.

This whitepaper serves as a comprehensive resource, offering a deep dive into the core principles of Kubernetes. It meticulously outlines a suite of best practices for its deployment and ongoing management, ensuring that organizations can fully leverage Kubernetes' capabilities. Furthermore, the paper addresses the prevalent challenges faced in the Kubernetes environment, providing pragmatic solutions drawn from real-world implementation experiences.

By synthesizing foundational knowledge, expert recommendations, and practical solutions, this whitepaper aims to equip enterprises with the necessary insights to harness the full potential of Kubernetes, ensuring a smooth and successful journey in the cloud-native realm.

Introduction

Kubernetes, since its inception, has been a transformative force in the technology landscape, fundamentally redefining the deployment and management of applications across distributed systems. Originating from Google's internal systems and cultivated under the Cloud Native Computing Foundation, it has rapidly evolved into an open-source powerhouse with a thriving ecosystem. Kubernetes simplifies the complexity of managing containerized applications by providing a resilient and efficient framework for automating deployment, scaling, and operations across clusters of hosts.

At its core, Kubernetes orchestrates computing, networking, and storage infrastructure on behalf of user workloads. This orchestration allows businesses to focus on application development rather than the underlying infrastructure, thereby accelerating time to market and enabling scalability on demand. It abstracts the hardware layer, presenting a unified view of resources that can be managed consistently, regardless of the deployment environment—be it public cloud, private cloud, or hybrid setups.

Kubernetes also introduces abstractions such as Services, which define a logical set of Pods and a policy by which to access them, and Deployments, which automate the updating and scaling of applications. These abstractions, along with others like Volumes for storage and Secrets for sensitive data, provide a rich set of tools that developers can leverage to build cloud-native applications that are resilient, scalable, and portable.

For organizations looking to adopt Kubernetes, it is crucial to understand these concepts and how they fit together to create a dynamic, self-healing system that can adapt to changing workloads and environments. Mastery of Kubernetes' capabilities enables businesses to deploy complex applications with confidence, manage them at scale, and unlock the full potential of modern cloud computing.

Best Practices for Kubernetes Deployment

Infrastructure as Code (IaC)

In Kubernetes environments, the adoption of Infrastructure as Code (IaC) is not just beneficial; it's essential. Utilizing tools like Terraform or Kubernetes’ own declarative manifests not only ensures reproducibility and consistency but also significantly enhances the speed and reliability of deployments.
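As a minimal sketch of the declarative approach, the Python snippet below builds a Deployment manifest as plain data and serializes it to JSON, a format `kubectl apply -f` accepts alongside YAML. The application name `web`, the image `nginx:1.25`, and the replica count are illustrative assumptions, not values taken from any particular environment.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Return an apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical application name and image, chosen for illustration only.
    manifest = deployment_manifest("web", "nginx:1.25", replicas=3)
    print(json.dumps(manifest, indent=2))
```

Because the manifest is generated from code and kept in version control, the same input always yields the same desired state, which is the reproducibility property described above.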

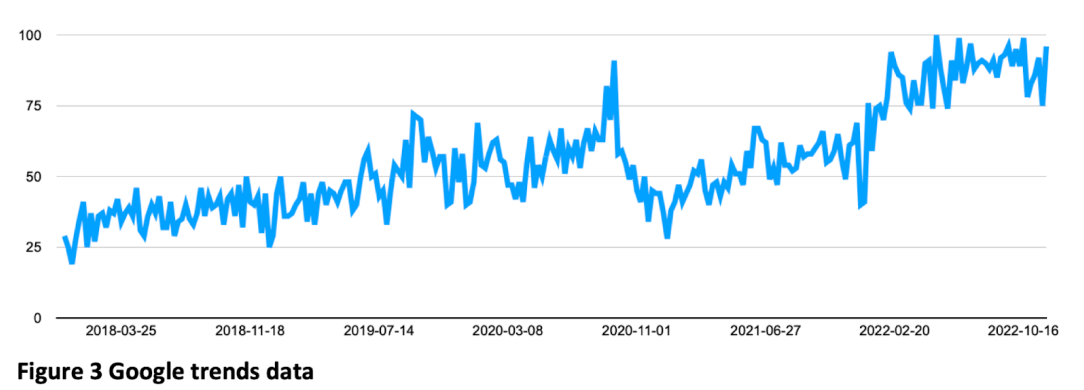

Evidence: According to Google Trends, interest in the topic has been growing steadily. The following graph is compiled from Google Trends (2022) data for the topic “Infrastructure as Code” worldwide over the past 5 years.

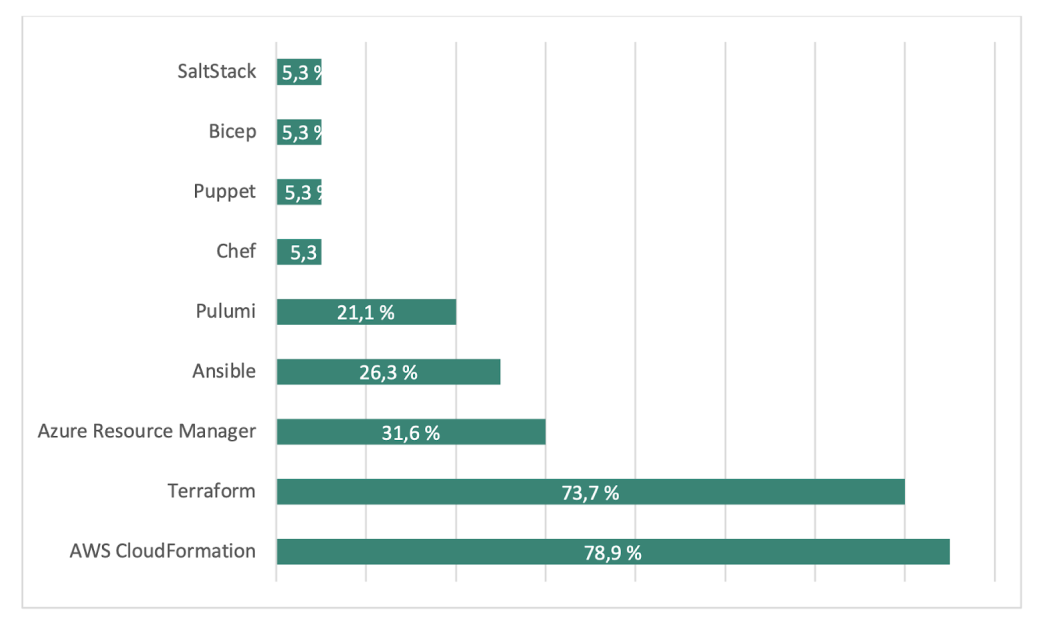

IaC Tools Usage from the survey:

Immutable Infrastructure

Embracing an immutable infrastructure approach, where updates are made by replacing containers rather than altering existing ones, minimizes drift and enhances security. This strategy aligns with the Kubernetes philosophy of declarative configuration and can significantly streamline the update process.

Case Study: “Out-of-the-box, Spinnaker supports sophisticated deployment strategies like release canaries, multiple staging environments, red/black (a.k.a. blue/green) deployments, traffic splitting and easy rollbacks,” wrote Google Product Manager Christopher Sanson in a blog post.

“This is enabled in part by Spinnaker’s use of immutable infrastructure in the cloud, where changes to your application trigger a redeployment of your entire server fleet. Compare this to the traditional approach of configuring updates to running machines, which results in slower, riskier rollouts and hard-to-debug configuration-drift issues.”

https://thenewstack.io/netflix-built-spinnaker-high-velocity-continuous-delivery-platform/

Also, Netflix, as an early adopter of machine images for deployment, utilizes a suite of tools for managing systems on Amazon Web Services using immutable infrastructure. It provides insights into important Netflix Open Source Software (OSS) projects, basic configurations, and usage patterns that have enabled Netflix to manage thousands of deployments daily without compromising security or consistency.

https://www.slideshare.net/AmerAther/netflix-massively-scalable-highly-available-immutable-infrastructure

Continuous Integration / Continuous Deployment (CI/CD)

The implementation of CI/CD pipelines is critical for automating and streamlining deployment processes. Tools such as Jenkins, GitLab CI, and Spinnaker play a pivotal role in achieving high deployment frequencies and rapid iteration cycles.

Proof: Jez Humble and David Farley, in their seminal work "Continuous Delivery," highlight that CI/CD practices can lead to a reduction in time-to-market, underscoring the efficiency gains from automated pipelines. https://continuousdelivery.com/about/

Security Best Practices

Security within Kubernetes should be a multi-layered approach. Enforcing role-based access control (RBAC), managing sensitive data through secrets, and proactive vulnerability scanning are non-negotiable practices.
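To illustrate the RBAC layer, here is a hedged sketch that builds a namespaced read-only Role and a RoleBinding granting it to one user. The role name `pod-reader`, the namespace, and the user name are hypothetical; the `rbac.authorization.k8s.io/v1` field layout follows the Kubernetes RBAC API.

```python
def read_only_role(namespace: str, name: str = "pod-reader") -> dict:
    """Role that allows reading pods in one namespace and nothing else."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": name},
        "rules": [
            {
                "apiGroups": [""],  # "" denotes the core API group
                "resources": ["pods"],
                "verbs": ["get", "list", "watch"],
            }
        ],
    }


def bind_role(namespace: str, role_name: str, user: str) -> dict:
    """RoleBinding that grants the named Role to a single user."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"namespace": namespace, "name": f"{role_name}-binding"},
        "subjects": [
            {"kind": "User", "name": user, "apiGroup": "rbac.authorization.k8s.io"}
        ],
        "roleRef": {
            "kind": "Role",
            "name": role_name,
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }


if __name__ == "__main__":
    # Hypothetical namespace and user, for illustration only.
    role = read_only_role("team-a")
    binding = bind_role("team-a", role["metadata"]["name"], "jane")
    print(role["rules"][0]["verbs"], binding["roleRef"]["name"])
```

Granting only the verbs a subject needs, scoped to a single namespace, is one way to apply least privilege in practice.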

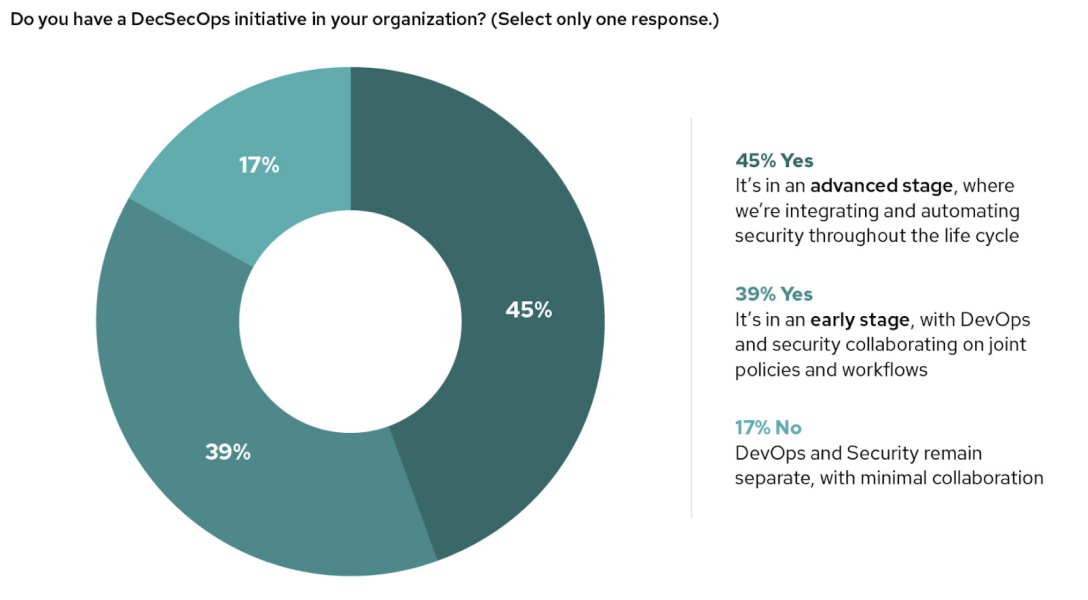

Data Point: A Red Hat survey of 500 DevOps professionals on Kubernetes adoption and security reported the findings shown in the accompanying figure.

Observability and Monitoring

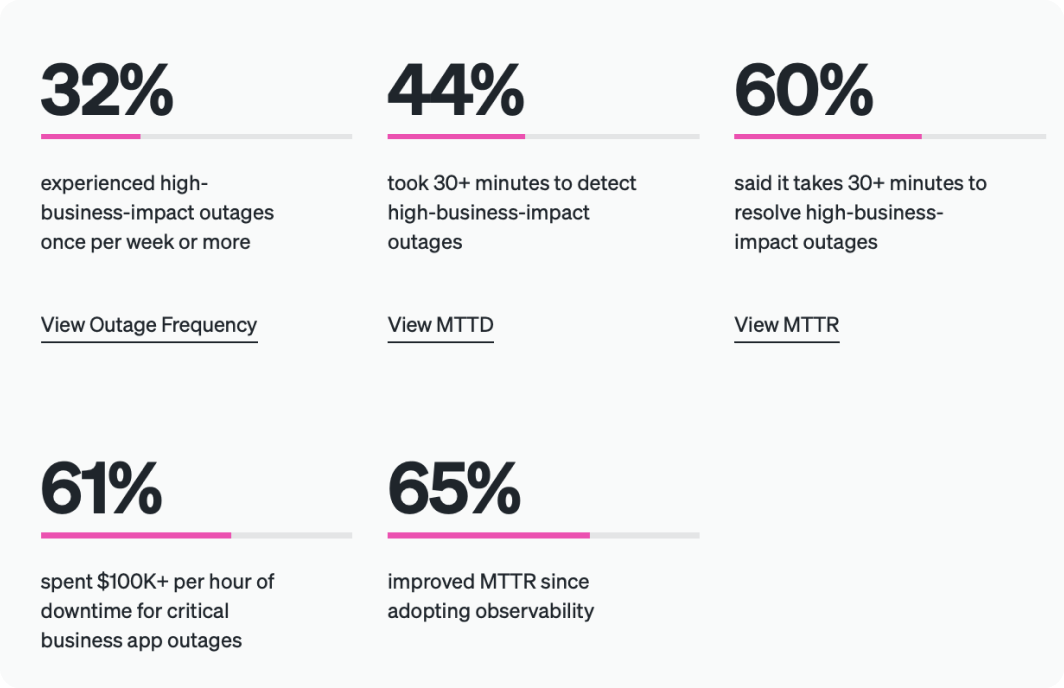

Incorporating comprehensive observability and monitoring solutions like Prometheus and Grafana is crucial for maintaining insight into the health and performance of Kubernetes clusters.

Real-World Impact: According to a report by New Relic, incorporating observability tools can improve the mean time to resolution (MTTR), making systems more reliable and responsive.

Performance Optimization

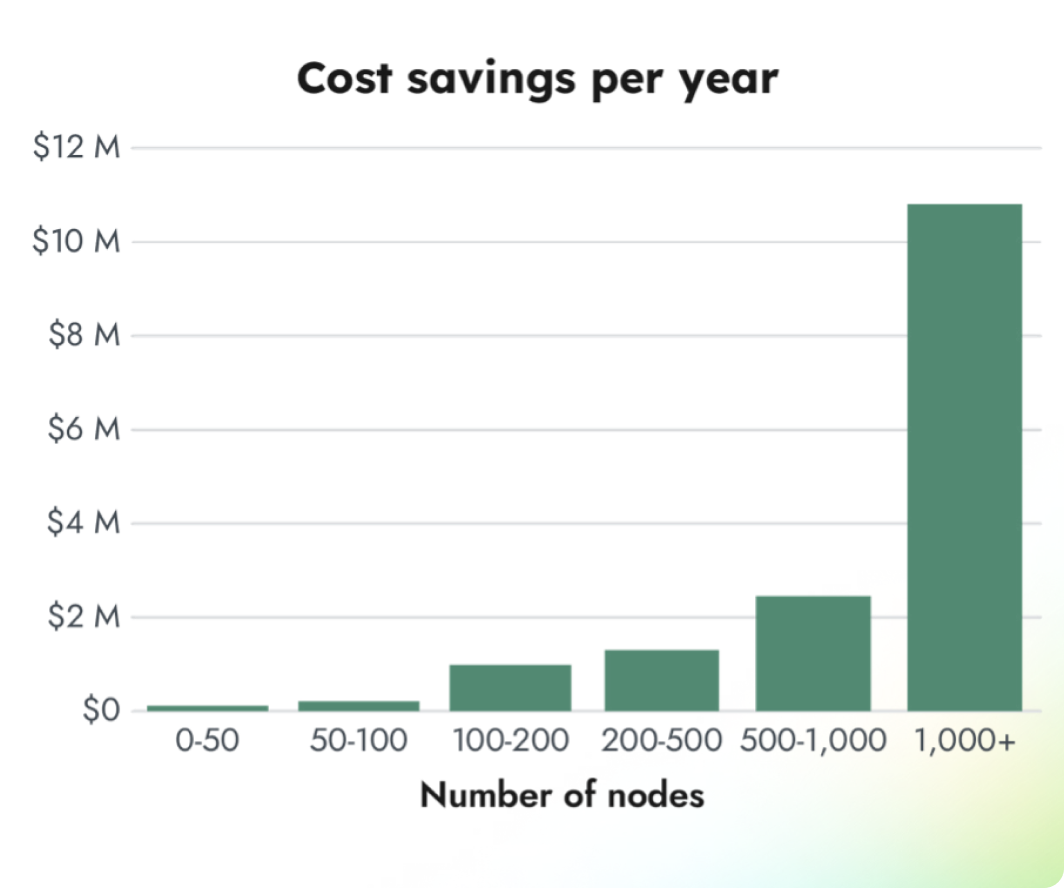

Regularly right-sizing workloads with tools like Kubernetes’ Vertical Pod Autoscaler and Horizontal Pod Autoscaler helps ensure that applications run with the resources they actually need, preventing over-provisioning and reducing costs.
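The core scaling rule documented for the Horizontal Pod Autoscaler can be sketched in a few lines of Python. This is a simplified illustration: it covers only the ratio calculation and the default 10% tolerance, and leaves out minimum and maximum replica bounds, stabilization windows, and the handling of pods that are not ready. The function and parameter names are our own.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Apply desired = ceil(current * currentMetric / targetMetric).

    No scaling happens when the ratio is within `tolerance` of 1.0.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


if __name__ == "__main__":
    # 4 replicas at 90% average CPU against a 60% target: scale out.
    print(desired_replicas(4, 90, 60))
    # 10 replicas at 30% against a 60% target: scale in.
    print(desired_replicas(10, 30, 60))
```

With a 60% target, four replicas averaging 90% utilization give a ratio of 1.5 and therefore six replicas, while a reading of 63% falls inside the tolerance band and leaves the count unchanged.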

Industry Insight: A Gartner report suggests that through effective resource management and autoscaling, organizations can expect substantial cost savings on cloud resources, highlighting the financial impact of performance optimization.

https://www.gartner.com/en/documents/3982411

By integrating these best practices with real-world evidence and data points, organizations can not only follow the path of successful Kubernetes deployments but also build a robust, secure, and efficient infrastructure that is ready for the demands of modern applications.

Most Common Challenges and Solutions

Complexity of Management

Kubernetes' complex components and architecture require significant expertise for effective management.

Solutions

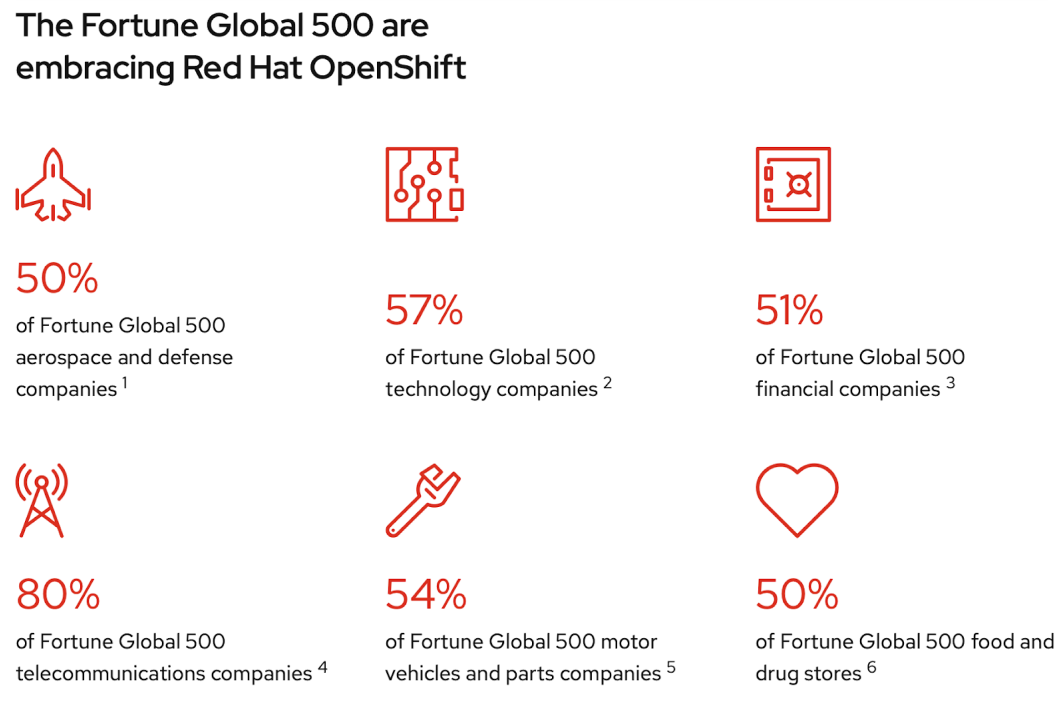

Adopting Kubernetes Management Platforms like OpenShift or Rancher:

These platforms provide additional layers of abstraction and management tools on top of Kubernetes, simplifying various tasks like deployment, scaling, and monitoring. OpenShift, developed by Red Hat, and Rancher, now part of SUSE, are known for their user-friendly interfaces and additional features that make Kubernetes easier to manage, especially for organizations that might not have deep Kubernetes expertise.

Investing in Comprehensive Training Programs for IT Staff:

Kubernetes is complex, and effective management often requires specialized knowledge. Training programs can significantly enhance the skills and understanding of IT staff regarding Kubernetes. This investment in training helps in building a knowledgeable in-house team capable of efficiently managing Kubernetes environments. It reduces reliance on external expertise and can improve the overall effectiveness of Kubernetes deployment and management.

Supporting Data

The Fortune Global 500 are embracing Red Hat OpenShift

“Employers modernizes, improves 3-year sales by 40%”

https://www.redhat.com/en/success-stories/employers

More OpenShift adoption success stories:

https://www.redhat.com/en/success-stories

Networking Intricacies

Kubernetes networking involves complex elements such as services, ingresses, and network policies, which can be challenging to configure and manage.

Solutions

Utilizing CNI-Compliant Network Plugins like Calico or Weave

The Container Network Interface (CNI) is a standard that allows for the integration of different network solutions with container orchestration systems like Kubernetes. Network plugins that comply with CNI, such as Calico and Weave, can simplify and streamline network management in Kubernetes.

- Calico, for instance, provides advanced networking features, network policy enforcement, and is known for its strong security capabilities. It supports a variety of deployment options and is flexible enough to cater to diverse networking requirements.

- Weave Net is another popular choice known for its simplicity and ease of use, especially in scenarios where cross-host communication is a priority.

Ensuring Security and Efficiency

These CNI-compliant tools not only simplify the configuration and management of complex networking elements in Kubernetes but also enhance security and performance. They allow administrators to define fine-grained network policies and ensure efficient communication between pods and services. This is particularly important in multi-tenant environments or in scenarios where strict network isolation and security are critical.
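A fine-grained network policy of the kind described here might look like the following sketch, which builds a `networking.k8s.io/v1` NetworkPolicy that admits traffic to one application only from pods carrying a specific label. The `app` label key, the application names, and the port are assumptions made for illustration; the policy only takes effect when the cluster's network plugin enforces NetworkPolicy, as Calico does.

```python
def allow_ingress_from(namespace: str, target_app: str,
                       client_app: str, port: int) -> dict:
    """NetworkPolicy letting only `client_app` pods reach `target_app` on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {
            "name": f"allow-{client_app}-to-{target_app}",
            "namespace": namespace,
        },
        "spec": {
            # Pods the policy applies to.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only pods with this label in the same namespace may connect.
                    "from": [{"podSelector": {"matchLabels": {"app": client_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical namespace, labels, and port.
    policy = allow_ingress_from("shop", "db", "api", 5432)
    print(policy["metadata"]["name"])
```

Once a pod is selected by any ingress policy, traffic not explicitly allowed is dropped, which is what gives tenants isolation from one another.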

Industry Example

According to a case study by Tigera, Calico has helped numerous Kubernetes deployments to simplify their networking, providing scalable and secure network infrastructure.

https://www.tigera.io/upwork-case-study/

More case studies and success stories:

https://www.tigera.io/types/case-study/

Monitoring and Logging

In a distributed system, obtaining insights and diagnosing issues can be complex due to the sheer volume and dynamism of the data involved.

Solutions

1. Implementing Robust Monitoring and Logging Tools:

In the context of Kubernetes and distributed systems, monitoring and logging are crucial for gaining visibility into the system's performance and health. The tools described below are widely recognized for their effectiveness in these areas.

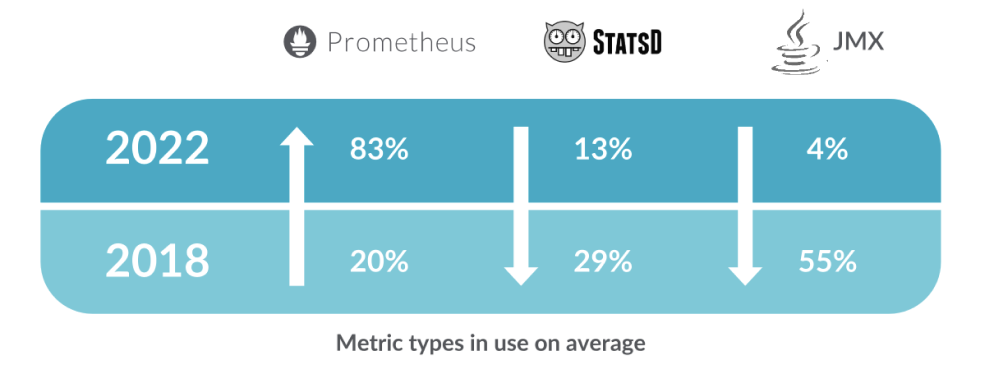

Prometheus for Metrics:

Prometheus is a powerful open-source monitoring solution that is particularly well-suited for collecting and processing metrics in a Kubernetes environment. It's designed for reliability and scalability, offering features like multi-dimensional data modeling, a flexible query language, and integration with Kubernetes for discovering and scraping metrics.

“Of the three mainstay solutions, JMX, StatsD, and Prometheus, it was Prometheus that gained for the third year in a row. Year‑over‑year, Prometheus metric use increased to 83% compared to 62% last year.”
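To make the multi-dimensional data model concrete, the sketch below renders a counter in the Prometheus text exposition format, where each distinct combination of labels is its own time series. The metric name, labels, and values are made up for illustration, and the helper is simplified: it does not escape special characters in label values, which a production exporter (for example one built on an official client library) would handle.

```python
def render_counter(name: str, help_text: str, samples: dict) -> str:
    """Render a counter in Prometheus text exposition format.

    `samples` maps a tuple of (label, value) pairs to the sample value.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples.items():
        label_str = ",".join(f'{k}="{v}"' for k, v in labels)
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical metric with two label dimensions.
    print(render_counter(
        "http_requests_total",
        "Total HTTP requests.",
        {
            (("method", "GET"), ("code", "200")): 1027,
            (("method", "POST"), ("code", "500")): 3,
        },
    ))
```

Prometheus scrapes text like this from an HTTP endpoint on each target, and the query language can then aggregate or filter across the `method` and `code` dimensions.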

ELK Stack or Fluentd for Logging:

The ELK Stack (Elasticsearch, Logstash, Kibana) is a popular choice for log processing and analysis. Elasticsearch provides a scalable search engine, Logstash is used for log aggregation and processing, and Kibana is used for visualizing and querying log data.

Fluentd is another efficient option for log data collection and aggregation. It's known for its lightweight and plugin-based architecture, making it highly customizable and well-suited for Kubernetes environments.

2. Providing Necessary Visibility into System Performance and Health:

These tools collectively enable comprehensive monitoring and logging capabilities. They help in detecting anomalies, understanding system behavior, and diagnosing issues in a distributed system. This is especially important in Kubernetes, where the dynamic nature of containerized applications and microservices architecture can make troubleshooting challenging.

Evidence:

Tigera discusses the top 5 Kubernetes monitoring tools in its guide:

https://www.tigera.io/learn/guides/kubernetes-monitoring/

Data Persistence

The ephemeral nature of containers presents challenges for persisting data, which is crucial for stateful applications.

Solutions

1. Leveraging Kubernetes Persistent Volumes:

Persistent Volumes (PVs) in Kubernetes are an effective way to manage data persistence. They provide a method for storage in Kubernetes that outlives the lifecycle of individual pods. This means that even if a pod is deleted or restarted, the data stored in the Persistent Volume remains intact. PVs can be provisioned statically or dynamically and can be connected to a variety of external storage systems, such as cloud-based storage services or on-premises storage solutions.

https://kubernetes.io/docs/concepts/storage/persistent-volumes/
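In practice, a pod requests persistent storage through a PersistentVolumeClaim, which Kubernetes binds to a matching Persistent Volume. The sketch below builds a claim and a pod that mounts it; the claim name, size, image, and mount path are illustrative assumptions, and the optional storage class name depends entirely on what a given cluster offers.

```python
from typing import Optional


def pvc_manifest(name: str, size: str, storage_class: Optional[str] = None) -> dict:
    """PersistentVolumeClaim requesting `size` of single-node read-write storage."""
    spec = {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": size}},
    }
    if storage_class is not None:
        # Omitting this field lets the cluster's default StorageClass apply.
        spec["storageClassName"] = storage_class
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": spec,
    }


def pod_using_claim(pod_name: str, image: str, claim_name: str, mount_path: str) -> dict:
    """Pod that mounts the claimed volume at `mount_path`."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [
                {
                    "name": pod_name,
                    "image": image,
                    "volumeMounts": [{"name": "data", "mountPath": mount_path}],
                }
            ],
            "volumes": [
                {"name": "data", "persistentVolumeClaim": {"claimName": claim_name}}
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical names, size, image, and path.
    claim = pvc_manifest("db-data", "10Gi")
    pod = pod_using_claim("db", "postgres:16", "db-data", "/var/lib/postgresql/data")
    print(claim["spec"], pod["spec"]["volumes"])
```

Deleting and recreating the pod leaves the claim, and therefore the data, in place.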

2. Using StatefulSets for Stateful Applications

StatefulSets are a Kubernetes resource designed specifically for stateful applications. They manage the deployment and scaling of a set of Pods and provide guarantees about the ordering and uniqueness of these Pods. Unlike Deployments, StatefulSets maintain a sticky identity for each of their Pods. These Pods are created from the same specification, but are not interchangeable: each has a persistent identifier that it maintains across any rescheduling. When used in combination with Persistent Volumes, StatefulSets ensure that the same volume is reattached to the pod even after rescheduling, which is crucial for applications that need consistent and persistent data storage.
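The sticky identity can be seen in the naming scheme itself. Each pod is named after the StatefulSet plus an ordinal, and each claim created from a volume claim template is named after the template plus the pod name. The short sketch below derives those names; the StatefulSet name `web` and the template name `www` are illustrative.

```python
def statefulset_identities(set_name: str, claim_template: str, replicas: int) -> list:
    """Return (pod name, claim name) pairs for a StatefulSet with ordinals from 0."""
    return [
        (f"{set_name}-{i}", f"{claim_template}-{set_name}-{i}")
        for i in range(replicas)
    ]


if __name__ == "__main__":
    for pod, claim in statefulset_identities("web", "www", 3):
        print(pod, "->", claim)
```

Because pod `web-1` always maps to claim `www-web-1`, a rescheduled pod comes back with the same name and the same volume, regardless of which node it lands on.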

Resource Management

Optimal resource allocation is essential to prevent resource contention and to ensure the efficient operation of applications.

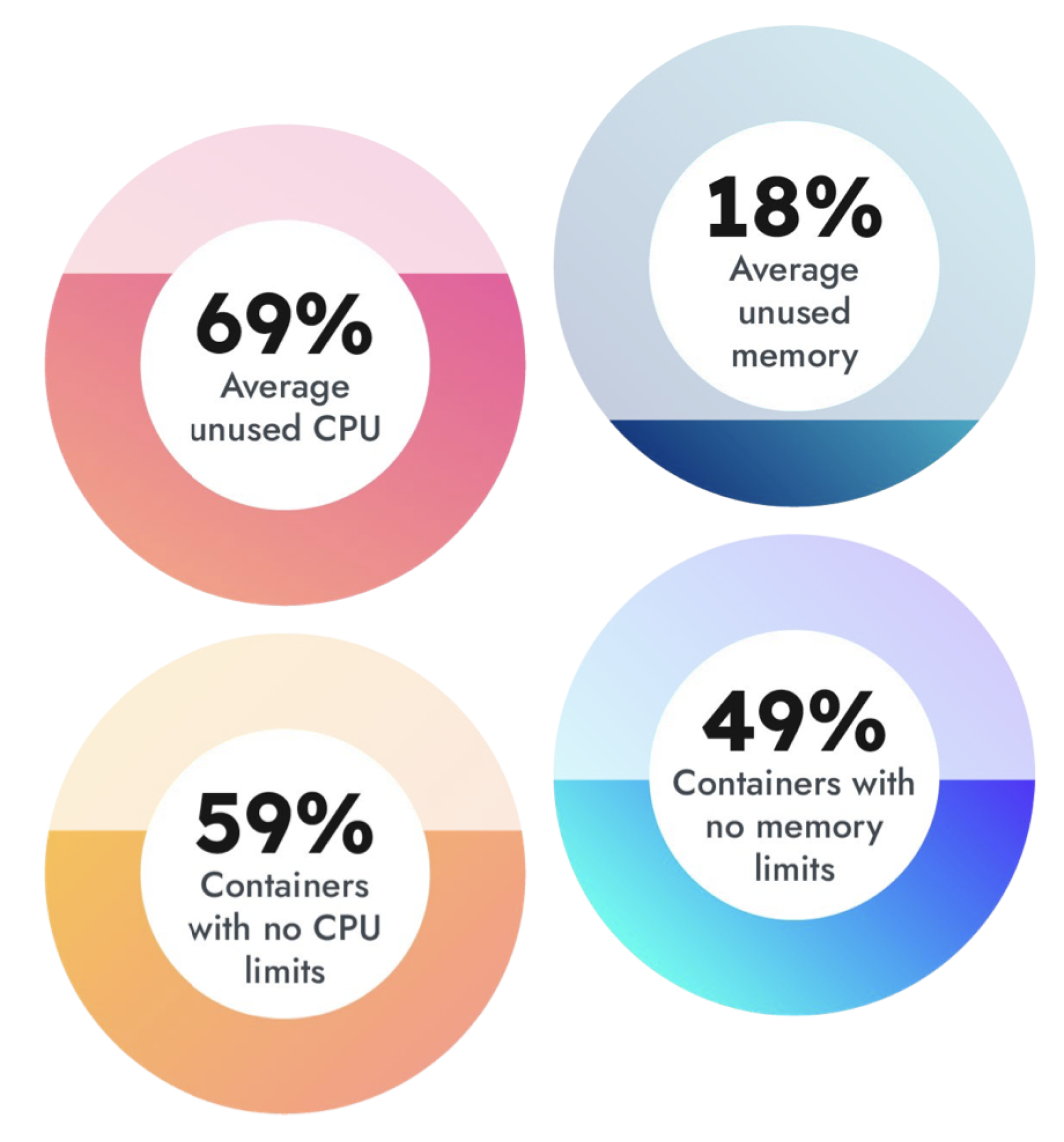

According to the 2023 Cloud-Native Security & Usage Report by Sysdig, the study “found that 59% of containers had no CPU limits defined and 49% had no memory limits defined. In terms of unused resources, an average of 69% of requested CPU cores and 18% of requested memory were unused. Digging deeper into container efficiency, our internal reports indicate that on average, 69% of containers are using fewer than 25% of requested CPU resources.”

https://sysdig.com/content/c/pf-2023-cloud-native-security-and-usage-report?x=u_WFRi&mkt_tok=MDY3LVFaVC04ODEAAAGPZpqRrFnVHX5v01nwakvyf9w66iB7hGrq-BSnvAaGpnn4MzC8jnPny8GWZ68lTi6f7nY-9GOt_r_l-Tia8irPu7k2H6Er_zpgkhKs0MQIeMUy&_pfses=D2ZDfJVRAcCEPgLLorZygZYH

Solutions

Kubernetes Resource Quotas:

Resource Quotas in Kubernetes are used to limit the overall consumption of resources (like CPU, memory, and storage) in a given namespace. This is crucial in multi-tenant environments where different teams or projects share the same Kubernetes cluster. By setting resource quotas, administrators can prevent any single team or project from consuming more than its fair share of resources, thus avoiding resource contention and ensuring a more balanced and efficient use of the cluster resources.

Limit Ranges:

Limit Ranges are another Kubernetes feature that complements Resource Quotas. While Resource Quotas set constraints on resource usage at the namespace level, Limit Ranges apply more granular controls at the pod or container level. They allow administrators to specify the minimum and maximum amount of resources (CPU and memory) that can be requested or consumed by individual pods or containers within a namespace. This helps in preventing a single pod or container from using an excessive amount of resources, which could potentially impact other applications running in the same namespace.
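The two mechanisms can be sketched side by side. The snippet below builds a namespace-level ResourceQuota and a container-level LimitRange as plain manifests; all object names and quantities are illustrative assumptions and should be sized from observed usage in a real cluster.

```python
def resource_quota(namespace: str, cpu: str, memory: str, pods: int) -> dict:
    """ResourceQuota capping total requests and pod count in one namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "team-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                "pods": str(pods),
            }
        },
    }


def limit_range(namespace: str) -> dict:
    """LimitRange setting per-container bounds and defaults in one namespace."""
    return {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": "container-limits", "namespace": namespace},
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    "min": {"cpu": "100m", "memory": "64Mi"},
                    "max": {"cpu": "2", "memory": "1Gi"},
                    # Applied as limits when a container declares none.
                    "default": {"cpu": "500m", "memory": "256Mi"},
                    # Applied as requests when a container declares none.
                    "defaultRequest": {"cpu": "250m", "memory": "128Mi"},
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical namespace and quantities.
    print(resource_quota("team-a", "8", "16Gi", 20)["spec"]["hard"])
    print(limit_range("team-a")["spec"]["limits"][0]["default"])
```

The `default` and `defaultRequest` entries matter for the situation the Sysdig figures describe: containers deployed without explicit limits still receive sensible values at admission time.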

By addressing these challenges with proven solutions and supporting data, organizations can navigate the complexities of Kubernetes and optimize their cloud-native infrastructure for better performance, reliability, and cost-efficiency.

Advance Your Kubernetes Skills with KodeKloud's Specialized Learning Path

If you're looking to deepen your understanding of Kubernetes, KodeKloud offers an advanced learning path tailored for you. As you progress beyond the fundamentals, our courses are designed to equip you with the expertise needed for complex Kubernetes environments.

- 180+ Courses

- 1280 Hands-on Labs

- 75+ DevOps & Cloud Playgrounds

In-Depth Learning for Advanced Kubernetes Practitioners

Our curriculum is focused on advanced aspects of Kubernetes, including detailed configuration, security optimization, and resource management. This comprehensive approach ensures you're well-prepared to handle sophisticated Kubernetes tasks.

Tailored Content for Advanced Learners

We believe in learning by doing. Our courses include a variety of real-world scenarios and hands-on labs, providing you with practical experience in managing Kubernetes. You'll tackle challenges such as service discovery, load balancing, and automated rollouts, enhancing your ability to implement Kubernetes effectively.

Tailored Content for Advanced Learners

Our educational content is specifically designed for those progressing beyond beginner levels. If you’re ready to take your Kubernetes skills to the next level, KodeKloud is here to guide you through this journey with advanced, real-world aligned content.

Enroll in our advanced Kubernetes courses today and join a community of professionals mastering the latest in cloud technology. With KodeKloud, you're not just learning; you're preparing for the next big step in your tech career.
