Container Runtime Interfaces In Kubernetes

Containers provide an efficient way to package and deploy applications. They are lightweight, portable, and can be moved between different environments without any changes to the application code. With Kubernetes, developers can manage and orchestrate containerized applications at scale, keeping them available and running smoothly. Kubernetes does not run the containers itself, though: that work is delegated to a container runtime through the Container Runtime Interface.

This article will discuss what a container runtime interface (CRI) is and why it is important.

What is a Container Runtime?

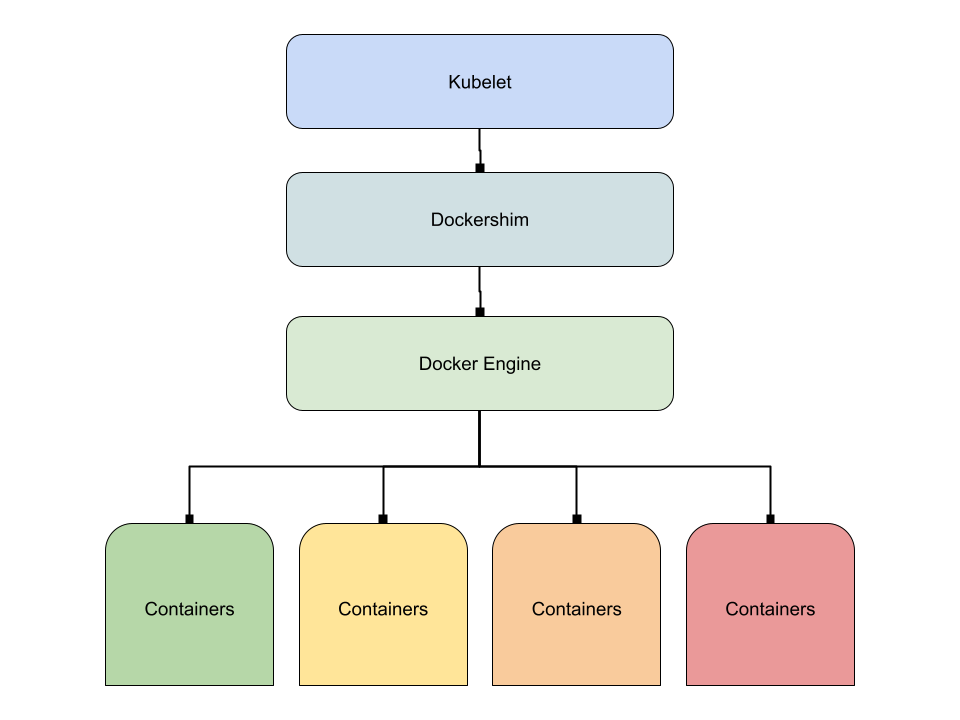

While Kubernetes is a container orchestration tool, it is not the one that creates, starts, or stops your containers whenever you run kubectl commands. Inside Kubernetes, there is another layer - called Dockershim when Docker is the runtime - that passes requests on to the component responsible for managing your containers. That component is called a container runtime.

The diagram above illustrates how kubectl commands are passed to the Dockershim component to manage containers. Dockershim is a special integration component that enables Kubernetes to communicate with Docker.

Read more about the role of Dockershim and Docker runtime in Kubernetes in this blog post: Docker vs. Containerd: A Quick Comparison.

The Open Container Initiative (OCI)

In 2015, the Open Container Initiative (OCI) was founded and tasked with creating an industry standard for containers and runtimes. This set the standard for the thousands of images in image repositories such as Docker Hub, Amazon Elastic Container Registry (ECR), and Google Container Registry. The same standard also paved the way for Kubernetes to support container runtimes other than Docker Engine.

What is the Container Runtime Interface (CRI)?

Kubernetes aims to give users more options by supporting container runtimes with different functionality and features. To that end, the Container Runtime Interface (CRI) was created: a plugin API that the kubelet uses to talk to a container runtime. It builds on the standards set by the Open Container Initiative (OCI), and the idea is that as long as a container runtime implements the CRI, it will be compatible with Kubernetes.

Here’s a list of container runtimes that meet the CRI standard today:

- containerd - Backed by the CNCF, it was the 5th project to graduate. Its GitHub repository has more than 400 contributors, and it is written primarily in Go. It is widely regarded as the most mature container runtime available to Kubernetes. It touts low CPU and memory usage and is presented as a very stable runtime (if your container crashes, it does not corrupt or mess with your data).

- CRI-O - The CRI refers to the CRI standard, and the O refers to the Open Container Initiative (OCI). Unlike the other container runtimes mentioned here, it is custom-built for Kubernetes. Its GitHub repository has over 200 contributors, and it is written in Go. CRI-O emphasizes security and low resource overhead for the containers it runs.

- Kata Containers - Started as an open-source project from the OpenStack Foundation, it aims to closely match the isolation and security benefits of a virtual machine without sacrificing much performance. For Kubernetes, Kata Containers also utilizes CRI-O for the Container Runtime Interface.

- Mirantis Container Runtime - Formerly known as Docker Enterprise Edition, it is a commercial container runtime solution from Mirantis and Docker. It uses cri-dockerd, a standalone shim adapter that allows communication with Docker from Kubernetes. Its GitHub repository has 150+ contributors, and it is also written in Go.

A note on Docker Engine

Docker Engine, on its own, is not compliant with the CRI standard. Kubernetes came up with Dockershim - as a temporary solution - to enable communication between Docker and Kubernetes. Kubernetes later deprecated Dockershim in v1.20 and removed it in v1.24, with CRI-compliant runtimes such as containerd taking its place.

Learn more about the impact and reason for Kubernetes dropping Docker from our blog: Kubernetes Dropping Docker: What Happens Now?

Container runtime hands-on

Now that we understand container runtimes, let's go ahead and do some hands-on practice. For this, we'll use a tool called minikube. It is a single-node, local Kubernetes sandbox used for testing, learning, and practicing commands. You can follow the installation guide here if you don't have it yet.

We'll start our minikube instance and use the default container runtime, Docker Engine. You can also use other container runtimes, such as containerd and CRI-O, as specified here. Note that choosing these runtimes may require additional setup.
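As a rough sketch of how a different runtime could be selected, minikube accepts a `--container-runtime` flag on `minikube start`. The wrapper function `start_minikube` and the `DRY_RUN` variable below are hypothetical names introduced only for illustration; they are not part of minikube.

```shell
# Hypothetical helper, not part of minikube.
# Prints the start command when DRY_RUN=1, otherwise runs it.
start_minikube() {
  runtime="${1:-docker}"
  # Only accept the runtimes discussed in this article.
  case "$runtime" in
    docker|containerd|cri-o) ;;
    *) echo "unsupported runtime: $runtime" >&2; return 1 ;;
  esac
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "minikube start --container-runtime=$runtime"
  else
    minikube start "--container-runtime=$runtime"
  fi
}
```

Calling `DRY_RUN=1 start_minikube containerd` only prints the command, which is a safe way to check what would run. The rest of this walkthrough assumes the default Docker Engine runtime.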

Let’s start our minikube with the following command.

minikube start

Now that our minikube has started, let's first confirm which container runtime is being used in our instance with the following command:

kubectl get node -o=jsonpath="{.items[0].status.nodeInfo.containerRuntimeVersion}"

Output:

docker://20.10.8

We see that it's using Docker Engine as the container runtime. Let's now proceed to create an nginx pod in the cluster.

kubectl run nginx --image=nginx

Run the command kubectl get pod to verify that the pod has been created:

NAME    READY   STATUS    RESTARTS   AGE
nginx   1/1     Running   0          30s

The pod is now in a running state. Let us get the ID of the container that is running inside the nginx pod.

kubectl get pod -l run=nginx -o=jsonpath="{.items[0].status.containerStatuses[0].containerID}"

Output:

docker://126a4911f5fbb4889416c8f6e9ce942cddfc39f0556aa7d1e25a42d845206ee2

Remember that Kubernetes is not responsible for creating or removing your containers. It simply sends an instruction to the container runtime, which then performs the creation of the container on behalf of Kubernetes. Here, we see that the pod created with the kubectl command is now associated with a Docker container.
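The containerID value has the form `<runtime>://<id>`, so it can be split with plain shell parameter expansion. The following is a minimal sketch; the function names `runtime_of`, `id_of`, and `short_id` are made up for this example, and the 12-character short form is the abbreviated ID that `docker ps` displays by default.

```shell
# Hypothetical helpers for a "<runtime>://<id>" container ID string.

# Everything before "://" (for example "docker").
runtime_of() { printf '%s\n' "${1%%://*}"; }

# Everything after "://" (the full container ID).
id_of() { printf '%s\n' "${1#*://}"; }

# First 12 characters of the container ID.
short_id() { printf '%.12s\n' "$(id_of "$1")"; }

cid="docker://126a4911f5fbb4889416c8f6e9ce942cddfc39f0556aa7d1e25a42d845206ee2"
runtime_of "$cid"   # prints: docker
short_id "$cid"     # prints: 126a4911f5fb
```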

Since we’re using Docker as the container runtime, any containers created in the Kubernetes cluster can also be managed directly using Docker commands.

If we list the containers currently running in our Docker instance, we see that one of the containers matches the container id (first 12 characters) of our nginx pod.

docker exec minikube docker ps

Output:

CONTAINER ID   IMAGE   COMMAND                    CREATED          STATUS          PORTS   NAMES
126a4911f5fb   nginx   "/docker-entrypoint...."   29 minutes ago   Up 29 minutes           k8s_nginx_nginx_default_7b578365-b9bc-4876-bc1d-2a7bd5bbe20d_0

Now, let's try to remove the container using docker commands and see how Kubernetes will react to the change.

docker exec minikube docker rm -f 126a4911f5fb

Then let's list the pods in Kubernetes.

kubectl get pods

Output:

NAME    READY   STATUS              RESTARTS   AGE
nginx   1/1     ContainerCreating   1          33m

Notice how the restart count of our nginx pod changed from 0 to 1. This indicates that when we removed the container directly using docker commands, Kubernetes reacted automatically and sent an instruction again to the container runtime to create another container for the pod.

Let’s go and inspect the new container information running in this pod with the command:

kubectl get pod -l run=nginx -o=jsonpath="{.items[0].status.containerStatuses[0].containerID}"

Output:

docker://b40e4dc9b0a5652be1e4be2765461117eafdbf307d08795abf1bf1f346194c98

Notice how we get a different container ID. This means a different container is now in place (replacing the old one that we removed) for our nginx pod in Kubernetes. It is the responsibility of Kubernetes to keep the pods operational by sending instructions to the container runtime. The instruction can be to create a new container, or it can be to remove one.
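The manual check above could also be scripted. The sketch below polls the same kubectl query until the reported container ID differs from the one that was removed. The function names `get_container_id` and `wait_for_new_container` and the one-second polling interval are assumptions chosen for illustration, not anything Kubernetes provides.

```shell
# Hypothetical helper: reads the container ID of the nginx pod,
# using the same kubectl query as in the walkthrough.
get_container_id() {
  kubectl get pod -l run=nginx \
    -o=jsonpath="{.items[0].status.containerStatuses[0].containerID}"
}

# Hypothetical helper: wait_for_new_container OLD_ID [TRIES]
# Polls once per second until the pod reports a container ID that
# differs from OLD_ID, prints the new ID, and returns 0.
# Returns 1 if nothing changed after TRIES attempts (default 30).
wait_for_new_container() {
  old_id="$1"
  tries="${2:-30}"
  while [ "$tries" -gt 0 ]; do
    new_id="$(get_container_id)"
    if [ -n "$new_id" ] && [ "$new_id" != "$old_id" ]; then
      printf '%s\n' "$new_id"
      return 0
    fi
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}
```

One way to use it is to save the ID before removing the container, for example `old=$(get_container_id)`, and then call `wait_for_new_container "$old"` after the `docker rm -f` step.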

Container standards that make your life easier

Thanks to the Container Runtime Interface (CRI) specification, the behavior should be similar no matter which container runtime you choose. What will set them apart, however, are the CLI commands that you use to interact with the containers and the different sets of features they come with.
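To make the CLI difference concrete, here is a small sketch that maps the runtime prefix of a container ID to a command for listing containers on the node. The function name `list_cmd_for` is hypothetical. The mapping assumes that `crictl`, the generic CRI command-line client, is installed on nodes running containerd or CRI-O, which may not hold for every setup.

```shell
# Hypothetical helper: list_cmd_for CONTAINER_ID
# Prints a command that lists containers for the runtime named in the
# "<runtime>://<id>" prefix of a Kubernetes container ID.
list_cmd_for() {
  case "${1%%://*}" in
    docker) echo "docker ps" ;;
    # Assumption: crictl is available on the node for CRI runtimes.
    containerd|cri-o) echo "crictl ps" ;;
    *) echo "unknown runtime: ${1%%://*}" >&2; return 1 ;;
  esac
}

list_cmd_for "docker://126a4911f5fb"   # prints: docker ps
```

The printed command still has to be run on the node itself, in the same way the walkthrough used docker exec minikube to reach Docker inside the minikube node.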

So, depending on what you're focusing on, whether that is ease of use, performance, security, or all of them, you'll have quite a number of options to choose from. And the best part is that since most of them are compliant with the Open Container Initiative (OCI) standards, choosing one does not lock you in.

It is possible to switch between the different container runtimes. So, if you decide to try out another container runtime one day, you can do that without overhauling your Kubernetes cluster or rebuilding your container images.


Conclusion

Container runtimes are one of the most integral parts of Kubernetes. We may see more container runtimes added to the list, developed with better resource management and security features. If you have an older Kubernetes cluster and are planning to upgrade to the latest version, first consider the impact of Dockershim's removal before proceeding.
