In recent years, WebAssembly (Wasm) has gained wide popularity as a powerful binary format for executing code in the browser. The first section of this article recaps that rise and revisits how Wasm compares with Docker.

However, Wasm's potential extends well beyond the web. In this article, we explore Wasm's core features, how it fits into the cloud-native landscape, and how it works with Kubernetes.

Wasm and Its Core Features: A Recap

This section summarizes one of KodeKloud's top-rated articles on Wasm vs. Docker. Give it a read for a clear understanding of both Wasm and how it compares with Docker; it is written in simple, easy-to-follow language.

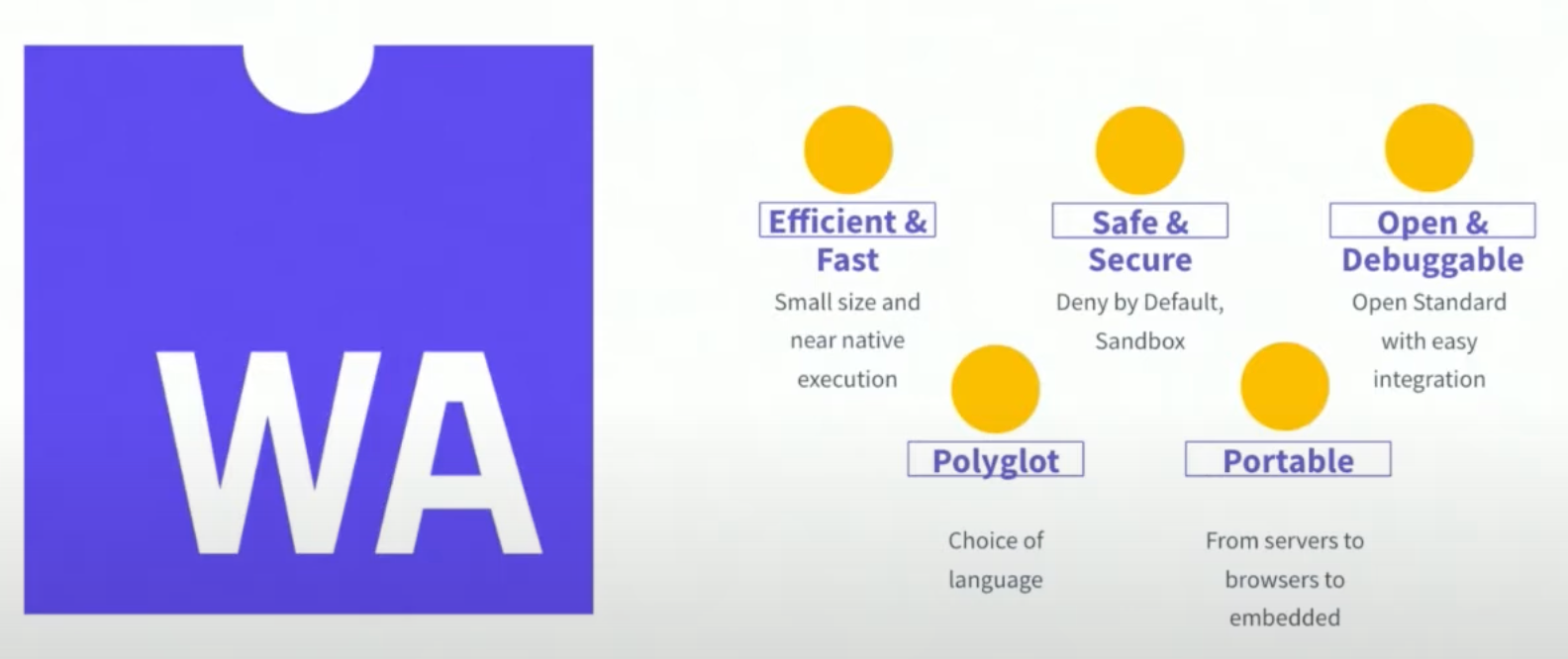

WebAssembly, or Wasm, is a low-level bytecode format designed to be fast, efficient, and secure. Originally created to enable high-performance web applications, Wasm allows developers to compile code written in languages like C++, Rust, and Go into a compact binary format that can be executed in a virtual machine. Its key features include:

- Portability: A Wasm module compiled from Rust code, for example, can run unmodified on Linux, Windows, or macOS wherever a Wasm runtime is available, providing a consistent experience across platforms.

- Security: Wasm provides a sandboxed environment, ensuring code execution is isolated and safe. This prevents malicious code from accessing or modifying sensitive resources on the host system, making it a secure execution environment for cloud-native applications.

- Efficiency: Wasm's compact binary format is designed for fast loading and execution. For example, a Wasm module can often be loaded and executed significantly faster than equivalent JavaScript code, improving overall application performance.
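To make the portability point concrete, here is a minimal sketch. It assumes a Rust toolchain with the WASI target installed (`rustup target add wasm32-wasip1`; older toolchains call it `wasm32-wasi`), and the word-count program and its `word_frequencies` helper are hypothetical. Because the code uses only the standard library, the same source builds either as a native executable or as a `.wasm` module.

```rust
use std::collections::BTreeMap;
use std::io::{self, Read};

/// Hypothetical helper: counts how often each whitespace-separated word
/// appears (case-insensitive). It makes no OS-specific calls, so the same
/// source compiles for a native target or for wasm32-wasip1 unchanged.
fn word_frequencies(text: &str) -> Vec<(String, usize)> {
    let mut counts: BTreeMap<String, usize> = BTreeMap::new();
    for word in text.split_whitespace() {
        *counts.entry(word.to_lowercase()).or_insert(0) += 1;
    }
    // BTreeMap iterates in sorted key order, so the output is deterministic.
    counts.into_iter().collect()
}

fn main() {
    // Standard input is provided by the host OS natively, or by the Wasm runtime.
    let mut input = String::new();
    io::stdin()
        .read_to_string(&mut input)
        .expect("failed to read stdin");
    for (word, count) in word_frequencies(&input) {
        println!("{}\t{}", word, count);
    }
}
```

After `cargo build --release --target wasm32-wasip1`, the module could be run with, for example, `echo "to be or not to be" | wasmtime target/wasm32-wasip1/release/wordfreq.wasm`, where `wordfreq` is a placeholder crate name. The same `.wasm` file then runs unchanged on any host with a WASI-capable runtime.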

Wasm vs. Docker: A Recap

Docker revolutionized containerization and application deployment, but it primarily relies on operating system-level virtualization. Wasm, in contrast, brings a new level of abstraction that lets developers package and distribute applications without traditional containerization. Wasm modules can run on many platforms, which makes them more flexible and lightweight than Docker containers.

For example, in a Docker-based setup, a web application might be packaged into a container image together with the operating system libraries and runtime dependencies it needs. That image must be built separately for each target architecture or operating system, leaving multiple images to maintain. A Wasm module, in contrast, can be compiled once and executed unmodified on any platform with a Wasm runtime. This removes the need to maintain multiple container images and simplifies deployment.

KodeKloud also has a top-rated YouTube video that covers the Wasm vs. Docker comparison, from the basics to advanced concepts. It explains the topic in simple, easy-to-understand terms, so we recommend watching it before continuing with this blog.

Let's jump right into today's topic and explore it in depth.

Why Does WebAssembly's Relevance Extend to the Cloud Beyond Containers?

Like the ever-evolving web, the cloud is constantly pushing toward greater speed, security, portability, and efficiency. Wasm is not limited to web browsers: adopting it in cloud-native environments offers several advantages that make it a strong match for the next wave of cloud computing.

Polyglot: Empowering Flexibility and Efficiency

In a cloud-native application, different components can be written in different languages.

For example, a cloud-native application might utilize a Wasm module written in Rust for computationally intensive tasks while using JavaScript for the front-end code.

This flexibility lets developers use their preferred languages and libraries in a cloud-native environment, making it efficient to develop and integrate different components.
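As a sketch of the Rust-plus-JavaScript split described above, the hypothetical `count_primes` function below does the compute-heavy work. It assumes the crate is built as a `cdylib` (`crate-type = ["cdylib"]` under `[lib]` in `Cargo.toml`) for the `wasm32-unknown-unknown` target, which exposes `count_primes` as a Wasm export. The `main` function is only a native smoke test; in the `cdylib` build it is an ordinary unused function, not an entry point.

```rust
/// Hypothetical compute-heavy export: counts the primes strictly below
/// `limit` using a sieve of Eratosthenes. `#[no_mangle]` and `extern "C"`
/// keep the symbol name stable so a host such as JavaScript can call it.
#[no_mangle]
pub extern "C" fn count_primes(limit: u32) -> u32 {
    let n = limit as usize;
    if n < 3 {
        // There are no primes below 0, 1, or 2.
        return 0;
    }
    let mut is_prime = vec![true; n];
    is_prime[0] = false;
    is_prime[1] = false;
    let mut i = 2;
    while i * i < n {
        if is_prime[i] {
            // Cross out every multiple of i, starting at i * i.
            let mut j = i * i;
            while j < n {
                is_prime[j] = false;
                j += i;
            }
        }
        i += 1;
    }
    is_prime.iter().filter(|&&p| p).count() as u32
}

fn main() {
    // Native smoke test only; the Wasm build is driven by its host instead.
    println!("primes below 1,000,000: {}", count_primes(1_000_000));
}
```

In the browser, the front-end code could then load the module with `WebAssembly.instantiateStreaming(fetch("primes.wasm"))` (the file name is a placeholder) and call `instance.exports.count_primes(1000000)`. Passing only integers keeps the language boundary simple; strings or arrays would have to go through linear memory or a binding generator such as wasm-bindgen.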

Efficient Resource Utilization: Maximizing Performance and Cost Savings

Wasm modules are lightweight and have minimal resource requirements. For example, a Wasm module compiled from C++ code can have a significantly smaller memory footprint than a traditional virtual machine or container running the same functionality. This efficiency allows a higher density of application instances on a given infrastructure, which reduces costs and improves scalability.

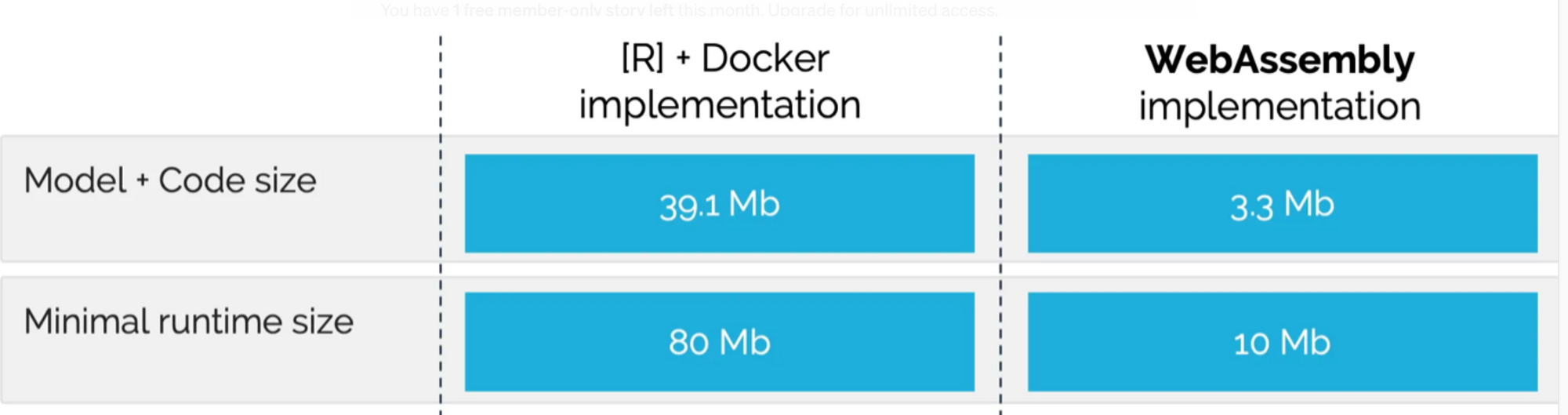

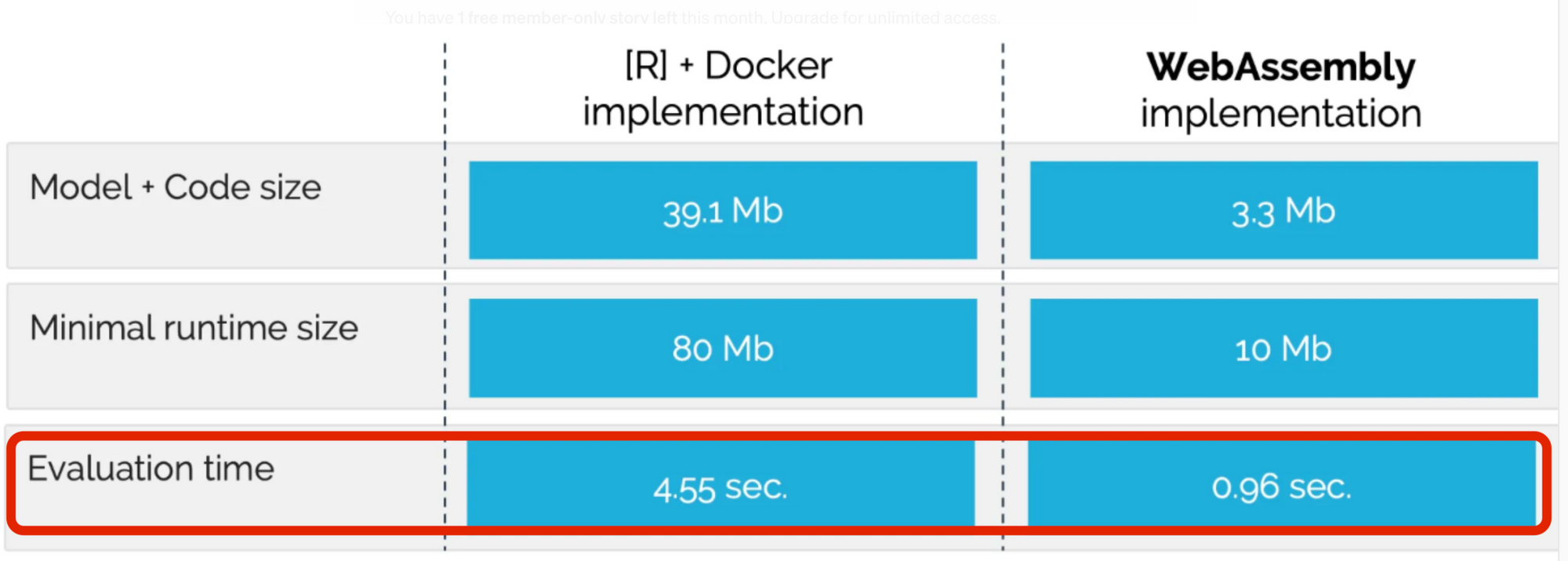

Maurits Kaptein, a researcher at the University of Tilburg, studied the size of WebAssembly and Docker deployments in the context of machine learning. In a series of experiments involving inference operations, he found that WebAssembly has a significantly smaller footprint than Docker: roughly 10 times smaller.

Fast Startup: Accelerating Performance and Responsiveness

Wasm's binary format enables rapid startup. A cloud-native Wasm application can start almost instantly, so it can scale quickly to meet varying demand. This is especially beneficial for applications with unpredictable or bursty traffic, keeping them responsive and the user experience smooth.

Kaptein's study also provides evidence that WebAssembly outperforms Docker: it reports a speed advantage of roughly 5 times in favor of WebAssembly.

Findings on pages 3 and 4 of this short report point to a large gap in startup speed between WebAssembly (Wasm) applications and containers: according to the data, Wasm apps start between 10 and 500 times faster than their container counterparts. The report also suggests that Wasm apps can execute up to 10 times faster.

Beyond this report, Wasm's fast cold starts are widely seen as a breakthrough that makes true scale-to-zero architectures possible. Wasm apps start so quickly that there is no longer any need to keep a fleet of pre-warmed containers ready to handle incoming requests.

Isolation and Security: Strengthening Cloud-Native Application Security

Wasm's sandboxed execution environment provides strong isolation and enhanced security for cloud-native applications. Each Wasm module runs in its own isolated environment, so modules cannot interfere with one another. This isolation adds a layer of security, making it harder for attackers to exploit vulnerabilities in the host system or in other modules.

Containers start from a permissive, allow-by-default model that gives them extensive access to the host kernel, so securing them effectively takes significant hardening effort. WebAssembly apps, in contrast, run in a deny-by-default sandbox that resides outside the kernel. In this environment, every capability must be explicitly granted, which keeps the system robust and controlled.
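The deny-by-default model shows up even in ordinary code. In this minimal sketch, which assumes the program is compiled to `wasm32-wasip1` and run under wasmtime, `list_dir` is a hypothetical helper that lists a directory with Rust's standard library. Run natively, it uses the process's ambient filesystem access; run as a Wasm module, the same call fails unless the host explicitly grants the directory.

```rust
use std::fs;
use std::io;

/// Hypothetical helper: returns the sorted entry names of `dir`.
/// Natively this relies on the process's ambient filesystem access.
/// Under a WASI runtime it only succeeds if the host granted `dir`.
fn list_dir(dir: &str) -> io::Result<Vec<String>> {
    let mut names = Vec::new();
    for entry in fs::read_dir(dir)? {
        names.push(entry?.file_name().to_string_lossy().into_owned());
    }
    names.sort();
    Ok(names)
}

fn main() {
    // The same binary behaves differently depending on what the host grants.
    match list_dir(".") {
        Ok(names) => println!("granted: {} entries", names.len()),
        Err(err) => eprintln!("denied: {}", err),
    }
}
```

With wasmtime, for example, `wasmtime run app.wasm` should print the `denied` message, while `wasmtime run --dir=. app.wasm` preopens the current directory and lets the call succeed. Here `app.wasm` is a placeholder name, and the exact error text varies by runtime and version.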

Empowering Cross-Platform Compatibility: The Portability Advantage

In today's technology landscape, compatibility issues arise when deploying applications across different operating systems (OS) and central processing unit (CPU) architectures. For instance, a container designed for Linux/amd64 won't function on Linux/arm64, let alone on Windows/amd64 or Windows/arm64.

This dilemma leads to image sprawl: organizations must build and manage a separate application image for each OS and CPU architecture in their environments, which makes ensuring compatibility and maintaining all those images a burden.

WebAssembly offers a promising solution to this challenge: a single, unified Wasm module that can run on any system. This works because Wasm uses its own bytecode format, which requires a runtime to execute it. When you build your application for wasm32/wasi, it becomes compatible with any host that has a WebAssembly runtime.

To illustrate, suppose you develop a WebAssembly application on your laptop. You can then use the wasmtime runtime to run it on any combination of Linux, macOS, and Windows, on either AMD64 or ARM64 architectures.

Moreover, there are other Wasm runtimes available that can execute your application on even more specialized architectures, such as those found on Internet of Things (IoT) devices and edge devices.
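One way to see that a wasm32/wasi build targets a virtual platform rather than the physical host is to print compile-time target information. This minimal sketch assumes the same Rust and wasmtime setup as the earlier sections; `build_target` is a hypothetical helper that reads `std::env::consts::ARCH` and the `target_os = "wasi"` configuration flag.

```rust
use std::env::consts::ARCH;

/// Hypothetical helper: describes the target this binary was compiled for.
/// Both values are fixed at compile time; neither is detected from the
/// machine that actually runs the code.
fn build_target() -> String {
    let wasi = cfg!(target_os = "wasi");
    format!("arch={} wasi={}", ARCH, wasi)
}

fn main() {
    println!("compiled for: {}", build_target());
}
```

A native build prints the host architecture (for example `arch=x86_64 wasi=false` or `arch=aarch64 wasi=false`) and has to be rebuilt for each platform. The single `.wasm` build prints `arch=wasm32 wasi=true` wherever wasmtime runs it, whether the host is Linux, macOS, or Windows on AMD64 or ARM64.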

Cloud-Native Wasm, Cloud-Side WebAssembly, Server-Side WebAssembly, or WebAssembly on the Server

What does all of the above actually mean?

All of these terms refer to using WebAssembly (Wasm) in cloud environments to develop and deploy cloud applications and to serve various cloud-based use cases.

How Wasm Fits into the Cloud-Native Landscape

Wasm complements cloud-native principles by providing a new deployment option for cloud-native applications. By leveraging Wasm, developers can encapsulate individual functionalities or microservices into standalone modules, enabling easy composition, reuse, and scaling. Wasm modules can be dynamically loaded and executed in cloud-native environments, enhancing flexibility and enabling efficient resource utilization.

For example, in a cloud-native architecture, a Wasm module can be deployed as a sidecar alongside a microservice. The Wasm module can handle specific tasks, such as image processing, encryption, or machine learning, while the main microservice focuses on business logic. This modular approach allows for easier scaling, versioning, and replacement of specific functionalities without impacting the entire application.

Fortunately, thanks to the efforts of startups and CNCF projects, we now have WebAssembly (Wasm) runtimes that supply the missing piece of the puzzle: they execute apps that have been compiled into Wasm modules. As a result, we no longer need to compile our apps for specific platforms such as Linux/arm64, Linux/amd64, or Windows/amd64. Instead, we compile them into the platform-independent WebAssembly bytecode format (wasm32/wasi).

To run our Wasm modules, we rely on Wasm runtimes such as wasmtime and WasmEdge. wasmtime, developed by the Bytecode Alliance, is tailored for running on servers and in the cloud, while WasmEdge, a CNCF project, places greater emphasis on edge devices. These runtimes act as intermediaries, translating Wasm bytecode into native machine code for the underlying cloud server.

Unleashing the Potential: WebAssembly Meets Kubernetes

In the world of cloud-native technologies, one name stands out: Kubernetes. This powerful platform has revolutionized the way we manage and deploy applications in the cloud.

Now, let's look at a development that has been gaining momentum. Industry players, including Microsoft, WasmEdge, and Docker, have collaborated on runwasi, a containerd shim. The shim integrates containerd with Wasm runtimes, giving containerd full control over the lifecycle of Wasm applications.

Why is this significant?

Because containerd is the go-to runtime for Kubernetes deployments, runwasi opens up a whole new set of possibilities: you can schedule and manage Wasm applications in your Kubernetes clusters much as you do containers. It's a game-changer.

Azure Kubernetes Service (AKS) already shows this progress: you can create Wasm node pools powered by the runwasi containerd shim. By defining a RuntimeClass that targets these node pools, you let Kubernetes schedule Wasm workloads onto them efficiently.

Alternative approaches such as Krustlet have been explored, but the runwasi shim approach shows the most promising path forward.

The future is bright: we can expect running Wasm applications on Kubernetes to become an everyday reality soon.

Get ready to unlock the full potential of WebAssembly within your Kubernetes ecosystem. Exciting times lie ahead as we witness the convergence of these two powerful technologies.


Conclusion

The rise of Cloud-Native Wasm opens up a world of exciting possibilities, extending the power of WebAssembly beyond the confines of the web browser. With Cloud-Native Wasm, developers can encapsulate functionalities within Wasm modules, enabling the creation of flexible, scalable, and secure cloud-native applications. It's a paradigm shift that promises to reshape the way we build and deploy applications in the cloud.

Coming Up...

In the next article in this series, we will move on to practical explanations and demonstrations, guiding you through building and deploying Cloud-Native Wasm applications. We will explore popular frameworks and tools that integrate Wasm into your cloud-native workflow, covering everything from setting up your development environment to implementing advanced features.

