Kubernetes Update 1.27: Chill Vibes Edition - Exploring the Latest Enhancements

Today, we'll look at the most recent developments in Kubernetes, focusing on version 1.27, the latest release. This update is packed with new features, API changes, documentation improvements, cleanups, and deprecations that can help you streamline your container orchestration workflow.

Kubernetes versions follow semantic versioning: each release is numbered x.y.z, where x is the major version, y is the minor version, and z is the patch version.
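
As a quick illustration of the x.y.z scheme, here is a minimal Python sketch that splits a release string such as v1.27.1 into its three parts. The `KubeVersion` type and `parse_version` helper are hypothetical names made up for this example; the sketch assumes a plain x.y.z string with an optional leading "v" and does not handle pre-release suffixes such as -rc.0.

```python
import re
from typing import NamedTuple


class KubeVersion(NamedTuple):
    """A release number split into its semantic-versioning parts (hypothetical helper type)."""
    major: int  # x
    minor: int  # y
    patch: int  # z


# Optional leading "v", then exactly three dot-separated integers; no pre-release suffix.
_VERSION_RE = re.compile(r"v?(\d+)\.(\d+)\.(\d+)")


def parse_version(text: str) -> KubeVersion:
    """Parse "x.y.z" or "vx.y.z" into a KubeVersion, raising ValueError otherwise."""
    match = _VERSION_RE.fullmatch(text.strip())
    if match is None:
        raise ValueError(f"not an x.y.z version: {text!r}")
    return KubeVersion(*(int(part) for part in match.groups()))


if __name__ == "__main__":
    version = parse_version("v1.27.1")
    print(version.major, version.minor, version.patch)  # 1 27 1
    # NamedTuples compare field by field, so 1.27.0 sorts after 1.26.4.
    print(parse_version("1.27.0") > parse_version("1.26.4"))  # True
```

Because the fields are integers compared in order, 1.10.0 correctly sorts after 1.9.0, which a plain string comparison would get wrong.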

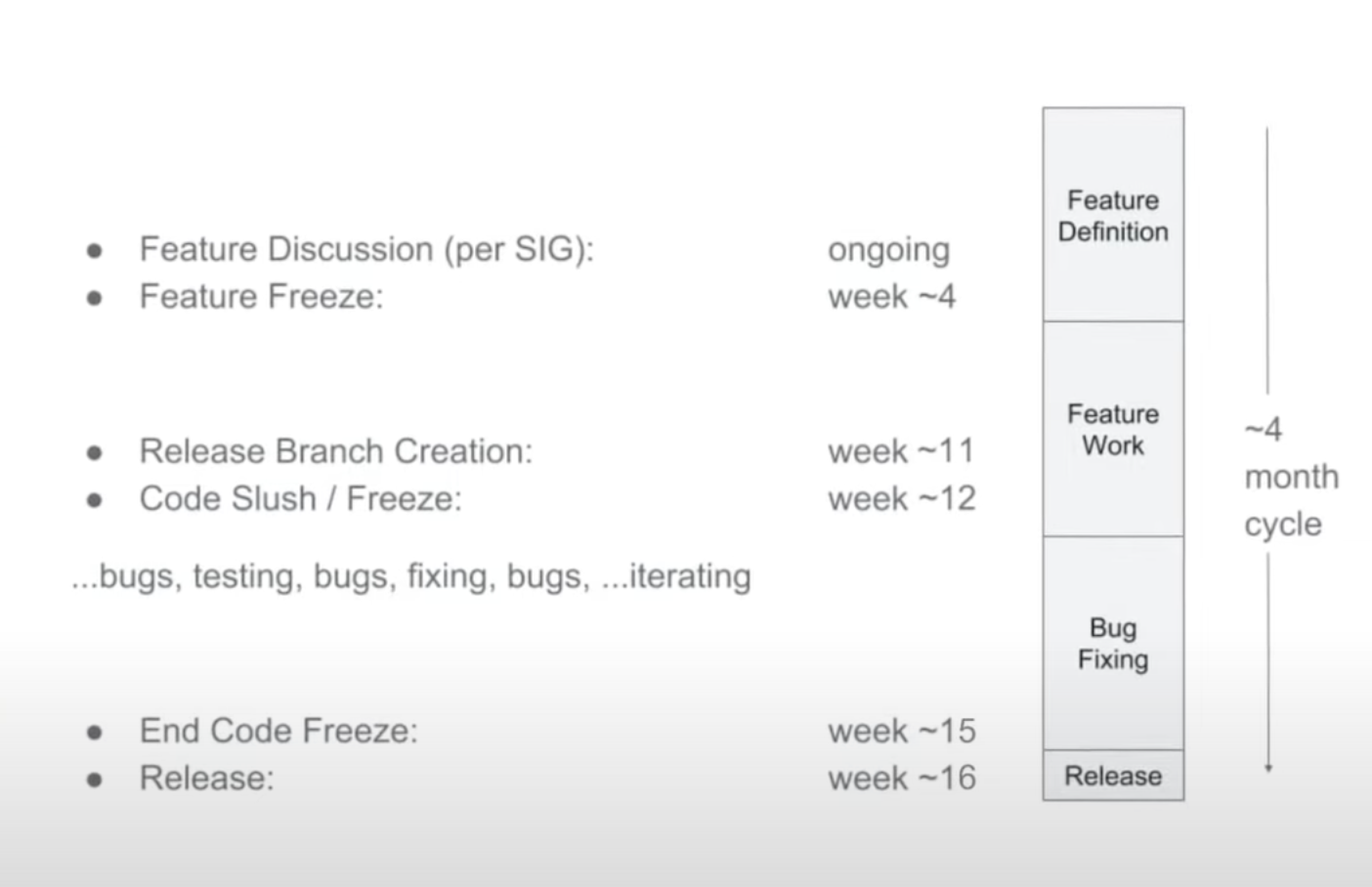

Kubernetes releases happen approximately three times a year, so each release cycle lasts about four months. We have an upcoming video where we deep dive into Kubernetes release cycles, so be sure to subscribe to our channel to be notified when it's out.

Kubernetes has a tradition of selecting a theme for each release, reflecting significant shifts in the project and the community's efforts behind it.

The theme for version 1.25, released in August 2022, was "Combiner". It reflected the many individual components that, when combined, form the project you see today.

The theme for version 1.26, released in December 2022, was "Electrifying". It recognized the importance of the diverse computing resources used to develop and deploy Kubernetes, while raising awareness of energy consumption and environmental sustainability. The release was dedicated to the coordinated efforts of the volunteers who made it possible and emphasized the importance of individual contributions to the release process.

The theme for the latest release, 1.27, is "Chill Vibes", which reflects how calm this release was, thanks to the Kubernetes community's effective management of the release.

This is the first release of 2023, and it includes 60 enhancements. Kubernetes uses a multi-stage feature release process in which each enhancement typically progresses from alpha to beta to stable (also called GA, or general availability). If you're interested in learning how an enhancement request moves through Kubernetes, check out our video on Kubernetes Enhancement Proposals (KEPs).

Notably, the 1.27 release saw 9 enhancements promoted to the Stable stage, marking them as production-ready.

What’s New - A 20,000-Foot View

Let's take a high-level look at the changes in the Kubernetes 1.27 release. Changes in each release are categorized as features, API changes, documentation, deprecations, and bugs or regressions, and the 1.27 release notes list a long set of them. The highlights include:

- The k8s.gcr.io image registry has been frozen.

- SeccompDefault has graduated to stable.

- Node log access is now possible via the Kubernetes API.

- Mutable scheduling directives for Jobs have graduated to GA, while Mutable Pod Scheduling Directives have gone to beta.

- DownwardAPIHugePages has graduated to stable.

- Pod Scheduling Readiness has gone to beta.

- ReadWriteOncePod PersistentVolume access mode has also gone to beta.

- Faster SELinux volume relabeling using mounts has gone to beta.

- Robust VolumeManager reconstruction has gone to beta.

You're also invited to watch our popular YouTube video on this topic. The link provided will take you to our most-viewed video on the Kubernetes 1.27 release.

Let's start with the first item on the list.

The K8s.gcr.io Image Registry Has Been Frozen

As you may be aware, Kubernetes has relied on k8s.gcr.io, a custom domain on Google Container Registry (GCR), to host its container images, including the official Kubernetes images as well as various Kubernetes add-ons and tools.

While this arrangement served the project well, other cloud providers and vendors now want to offer hosting for Kubernetes images. Google has generously committed to donating $3 million to support the project's infrastructure, and Amazon announced a matching donation during its KubeCon NA 2022 keynote in Detroit.

Adding hosting providers brings several benefits, including faster downloads for users (since the servers are closer to them) and lower egress bandwidth and costs for GCR. To enable this, images are now served from registry.k8s.io, which distributes the load between Google and Amazon, with more providers to be added in the future.

One significant recent change is that the k8s.gcr.io container image registry was frozen on April 3, 2023, and replaced by the new registry at registry.k8s.io, which is controlled by the Kubernetes community.

Note that "frozen" means no new images will be published to k8s.gcr.io: future Kubernetes releases and sub-project images will be pushed only to registry.k8s.io. Maintainers of Kubernetes sub-projects, and anyone else whose Helm charts or manifest files reference the old registry, should update them to replace "k8s.gcr.io" with "registry.k8s.io".
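
For manifests and Helm chart templates kept as text, the update can be as simple as a host-name substitution. Below is a minimal Python sketch, assuming image references appear literally in the files (for example `image: k8s.gcr.io/pause:3.9`, or a Helm value such as `registry: k8s.gcr.io`). `migrate_image_references` is a hypothetical helper, not an official tool, so review the resulting diff before committing it.

```python
import re

NEW_REGISTRY = "registry.k8s.io"

# Match "k8s.gcr.io" only as a whole host name: it must not be preceded or followed
# by a character that could make it part of a longer host name.
_OLD_HOST_RE = re.compile(r"(?<![\w.-])k8s\.gcr\.io(?![\w.-])")


def migrate_image_references(text: str) -> str:
    """Return text with every standalone k8s.gcr.io host replaced by registry.k8s.io."""
    return _OLD_HOST_RE.sub(NEW_REGISTRY, text)


if __name__ == "__main__":
    manifest = """\
containers:
- name: pause
  image: k8s.gcr.io/pause:3.9
"""
    print(migrate_image_references(manifest))
```

References that are assembled at render time (for example, from several Helm values) still need a manual check.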

For more information on this topic, see:

https://github.com/kubernetes-sigs/community-images

Next up is...

SeccompDefault Graduates to Stable

Container security is a crucial aspect of any Kubernetes deployment. Without proper security measures in place, containers can be vulnerable to attacks that compromise the entire cluster. Seccomp (short for secure computing mode) is a Linux kernel feature that restricts the system calls a process can make.

In Kubernetes, seccomp enhances container security by limiting a container's ability to perform certain privileged operations. Seccomp profiles define rules that specify which system calls a container may make, reducing the container's attack surface by limiting the kernel interfaces available to it.

Kubernetes allows you to use custom seccomp profiles to restrict the system calls that containers can make, or to use a default seccomp profile provided by your container runtime to provide a secure baseline configuration.
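
Both options are expressed through a Pod's `securityContext.seccompProfile` field. The following Python sketch builds a Pod manifest as a plain dictionary that selects either the runtime's RuntimeDefault profile or a custom Localhost profile. The helper name, the Pod name, and the profile path `profiles/audit.json` are hypothetical. A Localhost profile path is resolved relative to the kubelet's seccomp profile directory on the node, so the file must already exist there.

```python
import json
from typing import Any, Dict, Optional


def pod_with_seccomp(name: str, image: str, localhost_profile: Optional[str] = None) -> Dict[str, Any]:
    """Build a Pod manifest whose containers run under a seccomp profile.

    Without localhost_profile, the container runtime's RuntimeDefault profile is used;
    otherwise the named custom profile file on the node is used.
    """
    if localhost_profile is None:
        profile: Dict[str, str] = {"type": "RuntimeDefault"}
    else:
        profile = {"type": "Localhost", "localhostProfile": localhost_profile}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Pod-level setting: applies to every container that does not set its own profile.
            "securityContext": {"seccompProfile": profile},
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests, e.g. `python this_script.py | kubectl apply -f -`.
    print(json.dumps(pod_with_seccomp("seccomp-demo", "registry.k8s.io/pause:3.9"), indent=2))
```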

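However, applying a seccomp profile is not the default: unless a profile is specified, containers run Unconfined. Enabling seccomp by default makes every workload more secure without any per-Pod changes. The SeccompDefault feature, which graduates to stable in this release, provides exactly that: a default seccomp profile used for all containers on a node.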

To use this feature, run the kubelet with the --seccomp-default command-line flag on each node where you want it (or set seccompDefault to true in the kubelet configuration file). When enabled, the kubelet uses the RuntimeDefault seccomp profile, defined by the container runtime, by default. This is an improvement over the previous default of Unconfined (seccomp disabled), which posed a significant security risk.
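
The Python sketch below emits the kubelet-configuration form of this setting as JSON (which YAML parsers also accept) and, separately, models the resulting behavior in a simplified way: an explicitly requested profile always wins, and SeccompDefault only changes what happens when none is set. The helper names are hypothetical, and the model ignores details such as a container-level profile overriding a Pod-level one.

```python
import json
from typing import Any, Dict, Optional


def kubelet_config_with_seccomp_default() -> Dict[str, Any]:
    """KubeletConfiguration equivalent of starting the kubelet with --seccomp-default."""
    return {
        "apiVersion": "kubelet.config.k8s.io/v1beta1",
        "kind": "KubeletConfiguration",
        "seccompDefault": True,
    }


def effective_seccomp_type(requested: Optional[str], seccomp_default: bool) -> str:
    """Simplified model of the seccomp profile type a container ends up with.

    requested is the seccompProfile.type set in the Pod spec, or None if unset.
    """
    if requested is not None:
        return requested  # An explicit profile is always honored.
    # With no explicit profile, SeccompDefault decides between RuntimeDefault and Unconfined.
    return "RuntimeDefault" if seccomp_default else "Unconfined"


if __name__ == "__main__":
    print(json.dumps(kubelet_config_with_seccomp_default(), indent=2))
    print(effective_seccomp_type(None, seccomp_default=False))  # Unconfined
    print(effective_seccomp_type(None, seccomp_default=True))   # RuntimeDefault
```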

It is important to note that the default profiles may vary between container runtimes and their release versions, so it is recommended that you review your container runtime's documentation for specific details on their default seccomp profiles. Overall, this update provides Kubernetes users with a safer and more secure default configuration for their workloads.

Moving on, the next major feature is a new API for accessing logs from nodes.

Node Log Access via Kubernetes API

A node is a control plane or worker machine in a Kubernetes cluster. A "node log" is the log data generated by a particular node, and it can be helpful for debugging issues that arise in services running on that node.

When services running on a node encounter issues, cluster administrators can find it challenging to identify the problem: typically, they need to SSH or RDP into the node to examine the service logs and diagnose the issue. Kubernetes Enhancement Proposal KEP-2258 proposed a feature to view node logs through the API. In the 1.27 release, the node log query feature (alpha) simplifies this process by letting administrators access these logs using kubectl.

This is particularly helpful for Windows nodes, where problems such as CNI misconfigurations and other hard-to-detect issues can prevent containers from starting even though the node is Ready. With this feature, administrators can investigate and solve such problems more easily, improving the overall efficiency and reliability of the cluster.

So, what's the underlying mechanism?

The kubelet already exposes a /var/log/ viewer through the node proxy endpoint. The new feature supplements this endpoint with a shim that uses journalctl on Linux nodes and the Get-WinEvent cmdlet on Windows nodes, reusing those commands' existing filters for log filtering. The kubelet also applies heuristics when retrieving logs: it first checks the native operating system logger, and if that is not available, it looks for logs in these locations:

- /var/log/<servicename>

- /var/log/<servicename>.log

- /var/log/<servicename>/<servicename>.log
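
The file-based fallback can be pictured with the short Python sketch below, which checks the three locations in the order listed and returns the first regular file it finds. This is an illustrative model with hypothetical function names, not the kubelet's actual implementation, and it skips the first step (querying journalctl or Get-WinEvent).

```python
from pathlib import Path
from typing import List, Optional


def candidate_log_paths(service: str, log_dir: str = "/var/log") -> List[Path]:
    """The fallback locations for a service's log file, in the order listed above."""
    base = Path(log_dir)
    return [
        base / service,                     # /var/log/<servicename>
        base / f"{service}.log",            # /var/log/<servicename>.log
        base / service / f"{service}.log",  # /var/log/<servicename>/<servicename>.log
    ]


def find_service_log(service: str, log_dir: str = "/var/log") -> Optional[Path]:
    """Return the first candidate that exists as a regular file, or None if none does."""
    for path in candidate_log_paths(service, log_dir):
        # is_file() skips directories, so a /var/log/<servicename>/ folder is not a match.
        if path.is_file():
            return path
    return None


if __name__ == "__main__":
    print(find_service_log("kubelet"))
```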

So how do we use this feature?

To use the node log query feature, enable the NodeLogQuery feature gate on the relevant node and make sure that both the enableSystemLogHandler and enableSystemLogQuery options are set to true in the kubelet configuration. Once these prerequisites are met, you can retrieve logs from your nodes. For instance, the following command retrieves the kubelet service logs from a node:

# Fetch kubelet logs from a node named node.example
kubectl get --raw "/api/v1/nodes/node.example/proxy/logs/?query=kubelet"
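
The prerequisites described above map to a few kubelet configuration fields. Here is a minimal Python sketch that builds that KubeletConfiguration as a dictionary and checks whether a given configuration has all three settings turned on. The helper names are hypothetical, and since NodeLogQuery is an alpha feature in 1.27, confirm the field names against the kubelet documentation for your version.

```python
import json
from typing import Any, Dict


def node_log_query_kubelet_config() -> Dict[str, Any]:
    """KubeletConfiguration fragment enabling node log query."""
    return {
        "apiVersion": "kubelet.config.k8s.io/v1beta1",
        "kind": "KubeletConfiguration",
        "featureGates": {"NodeLogQuery": True},  # Alpha in v1.27, so off unless enabled.
        "enableSystemLogHandler": True,
        "enableSystemLogQuery": True,
    }


def node_log_query_enabled(config: Dict[str, Any]) -> bool:
    """Simplified check: the feature gate and both options must be explicitly true."""
    return (
        config.get("featureGates", {}).get("NodeLogQuery") is True
        and config.get("enableSystemLogHandler") is True
        and config.get("enableSystemLogQuery") is True
    )


if __name__ == "__main__":
    print(json.dumps(node_log_query_kubelet_config(), indent=2))
```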

Refer to the documentation for all the available options for querying node logs.
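
Because the logs are served through the node proxy path, a query is just a URL passed to `kubectl get --raw`. The hypothetical Python helper below assembles that path, URL-encoding the node name and query parameters. It treats `query` as the required parameter, as in the command above; the `pattern` parameter in the example (a regular-expression filter) is taken from the Kubernetes documentation, which is the place to check for the full, current list.

```python
from urllib.parse import quote, urlencode


def node_log_path(node: str, query: str, **options: str) -> str:
    """Build the API path to pass to `kubectl get --raw` to read logs from a node."""
    params = {"query": query, **options}  # query first; extra options follow in order
    return f"/api/v1/nodes/{quote(node, safe='')}/proxy/logs/?{urlencode(params)}"


if __name__ == "__main__":
    print(node_log_path("node.example", "kubelet"))
    # /api/v1/nodes/node.example/proxy/logs/?query=kubelet
    print(node_log_path("node.example", "kubelet", pattern="error"))
    # /api/v1/nodes/node.example/proxy/logs/?query=kubelet&pattern=error
```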

Here’s the next major update…

Mutable Scheduling Directives for Jobs Graduates to GA

In Kubernetes, a parallel Job runs multiple Pods concurrently to complete a single job. Parallel Jobs suit computationally intensive tasks and batch processing, where the workload can be split into smaller pieces that run in parallel to reduce processing time.

When running parallel jobs in Kubernetes, it's often necessary to have specific constraints for the pods, such as running all pods in the same availability zone, or ensuring they only run on certain types of hardware, like GPU model X or Y, but not a mix of both.

To achieve this, Kubernetes Jobs have a suspend field that lets custom queue controllers decide when a Job should start. A suspended Job stays idle until the custom queue controller unsuspends it, based on various scheduling factors.
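
To make the suspend mechanism concrete, here is a Python sketch that builds a parallel Job manifest created in the suspended state, with a zone constraint in its pod template. The helper name, image, and zone value are hypothetical; `topology.kubernetes.io/zone` is the standard well-known zone label. A queue controller would later start the Job by setting `spec.suspend` to false.

```python
import json
from typing import Any, Dict


def suspended_parallel_job(
    name: str, image: str, parallelism: int, completions: int, node_selector: Dict[str, str]
) -> Dict[str, Any]:
    """Build a batch/v1 Job that is created suspended, so no Pods start until it is unsuspended."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "suspend": True,  # Stays idle until a controller sets this to False.
            "parallelism": parallelism,  # Pods allowed to run at the same time.
            "completions": completions,  # Successful Pods needed to finish the Job.
            "template": {
                "spec": {
                    "nodeSelector": dict(node_selector),
                    "containers": [{"name": "worker", "image": image}],
                    "restartPolicy": "Never",  # Jobs require Never or OnFailure.
                }
            },
        },
    }


if __name__ == "__main__":
    job = suspended_parallel_job(
        "batch-demo", "example.com/batch-worker:1.0", parallelism=4, completions=8,
        node_selector={"topology.kubernetes.io/zone": "zone-a"},
    )
    print(json.dumps(job, indent=2))
```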

However, once a job is unsuspended, the actual placement of pods is handled by the Kubernetes scheduler and the custom queue controller has no influence on where the pods will land. This is where the new feature of mutable scheduling directives for Jobs comes into play.

So, here's what happens: this feature lets you update a Job's scheduling directives before it starts. This means custom queue controllers can influence Pod placement without having to handle the actual pod-to-node assignment themselves.

But here's the catch: this feature only works for Jobs that have never been unsuspended before and are currently suspended. So, keep that in mind when you're using it.

Specifically, you can update these fields in a Job's pod template: node affinity, node selector, tolerations, labels, annotations, and scheduling gates. This gives you more control over where the Pods are placed, ensuring they land in the best possible location for your constraints.
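
The rule and the list of fields can be modeled locally, as in the Python sketch below. In a real cluster the API server enforces this check and you would send the change as an ordinary update or patch. The helper names are hypothetical, the field names are simplified (node affinity lives under `affinity`), and the sketch assumes that "never unsuspended" can be approximated by the Job's `status.startTime` being unset.

```python
import copy
from typing import Any, Dict, Optional

# Pod-template fields treated as scheduling directives (simplified).
MUTABLE_SPEC_FIELDS = {"affinity", "nodeSelector", "tolerations", "schedulingGates"}
MUTABLE_METADATA_FIELDS = {"labels", "annotations"}


def can_update_scheduling_directives(job: Dict[str, Any]) -> bool:
    """True if the Job is suspended and, by assumption, has never been unsuspended."""
    suspended = job.get("spec", {}).get("suspend") is True
    never_started = job.get("status", {}).get("startTime") is None
    return suspended and never_started


def update_scheduling_directives(
    job: Dict[str, Any],
    spec_changes: Dict[str, Any],
    metadata_changes: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Return a copy of job with the given pod-template fields replaced."""
    metadata_changes = metadata_changes or {}
    if not can_update_scheduling_directives(job):
        raise ValueError("only a suspended Job that has never been unsuspended can be updated")
    disallowed = (set(spec_changes) - MUTABLE_SPEC_FIELDS) | (
        set(metadata_changes) - MUTABLE_METADATA_FIELDS
    )
    if disallowed:
        raise ValueError(f"not a mutable scheduling directive: {sorted(disallowed)}")
    updated = copy.deepcopy(job)  # Leave the caller's object untouched.
    template = updated["spec"]["template"]
    # Each listed field is replaced wholesale, e.g. the whole nodeSelector map.
    template.setdefault("spec", {}).update(spec_changes)
    template.setdefault("metadata", {}).update(metadata_changes)
    return updated


if __name__ == "__main__":
    job = {"spec": {"suspend": True, "template": {"spec": {"nodeSelector": {"gpu-model": "x"}}}}}
    moved = update_scheduling_directives(job, {"nodeSelector": {"gpu-model": "y"}})
    print(moved["spec"]["template"]["spec"]["nodeSelector"])
```

Here `gpu-model` is a made-up node label standing in for "GPU model X or Y" from the text above.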

If you want to know more about this feature, check out the KEP called "Allow updating scheduling directives of jobs." It's got all the juicy details.

The final feature on our list is:

Single Pod Access Mode for PersistentVolumes Graduates to Beta

PersistentVolumes support several access modes: ReadOnlyMany, where the volume can be mounted read-only by many nodes; ReadWriteMany, where the volume can be mounted read-write by many nodes; and ReadWriteOnce, where the volume can be mounted read-write by a single node. Note that ReadWriteOnce still allows multiple Pods to access the volume when those Pods run on the same node.

A new access mode, ReadWriteOncePod, was introduced as alpha in Kubernetes v1.22. It restricts volume access to a single Pod in the cluster, ensuring that only one Pod can write to the volume at a time. This makes it particularly helpful for stateful workloads that require exclusive access to storage.

You can read more about this feature in this link.

This feature has now graduated to Beta.

So, How Do We Use This Feature?

In Kubernetes v1.27 and later, the ReadWriteOncePod beta feature is enabled by default. Note, however, that it is supported only for CSI volumes. To use it, add ReadWriteOncePod to the access modes when creating the PVC.
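
For illustration, here is a Python sketch that builds such a PersistentVolumeClaim as a dictionary. The claim name, size, and the StorageClass name `csi-example` are hypothetical; the StorageClass must be backed by a CSI driver for ReadWriteOncePod to work.

```python
import json
from typing import Any, Dict


def single_pod_claim(name: str, storage_class: str, size: str) -> Dict[str, Any]:
    """Build a PVC that only one Pod in the whole cluster can use at a time."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOncePod"],
            "storageClassName": storage_class,  # Hypothetical CSI-backed StorageClass.
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(single_pod_claim("single-writer", "csi-example", "1Gi"), indent=2))
```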

For further information on the ReadWriteOncePod access mode you can refer to KEP-2485.

Conclusion

From here, you can check out the following resources for a thorough understanding of the Kubernetes 1.27 release.

It is packed with new features, enhancements, and deprecations. To explore the exhaustive list of changes, you can check out the release notes page.

Additionally, 9 enhancements were promoted to the stable stage in this release, which you can read about in detail on the Kubernetes blog.

Some features have also been deprecated or removed; you can find these in the blog post as well.

For an in-depth understanding of the major features, removals, and deprecations, you can watch the video by the Kubernetes v1.27 release team, held on April 14th. With these advancements and updates, Kubernetes continues to be a leading platform for container orchestration.