Kubernetes Pods Stuck in Terminating: A Resolution Guide

In this article, you will discover the common causes of the “Kubernetes Pod Stuck In Termination” problem, the commands to troubleshoot it, and the best practices to fix it and avoid it in the future.

If you are a DevOps engineer who works with Kubernetes, you might have encountered a situation where some of your Pods get stuck in a terminating state and refuse to go away. This can be frustrating, especially if you need to free up resources or deploy new versions of your applications.

In this article, I will explain why this happens, how to diagnose the problem, and how to resolve it in a few simple steps.

Why do Pods get stuck in terminating?

When you delete a Pod, either manually or as part of a Deployment rollout, the API server marks it for deletion by setting a deletionTimestamp, and kubectl shows its status as Terminating. The Pod object still exists at this point, and its containers may still be running on the node.

Terminating is supposed to be a short-lived transitional state. The kubelet runs any preStop hooks, sends a termination signal (SIGTERM by default) to the containers, and waits up to the grace period for them to exit before killing them. Once the containers have stopped and resources such as volumes have been released, the kubelet tells the API server to remove the Pod object.

However, sometimes a Pod will get stuck in the terminating phase, meaning the deletion process is incomplete. Below are some of the reasons why this may happen:

- The Pod has a finalizer that prevents it from being deleted until a certain condition is met. Finalizers are keys listed in the Pod's metadata.finalizers field. Each one tells a controller that it must perform some cleanup before the Pod can go away, and the API server does not remove the Pod until the list is empty. For example, a Pod might have a finalizer that waits for a backup to finish or a volume to unmount. If the responsible controller is missing or failing, the finalizer is never removed and the Pod stays in Terminating.

- The Pod has a preStop hook that takes too long to execute. A preStop hook is a command or a script that runs inside the container before it is terminated. It is used to perform graceful shutdown actions, like closing connections, flushing buffers, or sending notifications. The hook counts against terminationGracePeriodSeconds (which defaults to 30 seconds). With a long grace period and a slow or hung hook, the Pod stays in Terminating until the hook finishes or the grace period expires, at which point the kubelet kills the container. A hook that fails or returns a non-zero exit code does not block deletion by itself: the kubelet records a FailedPreStopHook event and proceeds to stop the container.

- The Pod is part of a StatefulSet that has a PodManagementPolicy of OrderedReady. A StatefulSet is a controller that manages Pods that have a stable identity and order. The PodManagementPolicy determines how the Pods are created and terminated during scaling. If the policy is OrderedReady (the default), Pods are created one by one in ordinal order and terminated one by one in reverse ordinal order. This means that if a Pod is stuck in Terminating, the controller does not create or terminate the next Pod in the sequence until the stuck one is resolved.
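The three causes above can each be spotted in the Pod object itself. The following is a minimal sketch, assuming the Pod has been exported as JSON (for example with `kubectl get pod <name> -o json`) and parsed into a dictionary. The function name `diagnose_terminating_pod`, the hint wording, and the sample object with its timestamp are all illustrative assumptions, not part of any Kubernetes tooling.

```python
import json


def diagnose_terminating_pod(pod: dict) -> list[str]:
    """Return human-readable hints about why a Pod may be stuck in Terminating."""
    meta = pod.get("metadata", {})
    spec = pod.get("spec", {})
    if "deletionTimestamp" not in meta:
        return ["Pod is not being deleted (no deletionTimestamp)."]

    hints = []

    # Cause 1: the API server keeps the object until this list is empty.
    finalizers = meta.get("finalizers") or []
    if finalizers:
        hints.append("Blocked by finalizers: " + ", ".join(finalizers))

    # Cause 2: a preStop hook may run for as long as the grace period allows.
    grace = spec.get("terminationGracePeriodSeconds", 30)
    for container in spec.get("containers", []):
        if "preStop" in (container.get("lifecycle") or {}):
            hints.append(
                f"Container {container['name']} has a preStop hook; "
                f"it may run for up to {grace}s."
            )

    # Cause 3: a StatefulSet owner may be waiting on this Pod.
    for owner in meta.get("ownerReferences") or []:
        if owner.get("kind") == "StatefulSet":
            hints.append(
                f"Owned by StatefulSet {owner['name']}; check its podManagementPolicy."
            )

    return hints or ["No obvious cause in the Pod object; check the node and kubelet."]


# Hypothetical, trimmed-down sample of a stuck Pod (timestamp invented for illustration).
sample = json.loads("""
{
  "metadata": {
    "name": "finalizer-demo",
    "deletionTimestamp": "2024-01-01T12:00:30Z",
    "finalizers": ["kubernetes"]
  },
  "spec": {"containers": [{"name": "finalizer-demo", "image": "nginx:latest"}]}
}
""")

for hint in diagnose_terminating_pod(sample):
    print(hint)
```

The hints are only a starting point: a Pod can also hang because its node is unreachable, which does not show up in these fields.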

If you need a refresher on Pods in Kubernetes, you can read our article on What Are Pods in Kubernetes? A Quick Explanation for a simple overview.

Resolving Pod Stuck in Termination Due to Finalizer

In this section, we shall use a simple example to demonstrate how to resolve the termination issue due to the finalizer.

Create a YAML file named deployment.yaml with the following specs:

apiVersion: v1
kind: Pod
metadata:
  name: finalizer-demo
  finalizers:
    - kubernetes
spec:
  containers:
    - name: finalizer-demo
      image: nginx:latest
      ports:
        - containerPort: 80

Create a Pod by running the following command:

kubectl create -f deployment.yamlVerify the Pod has been created using the following command:

kubectl get podsAfter the Pod creation process is complete, delete it by running the following command:

kubectl delete pod finalizer-demoIf you run the kubectl get pods again, you’ll see the Pod is now stuck in the terminating stage. When you deleted the Pod, it was not deleted. Instead, it was modified to include deletion time. To view this, run the following command and check the metadata section:

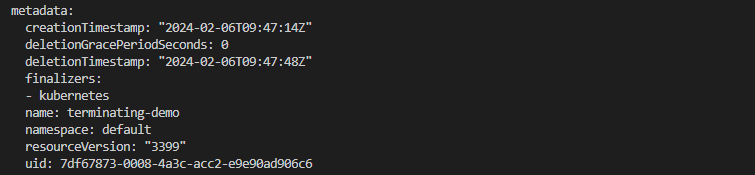

kubectl get pod/finalizer-demo -o yamlYou should see this in the metadata section:

To delete this pod, you’ll need to remove the finalizer manually. You do this by running the command:

kubectl edit pod finalizer-demo -n defaultThis will open the Pod's YAML definition in your default editor. Find the finalizers field in the metadata section and delete the line that contains the finalizer name. Save and exit the editor. This will update the Pod's definition and trigger its deletion.
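The same removal can be done without opening an editor by sending a patch. The sketch below builds a JSON Patch (RFC 6902) document that removes one named finalizer. The helper name `finalizer_removal_patch` is an assumption made for illustration; the resulting string is meant to be passed to `kubectl patch pod finalizer-demo --type=json -p '<patch>'`.

```python
import json


def finalizer_removal_patch(pod: dict, finalizer: str) -> str:
    """Build a JSON Patch (RFC 6902) that removes one finalizer from a Pod."""
    finalizers = pod.get("metadata", {}).get("finalizers") or []
    index = finalizers.index(finalizer)  # raises ValueError if the finalizer is absent
    ops = [
        # The "test" op makes the patch fail if the list changed since it was read.
        {"op": "test", "path": f"/metadata/finalizers/{index}", "value": finalizer},
        {"op": "remove", "path": f"/metadata/finalizers/{index}"},
    ]
    return json.dumps(ops)


# Minimal stand-in for the Pod from this section.
pod = {"metadata": {"name": "finalizer-demo", "finalizers": ["kubernetes"]}}
print(finalizer_removal_patch(pod, "kubernetes"))
```

Removing a single entry by index, rather than clearing the whole list, leaves any other finalizers on the Pod untouched.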

Resolving Pod Stuck in Termination Due to PreStop Hook

Just like in the previous section, we shall use a simple example to demonstrate how to resolve the termination issue due to the preStop hook.

Create a YAML file named deployment.yaml with the following specs:

apiVersion: v1
kind: Pod
metadata:
  name: prestop-demo
spec:
  terminationGracePeriodSeconds: 3600
  containers:
    - name: prestop-demo
      image: nginx:latest
      ports:
        - containerPort: 80
      lifecycle:
        preStop:
          exec:
            command:
              - /bin/sh
              - -c
              - sleep 3600

Create a Pod by running the following command:

kubectl create -f deployment.yamlVerify the Pod has been created using the following command:

kubectl get podsAfter the Pod creation process is complete, delete it by running the following command:

kubectl delete pod prestop-demoIf you run the kubectl get pods again, you’ll see the Pod is now stuck in the terminating stage. When you deleted the Pod, it was not deleted. Instead, it was modified to include deletion time. To view this, run the following command and check the metadata section:

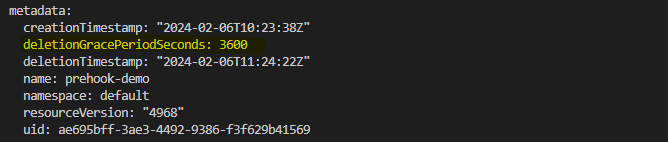

kubectl get pod/prestop-demo -o yamlYou should see this in the metadata section:

Note that the deletionGracePeriodSeconds is set at 3600. This means the pod will be terminated one hour after the delete command is executed. However, you can remove it instantly and bypass the grace period. You do that by setting the new grace period to 0 using the following command:

kubectl delete Pod prestop-demo -n default --force --grace-period=0The command above bypasses the graceful termination process and sends a SIGKILL signal to the Pod's containers. This might cause some data loss or corruption, so use this method with caution.
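To see why this Pod takes an hour to go away, it helps to model how the preStop hook and the grace period interact. The sketch below is a simplified model, not kubelet code. It assumes the documented behavior that the hook runs first and counts against the grace period, and that the kubelet grants a 2-second window for signal handling when the hook has used the grace period up. The function name and its parameters are hypothetical.

```python
def shutdown_timeline(grace_period: float, prestop_seconds: float, app_exit_seconds: float) -> dict:
    """Approximate when a container receives its termination signal and when it is gone.

    All times are seconds after the delete request. This is a simplified model:
    it assumes the kubelet's 2-second minimum window for signal handling once
    the preStop hook has consumed the grace period.
    """
    min_term_window = 2.0
    # The preStop hook runs first and is cut off when the grace period ends.
    sigterm_at = min(prestop_seconds, grace_period)
    # The rest of the grace period (at least 2 seconds) is left for the application.
    term_window = max(grace_period - sigterm_at, min_term_window)
    # If the application needs longer than that, it is killed with SIGKILL.
    sigkill = app_exit_seconds > term_window
    stopped_at = sigterm_at + min(app_exit_seconds, term_window)
    return {"sigterm_at": sigterm_at, "stopped_at": stopped_at, "sigkill": sigkill}


# The prestop-demo Pod: 3600s grace period and a hook that sleeps for 3600s.
print(shutdown_timeline(3600, 3600, 1))
# Default 30s grace period, a 3s hook, and an application that exits in 5s.
print(shutdown_timeline(30, 3, 5))
```

In the first case the hook alone accounts for the full hour, which is why shortening the hook or the grace period is usually a better fix than force deletion.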

Resolving Pod Stuck In Termination Due To PodManagementPolicy of OrderedReady

Again, we shall use a simple example to demonstrate how to resolve the termination issue due to PodManagementPolicy of OrderedReady.

Create a YAML file named statefulset.yaml with the following specs.

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx"
  replicas: 3
  podManagementPolicy: OrderedReady # default value
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: www
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 1Gi

Create a StatefulSet by running the following command:

kubectl create -f statefulset.yamlVerify that StatefulSet has been created using the following command:

kubectl get statefulsetsNow, try to delete the StatefulSet to see if it will be stuck in the terminating stage:

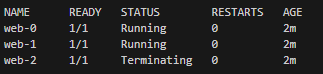

kubectl delete statefulset webIf you run the kubectl get pods command, you will see that the Pods are deleted one by one, starting from the highest ordinal to the lowest. This is because the OrderedReady policy ensures that the Pods are created and deleted in order. This can be slow and inefficient, especially if you have a large number of Pods.

To change the PodManagementPolicy to Parallel, you need to edit the StatefulSet’s YAML definition. You do this by running the command:

kubectl edit statefulset web -n defaultThis will open the StatefulSet’s YAML definition in your default editor. Find the podManagementPolicy field in the spec section and change its value from OrderedReady to Parallel. Save and exit the editor. This will update the StatefulSet’s definition and allow it to create and delete Pods in parallel without waiting for the previous ones to be resolved.

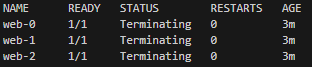

Now, if you try to delete the StatefulSet again, you will see that the Pods are deleted in parallel, without any order. This can be faster and more efficient, especially if you have a large number of Pods.

kubectl delete statefulset webIf you run the kubectl get pods command, you will see something like this:
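As a rough mental model of how the two policies order Pod termination when a StatefulSet scales down to zero, the sketch below groups Pod names into "waves", where all Pods in a wave are terminated together. The function name `scale_down_waves` is an assumption for illustration, and the model ignores timing and readiness checks.

```python
def scale_down_waves(name: str, replicas: int, policy: str = "OrderedReady") -> list[list[str]]:
    """Group Pod names into waves; Pods in the same wave are terminated together."""
    # StatefulSet Pods are named <statefulset-name>-<ordinal>, starting at 0.
    pods = [f"{name}-{i}" for i in range(replicas)]
    if policy == "Parallel":
        return [pods] if pods else []
    if policy == "OrderedReady":
        # Highest ordinal first; each Pod waits for the previous one to be gone.
        return [[pod] for pod in reversed(pods)]
    raise ValueError(f"unknown podManagementPolicy: {policy}")


print(scale_down_waves("web", 3))              # [['web-2'], ['web-1'], ['web-0']]
print(scale_down_waves("web", 3, "Parallel"))  # [['web-0', 'web-1', 'web-2']]
```

With OrderedReady, a Pod stuck in the first wave holds back every later wave. With Parallel there is only one wave, so a stuck Pod delays only itself.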

Prevent Future Occurrences

Below are some preventative measures you can take to avoid having Pods stuck in termination:

- Implement preStop hooks and signal handling for graceful shutdown. You can use a preStop hook to perform cleanup or finalization tasks, such as closing connections, flushing buffers, or sending notifications. Keep hooks short and bounded so that they finish well within terminationGracePeriodSeconds. This helps the Pod shut down gracefully and avoids errors or data loss.

- Add liveness/readiness probes to prevent recreating loops. Use these probes to detect and recover from failures, such as deadlocks, crashes, or network issues. This will prevent the Pod from getting stuck in a loop of recreating and terminating.

Learn more about readiness probes in our article Kubernetes Readiness Probe: A Simple Guide with Examples.

- Handle orphaned/erroneous child processes. Sometimes, a container might spawn child processes that are not properly terminated when the container exits. These processes might keep running in the background, consuming resources and preventing the Pod from being deleted. Below are the methods you can use to handle these processes:

- Rely on the container's PID namespace. A PID namespace isolates the process IDs of a group of processes. By default each container gets its own PID namespace, so its processes cannot see or signal processes outside it, and when the container's main process (PID 1) exits, the kernel kills every process left in that namespace. Make sure the main process actually exits when it receives the termination signal. Otherwise the container lingers until the grace period runs out.

- Use an init process. The main process of a container runs as PID 1 and is responsible for forwarding signals and reaping child processes, which many applications do not do. Run a minimal init such as tini or dumb-init as the container's entrypoint so that it becomes the parent of your application, passes termination signals on, and reaps zombie processes. Note that an init container cannot play this role: init containers run to completion before the main containers start, so they are suited to setup tasks such as preparing the environment or configuration, not to supervising processes.

- Monitor and alert on long termination times. Use metrics and events to monitor and alert on long termination times. This will allow you to address the termination issues as soon as they occur.
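For the monitoring measure, one simple signal is a Pod that still exists after its deletion deadline. The sketch below assumes a list of Pod objects parsed from JSON (for example the `items` array of `kubectl get pods -A -o json`) and relies on deletionTimestamp already including the grace period. The function name, the `slack_seconds` threshold, and the sample data are illustrative assumptions.

```python
from datetime import datetime, timezone


def overdue_terminating_pods(pods: list[dict], now: datetime, slack_seconds: int = 60) -> list[tuple[str, float]]:
    """Return (name, seconds_overdue) for Pods still present after their deletion deadline."""
    overdue = []
    for pod in pods:
        meta = pod.get("metadata", {})
        stamp = meta.get("deletionTimestamp")
        if not stamp:
            continue  # this Pod is not being deleted
        # deletionTimestamp is an RFC 3339 UTC time, e.g. 2024-01-01T12:00:30Z
        deadline = datetime.strptime(stamp, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
        late_by = (now - deadline).total_seconds()
        if late_by > slack_seconds:
            overdue.append((meta.get("name", "<unknown>"), late_by))
    return overdue


# Hypothetical sample data; the timestamps are invented for illustration.
sample_pods = [
    {"metadata": {"name": "finalizer-demo", "deletionTimestamp": "2024-01-01T12:00:30Z"}},
    {"metadata": {"name": "prestop-demo", "deletionTimestamp": "2024-01-01T13:00:00Z"}},
    {"metadata": {"name": "healthy"}},
]
now = datetime(2024, 1, 1, 12, 10, 0, tzinfo=timezone.utc)
print(overdue_terminating_pods(sample_pods, now))  # [('finalizer-demo', 570.0)]
```

A Pod whose deadline is still in the future, like prestop-demo here, is inside its grace period and is not reported. Only Pods that have outlived the deadline by more than the slack are flagged.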

Conclusion

In this article, we have explained why Kubernetes Pods get stuck in the ‘Terminating’ phase, how to diagnose the problem, and how to resolve it in a few simple steps.

I hope you found this article helpful and interesting. If you have any questions or feedback, please feel free to leave a comment below.
