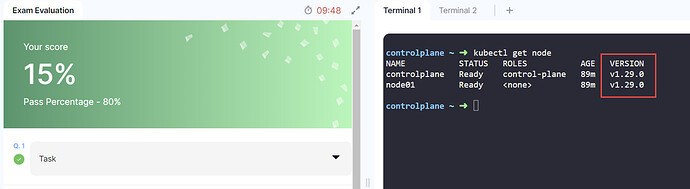

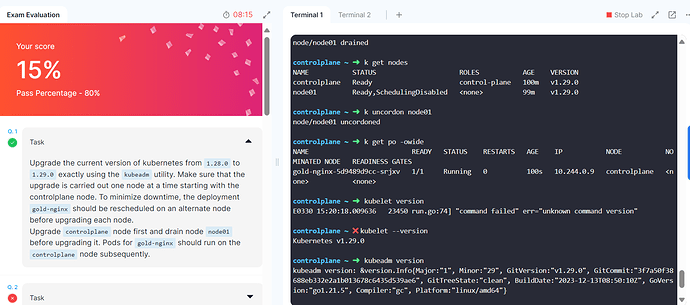

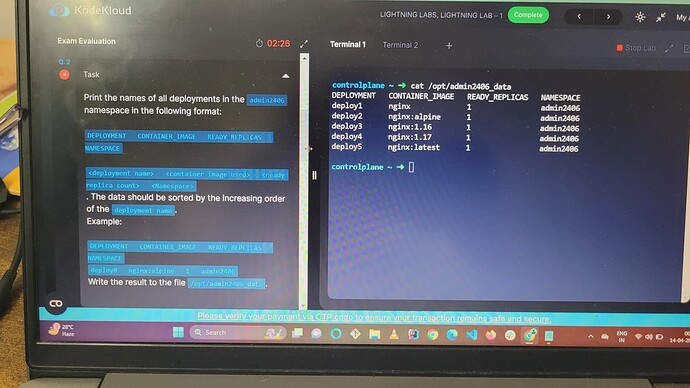

Kubeadm cluster upgrade: please verify my solution, or check the evaluation/validation criteria.

I attempted the task with the commands below, and this is exactly the solution I submitted.

controlplane ~ ➜ echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.29/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.29/deb/ /

controlplane ~ ➜ curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.29/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

File '/etc/apt/keyrings/kubernetes-apt-keyring.gpg' exists. Overwrite? (y/N) y

controlplane ~ ➜ sudo apt-get update

Get:2 https://download.docker.com/linux/ubuntu focal InRelease [57.7 kB]

Get:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb InRelease [1,186 B]

Get:3 http://archive.ubuntu.com/ubuntu focal InRelease [265 kB]

Get:4 https://download.docker.com/linux/ubuntu focal/stable amd64 Packages [47.8 kB]

Get:5 http://security.ubuntu.com/ubuntu focal-security InRelease [114 kB]

Get:6 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb Packages [6,511 B]

Get:7 http://archive.ubuntu.com/ubuntu focal-updates InRelease [114 kB]

Get:8 http://archive.ubuntu.com/ubuntu focal-backports InRelease [108 kB]

Get:9 http://archive.ubuntu.com/ubuntu focal/multiverse amd64 Packages [177 kB]

Get:10 http://archive.ubuntu.com/ubuntu focal/universe amd64 Packages [11.3 MB]

Get:11 http://archive.ubuntu.com/ubuntu focal/main amd64 Packages [1,275 kB]

Get:12 http://archive.ubuntu.com/ubuntu focal/restricted amd64 Packages [33.4 kB]

Get:13 http://archive.ubuntu.com/ubuntu focal-updates/restricted amd64 Packages [3,593 kB]

Get:14 http://archive.ubuntu.com/ubuntu focal-updates/multiverse amd64 Packages [32.4 kB]

Get:15 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 Packages [3,980 kB]

Get:16 http://archive.ubuntu.com/ubuntu focal-updates/universe amd64 Packages [1,489 kB]

Get:17 http://archive.ubuntu.com/ubuntu focal-backports/universe amd64 Packages [28.6 kB]

Get:18 http://archive.ubuntu.com/ubuntu focal-backports/main amd64 Packages [55.2 kB]

Get:19 http://security.ubuntu.com/ubuntu focal-security/universe amd64 Packages [1,194 kB]

Get:20 http://security.ubuntu.com/ubuntu focal-security/main amd64 Packages [3,505 kB]

Get:21 http://security.ubuntu.com/ubuntu focal-security/restricted amd64 Packages [3,442 kB]

Get:22 http://security.ubuntu.com/ubuntu focal-security/multiverse amd64 Packages [29.7 kB]

Fetched 30.9 MB in 3s (10.7 MB/s)

Reading package lists... Done

controlplane ~ ➜ sudo apt update

Hit:2 https://download.docker.com/linux/ubuntu focal InRelease

Hit:3 http://archive.ubuntu.com/ubuntu focal InRelease

Hit:4 http://security.ubuntu.com/ubuntu focal-security InRelease

Hit:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb InRelease

Hit:5 http://archive.ubuntu.com/ubuntu focal-updates InRelease

Hit:6 http://archive.ubuntu.com/ubuntu focal-backports InRelease

Reading package lists... Done

Building dependency tree

Reading state information... Done

80 packages can be upgraded. Run 'apt list --upgradable' to see them.

controlplane ~ ➜ sudo apt-cache madison kubeadm

kubeadm | 1.29.3-1.1 | https://pkgs.k8s.io/core:/stable:/v1.29/deb Packages

kubeadm | 1.29.2-1.1 | https://pkgs.k8s.io/core:/stable:/v1.29/deb Packages

kubeadm | 1.29.1-1.1 | https://pkgs.k8s.io/core:/stable:/v1.29/deb Packages

kubeadm | 1.29.0-1.1 | https://pkgs.k8s.io/core:/stable:/v1.29/deb Packages
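Rather than copying the version string by hand, the newest patch release could be pulled out of the `apt-cache madison` output. This is only a sketch, assuming the three-column `package | version | repository` layout shown above and GNU `sort -V`. `latest_version` is a hypothetical helper name, and the sample text is pasted from this post rather than read from a live node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the highest version found in
# `apt-cache madison <package>` output supplied on stdin.
latest_version() {
  # The second '|'-separated field is the version; strip its padding,
  # then version-sort and keep the last (highest) entry.
  awk -F'|' 'NF >= 2 { gsub(/ /, "", $2); print $2 }' | sort -V | tail -n 1
}

# Sample rows copied from the output above. On a real node one would run:
#   apt-cache madison kubeadm | latest_version
madison_sample='kubeadm | 1.29.3-1.1 | https://pkgs.k8s.io/core:/stable:/v1.29/deb Packages
kubeadm | 1.29.2-1.1 | https://pkgs.k8s.io/core:/stable:/v1.29/deb Packages
kubeadm | 1.29.1-1.1 | https://pkgs.k8s.io/core:/stable:/v1.29/deb Packages
kubeadm | 1.29.0-1.1 | https://pkgs.k8s.io/core:/stable:/v1.29/deb Packages'

printf '%s\n' "$madison_sample" | latest_version
```

If the lab asks for a specific patch version, that exact string should be used instead of the newest one.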

controlplane ~ ➜ kubectl drain controlplane --ignire-daemonsets

error: unknown flag: --ignire-daemonsets

See 'kubectl drain --help' for usage.

controlplane ~ ✖ kubectl drain controlplane --ignore-daemonsets

node/controlplane cordoned

Warning: ignoring DaemonSet-managed Pods: kube-system/kube-proxy-qq69r, kube-system/weave-net-kvrmn

evicting pod kube-system/coredns-5dd5756b68-klcmk

evicting pod kube-system/coredns-5dd5756b68-hslq7

pod/coredns-5dd5756b68-hslq7 evicted

pod/coredns-5dd5756b68-klcmk evicted

node/controlplane drained

controlplane ~ ➜ sudo apt-mark unhold kubeadm && \

> sudo apt-get update && sudo apt-get install -y kubeadm='1.29.3-1.1' && \

> sudo apt-mark hold kubeadm

kubeadm was already not hold.

Hit:2 https://download.docker.com/linux/ubuntu focal InRelease

Hit:3 http://security.ubuntu.com/ubuntu focal-security InRelease

Hit:4 http://archive.ubuntu.com/ubuntu focal InRelease

Hit:5 http://archive.ubuntu.com/ubuntu focal-updates InRelease

Hit:6 http://archive.ubuntu.com/ubuntu focal-backports InRelease

Hit:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb InRelease

Reading package lists... Done

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages will be upgraded:

kubeadm

1 upgraded, 0 newly installed, 0 to remove and 79 not upgraded.

Need to get 10.1 MB of archives.

After this operation, 2,408 kB disk space will be freed.

Get:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb kubeadm 1.29.3-1.1 [10.1 MB]

Fetched 10.1 MB in 0s (43.2 MB/s)

debconf: delaying package configuration, since apt-utils is not installed

(Reading database ... 20477 files and directories currently installed.)

Preparing to unpack .../kubeadm_1.29.3-1.1_amd64.deb ...

Unpacking kubeadm (1.29.3-1.1) over (1.28.0-1.1) ...

Setting up kubeadm (1.29.3-1.1) ...

kubeadm set on hold.

controlplane ~ ➜ kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"29", GitVersion:"v1.29.3", GitCommit:"6813625b7cd706db5bc7388921be03071e1a492d", GitTreeState:"clean", BuildDate:"2024-03-15T00:06:16Z", GoVersion:"go1.21.8", Compiler:"gc", Platform:"linux/amd64"}

controlplane ~ ➜ sudo kubeadm upgrade plan v1.29.3

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks.

[upgrade] Running cluster health checks

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.28.0

[upgrade/versions] kubeadm version: v1.29.3

[upgrade/versions] Target version: v1.29.3

[upgrade/versions] Latest version in the v1.28 series: v1.29.3

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT TARGET

kubelet 2 x v1.28.0 v1.29.3

Upgrade to the latest version in the v1.28 series:

COMPONENT CURRENT TARGET

kube-apiserver v1.28.0 v1.29.3

kube-controller-manager v1.28.0 v1.29.3

kube-scheduler v1.28.0 v1.29.3

kube-proxy v1.28.0 v1.29.3

CoreDNS v1.10.1 v1.11.1

etcd 3.5.9-0 3.5.12-0

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.29.3

_____________________________________________________________________

The table below shows the current state of component configs as understood by this version of kubeadm.

Configs that have a "yes" mark in the "MANUAL UPGRADE REQUIRED" column require manual config upgrade or

resetting to kubeadm defaults before a successful upgrade can be performed. The version to manually

upgrade to is denoted in the "PREFERRED VERSION" column.

API GROUP CURRENT VERSION PREFERRED VERSION MANUAL UPGRADE REQUIRED

kubeproxy.config.k8s.io v1alpha1 v1alpha1 no

kubelet.config.k8s.io v1beta1 v1beta1 no

_____________________________________________________________________
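The plan above goes from v1.28.0 to v1.29.3, which is a single minor-version hop; kubeadm does not support skipping minor versions. A rough sketch of that check follows, assuming both versions share the same major number and use the `vMAJOR.MINOR.PATCH` form. `minor_gap` is a hypothetical helper, not a kubeadm command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print how many minor versions separate two
# vMAJOR.MINOR.PATCH strings (assumes the major version is the same).
minor_gap() {
  local from="${1#v}" to="${2#v}"   # drop the leading "v"
  local from_minor to_minor
  from_minor="$(printf '%s' "$from" | cut -d. -f2)"
  to_minor="$(printf '%s' "$to" | cut -d. -f2)"
  echo $(( to_minor - from_minor ))
}

# Versions taken from the `kubeadm upgrade plan` output above.
gap="$(minor_gap v1.28.0 v1.29.3)"
if [ "$gap" -le 1 ]; then
  echo "ok: minor gap is $gap"
else
  echo "unsupported: upgrade one minor version at a time" >&2
fi
```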

controlplane ~ ➜ sudo kubeadm upgrade apply v1.29.3

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks.

[upgrade] Running cluster health checks

[upgrade/version] You have chosen to change the cluster version to "v1.29.3"

[upgrade/versions] Cluster version: v1.28.0

[upgrade/versions] kubeadm version: v1.29.3

[upgrade] Are you sure you want to proceed? [y/N]: y

[upgrade/prepull] Pulling images required for setting up a Kubernetes cluster

[upgrade/prepull] This might take a minute or two, depending on the speed of your internet connection

[upgrade/prepull] You can also perform this action in beforehand using 'kubeadm config images pull'

W0330 14:29:17.115596 14448 checks.go:835] detected that the sandbox image "k8s.gcr.io/pause:3.6" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.k8s.io/pause:3.9" as the CRI sandbox image.

[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.29.3" (timeout: 5m0s)...

[upgrade/etcd] Upgrading to TLS for etcd

[upgrade/staticpods] Preparing for "etcd" upgrade

[upgrade/staticpods] Renewing etcd-server certificate

[upgrade/staticpods] Renewing etcd-peer certificate

[upgrade/staticpods] Renewing etcd-healthcheck-client certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/etcd.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2024-03-30-14-29-20/etcd.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

[apiclient] Found 1 Pods for label selector component=etcd

[upgrade/staticpods] Component "etcd" upgraded successfully!

[upgrade/etcd] Waiting for etcd to become available

[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests1796710358"

[upgrade/staticpods] Preparing for "kube-apiserver" upgrade

[upgrade/staticpods] Renewing apiserver certificate

[upgrade/staticpods] Renewing apiserver-kubelet-client certificate

[upgrade/staticpods] Renewing front-proxy-client certificate

[upgrade/staticpods] Renewing apiserver-etcd-client certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2024-03-30-14-29-20/kube-apiserver.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

[apiclient] Found 1 Pods for label selector component=kube-apiserver

[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-controller-manager" upgrade

[upgrade/staticpods] Renewing controller-manager.conf certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2024-03-30-14-29-20/kube-controller-manager.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

[apiclient] Found 1 Pods for label selector component=kube-controller-manager

[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-scheduler" upgrade

[upgrade/staticpods] Renewing scheduler.conf certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2024-03-30-14-29-20/kube-scheduler.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

[apiclient] Found 1 Pods for label selector component=kube-scheduler

[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upgrade] Backing up kubelet config file to /etc/kubernetes/tmp/kubeadm-kubelet-config2303617595/config.yaml

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "super-admin.conf" kubeconfig file

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.29.3". Enjoy!

[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.

controlplane ~ ➜ sudo apt-mark unhold kubelet kubectl && \

> sudo apt-get update && sudo apt-get install -y kubelet='1.29.3-1.1' kubectl='1.29.3-1.1' && \

> sudo apt-mark hold kubelet kubectl

kubelet was already not hold.

kubectl was already not hold.

Hit:2 https://download.docker.com/linux/ubuntu focal InRelease

Hit:3 http://archive.ubuntu.com/ubuntu focal InRelease

Hit:4 http://archive.ubuntu.com/ubuntu focal-updates InRelease

Hit:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb InRelease

Hit:5 http://archive.ubuntu.com/ubuntu focal-backports InRelease

Hit:6 http://security.ubuntu.com/ubuntu focal-security InRelease

Reading package lists... Done

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages will be upgraded:

kubectl kubelet

2 upgraded, 0 newly installed, 0 to remove and 77 not upgraded.

Need to get 30.3 MB of archives.

After this operation, 1,090 kB of additional disk space will be used.

Get:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb kubectl 1.29.3-1.1 [10.5 MB]

Get:2 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb kubelet 1.29.3-1.1 [19.8 MB]

Fetched 30.3 MB in 0s (65.5 MB/s)

debconf: delaying package configuration, since apt-utils is not installed

(Reading database ... 20477 files and directories currently installed.)

Preparing to unpack .../kubectl_1.29.3-1.1_amd64.deb ...

Unpacking kubectl (1.29.3-1.1) over (1.28.0-1.1) ...

Preparing to unpack .../kubelet_1.29.3-1.1_amd64.deb ...

Unpacking kubelet (1.29.3-1.1) over (1.28.0-1.1) ...

Setting up kubectl (1.29.3-1.1) ...

Setting up kubelet (1.29.3-1.1) ...

kubelet set on hold.

kubectl set on hold.

controlplane ~ ➜ sudo systemctl daemon-reload

controlplane ~ ➜ sudo systemctl restart kubelet

controlplane ~ ➜ k get odes

error: the server doesn't have a resource type "odes"

controlplane ~ ✖ k get nodes

NAME STATUS ROLES AGE VERSION

controlplane NotReady,SchedulingDisabled control-plane 90m v1.29.3

node01 Ready <none> 90m v1.28.0

controlplane ~ ➜ k uncordon controlplane

node/controlplane uncordoned

controlplane ~ ➜ k get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

gold-nginx-5d9489d9cc-ffq2t 1/1 Running 0 11m 10.244.192.1 node01 <none> <none>

controlplane ~ ➜ k drain node01 --ignore-daemonsets

node/node01 cordoned

Warning: ignoring DaemonSet-managed Pods: kube-system/kube-proxy-84c8j, kube-system/weave-net-mbqzq

evicting pod kube-system/coredns-76f75df574-xnx7l

evicting pod admin2406/deploy2-5d4697f587-rm7tw

evicting pod admin2406/deploy5-7d5f6f769b-p6zjr

evicting pod default/gold-nginx-5d9489d9cc-ffq2t

evicting pod kube-system/coredns-76f75df574-vm5zs

evicting pod admin2406/deploy4-c669bb985-t4tk2

evicting pod admin2406/deploy1-67b55d4f9f-t7vfr

evicting pod admin2406/deploy3-59985b7bb9-ll2ql

pod/deploy1-67b55d4f9f-t7vfr evicted

pod/deploy5-7d5f6f769b-p6zjr evicted

pod/deploy3-59985b7bb9-ll2ql evicted

pod/deploy4-c669bb985-t4tk2 evicted

I0330 14:33:43.620808 19706 request.go:697] Waited for 1.045947503s due to client-side throttling, not priority and fairness, request: GET:https://controlplane:6443/api/v1/namespaces/default/pods/gold-nginx-5d9489d9cc-ffq2t

pod/gold-nginx-5d9489d9cc-ffq2t evicted

pod/deploy2-5d4697f587-rm7tw evicted

pod/coredns-76f75df574-vm5zs evicted

pod/coredns-76f75df574-xnx7l evicted

node/node01 drained

controlplane ~ ➜ k get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

gold-nginx-5d9489d9cc-h8ct4 1/1 Running 0 11s 10.244.0.6 controlplane <none> <none>

controlplane ~ ➜ ssh node01

root@node01 ~ ➜ echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.29/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.29/deb/ /

root@node01 ~ ➜ curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.29/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

File '/etc/apt/keyrings/kubernetes-apt-keyring.gpg' exists. Overwrite? (y/N) y

root@node01 ~ ➜ sudo apt-get update

Get:2 https://download.docker.com/linux/ubuntu focal InRelease [57.7 kB]

Get:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb InRelease [1186 B]

Get:3 http://security.ubuntu.com/ubuntu focal-security InRelease [114 kB]

Get:4 http://archive.ubuntu.com/ubuntu focal InRelease [265 kB]

Get:5 https://download.docker.com/linux/ubuntu focal/stable amd64 Packages [47.8 kB]

Get:6 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb Packages [6511 B]

Get:7 http://archive.ubuntu.com/ubuntu focal-updates InRelease [114 kB]

Get:8 http://security.ubuntu.com/ubuntu focal-security/multiverse amd64 Packages [29.7 kB]

Get:9 http://archive.ubuntu.com/ubuntu focal-backports InRelease [108 kB]

Get:10 http://security.ubuntu.com/ubuntu focal-security/universe amd64 Packages [1194 kB]

Get:11 http://archive.ubuntu.com/ubuntu focal/restricted amd64 Packages [33.4 kB]

Get:12 http://archive.ubuntu.com/ubuntu focal/universe amd64 Packages [11.3 MB]

Get:13 http://security.ubuntu.com/ubuntu focal-security/main amd64 Packages [3505 kB]

Get:14 http://security.ubuntu.com/ubuntu focal-security/restricted amd64 Packages [3442 kB]

Get:15 http://archive.ubuntu.com/ubuntu focal/multiverse amd64 Packages [177 kB]

Get:16 http://archive.ubuntu.com/ubuntu focal/main amd64 Packages [1275 kB]

Get:17 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 Packages [3980 kB]

Get:18 http://archive.ubuntu.com/ubuntu focal-updates/universe amd64 Packages [1489 kB]

Get:19 http://archive.ubuntu.com/ubuntu focal-updates/restricted amd64 Packages [3593 kB]

Get:20 http://archive.ubuntu.com/ubuntu focal-updates/multiverse amd64 Packages [32.4 kB]

Get:21 http://archive.ubuntu.com/ubuntu focal-backports/universe amd64 Packages [28.6 kB]

Get:22 http://archive.ubuntu.com/ubuntu focal-backports/main amd64 Packages [55.2 kB]

Fetched 30.9 MB in 2s (12.9 MB/s)

Reading package lists... Done

root@node01 ~ ➜ sudo apt-mark unhold kubeadm && \

> sudo apt-get update && sudo apt-get install -y kubeadm='1.29.3-1.1' && \

> sudo apt-mark hold kubeadm

Canceled hold on kubeadm.

Hit:2 http://archive.ubuntu.com/ubuntu focal InRelease

Hit:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb InRelease

Hit:3 http://security.ubuntu.com/ubuntu focal-security InRelease

Hit:4 https://download.docker.com/linux/ubuntu focal InRelease

Hit:5 http://archive.ubuntu.com/ubuntu focal-updates InRelease

Hit:6 http://archive.ubuntu.com/ubuntu focal-backports InRelease

Reading package lists... Done

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages will be upgraded:

kubeadm

1 upgraded, 0 newly installed, 0 to remove and 73 not upgraded.

Need to get 10.1 MB of archives.

After this operation, 2408 kB disk space will be freed.

Get:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb kubeadm 1.29.3-1.1 [10.1 MB]

Fetched 10.1 MB in 0s (41.7 MB/s)

debconf: delaying package configuration, since apt-utils is not installed

(Reading database ... 14854 files and directories currently installed.)

Preparing to unpack .../kubeadm_1.29.3-1.1_amd64.deb ...

Unpacking kubeadm (1.29.3-1.1) over (1.28.0-1.1) ...

Setting up kubeadm (1.29.3-1.1) ...

kubeadm set on hold.

root@node01 ~ ➜ sudo kubeadm upgrade node

[upgrade] Reading configuration from the cluster...

[upgrade] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks

[preflight] Skipping prepull. Not a control plane node.

[upgrade] Skipping phase. Not a control plane node.

[upgrade] Backing up kubelet config file to /etc/kubernetes/tmp/kubeadm-kubelet-config62544515/config.yaml

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[upgrade] The configuration for this node was successfully updated!

[upgrade] Now you should go ahead and upgrade the kubelet package using your package manager.

root@node01 ~ ➜ sudo apt-mark unhold kubelet kubectl && \

> sudo apt-get update && sudo apt-get install -y kubelet='1.29.3-1.1' kubectl='1.29.3-1.1' && \

> sudo apt-mark hold kubelet kubectl

Canceled hold on kubelet.

Canceled hold on kubectl.

Hit:2 https://download.docker.com/linux/ubuntu focal InRelease

Hit:3 http://archive.ubuntu.com/ubuntu focal InRelease

Hit:4 http://security.ubuntu.com/ubuntu focal-security InRelease

Hit:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb InRelease

Hit:5 http://archive.ubuntu.com/ubuntu focal-updates InRelease

Hit:6 http://archive.ubuntu.com/ubuntu focal-backports InRelease

Reading package lists... Done

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages will be upgraded:

kubectl kubelet

2 upgraded, 0 newly installed, 0 to remove and 71 not upgraded.

Need to get 30.3 MB of archives.

After this operation, 1090 kB of additional disk space will be used.

Get:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb kubectl 1.29.3-1.1 [10.5 MB]

Get:2 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb kubelet 1.29.3-1.1 [19.8 MB]

Fetched 30.3 MB in 0s (78.9 MB/s)

debconf: delaying package configuration, since apt-utils is not installed

(Reading database ... 14854 files and directories currently installed.)

Preparing to unpack .../kubectl_1.29.3-1.1_amd64.deb ...

Unpacking kubectl (1.29.3-1.1) over (1.28.0-1.1) ...

Preparing to unpack .../kubelet_1.29.3-1.1_amd64.deb ...

Unpacking kubelet (1.29.3-1.1) over (1.28.0-1.1) ...

Setting up kubectl (1.29.3-1.1) ...

Setting up kubelet (1.29.3-1.1) ...

kubelet set on hold.

kubectl set on hold.

root@node01 ~ ➜ sudo systemctl daemon-reload

root@node01 ~ ➜ sudo systemctl restart kubelet

root@node01 ~ ➜ sudo ssh controlplane

The authenticity of host 'controlplane (192.26.153.9)' can't be established.

ECDSA key fingerprint is SHA256:9umP71M8VMSb00TaFo8fb9/VBtQI28lTwOmUqE1ynfo.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added 'controlplane,192.26.153.9' (ECDSA) to the list of known hosts.

root@controlplane's password:

Permission denied, please try again.

root@controlplane's password:

Permission denied, please try again.

root@controlplane's password:

root@node01 ~ ✖ exit

logout

Connection to node01 closed.

controlplane ~ ✖ k get nodes

NAME STATUS ROLES AGE VERSION

controlplane Ready control-plane 94m v1.29.3

node01 Ready,SchedulingDisabled <none> 93m v1.29.3

controlplane ~ ➜ k uncordon node01

node/node01 uncordoned

controlplane ~ ➜ k get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

gold-nginx-5d9489d9cc-h8ct4 1/1 Running 0 3m17s 10.244.0.6 controlplane <none> <none>

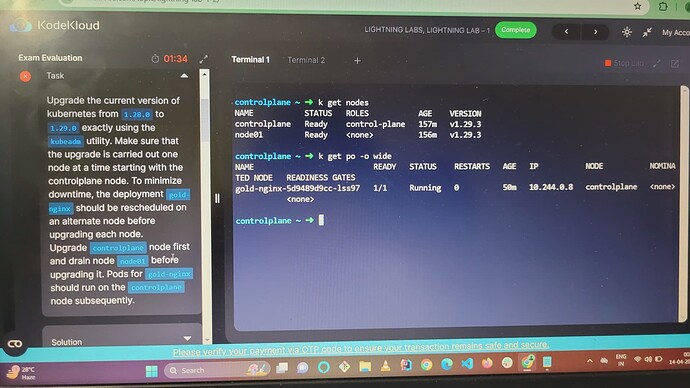

controlplane ~ ➜ k get nodes

NAME STATUS ROLES AGE VERSION

controlplane Ready control-plane 94m v1.29.3

node01 Ready <none> 93m v1.29.3

controlplane ~ ➜ k get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

gold-nginx-5d9489d9cc-h8ct4 1/1 Running 0 3m27s 10.244.0.6 controlplane <none> <none>

controlplane ~ ➜
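For reference, the end state in the last `k get nodes` output could be checked mechanically instead of by eye. This is a minimal sketch, assuming the default five-column layout (NAME STATUS ROLES AGE VERSION). `nodes_ok` is a hypothetical helper, and the sample rows are copied from this post, not read from a live cluster. It only looks at node status and version; it says nothing about whatever else the lab's validator checks.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and succeed only if every node is exactly "Ready" (so not NotReady and not
# SchedulingDisabled) and reports the target kubelet version.
nodes_ok() {
  local target="$1"
  awk -v target="$target" '
    NF == 0 { next }                       # skip blank lines
    { seen = 1 }
    $2 != "Ready" || $5 != target {
      printf "not ok: %s %s %s\n", $1, $2, $5
      bad = 1
    }
    END { if (bad || !seen) exit 1 }       # fail on any bad node or empty input
  '
}

# Rows copied from the final output above. On a real cluster one would run:
#   kubectl get nodes --no-headers | nodes_ok v1.29.3
final_sample='controlplane Ready control-plane 94m v1.29.3
node01 Ready <none> 93m v1.29.3'

if printf '%s\n' "$final_sample" | nodes_ok v1.29.3; then
  echo "all nodes upgraded and schedulable"
fi
```

Run against the earlier output where node01 was still `Ready,SchedulingDisabled`, the same check would fail, which is the state before `k uncordon node01`.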