Q. For this question, please set the context to cluster1 by running:

kubectl config use-context cluster1

There is an existing persistent volume called orange-pv-cka13-trb. A persistent volume claim called orange-pvc-cka13-trb is created to claim storage from orange-pv-cka13-trb.

However, this PVC is stuck in a Pending state. As of now, there is no data in the volume.

Troubleshoot and fix this issue, making sure that orange-pvc-cka13-trb PVC is in Bound state.

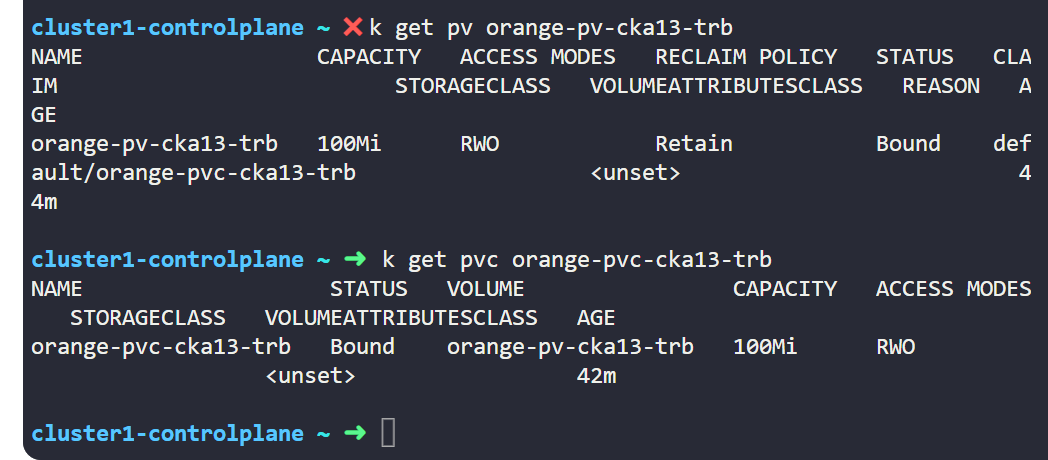

Not sure why this was marked as incorrect, even though the PV and PVC are bound. Can someone from the KodeKloud team answer my query?

Please find the screenshots attached.

1st screenshot: my terminal

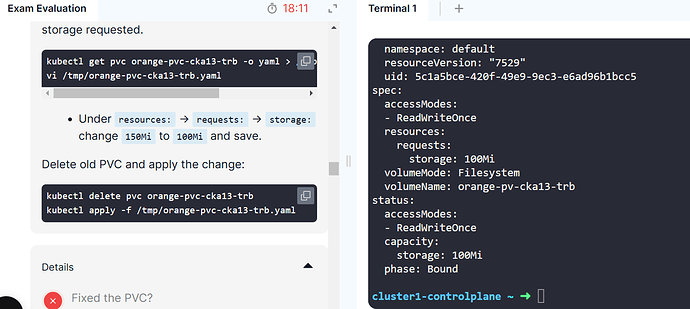

2nd screenshot: the explanation given by the exam evaluator. I also compared both. I changed the request to 100Mi, and it is still marked as incorrect.

Please take a look at this thread; it may help resolve your problem.

Hi @rob_kodekloud, I have gone through the thread and my solution still seems correct to me. This is what I understood from the thread:

- I am creating the PV and PVC in the correct context, that is cluster1

- For the PVC, I have changed the resource request from 150Mi to 100Mi

- Both the PV and PVC are in Bound state

It would be really great if you could review my solution once and let me know where I am going wrong.
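The three points above can be checked from the terminal. The following is only a sketch: `check_binding` is a hypothetical helper name, and it assumes `kubectl` is already pointed at the lab cluster and that both objects use the names from the question.

```shell
# Hypothetical helper: prints the values the three points above rely on.
# Assumes kubectl is installed and the PVC is in the current namespace.
check_binding() {
  pvc=orange-pvc-cka13-trb
  pv=orange-pv-cka13-trb

  # Which context are the commands running against? Expected: cluster1
  echo "context: $(kubectl config current-context)"

  # What the claim asks for versus what the volume offers.
  echo "pvc request: $(kubectl get pvc "$pvc" -o jsonpath='{.spec.resources.requests.storage}')"
  echo "pv capacity: $(kubectl get pv "$pv" -o jsonpath='{.spec.capacity.storage}')"

  # Binding state of both objects.
  echo "pvc phase: $(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}')"
  echo "pv phase: $(kubectl get pv "$pv" -o jsonpath='{.status.phase}')"
}
```

Running `check_binding` prints five lines. This only confirms the visible state; it does not show what the lab grader actually inspects.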

It would help if you could put the YAML from your attempt in a code block. Use the </> key to get the empty block, then type or paste the code there. Short of that I’d just be guessing from your JPEGs, really.

Currently I don’t have that YAML because the session has ended, but the PV and PVC are bound to each other, and under resources -> requests -> storage the value is 100Mi, which was 150Mi earlier. You can also see that I have used the correct PV in the PVC, under the volumeName: parameter.

I’ll still need the YAML. I can’t really tell much from what you’ve posted so far, unfortunately.

    apiVersion: v1
    items:
    - apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        annotations:
          kubectl.kubernetes.io/last-applied-configuration: |
            {"apiVersion":"v1","kind":"PersistentVolumeClaim","metadata":{"annotations":{},"creationTimestamp":"2025-02-04T05:40:28Z","finalizers":["kubernetes.io/pvc-protection"],"labels":{"type":"local"},"name":"orange-pvc-cka13-trb","namespace":"default","resourceVersion":"1618","uid":"5a037cb9-4ffe-4adf-a307-4a9e026aceea"},"spec":{"accessModes":["ReadWriteOnce"],"resources":{"requests":{"storage":"100Mi"}},"volumeMode":"Filesystem","volumeName":"orange-pv-cka13-trb"},"status":{"phase":"Pending"}}
          pv.kubernetes.io/bind-completed: "yes"
        creationTimestamp: "2025-02-04T05:41:25Z"
        finalizers:
        - kubernetes.io/pvc-protection
        labels:
          type: local
        name: orange-pvc-cka13-trb
        namespace: default
        resourceVersion: "1730"
        uid: fa035649-47e2-4ded-8aa7-19d17395ce66
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 100Mi
        volumeMode: Filesystem
        volumeName: orange-pv-cka13-trb
      status:
        accessModes:
        - ReadWriteOnce
        capacity:
          storage: 100Mi
        phase: Bound
    kind: List
    metadata:
      resourceVersion: ""

This is the yaml…will this help?

I just tried the lab. The technique I used:

- Used `k describe pvc orange-whatever` to get the cause (resource ask too big).

- Created pvc.yaml by doing `k get pvc orange-whatever -o yaml`.

- Edited the file by changing the request size to 100Mi and removing the `status:` block.

- Deleted the existing PVC and applied the yaml.

The grader passed this. Yaml was:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      annotations:
        kubectl.kubernetes.io/last-applied-configuration: |
          {"apiVersion":"v1","kind":"PersistentVolumeClaim","metadata":{"annotations":{},"labels":{"type":"local"},"name":"orange-pvc-cka13-trb","namespace":"default"},"spec":{"accessModes":["ReadWriteOnce"],"resources":{"requests":{"storage":"150Mi"}},"storageClassName":null,"volumeName":"orange-pv-cka13-trb"}}
      creationTimestamp: "2025-02-04T06:02:52Z"
      finalizers:
      - kubernetes.io/pvc-protection
      labels:
        type: local
      name: orange-pvc-cka13-trb
      namespace: default
      resourceVersion: "1754"
      uid: 40a12126-9520-4c0e-a504-38ea61c14075
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 100Mi
      volumeMode: Filesystem
      volumeName: orange-pv-cka13-trb
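For reference, the describe / export / edit / delete / apply technique listed earlier in this reply can be sketched as a shell function. This is an illustration rather than the official lab solution: `recreate_pvc` is a hypothetical name, `pvc.yaml` is a scratch file in the current directory, and the edit step is left to whatever `$EDITOR` is set to.

```shell
# Sketch of the delete-and-recreate approach. Assumes kubectl already
# targets cluster1 and the PVC is in the current (default) namespace.
set -eu

PVC=orange-pvc-cka13-trb
PV=orange-pv-cka13-trb

recreate_pvc() {
  # 1. Find out why the claim is Pending (check the Events section).
  kubectl describe pvc "$PVC"

  # 2. Look at what the volume actually offers, to compare with the request.
  kubectl get pv "$PV" -o jsonpath='{.spec.capacity.storage}'

  # 3. Save the current claim so it can be edited.
  kubectl get pvc "$PVC" -o yaml > pvc.yaml

  # 4. Manual step: set spec.resources.requests.storage to 100Mi
  #    and remove the whole status: block, then save and quit.
  "${EDITOR:-vi}" pvc.yaml

  # 5. The request size was changed by recreating the claim, so delete
  #    the old one and apply the edited file.
  kubectl delete pvc "$PVC"
  kubectl apply -f pvc.yaml

  # 6. Confirm the STATUS column now reads Bound.
  kubectl get pvc "$PVC"
}
```

Calling `recreate_pvc` runs the steps in order and stops at the first failing command because of `set -e`.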