Maiky

November 18, 2024, 4:56am

#1

Hey, I’m playing your mock exams for CKA, but I have problems with questions 7 and 9 of the

Question 7:

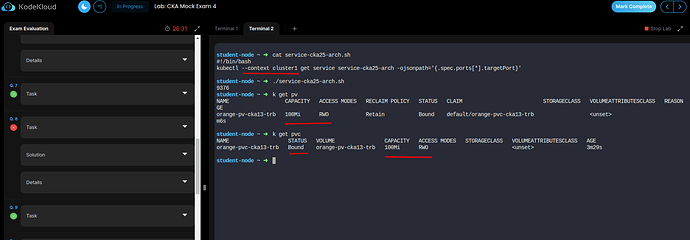

Update `service-cka25-arch.sh` script:

student-node ~ ➜ vi service-cka25-arch.sh

Add below command in it:

kubectl --context cluster1 get service service-cka25-arch -o jsonpath='{.spec.ports[0].targetPort}'

My answer was the following, which is basically the same but was marked as wrong:

student-node ~ ➜ cat /root/service-cka25-arch.sh

#!/bin/bash

kubectl get svc service-cka25-arch -o jsonpath='{.spec.ports[0].targetPort}'

Question 9:

student-node ~ ➜ kubectl describe pv orange-pv-cka13-trb

Name: orange-pv-cka13-trb

Labels: type=local

Annotations: pv.kubernetes.io/bound-by-controller: yes

Finalizers: [kubernetes.io/pv-protection]

StorageClass:

Status: Bound

Claim: default/orange-pvc-cka13-trb

Reclaim Policy: Retain

Access Modes: RWO

VolumeMode: Filesystem

Capacity: 100Mi

Node Affinity: <none>

Message:

Source:

Type: HostPath (bare host directory volume)

Path: /mnt/orange-pv-cka13-trb

HostPathType:

Events: <none>

student-node ~ ➜ kubectl describe pvc orange-pvc-cka13-trb

Name: orange-pvc-cka13-trb

Namespace: default

StorageClass:

Status: Bound

Volume: orange-pv-cka13-trb

Labels: type=local

Annotations: pv.kubernetes.io/bind-completed: yes

Finalizers: [kubernetes.io/pvc-protection]

Capacity: 100Mi

Access Modes: RWO

VolumeMode: Filesystem

Used By: <none>

Events: <none>

Hi @Maiky

Regarding your query on Q7, the script you’ve posted does not define the context. The script should look as in the attached image.
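
To make the difference concrete, here is a minimal sketch of the script with the context passed explicitly. The `kubectl` command itself is the one from the solution quoted above; the `KUBECTL` variable and the `get_target_port` function are hypothetical additions that only exist so the command line can be previewed without a cluster, and the lab presumably needs just the `kubectl` line.

```shell
#!/bin/bash
# Sketch of service-cka25-arch.sh with the context passed explicitly.
# KUBECTL is a hypothetical override, not part of the lab: setting
# KUBECTL=echo previews the command line without touching a cluster.
KUBECTL="${KUBECTL:-kubectl}"

# Print the targetPort of the service's first port, always from cluster1,
# regardless of which context is currently active on the node.
get_target_port() {
  "$KUBECTL" --context cluster1 get service service-cka25-arch \
    -o jsonpath='{.spec.ports[0].targetPort}'
}

# Only run when the chosen binary is actually available.
if command -v "$KUBECTL" >/dev/null 2>&1; then
  get_target_port
fi
```

Without `--context`, the command runs against whatever context is active when the script is executed, which may not be cluster1.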

With regard to Q9, the describe output of the PV and PVC looks correct: the PVC is in a Bound state, which should result in a pass.
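
For a quicker check than reading the full describe output, the PVC phase can be queried directly. This is only a sketch: `pvc_phase`, `is_bound`, and the `KUBECTL` override are hypothetical helper names, and it runs against the currently active context.

```shell
#!/bin/bash
# Sketch: confirm that a PVC has reached the Bound phase.
# KUBECTL is a hypothetical override used only for dry runs and testing.
KUBECTL="${KUBECTL:-kubectl}"

# Print the phase of the named PVC (for example Pending or Bound).
pvc_phase() {
  "$KUBECTL" get pvc "$1" -o jsonpath='{.status.phase}'
}

# Succeed only when the named PVC reports phase Bound.
is_bound() {
  [ "$(pvc_phase "$1")" = "Bound" ]
}

# Example call, skipped when the binary is not installed.
if command -v "$KUBECTL" >/dev/null 2>&1; then
  is_bound orange-pvc-cka13-trb && echo "orange-pvc-cka13-trb is Bound"
fi
```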

Regards.

Maiky

November 19, 2024, 6:42am

#3

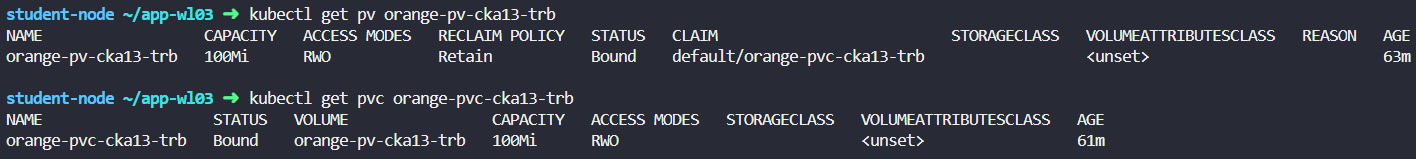

Sure, here is the YAML of the PV and the PVC. I tried again and it failed again.

PV:

student-node ~/app-wl03 ➜ kubectl get pv orange-pv-cka13-trb -o yaml

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      annotations:
        pv.kubernetes.io/bound-by-controller: "yes"
      creationTimestamp: "2024-11-19T05:36:10Z"
      finalizers:
      - kubernetes.io/pv-protection
      labels:
        type: local
      name: orange-pv-cka13-trb
      resourceVersion: "6414"
      uid: ec1d4152-2859-442a-b736-53a0712ccae0
    spec:
      accessModes:
      - ReadWriteOnce
      capacity:
        storage: 100Mi
      claimRef:
        apiVersion: v1
        kind: PersistentVolumeClaim
        name: orange-pvc-cka13-trb
        namespace: default
        resourceVersion: "6412"
        uid: c421d520-a306-47be-80b3-f6051256fa0e
      hostPath:
        path: /mnt/orange-pv-cka13-trb
        type: ""
      persistentVolumeReclaimPolicy: Retain
      volumeMode: Filesystem
    status:
      lastPhaseTransitionTime: "2024-11-19T05:38:25Z"
      phase: Bound

PVC:

student-node ~/app-wl03 ✖ kubectl get pvc orange-pvc-cka13-trb -o yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      annotations:
        kubectl.kubernetes.io/last-applied-configuration: |
          {"apiVersion":"v1","kind":"PersistentVolumeClaim","metadata":{"annotations":{},"creationTimestamp":"2024-11-19T05:36:11Z","finalizers":["kubernetes.io/pvc-protection"],"labels":{"type":"local"},"name":"orange-pvc-cka13-trb","namespace":"default","resourceVersion":"6196","uid":"0bd90be9-1b2e-40e3-b20b-0c8b4775c7da"},"spec":{"accessModes":["ReadWriteOnce"],"resources":{"requests":{"storage":"100Mi"}},"volumeMode":"Filesystem","volumeName":"orange-pv-cka13-trb"},"status":{"phase":"Pending"}}
        pv.kubernetes.io/bind-completed: "yes"
      creationTimestamp: "2024-11-19T05:38:25Z"
      finalizers:
      - kubernetes.io/pvc-protection
      labels:
        type: local
      name: orange-pvc-cka13-trb
      namespace: default
      resourceVersion: "6416"
      uid: c421d520-a306-47be-80b3-f6051256fa0e
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 100Mi
      volumeMode: Filesystem
      volumeName: orange-pv-cka13-trb
    status:
      accessModes:
      - ReadWriteOnce
      capacity:
        storage: 100Mi
      phase: Bound

As you can see, they’re bound:

Please let me know if I’m wrong; I’d appreciate it.

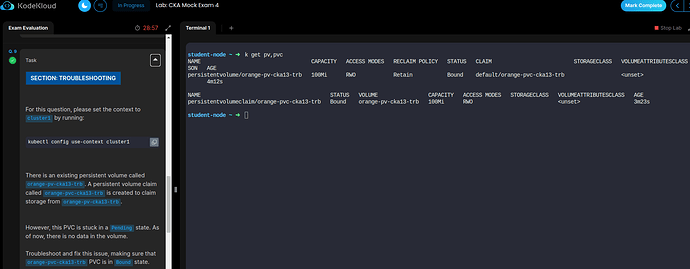

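This looks correct to me and should result in a pass. The expected fix is to change the PVC's storage request from 150Mi to a value equal to or less than 100Mi.

Can you please try it afresh? Make sure you are using the cluster1 context first: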

kubectl config use-context cluster1
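
If it is unclear which context is active before retrying, a small guard like the one below may help. It is a sketch only: `ensure_context` and the `KUBECTL` override are hypothetical names, while `kubectl config current-context` and `kubectl config use-context` are the standard subcommands.

```shell
#!/bin/bash
# Sketch: switch to the wanted context only if it is not already active.
# KUBECTL is a hypothetical override used only for dry runs and testing.
KUBECTL="${KUBECTL:-kubectl}"

ensure_context() {
  local want="$1"
  local current
  # Ask kubectl which context is currently selected.
  current="$("$KUBECTL" config current-context 2>/dev/null)"
  if [ "$current" != "$want" ]; then
    "$KUBECTL" config use-context "$want"
  fi
}

# Example call, skipped when the binary is not installed.
if command -v "$KUBECTL" >/dev/null 2>&1; then
  ensure_context cluster1
fi
```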

Regards.