main.tf:

    provider "aws" {
      region = "us-east-1"
    }

Create S3 bucket

    resource "aws_s3_bucket" "firehose_bucket" {
      bucket = var.KKE_S3_BUCKET_NAME
    }

Create IAM role for Firehose

    resource "aws_iam_role" "firehose_role" {
      name = var.KKE_FIREHOSE_ROLE_NAME

      assume_role_policy = jsonencode({
        Version = "2012-10-17"
        Statement = [
          {
            Effect = "Allow"
            Action = "sts:AssumeRole"
            Principal = {
              Service = "firehose.amazonaws.com"
            }
          }
        ]
      })
    }

Attach IAM policy to allow Firehose to write to S3

    resource "aws_iam_role_policy" "firehose_policy" {
      name = "firehose-s3-policy"
      role = aws_iam_role.firehose_role.id

      policy = jsonencode({
        Version = "2012-10-17"
        Statement = [
          {
            Effect = "Allow"
            Action = [
              "s3:PutObject",
              "s3:PutObjectAcl",
              "s3:ListBucket"
            ]
            Resource = [
              aws_s3_bucket.firehose_bucket.arn,
              "${aws_s3_bucket.firehose_bucket.arn}/*"
            ]
          }
        ]
      })
    }

Create Kinesis Firehose Delivery Stream

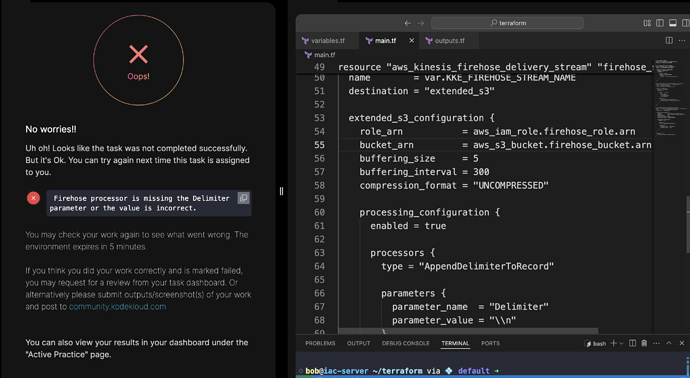

    resource "aws_kinesis_firehose_delivery_stream" "firehose_stream" {
      name        = var.KKE_FIREHOSE_STREAM_NAME
      destination = "extended_s3"

      extended_s3_configuration {
        role_arn           = aws_iam_role.firehose_role.arn
        bucket_arn         = aws_s3_bucket.firehose_bucket.arn
        buffering_size     = 5
        buffering_interval = 300
        compression_format = "UNCOMPRESSED"

        processing_configuration {
          enabled = true

          processors {
            type = "AppendDelimiterToRecord"

            parameters {
              parameter_name  = "Delimiter"
              parameter_value = "\\n"
            }
          }
        }
      }

      depends_on = [
        aws_iam_role_policy.firehose_policy
      ]
    }
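
The checker reports a missing Delimiter, so it is worth confirming which string the `parameter_value = "\\n"` line actually produces. In HCL, `"\\n"` is an escaped backslash followed by `n`, which makes a two-character string. `"\n"` is a single newline character. The sketch below is a standalone illustration and is not part of the task files. The local and output names are hypothetical. You can apply it in an empty working directory after `terraform init`, or evaluate `length("\\n")` directly in `terraform console`, to see the difference.

```hcl
# Standalone illustration (hypothetical names, not part of the task files):
# compares the two ways a newline delimiter can be written in HCL.
locals {
  escaped_delimiter = "\\n" # backslash followed by "n": a two-character string
  newline_delimiter = "\n"  # a single newline character
}

output "delimiter_lengths" {
  value = {
    escaped = length(local.escaped_delimiter) # 2
    newline = length(local.newline_delimiter) # 1
  }
}
```

The task text quoted here does not say which form the lab's checker expects. This sketch only narrows down what is being sent, not what should be sent.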

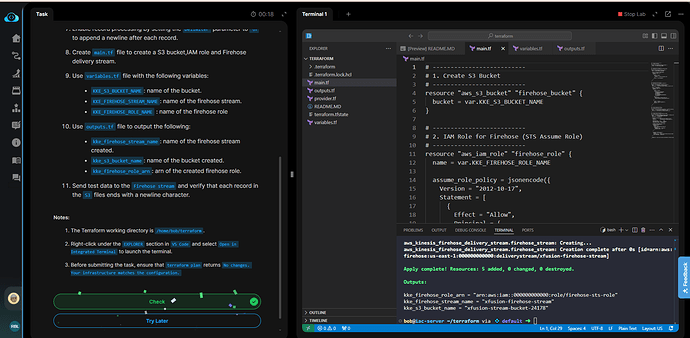

variables.tf:

    variable "KKE_S3_BUCKET_NAME" {
      description = "Name of the S3 bucket for Firehose delivery"
      type        = string
      default     = "devops-stream-bucket-12310"
    }

    variable "KKE_FIREHOSE_STREAM_NAME" {
      description = "Name of the Firehose delivery stream"
      type        = string
      default     = "devops-firehose-stream"
    }

    variable "KKE_FIREHOSE_ROLE_NAME" {
      description = "Name of the IAM role for Firehose"
      type        = string
      default     = "firehose-sts-role"
    }

@raymond.baoly Only the main.tf file really needs to be examined here, because the error on the page says "Firehose processor is missing the Delimiter". As I mentioned, the outputs file works fine and I get the expected output, but the task still fails with "Firehose processor is missing the Delimiter".
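
A temporary output placed next to the existing ones can show exactly what Terraform recorded for the processor. This is a hypothetical debugging aid and is not part of the task. The output name is made up. The `[0]` indexes assume the single `extended_s3_configuration`, `processing_configuration`, and `processors` blocks shown in main.tf above.

```hcl
# Hypothetical debugging output (not part of the task files): exposes the
# processor parameters Terraform stored in state for the delivery stream.
output "firehose_processor_parameters" {
  value = aws_kinesis_firehose_delivery_stream.firehose_stream.extended_s3_configuration[0].processing_configuration[0].processors[0].parameters
}
```

After `terraform apply`, `terraform output firehose_processor_parameters` should list the `Delimiter` entry together with its `parameter_value`. You can then compare that value with what the checker looks for.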