Thiru:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 41h v1.18.1

worker2 Ready <none> 41h v1.18.1

For ref:

[root@master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx 1/1 Running 0 14h 192.168.1.10 worker2 <none> <none>

num1-d9ddf646c-k2vft 1/1 Running 0 39h 192.168.1.4 worker2 <none> <none>

From the master node, I can't ping the pod:

[root@master ~]# ping 192.168.1.10

PING 192.168.1.10 (192.168.1.10) 56(84) bytes of data.

^C

--- 192.168.1.10 ping statistics ---

20 packets transmitted, 0 received, 100% packet loss, time 21247ms

The pod is placed on worker2, so naturally it can be pinged from worker2:

[root@worker2 ~]# ping 192.168.1.10

PING 192.168.1.10 (192.168.1.10) 56(84) bytes of data.

64 bytes from 192.168.1.10: icmp_seq=1 ttl=64 time=0.914 ms

64 bytes from 192.168.1.10: icmp_seq=2 ttl=64 time=0.112 ms

But that doesn't satisfy the k8s rule that every pod must be reachable from every machine. Is my statement correct?

The CNI pods are running fine:

[root@master ~]# kubectl get pods -n kube-system -o wide| grep flannel

kube-flannel-ds-47gv8 1/1 Running 0 40h 192.168.102.50 master <none> <none>

kube-flannel-ds-4d8k7 1/1 Running 0 40h 192.168.102.52 worker2 <none> <none>

Guys, can you help me out here? Why can't the nginx pod be reached from the master machine?

Alistair Mackay:

Hi,

What they mean is “every pod can be reachable by every other pod within the same cluster regardless of which node the pod is on”

In a properly set up production cluster you should not reach the pod network directly from the host machines. This is what the concept of services and ingress is for.
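
To check the pod-to-pod rule stated above, the ping can be run from inside another pod rather than from the host. The following is a minimal sketch, assuming the `busybox` image can be pulled. The pod name `pingtest`, the helper `ping_from_pod`, and the `KUBECTL` override are illustrative names, not something from this thread.

```shell
#!/bin/sh
# Sketch: test pod-to-pod reachability from a throwaway pod instead of the host.
# Assumptions: busybox is pullable; "pingtest" is a made-up pod name.
# KUBECTL can be overridden, e.g. KUBECTL=echo prints the command instead of running it.
KUBECTL="${KUBECTL:-kubectl}"

ping_from_pod() {
  target_ip="$1"   # pod IP taken from `kubectl get pods -o wide`
  # --rm deletes the pod when the command exits; --restart=Never creates a bare pod.
  "$KUBECTL" run pingtest --rm -i --restart=Never --image=busybox -- \
    ping -c 3 "$target_ip"
}

# Example, using the nginx pod IP from the output earlier in the thread:
# ping_from_pod 192.168.1.10
```

With only one schedulable worker, the test pod will most likely land on worker2, the same node as nginx. In that case a successful ping only confirms same-node connectivity.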

Thiru:

Thanks @Alistair Mackay for clarifying the concept.

Thiru:

@Alistair Mackay To access the pod network from the host, I need to create a ClusterIP service. So do I now need to create a ClusterIP svc for the nginx pod?

Alistair Mackay:

You can’t make a bridge to the entire pod network

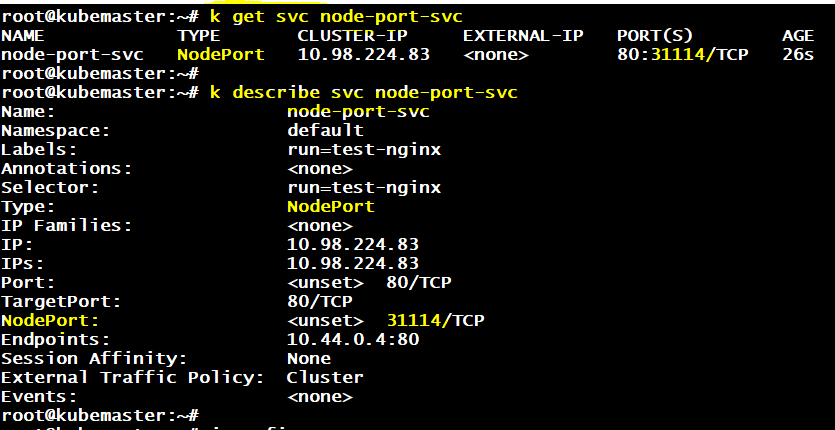

If you want to access the pod from your machine, the simplest way is to create a node port service. Here I'm assuming that nginx is listening on port 80.

kubectl expose pod nginx --name=nginx-svc --type=NodePort --port=80 --target-port=80 --dry-run=client -o yaml > svc.yaml

vi svc.yaml

# Edit the yaml file and add nodePort: 30080 alongside port and target port

kubectl create -f svc.yaml

Now your service should be running, and you should be able to connect to it at <http://worker2:30080>
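
For reference, after adding `nodePort: 30080` the generated `svc.yaml` might look roughly like the file written below. This is a sketch, not the exact output of `kubectl expose`. The selector `run: nginx` is an assumption (it is the default label when a pod is created with `kubectl run nginx`), so check the real labels with `kubectl get pod nginx --show-labels` and adjust.

```shell
#!/bin/sh
# Sketch of svc.yaml after the manual edit.
# Assumption: the nginx pod carries the label run=nginx; adjust the selector if not.
cat > svc.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  type: NodePort
  selector:
    run: nginx
  ports:
  - port: 80          # port of the service inside the cluster
    targetPort: 80    # port nginx listens on in the container
    nodePort: 30080   # port opened on the nodes
    protocol: TCP
EOF

# Then create the service from the file:
# kubectl create -f svc.yaml
```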

Alistair Mackay:

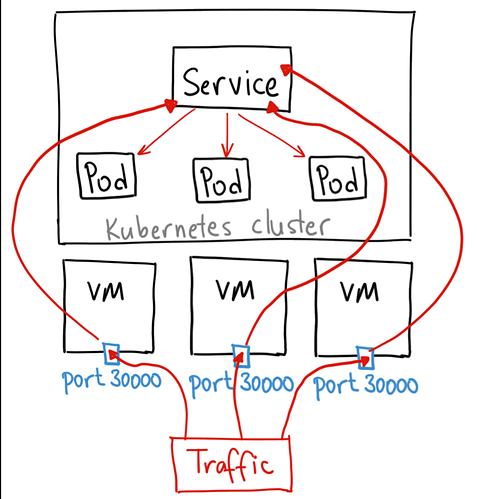

Note that node ports must be between 30000 and 32767 (the default range)

Node port should not be used in production scenarios
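
As a quick illustration of the port range rule, a value can be checked before it goes into the manifest. This sketch assumes the Kubernetes default range of 30000 to 32767. A cluster admin can change it with the kube-apiserver flag `--service-node-port-range`, so the bounds may differ on a given cluster. The helper name `is_valid_node_port` is made up for this example.

```shell
#!/bin/sh
# Succeeds if the given port lies in the default NodePort range.
# Assumption: the cluster uses the default 30000-32767 range
# (configurable via kube-apiserver --service-node-port-range).
is_valid_node_port() {
  port="$1"
  [ "$port" -ge 30000 ] && [ "$port" -le 32767 ]
}

is_valid_node_port 30080 && echo "30080 is in range"
is_valid_node_port 80 || echo "80 is out of range"
```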

Thiru:

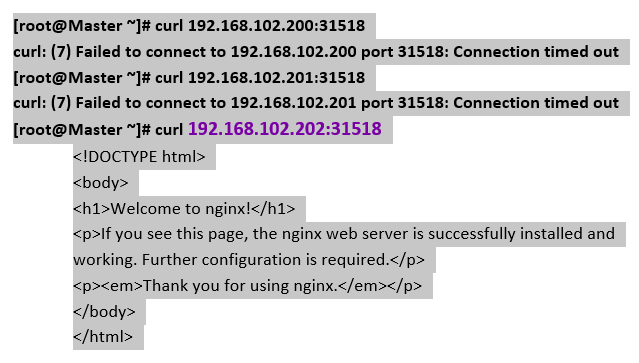

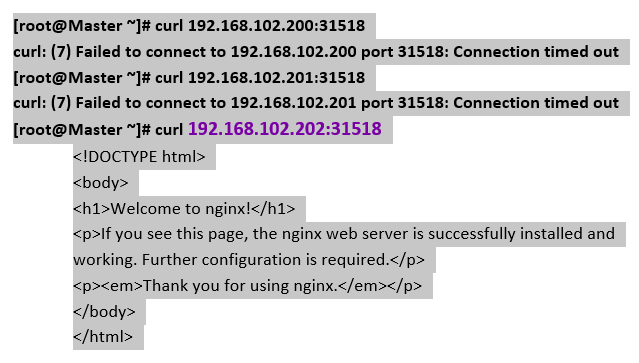

@Alistair Mackay I already did the above, and I can reach the pod from outside only by using worker2ip:nodeport, not masterip:nodeport.

unnivkn:

Hi @Thiru Do you have any pod instance running on the master node? The master node is not meant for handling workloads. FYR:

Thiru:

I don't have any pods on the master node; it has not been untainted, @unnivkn.

If an application, for example nginx, runs inside a container on workernode2, then to reach it from outside I have to use a NodePort svc.

1. That means I can access the nginx container from outside only by using workernode2:nodeport.

Is that correct?

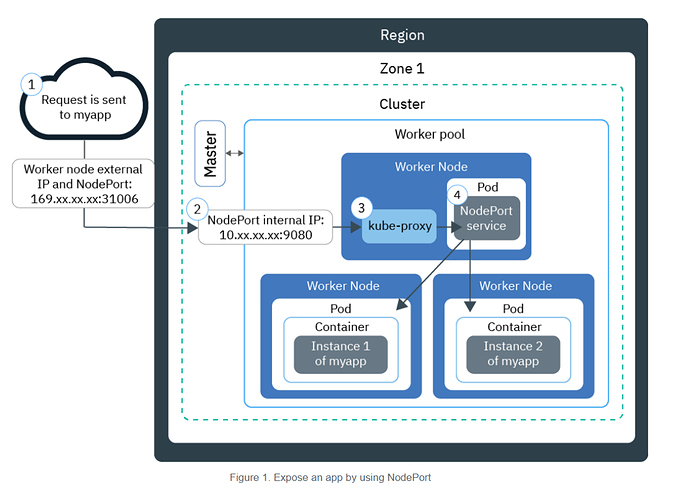

2. But I heard somewhere that I could access a particular application via any node's IP together with the nodePort that belongs to that application.

Can you clear up my misunderstanding on the above, @unnivkn?

Thiru:

@unnivkn kindly go through the above and clarify this for me.

Alistair Mackay:

The node port service once created successfully should be accessible at all workers in the cluster.

It might not be exposed at master/controlplane nodes. That would be for cluster security.

You should only use NodePort for testing. For production use, it should be ingress.

https://kubernetes.io/docs/concepts/services-networking/ingress/
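
One way to see which nodes actually answer on the NodePort is to probe the same port on every node IP. The sketch below assumes the node IPs are the ones listed for the flannel pods earlier in the thread (192.168.102.50 for master, 192.168.102.52 for worker2) and that the nodePort is 30080. The helper `probe_node_port` and the `CURL` override are illustrative names.

```shell
#!/bin/sh
# Sketch: probe one NodePort on several node IPs and report which ones answer.
# Assumptions: node IPs and port are taken from this thread; adjust for your cluster.
# CURL can be overridden (for example in a test) without touching the function.
CURL="${CURL:-curl}"

probe_node_port() {
  node_port="$1"; shift
  for node_ip in "$@"; do
    # -s: quiet, -o /dev/null: discard the body, --max-time 5: give up after 5 seconds
    if "$CURL" -s -o /dev/null --max-time 5 "http://${node_ip}:${node_port}"; then
      echo "${node_ip}:${node_port} reachable"
    else
      echo "${node_ip}:${node_port} NOT reachable"
    fi
  done
}

# Example:
# probe_node_port 30080 192.168.102.50 192.168.102.52
```

If worker2 answers and master does not, that would be consistent with the failed ping from master to the pod IP earlier in the thread, since a node has to forward NodePort traffic on to the pod IP.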

unnivkn:

Hi @Thiru your question is well explained here. Please have a look.

Thiru:

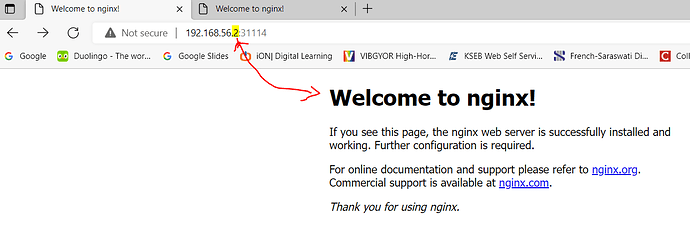

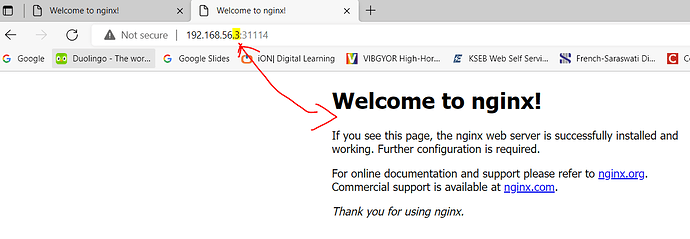

I can only access the container by using the IP of the worker node it belongs to, not by the control node IP or any other, even though I exposed it through NodePort.

Thiru:

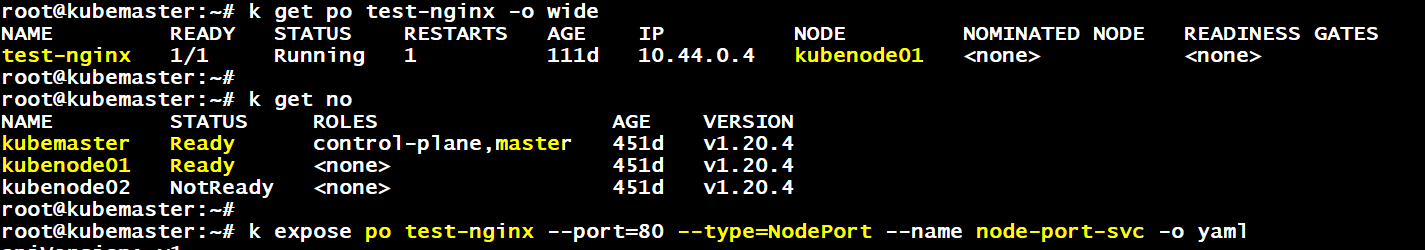

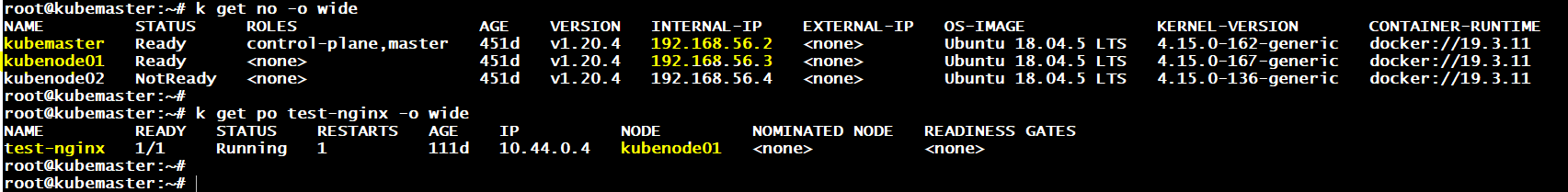

Kindly go through the document I attached and make this topic clear to me. @unnivkn @Alistair Mackay

Thiru:

I think that, regardless of the node IP, the nodePort needs to bring the data from the cluster IP and expose it outside.

Thiru:

I mean, if the service belongs to a particular pod, we can reach the pod locally with clusterip:port. The nodePort and the clusterIP both belong to the service object. So if I use the nodePort with the IP of any one of the cluster nodes, the nodePort needs to contact the cluster IP, and the cluster IP will route to the container in the pod. So the node IP is not the issue here; the nodePort plays the major role. Is my understanding correct, @unnivkn? If it is, why am I facing the issue above?

Thiru:

Kindly share your thoughts, @unnivkn.

unnivkn:

Did you try this from a browser?