I’m trying to deploy a cluster using Vagrant and VirtualBox v6.1.34. My VMs get deployed, but I’m not able to SSH into them. I keep getting “connect to host vagrant port 22: Connection refused”. Any help?

Strange, I can’t even get into the VM.

Can you share your vagrantfile?

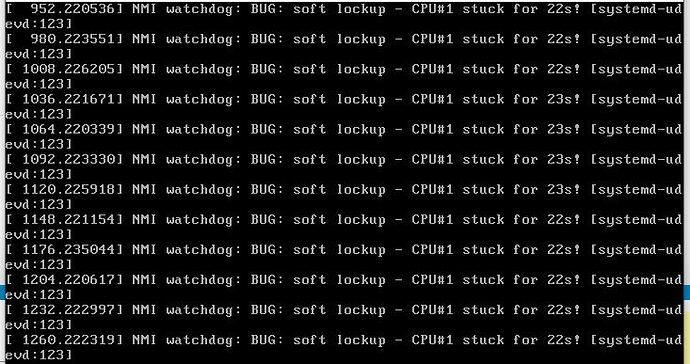

I’m practicing how to write simple Vagrantfiles with this while comparing it with the Vagrantfile for the cluster. For some reason even a single VM doesn’t allow SSH. Even when I try to start the VM from VirtualBox directly, it just freezes.

    # -*- mode: ruby -*-
    # vi: set ft=ruby :

    # All Vagrant configuration is done below. The "2" in Vagrant.configure
    # configures the configuration version (we support older styles for
    # backwards compatibility). Please don't change it unless you know what
    # you're doing.
    Vagrant.configure("2") do |config|
      config.vm.define "web-server"
      config.vm.hostname = "web-server"
      config.vm.box = "bento/ubuntu-16.04"

      config.vm.network "forwarded_port", guest: 80, host: 8080, host_ip: "127.0.0.1"

      # Create a private network, which allows host-only access to the machine
      # using a specific IP.
      config.vm.network "private_network", ip: "192.168.33.10"

      # Create a public network, which generally matched to bridged network.
      # Bridged networks make the machine appear as another physical device on
      # your network.
      config.vm.network "public_network"

      # Share an additional folder to the guest VM. The first argument is
      # the path on the host to the actual folder. The second argument is
      # the path on the guest to mount the folder. And the optional third
      # argument is a set of non-required options.
      config.vm.synced_folder "./html/", "/var/www/html/"

      # Provider-specific configuration so you can fine-tune various
      # backing providers for Vagrant. These expose provider-specific options.
      # Example for VirtualBox:
      config.vm.provider "virtualbox" do |vb|
        vb.gui = false
        vb.memory = "2048"
      end

      # View the documentation for the provider you are using for more
      # information on available options.

      # Enable provisioning with a shell script. Additional provisioners such as
      # Ansible, Chef, Docker, Puppet and Salt are also available. Please see the
      # documentation for more information about their specific syntax and use.
      config.vm.provision "shell", inline: <<-SHELL
        apt-get update
        apt-get install -y apache2
      SHELL

      config.vm.provision "shell", inline: <<-SHELL
        echo "Hello from the Vagrantfile"
      SHELL
    end

Hi @cambell79

Can you please try the Vagrantfile that is available in our GitHub repo?

GitHub - kodekloudhub/certified-kubernetes-administrator-course: Certified Kubernetes Administrator - CKA Course

If you are sharing code, please use this format.

Start with a triple backtick (```), then paste the code, and close with a triple backtick again at the end.

How do I go about creating this cluster on a bare-metal VMware ESXi server?

You should create the virtual machines and follow these instructions: Creating a cluster with kubeadm | Kubernetes

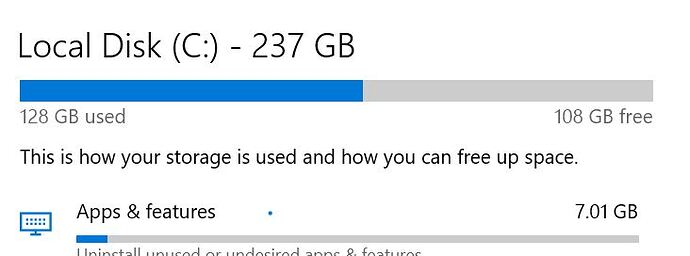

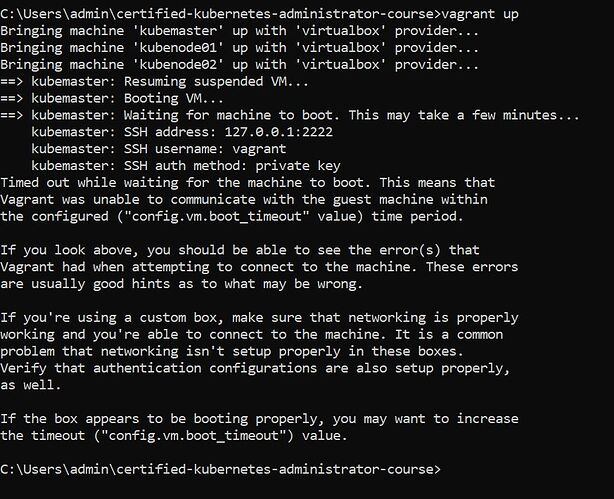

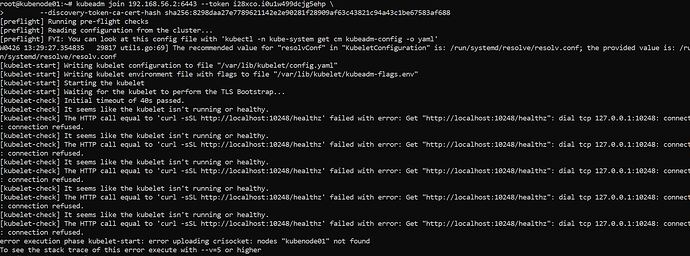

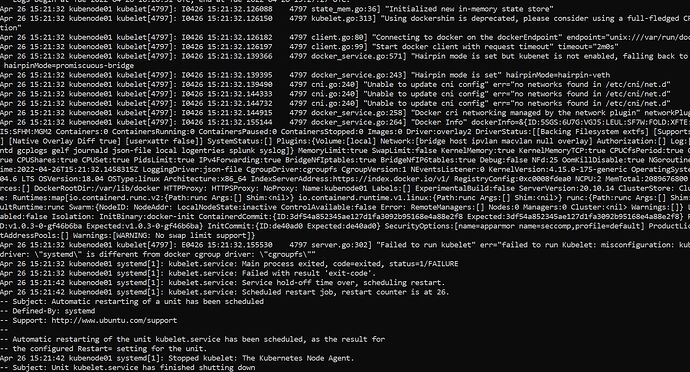

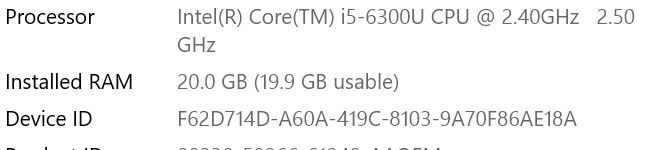

I did clone this repo and I’m still unable to deploy the VMs. I can see kubemaster in a running state in VirtualBox, and I get this error. I’m following the instructions on GitHub and my system seems to meet the requirements. Any idea what I could be doing wrong?

Thanks

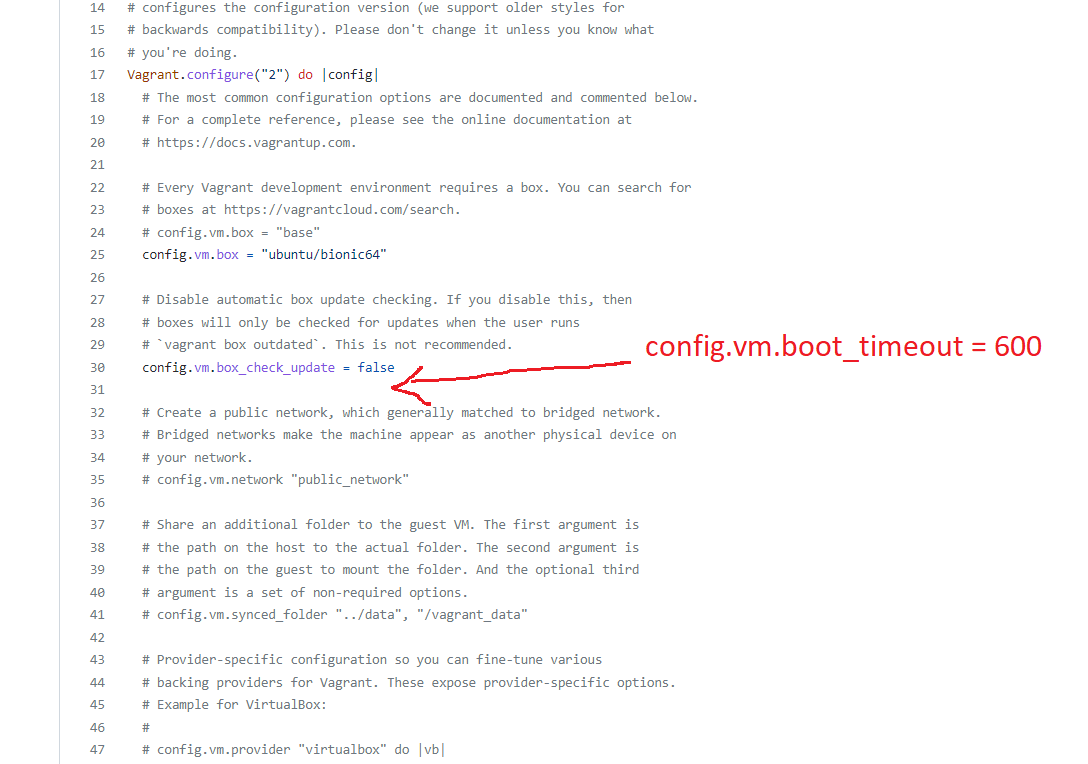

Hi @cambell79,

Destroy the existing VMs by running the command:

vagrant destroy

Then add the field as shown in the screenshot and run this command again:

vagrant up

The default is 300 seconds, so maybe it needs more time to boot up.

Reference:

config.vm - Vagrantfile | Vagrant by HashiCorp (vagrantup.com)

Regards,

KodeKloud Support

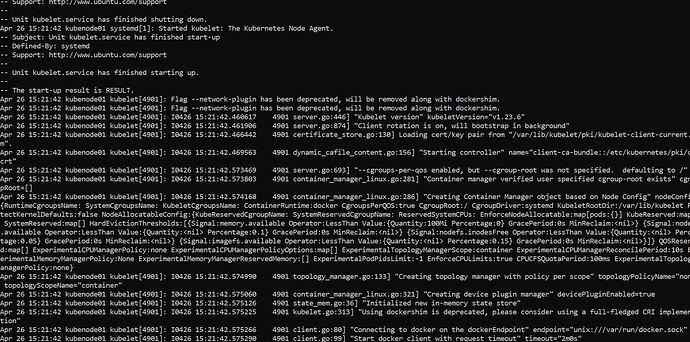
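A note on the field in the screenshot: it is not reproduced in text here, but the only `config.vm` setting with a 300-second default is `config.vm.boot_timeout`, so that is assumed to be the one meant. Under that assumption, a rough sketch for checking that the line actually made it into the Vagrantfile (uncommented) before rebuilding could look like this. The function name `has_boot_timeout` is made up for illustration.

```shell
#!/bin/sh
# Succeeds if the given Vagrantfile sets boot_timeout on an uncommented line.
# Assumption: boot_timeout is the field shown in the screenshot.
has_boot_timeout() {
  [ -f "$1" ] && grep -Eq '^[[:space:]]*[A-Za-z_.]*boot_timeout[[:space:]]*=' "$1"
}

vagrantfile="${1:-Vagrantfile}"
if has_boot_timeout "$vagrantfile"; then
  echo "boot_timeout is set in $vagrantfile"
else
  echo "boot_timeout is not set in $vagrantfile; the 300-second default applies"
fi

# Example line to add inside Vagrant.configure (600 is only an example value):
#   config.vm.boot_timeout = 600
# Then rebuild:
#   vagrant destroy
#   vagrant up
```

If the check reports the default, the new line is probably missing, commented out, or in a different file than the one Vagrant is reading.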

Thanks, I uninstalled Docker Desktop and it worked, but now I’m unable to get the 2 nodes to join the cluster.

- Provision the VMs from the Vagrantfile

- Install one of the container runtimes on all VMs

- Then install kubeadm, kubelet and kubectl on all VMs

- Then use the kubeadm tool to create a cluster on the controlplane node

- Then use a token to join/add the worker nodes.
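When the workers won’t join, it can help to first confirm that the steps above really were completed on every VM. Here is a minimal sketch of a per-node check, assuming Docker Engine is the chosen container runtime and that the tools are installed on the PATH. The helper name `missing_tools` is made up for this example.

```shell
#!/bin/sh
# Print each of the given commands that is NOT found on this node's PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Tools the steps above expect on every VM ("docker" assumes Docker Engine
# is the container runtime in use).
missing=$(missing_tools docker kubeadm kubelet kubectl)
if [ -n "$missing" ]; then
  echo "Missing on $(uname -n):"
  echo "$missing"
else
  echo "All expected tools found on $(uname -n)"
fi

# Once every node passes, the cluster steps are run by hand as root:
#   controlplane:  kubeadm init   (plus whatever flags the course uses)
#   controlplane:  kubeadm token create --print-join-command
#   each worker:   run the "kubeadm join ..." line printed by the command above
```

Run it on the controlplane and on each worker. It only checks that the binaries exist, not that the services are healthy, so the kubelet logs are still the next place to look.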

The container runtime is Docker Engine, right? I did install that. I followed the video step by step.

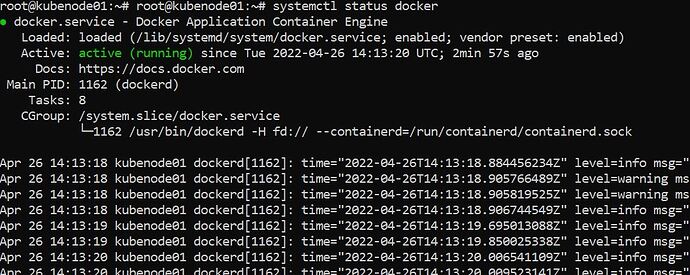

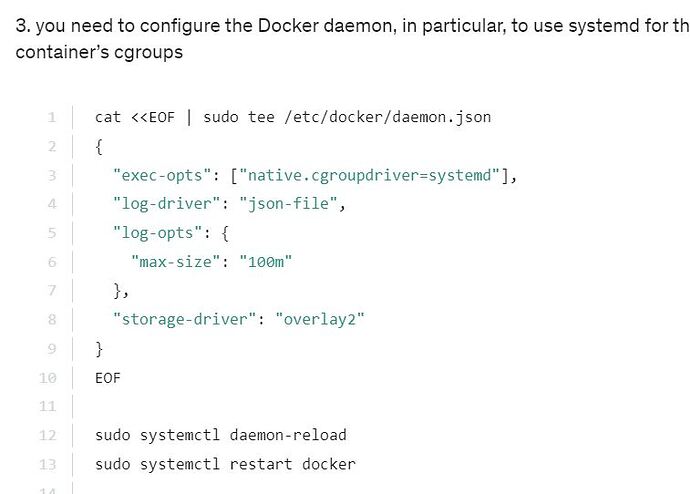

I have the Docker service running on my nodes and I also configured the Docker daemon. I’ve been on this for hours now and just don’t seem to get it right.

Please share the kubelet logs:

journalctl -xe -u kubelet

Can you please share the output of the below command?

cat /etc/docker/daemon.json
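One likely reason for asking about this file (an assumption, the thread does not say so) is a cgroup driver mismatch: recent kubeadm versions default the kubelet to the `systemd` cgroup driver, while Docker often defaults to `cgroupfs` unless `daemon.json` says otherwise. A small sketch for checking that on a node follows. The function name `uses_systemd_cgroup` is hypothetical.

```shell
#!/bin/sh
# Succeeds if the given daemon.json asks Docker for the systemd cgroup driver.
uses_systemd_cgroup() {
  [ -f "$1" ] && grep -q 'native\.cgroupdriver=systemd' "$1"
}

daemon_json="${1:-/etc/docker/daemon.json}"
if uses_systemd_cgroup "$daemon_json"; then
  echo "$daemon_json sets native.cgroupdriver=systemd"
else
  echo "$daemon_json is missing or does not set native.cgroupdriver=systemd"
fi

# To see which driver the running daemon actually uses (needs Docker):
#   docker info --format '{{.CgroupDriver}}'
# After editing daemon.json, restart Docker so the change takes effect:
#   sudo systemctl restart docker
```

The setting it looks for is the `"exec-opts": ["native.cgroupdriver=systemd"]` entry. If the kubelet logs complain about a cgroup driver mismatch, this is the first thing to compare.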