Hi,

I am deploying a Kubernetes cluster on Proxmox VMs with the following details:

Total nodes: 3 x master, 1 x worker

M1: 172.16.100.2

M2: 172.16.100.3

M3: 172.16.100.4

VIP: 172.16.100.5

W1: 172.16.100.6

Here is what I did:

export VIP=172.16.100.5

export CIDR=27

export INTERFACE=eth0

export VIP_SUBNET=27

docker run --rm --net=host ghcr.io/kube-vip/kube-vip:v1.0.3 manifest pod --interface $INTERFACE --vip $VIP --vipSubnet $VIP_SUBNET --controlplane --arp > /etc/kubernetes/manifests/kube-vip.yaml
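One thing worth ruling out at this step: an unset or empty shell variable expands to nothing when it is unquoted, so the flag that follows it can end up being read as the previous flag's value. A minimal guard, assuming a POSIX shell, could look like the sketch below. `require_vars` is a hypothetical helper, not part of kube-vip or Docker.

```shell
#!/bin/sh
# Hypothetical helper (not part of kube-vip): return non-zero if any
# of the named variables is unset or empty in the current shell.
require_vars() {
  for _name in "$@"; do
    # Indirect lookup: copy the value of the variable named in $_name.
    eval "_value=\${$_name:-}"
    if [ -z "$_value" ]; then
      echo "missing variable: $_name" >&2
      return 1
    fi
  done
  return 0
}

# Usage sketch: only generate the manifest when every variable is set,
# and quote each expansion so an empty value cannot shift the flags.
# require_vars VIP INTERFACE VIP_SUBNET && \
#   docker run --rm --net=host ghcr.io/kube-vip/kube-vip:v1.0.3 manifest pod \
#     --interface "$INTERFACE" --vip "$VIP" --vipSubnet "$VIP_SUBNET" \
#     --controlplane --arp > /etc/kubernetes/manifests/kube-vip.yaml
```

Running the `export` lines and the `docker run` line in the same shell session matters here, since exports made in one terminal (or before a `sudo -i`) are not visible in another.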

The generated file looks like this:

apiVersion: v1
kind: Pod
metadata:
  name: kube-vip
  namespace: kube-system
spec:
  containers:
  - args:
    - manager
    env:
    - name: vip_arp
      value: "true"
    - name: port
      value: "6443"
    - name: vip_nodename
      valueFrom:
        fieldRef:
          fieldPath: spec.nodeName
    - name: vip_interface
      value: eth0
    - name: vip_subnet
      value: --controlplane
    - name: dns_mode
      value: first
    - name: dhcp_mode
      value: ipv4
    - name: vip_leaderelection
      value: "true"
    - name: vip_leasename
      value: plndr-cp-lock
    - name: vip_leaseduration
      value: "5"
    - name: vip_renewdeadline
      value: "3"
    - name: vip_retryperiod
      value: "1"
    - name: vip_address
      value: 172.16.100.5
    - name: prometheus_server
      value: :2112
    image: ghcr.io/kube-vip/kube-vip:v1.0.3
    imagePullPolicy: IfNotPresent
    name: kube-vip
    resources: {}
    securityContext:
      capabilities:
        add:
        - NET_ADMIN
        - NET_RAW
        drop:
        - ALL
    volumeMounts:
    - mountPath: /etc/kubernetes/admin.conf
      name: kubeconfig
  hostAliases:
  - hostnames:
    - kubernetes
    ip: 127.0.0.1
  hostNetwork: true
  volumes:
  - hostPath:
      path: /etc/kubernetes/admin.conf
    name: kubeconfig
status: {}
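To double-check what actually landed in the generated file, the env entries can be read back from it. The sketch below assumes the manifest is laid out as kube-vip writes it, with each `- name:` line followed by its `value:` line. `manifest_env_value` and `is_prefix_length` are hypothetical helper names, and the prefix check only covers IPv4 (0 to 32).

```shell
#!/bin/sh
# Print the value that follows "- name: <env_name>" in a pod manifest.
# Prints nothing if the entry is missing or uses valueFrom instead of value.
manifest_env_value() {
  awk -v want="$2" '
    $1 == "-" && $2 == "name:" { found = ($3 == want); next }
    found && $1 == "value:"    { gsub(/"/, "", $2); print $2; exit }
  ' "$1"
}

# Succeed only if the argument is a whole number between 0 and 32.
is_prefix_length() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;
    *) [ "$1" -ge 0 ] && [ "$1" -le 32 ] ;;
  esac
}

# Usage sketch against the file generated above:
# subnet=$(manifest_env_value /etc/kubernetes/manifests/kube-vip.yaml vip_subnet)
# is_prefix_length "$subnet" || echo "unexpected vip_subnet: $subnet"
```

For the manifest in this post the check would flag `vip_subnet`, because its value is `--controlplane` rather than `27`.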

When I run

kubeadm init --control-plane-endpoint "172.16.100.5:6443" --upload-certs --cri-socket=unix:///var/run/cri-dockerd.sock --v=5 --pod-network-cidr=10.244.0.0/16

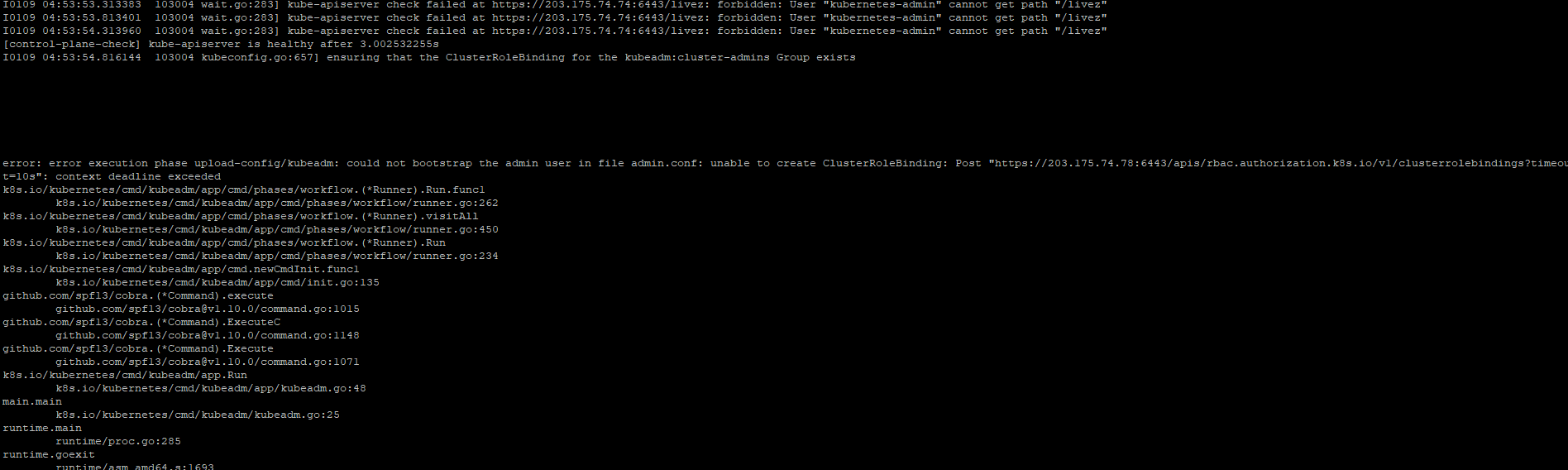

it gets stuck at:

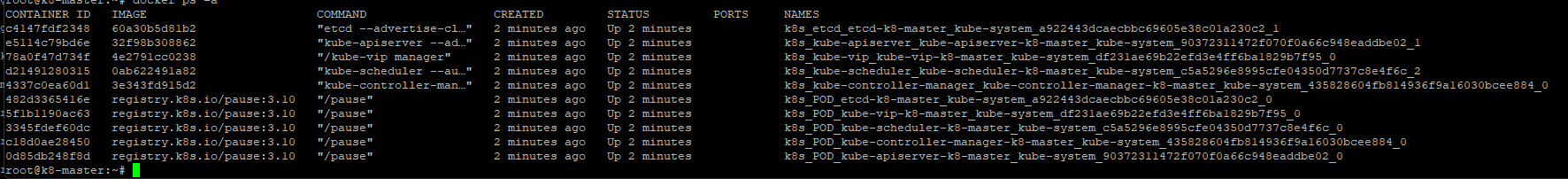

kube-apiserver starts successfully

kube-scheduler starts successfully

kube-controller-manager starts successfully

etcd starts successfully

kube-proxy starts successfully

The kube-vip container also starts successfully, but

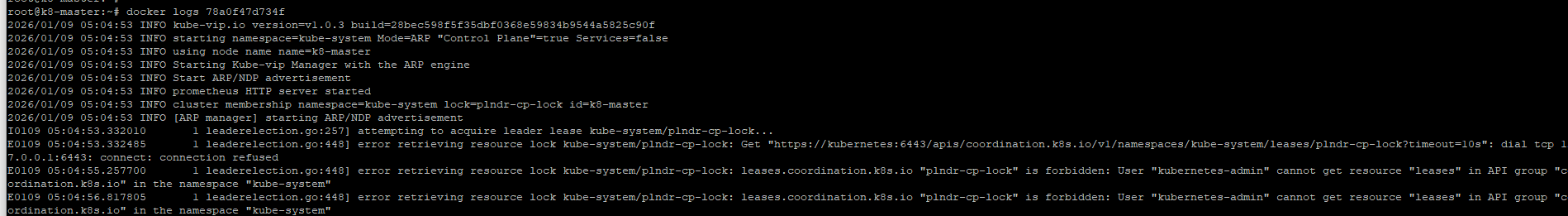

When I check the logs of the kube-vip Docker container, they show:

ERROR:

I0109 05:04:53.332010 1 leaderelection.go:257] attempting to acquire leader lease kube-system/plndr-cp-lock...

E0109 05:04:53.332485 1 leaderelection.go:448] error retrieving resource lock kube-system/plndr-cp-lock: Get "https://kubernetes:6443/apis/coordination.k8s.io/v1/namespaces/kube-system/leases/plndr-cp-lock?timeout=10s": dial tcp 127.0.0.1:6443: connect: connection refused
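The error shows kube-vip resolving `kubernetes` to 127.0.0.1 (matching the `hostAliases` entry in the manifest) and being refused on port 6443. To confirm which address is being dialed and then probe it by hand, something like the sketch below may help. `dial_target` is a hypothetical helper, and the container name in the usage comment is a placeholder.

```shell
#!/bin/sh
# Read log lines on stdin and print the "host:port" from each
# "dial tcp host:port: ..." error. Lines without one print nothing.
dial_target() {
  sed -n 's/.*dial tcp \([^:]*:[0-9][0-9]*\):.*/\1/p'
}

# Usage sketch (replace <kube-vip-container> with the real container ID):
# target=$(docker logs <kube-vip-container> 2>&1 | dial_target | tail -n 1)
# curl -k "https://$target/livez"   # does the API server answer here?
# ss -ltn | grep 6443               # is anything listening on 6443?
```

If `curl` is also refused on 127.0.0.1:6443 while the kube-apiserver container is running, the next thing to compare is the address the API server is actually listening on.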

I need suggestions on what the issue could be.