Hi Everyone!

I have deployed a Kubernetes cluster with the following details:

1 control-plane node (expandable to HA)

2 worker nodes

Container runtime → Docker

CNI → Calico

I want to expand it to a stacked HA topology.

I have configured HAProxy and Keepalived as static pods on the control-plane node.

For multiple (3) control-plane nodes, I plan to deploy HAProxy and Keepalived on all of them.

Is HAProxy with Keepalived a good configuration?

If yes, how should I manage load balancing for the API server?

Is there any recommended config?

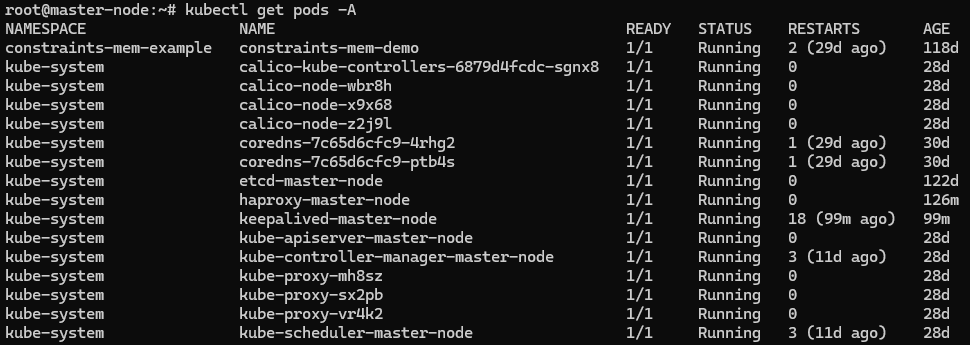

Please find the kubectl get pods -A output attached.

Hi,

From my experience, HAProxy and Keepalived run on two separate, dedicated nodes. Keepalived holds a VIP (virtual IP) on whichever of the two is active, and HAProxy load balances traffic from that VIP to the three master nodes. Each HAProxy node only needs around 2 CPU cores and 2 GB of memory. If the application scales, we can increase resources later, but for now, it’s enough since their main job is just forwarding requests as a reverse proxy.

With this setup, your Kubernetes master nodes stay inside the private network, while only the two HAProxy nodes, with the VIP, handle incoming traffic.
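To make the HAProxy side more concrete, here is a minimal sketch in Python that renders a TCP frontend/backend section for the API server. It is not taken from anyone's real config in this thread: the section names, server names, and IP addresses are hypothetical placeholders, and port 6443 is assumed because it is the usual API server port.

```python
# Sketch: render an HAProxy TCP frontend/backend for the Kubernetes API server.
# Section names, node names and IP addresses are hypothetical placeholders.

def render_haproxy_cfg(masters, bind_port=6443, api_port=6443):
    """Return haproxy.cfg text that balances TCP traffic across the masters.

    masters: mapping of server name -> IP address of each control-plane node.
    """
    lines = [
        "frontend kube-apiserver",
        f"    bind *:{bind_port}",
        "    mode tcp",
        "    option tcplog",
        "    default_backend kube-apiserver-nodes",
        "",
        "backend kube-apiserver-nodes",
        "    mode tcp",
        "    balance roundrobin",
        "    option tcp-check",
    ]
    for name, ip in masters.items():
        # "check" lets HAProxy take a master out of rotation when its port stops answering.
        lines.append(f"    server {name} {ip}:{api_port} check")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical addresses for three control-plane nodes.
    example_masters = {
        "master1": "10.0.0.11",
        "master2": "10.0.0.12",
        "master3": "10.0.0.13",
    }
    print(render_haproxy_cfg(example_masters))
```

The same rendered section would go on both HAProxy nodes, so that whichever one currently holds the VIP forwards to all three masters.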

Hi @raymond.baoly ,

Thanks for the reply.

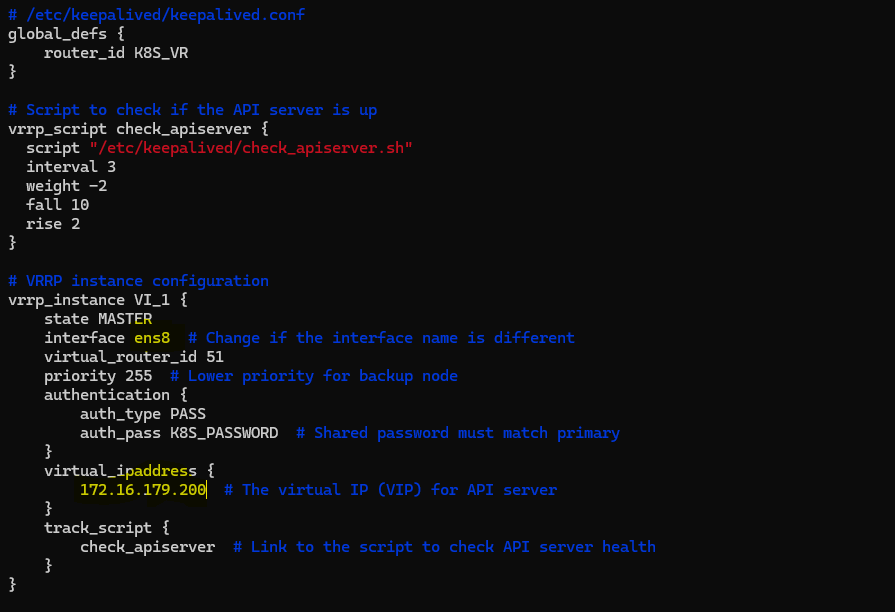

This is my keepalive.config file.

The Keepalived IP (floating IP) is on a separate interface of the master node, but in the same subnet as the master node's IP.

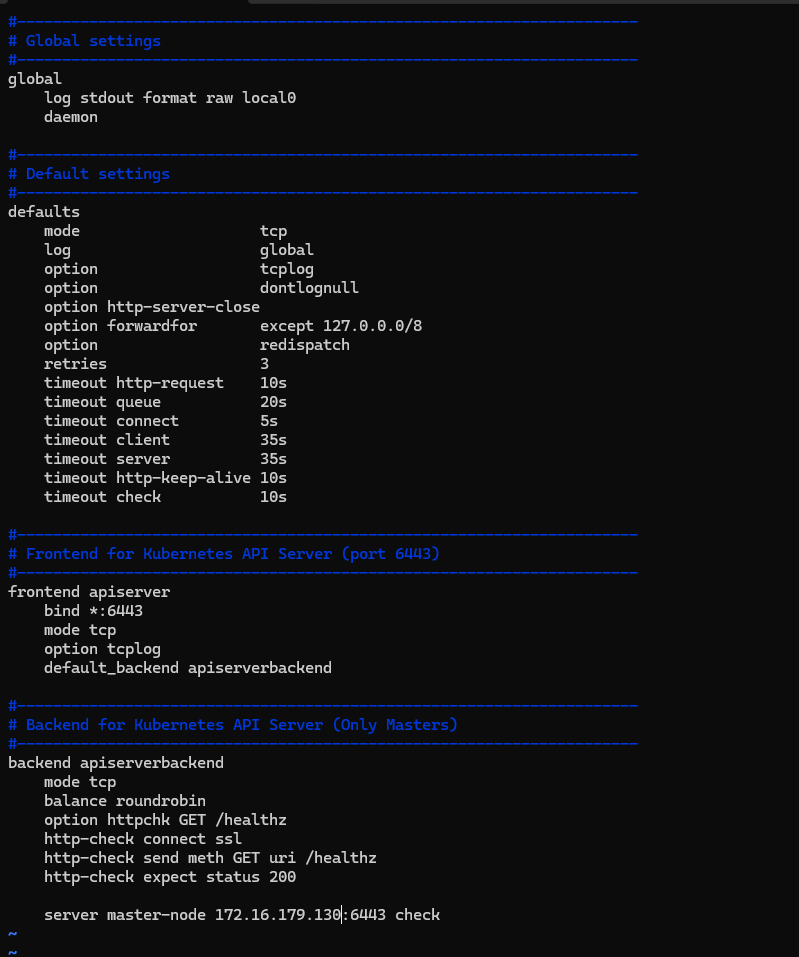

And this is the haproxy.config file.

Both HAProxy and Keepalived are running as pods on the master node.

Hi,

I’m a bit confused. What issue are you facing? I’ve explained how we should implement HAProxy and keepalived with the K8s cluster. I don’t have experience setting it up with HAProxy as a pod, and I’m not really in favor of this approach. Have you read my previous comment about my idea? Let me know if you have any questions.

Yes, I have read your idea of configuring two HAProxy instances externally (not as pods) and managing their HA through Keepalived.

I will try that and let you know. I had been planning to deploy it as a pod.

There are many blogs about Kubernetes cluster architecture with HAProxy and VIP. You might find it helpful to follow them. For example: KubeSphere HA Cluster Guide.

Thank you, HAProxy worked.

Thank you so much for the help.

I’ll ask for guidance if required.

I’m happy to help! Hopefully, you’ll get the cluster running properly with HAProxy. It will be great once it’s done!

Yeah, thanks! Now moving on to resolve some CNI (Calico) issues.

Can you suggest which CNI is better?

I personally prefer Calico because I’ve been using it for years on more than five clusters. It has been reliable, and any issues have been easy to resolve. I can't offer a detailed comparison of CNIs since I have never switched. If you have a specific use case that requires a different CNI, feel free to consider it; otherwise, I would stick with Calico.

Thanks for the recommendation, I have successfully deployed it with Calico!

Now I am going to add a new master node to the cluster!

Hoping for a good result!

Please note that you should set up the K8s cluster by following this document: Creating Highly Available Clusters with kubeadm | Kubernetes. You can’t just use the default kubeadm init command.
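As a rough illustration of what differs from a default init, here is a small Python sketch that assembles the two commands involved. The flags (--control-plane-endpoint, --upload-certs, --control-plane, --certificate-key) are the ones used in the kubeadm HA guide; the VIP address and the token, hash, and key values are hypothetical placeholders that kubeadm init prints for your own cluster.

```python
# Sketch: build the kubeadm commands for a stacked HA control plane.
# The endpoint address and all token/hash/key values are hypothetical placeholders.
import shlex


def kubeadm_init_args(endpoint, pod_cidr=None):
    """Command for the FIRST control-plane node, pointing at the load balancer VIP."""
    args = ["kubeadm", "init", "--control-plane-endpoint", endpoint, "--upload-certs"]
    if pod_cidr:
        # Only needed if the chosen CNI expects a specific pod network range.
        args += ["--pod-network-cidr", pod_cidr]
    return args


def kubeadm_join_args(endpoint, token, ca_cert_hash, certificate_key):
    """Command for each ADDITIONAL control-plane node (run it on the new node)."""
    return [
        "kubeadm", "join", endpoint,
        "--token", token,
        "--discovery-token-ca-cert-hash", ca_cert_hash,
        "--control-plane",
        "--certificate-key", certificate_key,
    ]


if __name__ == "__main__":
    vip = "10.0.0.100:6443"  # hypothetical VIP in front of the API servers
    print(shlex.join(kubeadm_init_args(vip)))
    print(shlex.join(kubeadm_join_args(vip, "<token>", "sha256:<hash>", "<certificate-key>")))
```

The point of the sketch is that the endpoint given at init time is the load balancer address, not the first master's own IP, so it has to be decided before the cluster is created.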

Yes, I’m using the same documentation.

Thanks for mentioning it.

Hi!

Hope you are doing well

I messed up my cluster.

I mistakenly ran the command on the existing master node rather than the new one.

After resolving that, I faced a certificate upload issue on the new control-plane node.

Then I figured out my mistake.

Meanwhile, I had an issue with the control plane because kubeadm init had been configured without HAProxy.

Then I had to reset it and rerun **kubeadm init** with all the required settings.

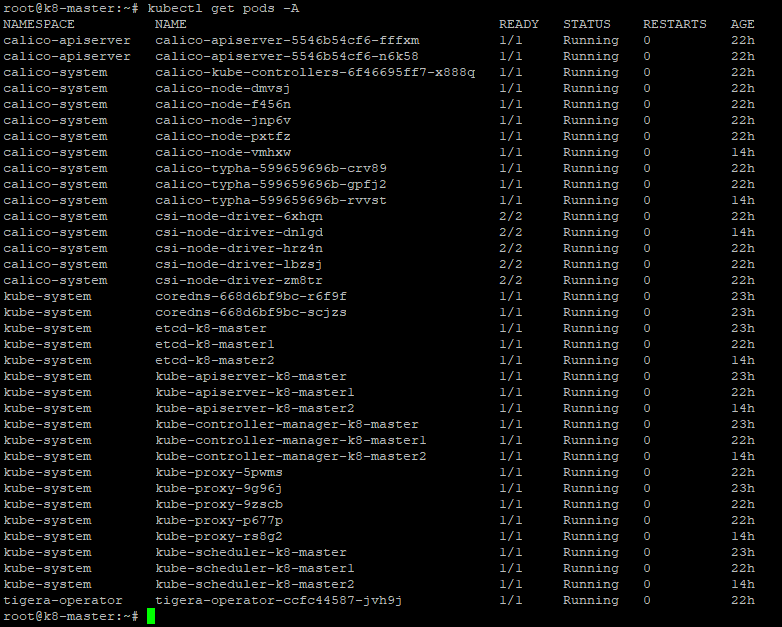

After all that, I successfully added the new master node to my cluster, with the API servers managed through HAProxy.

I used Calico as the CNI.

WOOOOOOO!!!

I’m glad to hear that! That sounds like a great experience.

After a successful setup, I recommend testing high availability (HA) with a virtual IP (VIP). You can:

- Deploy an Nginx Deployment with a replica count equal to the number of worker nodes.

- Use Ingress to forward requests to the deployment.

- Access the service using the public VIP and verify that the pods respond.

- Test HA load balancing by SSHing into a worker node and stopping the kubelet service.

- To check failover, stop the haproxy service and see if the VIP remains accessible.

I was definitely looking for some guidance to test my setup.

Thanks for providing the details to test it.

One important thing I want to know:

I have read that containerd or CRI-O is better than Docker. Is that true?

I’ll update after testing it.

The pic is of the current setup.

There’s no need to use Docker with Kubernetes anymore since containerd is lighter and works more smoothly with K8s now. In my case, I only use Docker to build images locally for development or testing.