I failed to complete the configmap volume only in Kubernetes Challenges | KodeKloud

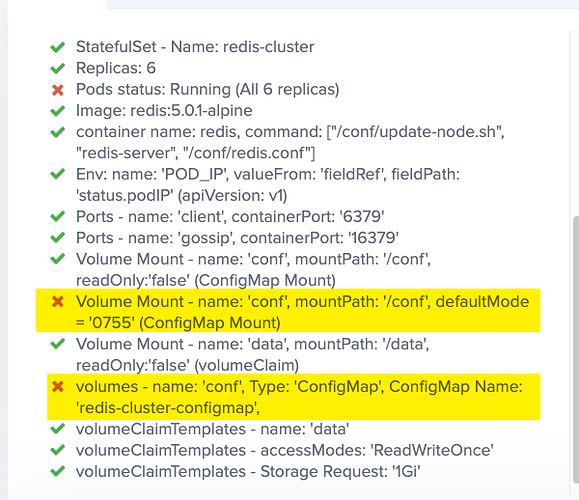

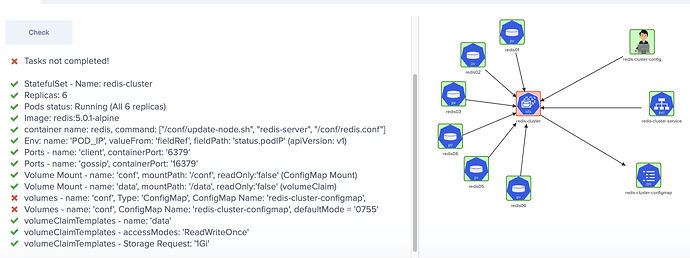

Failed steps below:

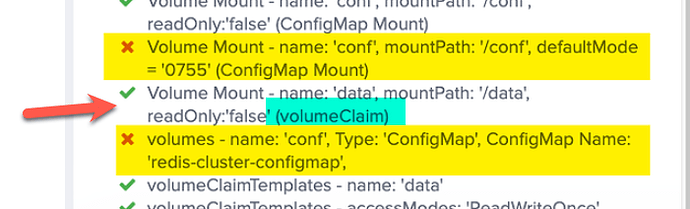

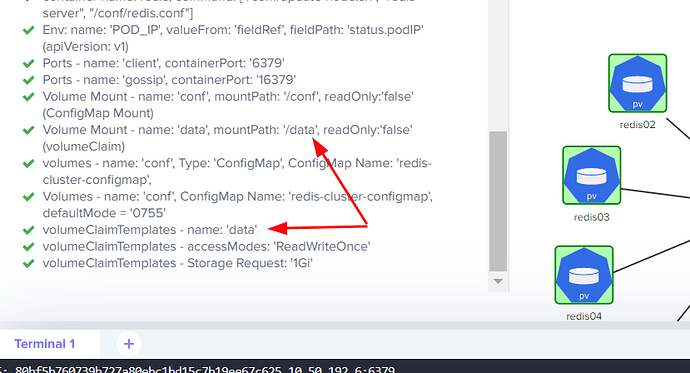

- Volume Mount - name: 'conf', mountPath: '/conf', defaultMode = '0755' (ConfigMap Mount)

- volumes - name: 'conf', Type: 'ConfigMap', ConfigMap Name: 'redis-cluster-configmap'

To me, it looks correct. It may be a bug in the challenge, or I am missing something that I cannot see:

```yaml
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis-cluster
spec:
  selector:
    matchLabels:
      app: redis-cluster # has to match .spec.template.metadata.labels
  serviceName: "redis-cluster"
  replicas: 6 # by default is 1
  minReadySeconds: 10 # by default is 0
  template:
    metadata:
      labels:
        app: redis-cluster # has to match .spec.selector.matchLabels
    spec:
      terminationGracePeriodSeconds: 10
      volumes:
      - name: data
        persistentVolumeClaim:
          claimName: data
      - name: conf
        configMap:
          name: redis-cluster-configmap
          defaultMode: 0755
      containers:
      - name: redis
        image: redis:5.0.1-alpine
        command: ["/conf/update-node.sh", "redis-server", "/conf/redis.conf"]
        env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        ports:
        - containerPort: 6379
          name: client
        - containerPort: 16379
          name: gossip
        volumeMounts:
        - name: data
          mountPath: /data
          readOnly: false
        - name: conf
          mountPath: /conf
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 1Gi
```

Thanks for highlighting this issue. We are working on it. I will update you once it’s fixed.

Regards,

KodeKloud Support


We resolved it and updated the context. Please give it another try.

Regards,

I am still getting the exact same error.


Why are you creating a persistent volume claim? We haven't mentioned it in the question.

- Is this relevant to the problem I am reporting?

- You are asking for a volume mount with a volumeClaim. How can I do that without claiming it?

Am I misunderstanding the question? If so, why does the validation pass for the data mount?

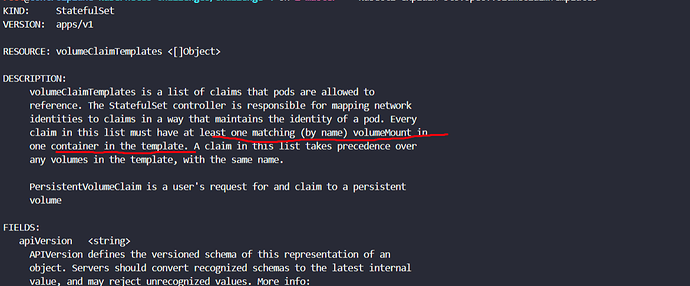

volumeClaim is a short hint for volumeClaimTemplates.

Basically volumeClaimTemplates will create a persistent volume claim for each pod so we don’t need to create them separately.
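To make that concrete: each claim generated from a volumeClaimTemplates entry is named `<template name>-<StatefulSet name>-<ordinal>`, so a template called data on a six-replica StatefulSet called redis-cluster should give claims data-redis-cluster-0 through data-redis-cluster-5. A small sketch that prints the names to look for in `kubectl get pvc` (expected_pvc_names is a made-up helper for illustration, not a kubectl feature):

```shell
# Print the PVC names a StatefulSet generates from one volumeClaimTemplate.
# Naming rule: <template-name>-<statefulset-name>-<ordinal>.
# expected_pvc_names is a hypothetical helper, not part of kubectl.
expected_pvc_names() {
  template=$1
  statefulset=$2
  replicas=$3
  i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "${template}-${statefulset}-${i}"
    i=$((i + 1))
  done
}

# Values taken from the manifest posted in this thread.
expected_pvc_names data redis-cluster 6
```

Because the StatefulSet controller creates these claims itself, the pod template should not need its own `volumes` entry named data.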

When I remove the persistentVolumeClaim volume from the pod spec, it works.

But the challenge is buggy: without my changing anything in the ConfigMap mount, it now accepts the solution.

The ConfigMap details should be validated on their own, whether or not there is a mistake somewhere else.

This is misleading.

Thanks for solving my issue anyhow.


Hi,

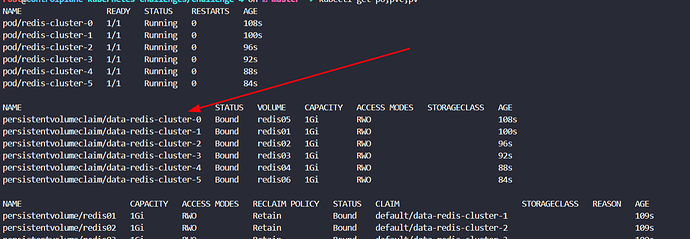

I have a problem with the ConfigMap. I have attached the events:

Events:

| Type | Reason | Age | From | Message |
| --- | --- | --- | --- | --- |
| Warning | FailedScheduling | 12m | default-scheduler | 0/2 nodes are available: 2 pod has unbound immediate PersistentVolumeClaims. |
| Normal | Scheduled | 12m | default-scheduler | Successfully assigned default/redis-cluster-0 to node01 |
| Warning | FailedMount | 7m42s | kubelet | Unable to attach or mount volumes: unmounted volumes=[conf], unattached volumes=[data kube-api-access-94x6l conf]: timed out waiting for the condition |
| Warning | FailedMount | 3m11s (x2 over 9m59s) | kubelet | Unable to attach or mount volumes: unmounted volumes=[conf], unattached volumes=[conf data kube-api-access-94x6l]: timed out waiting for the condition |
| Warning | FailedMount | 106s (x13 over 12m) | kubelet | MountVolume.SetUp failed for volume "conf" : configmap "redis-cluster-configmap" not found |
| Warning | FailedMount | 56s (x2 over 5m27s) | kubelet | Unable to attach or mount volumes: unmounted volumes=[conf], unattached volumes=[kube-api-access-94x6l conf data]: timed out waiting for the condition |

The ConfigMap does not exist.
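One quick way to confirm what the "not found" FailedMount event reports is to ask the API server for the ConfigMap directly. A minimal sketch, assuming the pod runs in the default namespace (as the Scheduled event suggests); check_configmap is a hypothetical helper name:

```shell
# Report whether the ConfigMap referenced by the "conf" volume exists.
# check_configmap is a hypothetical helper; the namespace defaults to
# "default", which is an assumption based on the Scheduled event above.
check_configmap() {
  name=$1
  namespace=${2:-default}
  if kubectl get configmap "$name" --namespace "$namespace" >/dev/null 2>&1; then
    echo "configmap $name found in namespace $namespace"
  else
    echo "configmap $name missing in namespace $namespace"
    return 1
  fi
}

# Usage (needs access to the lab cluster):
#   check_configmap redis-cluster-configmap default
```

If the helper reports the ConfigMap as missing, the kubelet will keep retrying the mount and the pod stays in ContainerCreating until the ConfigMap is created.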

Hi @robertomagallanes221,

Thanks for highlighting this. I forwarded this to the team. They will check and fix it asap.

Regards,

Hi @robertomagallanes221,

This issue is fixed. Please try it and let us know if you encounter any issues.

Regards,

Hi @Tej-Singh-Rana, it works.

Thanks

Hi @Tej-Singh-Rana, I'm having the same issue with my lab.

Below is my YAML, which passes every check apart from PVC attachment; that is preventing the 6 replicas from starting.

apiVersion: v1

kind: Service

metadata:

name: redis-cluster-service

labels:

app: redis

spec:

ports:

- port: 6379

name: client

targetPort: 6379

- port: 16379

name: gossip

targetPort: 16379

clusterIP: None

selector:

app: redis

---

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: redis-cluster

spec:

selector:

matchLabels:

app: redis-cluster # has to match .spec.template.metadata.labels

serviceName: "redis-cluster-service"

replicas: 6 # by default is 1

minReadySeconds: 20 # by default is 0

template:

metadata:

labels:

app: redis # has to match .spec.selector.matchLabels

spec:

terminationGracePeriodSeconds: 10

containers:

- name: redis

image: redis:5.0.1-alpine

ports:

- containerPort: 6379

name: client

- containerPort: 16379

name: gossip

volumeMounts:

- name: conf

mountPath: /conf

readOnly: false

- name: data

mountPath: /data

readOnly: false

command: ["/conf/update-node.sh", "redis-server", "/conf/redis.conf"]

env:

- name: POD_IP

valueFrom:

fieldRef:

fieldPath: status.podIP

volumes:

- configMap:

defaultMode: 0755

name: redis-cluster-configmap

name: conf

volumeClaimTemplates:

- metadata:

name: data

spec:

accessModes: [ "ReadWriteOnce" ]

resources:

requests:

storage: 1Gi

@prashant.bharate your YAML is garbled. You can paste YAML into the form using the </> button to create a set of 3 back ticks starting the block and 3 back ticks ending a block:

type or paste code here

This will prevent the website editor software from removing indentation and replacing quote characters. As things are now, I can’t really debug your code.

Beyond that: you can find the solution for Challenge 4 here.

@rob_kodekloud

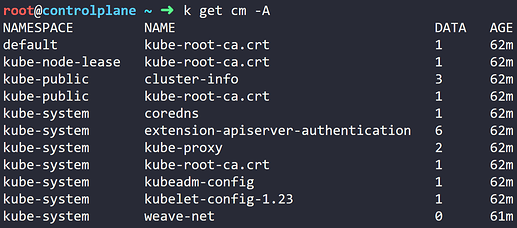

From your previous response, it looks like we have to create the PVs too, but that's not mentioned anywhere in the assignment… Am I missing something? (My StatefulSet is up, but the pods are not starting because the PVCs cannot be bound to any PV.)

It’s there, but you have to click on the little “pv” icons to see the details for each PV.

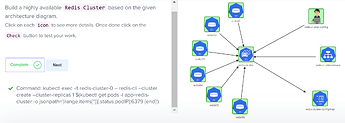

Hi, I got this error when running the final command of Challenge 4 to create the Redis cluster:

kubectl exec -it redis-cluster-0 – redis-cli --cluster create --cluster-replicas 1 $(kubectl get pods -l app=redis-cluster -o jsonpath=‘{range.items[*]}{.status.podIP}:6379 {end}’)

[ERR] Wrong number of arguments for specified --cluster sub command

command terminated with exit code 1

Any idea what's wrong?

That command is almost entirely garbled, as far as I can tell. You appear to be mixing a redis-cli command into a kubectl command (I don't know the syntax for redis-cli, so I can't help you there). I'm assuming that I have an accurate sense of what you typed, which is hard to do because you really should use a code block (created with the </> key):

- to make sure that quotes and dash characters don't get corrupted.

- to make it easier for folks to read.

I also suspect that using range in the jsonpath sub-command is either unneeded or even wrong in this case.

The first thing to check is the dash after -it redis-cluster-0: it should be a double dash. In all, the command looks fancier than it needs to be, which is an invitation to errors.
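For reference, a sketch of what the command presumably looked like before the editor mangled it, with a plain double dash and straight single quotes. It assumes the pods carry the label app=redis-cluster, as in the first manifest in this thread. The two function names are made up for illustration, and the jsonpath uses the documented `{range .items[*]}` spelling. An empty node list would be consistent with the "Wrong number of arguments" error, so the sketch checks for that first:

```shell
# List "IP:6379" for every pod matching a label selector.
# The default selector app=redis-cluster is an assumption taken from the
# first manifest in this thread; pass another selector if yours differs.
redis_node_list() {
  selector=${1:-app=redis-cluster}
  kubectl get pods -l "$selector" \
    -o jsonpath='{range .items[*]}{.status.podIP}:6379 {end}'
}

# Create the cluster from pod redis-cluster-0. The plain "--" separates
# kubectl's own arguments from the command run inside the container.
create_redis_cluster() {
  nodes=$(redis_node_list "$@")
  if [ -z "$nodes" ]; then
    echo "no pods matched; check the label selector" >&2
    return 1
  fi
  # $nodes is left unquoted on purpose so each IP:port is its own argument.
  kubectl exec -it redis-cluster-0 -- \
    redis-cli --cluster create --cluster-replicas 1 $nodes
}

# Usage (needs access to the lab cluster):
#   create_redis_cluster                # uses app=redis-cluster
#   create_redis_cluster app=redis      # if the pod template uses this label
```

Running `redis_node_list` on its own first is a cheap way to see whether the selector matches any pods at all.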

@chamankaushal Please use code blocks:

- So your code doesn't get all weird

- So ' and " marks don't get converted into something else.

- So your code can be read.

Use the </> key to create a block, and then paste into it.

Also: do you have a question here?