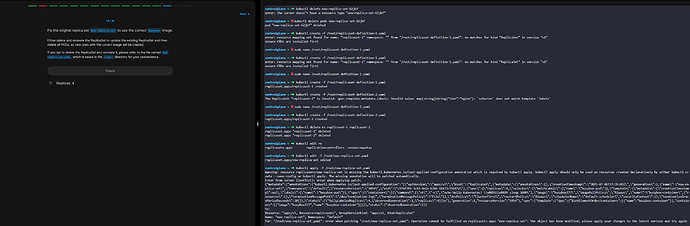

I started taking the CKA course here at KodeKloud, and when we got to the ReplicaSet section, some of the exam questions asked us to update the image and scale the number of replicas up or down. My idea for each of these was to use kubectl edit to update the file new-replicateset.yml, then run kubectl apply -f /path/to/file to apply it, but that does not seem to work.

My question is: what is the difference between kubectl edit, apply, and scale? I am wondering if I need to run kubectl delete first before applying changes, but I thought kubectl apply automatically brought the object to its desired state.

For added context if it helps I already understand the difference between kubectl create and kubectl apply.

Lastly, can kubectl edit be used with the object type, like kubectl create deployment, or does it need the -f flag? I hope I am explaining this correctly. I get confused with all the ways to create and modify things, and when you can use -f versus when you can just name an object.

Hello @Michael_MC

Let’s go through them one at a time.

create

Most often used imperatively to create a resource that supports direct creation from the command line. This is not all resource types, only those listed here.

When used with -f some-file.yaml it does pretty much the same as apply with the exception that the resource cannot already exist.

To create a Pod imperatively, you use kubectl run instead of kubectl create

apply

Almost always used with -f to specify a YAML manifest. It will create the resource if it doesn’t exist, and will update an existing resource if the YAML you are applying differs from what is currently running in the cluster.

edit

This is used to quickly edit a running resource, and is faster than the following commands, which achieve the same result.

So

kubectl edit replicaset my-replicaset

is effectively equivalent to

kubectl get replicaset my-replicaset -o yaml > rs.yaml

vi rs.yaml

kubectl apply -f rs.yaml

Note that not all resources can be edited in this manner. Pods especially have most of their fields immutable, meaning that to change them you must delete the pod and recreate it. You can change the image of a running pod but not much else. For any other change, you would need to do

kubectl get pod my-pod -o yaml > pod.yaml

vi pod.yaml

kubectl replace --force -f pod.yaml

scale

This is nothing more than a quick way of editing the replicas field of a resource that supports scaling, e.g. Deployment, ReplicaSet and StatefulSet
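To make the "editing the replicas field" point concrete, here is a minimal sketch that sets the same field with kubectl patch. The function name set_replicas is hypothetical and only for illustration; kubectl scale remains the shorter way to do this.

```shell
# Hypothetical helper: set spec.replicas with a merge patch.
# The end result is the same field change that kubectl scale makes.
# $1 = resource type (e.g. rs), $2 = resource name, $3 = desired replica count
set_replicas() {
  kubectl patch "$1" "$2" --type merge -p "{\"spec\":{\"replicas\":$3}}"
}
```

For example, set_replicas rs my-replicaset 3 should leave the ReplicaSet in the same state as kubectl scale rs my-replicaset --replicas=3.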

Thanks @Alistair_KodeKloud for the reply, it was helpful in reviewing the basics. More importantly, I was asking about the error.

I did some Google searching and this is what I found:

Every resource in Kubernetes has a resourceVersion field in its metadata. This field changes every time the resource is updated.

- When you fetch a resource (for example, with kubectl get or kubectl edit), you get a copy with a specific resourceVersion.

- If you then try to update or apply changes to that resource, Kubernetes checks if the resourceVersion you are submitting matches the current one in the cluster.

- If someone else (another user, a controller, or a process) has changed the resource in the meantime, the resourceVersion in the cluster is now different from the one you have, and your update is rejected to prevent overwriting the other changes. This is the error you see.

For example:

- Controller A and Controller B both fetch the same deployment at version 1091.

- Controller A updates and submits its changes; the API server accepts them and the version changes to 2075.

- Controller B tries to submit its changes, but it still has version 1091.

- The API server rejects B’s update because the current version is now 2075, not 1091.

- Controller B must fetch the latest version (2075), reapply its changes, and submit again.
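The sequence above can be sketched as a toy model in plain shell. Nothing here talks to a cluster: current_version and submit_update are made-up names, and the version is a simple counter, whereas a real resourceVersion is an opaque value chosen by the API server.

```shell
# Toy model of the version check. Assumption: the "cluster" is just a
# shell variable, and each accepted write bumps the version by one.
current_version=1091

# $1 = writer name, $2 = the version that writer fetched earlier
submit_update() {
  if [ "$2" != "$current_version" ]; then
    echo "$1: rejected, fetched $2 but cluster is at $current_version"
    return 1
  fi
  current_version=$((current_version + 1))
  echo "$1: accepted, cluster is now at $current_version"
}

# A and B both fetch the same version.
a_version=$current_version
b_version=$current_version

# A writes first and succeeds.
submit_update A "$a_version"

# B's copy is now stale, so it is rejected; B refetches and retries.
submit_update B "$b_version" || submit_update B "$current_version"
```

The retry on the last line is the "fetch the latest version and submit again" step: it only succeeds because it uses the version currently held by the cluster.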

This typically happens when:

- Your YAML file contains fields like resourceVersion, creationTimestamp, or uid that should not be present in declarative configs. These fields are managed by Kubernetes and including them can cause conflicts.

- Multiple people or controllers are editing the same resource at the same time.

To fix it:

- Fetch the latest version of the resource using kubectl get.

- Remove fields like resourceVersion, creationTimestamp, uid, etc., from your manifest before applying it.

- Reapply your changes to the latest version.

- If you are editing via kubectl edit, simply retry your edit after fetching the latest state.
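As a rough illustration of the "remove those fields" step, the sketch below strips the server-managed fields from a manifest saved with kubectl get -o yaml. The function name strip_server_fields is hypothetical, and it assumes the usual layout of that output: two-space indentation under metadata, and status as the last top-level key.

```shell
# Hypothetical helper: print a manifest without server-managed fields.
# Assumes kubectl's usual YAML layout (2-space indent, status block last).
# $1 = path to a manifest saved with: kubectl get <type> <name> -o yaml
strip_server_fields() {
  sed -E \
    -e '/^  (resourceVersion|uid|creationTimestamp|generation):/d' \
    -e '/^status:/,$d' \
    "$1"
}
```

A possible way to use it: save the resource with kubectl get rs new-replica-set -o yaml > rs.yaml, run strip_server_fields rs.yaml > rs-clean.yaml, make your changes in rs-clean.yaml, then kubectl apply -f rs-clean.yaml. It is a text filter rather than a YAML parser, so check the result before applying it.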

This helps a bit, but it is still confusing. Is there any way to clear this up for me?

I guess I am trying to understand why deleting is needed when apply should just update the cluster.

You seem to have got yourself into a bit of a mess there. I can’t see whether you have additional terminals open, but you would certainly get that error if you tried to edit the resource twice separately, as in:

1. Get the resource and start editing it

2. Get the resource somewhere else, edit it and save successfully

3. Try to save the resource from step 1

The 3rd step would fail with that error.

So what you would do for the question is kubectl edit rs new-replica-set and change busybox777 to busybox and save it.

Then you may notice that it doesn’t stop the pods from crashing. This is a quirk of ReplicaSets which doesn’t happen for Deployments (coming up in a few lectures’ time): a ReplicaSet only uses its pod template when it creates new pods, so the existing pods keep the old image. You have to force the ReplicaSet to recreate its pods with the new image (whether you applied the file or used kubectl edit), which you can do with

kubectl scale rs new-replica-set --replicas 0

kubectl scale rs new-replica-set --replicas 4
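If you don't want to hard-code the replica count, the two scale commands above could be wrapped in a small function along these lines. The name recreate_rs_pods is hypothetical; it reads the current spec.replicas first and restores that value afterwards.

```shell
# Hypothetical helper: make a ReplicaSet recreate all of its pods.
# $1 = ReplicaSet name
recreate_rs_pods() {
  # Remember the desired replica count before scaling down.
  replicas="$(kubectl get rs "$1" -o jsonpath='{.spec.replicas}')"
  # Scaling to 0 removes the old pods; scaling back up creates
  # new ones from the current (fixed) pod template.
  kubectl scale rs "$1" --replicas=0
  kubectl scale rs "$1" --replicas="$replicas"
}
```

Calling recreate_rs_pods new-replica-set after fixing the image should bring up fresh pods with the corrected image.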

Alternatively, you can use the provided file:

- vi new-replica-set.yaml

- Fix the image

- Delete and recreate

kubectl replace --force -f new-replica-set.yaml

or (same thing but more typing)

kubectl delete rs new-replica-set

kubectl apply -f new-replica-set.yaml
