Hey folks,

This is the last question I’m trying to solve for my CKS course, and I’m stuck. Hoping someone here can help me out.

Question:

Now enable auditing in this Kubernetes cluster. Create a new policy file and set it to Metadata level, and it will only log events based on the below specifications:

- Namespace: prod
- Operations: delete
- Resources: secrets
- Log Path: /var/log/prod-secrets.log
- Audit file location: /etc/kubernetes/prod-audit.yaml
- Maximum days to keep the logs: 30

My answer:

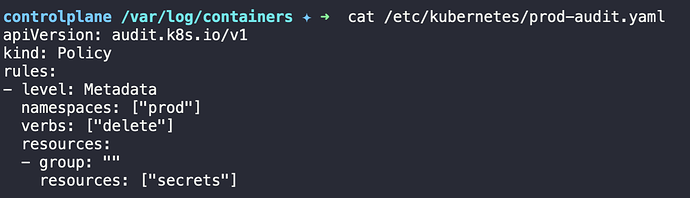

Here’s the audit policy file I created:

    apiVersion: audit.k8s.io/v1
    kind: Policy
    rules:
    - level: Metadata
      namespaces: ["prod"]
      verbs: ["delete"]
      resources:
      - group: ""
        resources: ["secrets"]

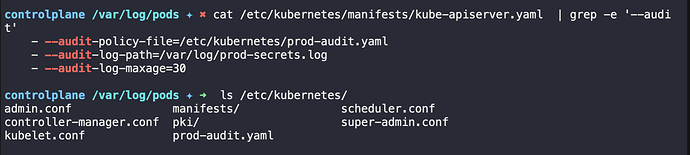

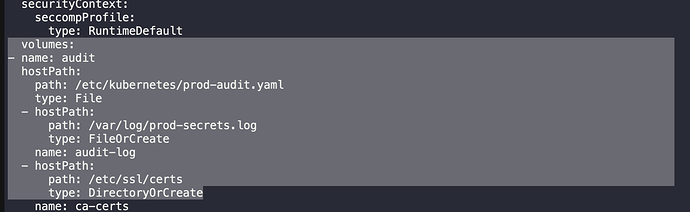

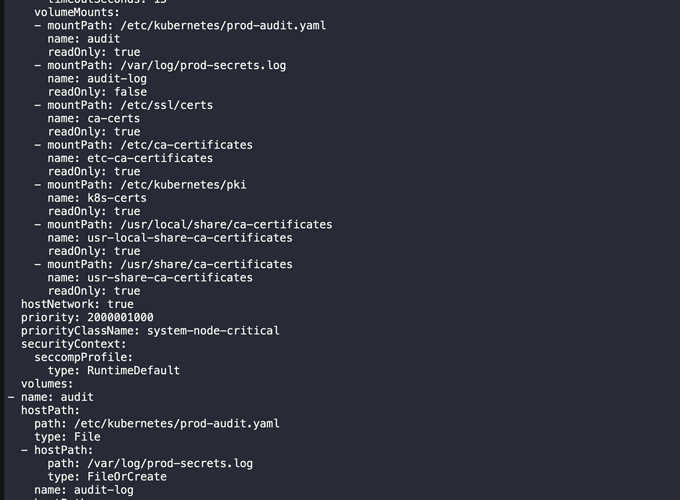

I updated my kube-apiserver static pod manifest (/etc/kubernetes/manifests/kube-apiserver.yaml) with these flags:
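For anyone who can't see the screenshots, here is a rough sketch of what the task's specification implies for the manifest. It is an assumption based on the task text, not a transcription of the screenshots. The volume names `audit-policy` and `audit-log` are placeholders picked for illustration, and the `# ...` comments stand for whatever the manifest already contains.

```yaml
# Sketch only: fragment of /etc/kubernetes/manifests/kube-apiserver.yaml
spec:
  containers:
  - command:
    - kube-apiserver
    # ...existing flags stay as they are...
    - --audit-policy-file=/etc/kubernetes/prod-audit.yaml   # audit file location from the task
    - --audit-log-path=/var/log/prod-secrets.log            # log path from the task
    - --audit-log-maxage=30                                 # maximum days to keep the logs
    volumeMounts:
    # ...existing mounts stay as they are...
    - name: audit-policy          # placeholder name
      mountPath: /etc/kubernetes/prod-audit.yaml
      readOnly: true
    - name: audit-log             # placeholder name
      mountPath: /var/log/prod-secrets.log
      readOnly: false
  volumes:
  # ...existing volumes stay as they are...
  - name: audit-policy
    hostPath:
      path: /etc/kubernetes/prod-audit.yaml
      type: File
  - name: audit-log
    hostPath:
      path: /var/log/prod-secrets.log
      type: FileOrCreate
```

The mounts are included because kube-apiserver runs as a container and can only read the policy file and write the log if those host paths are mounted into it.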

After making these changes:

- The

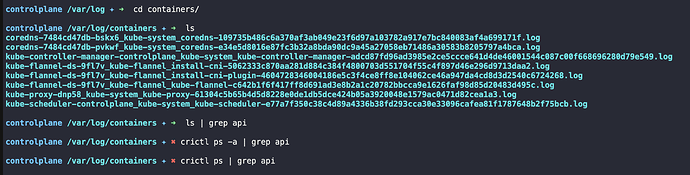

kube-apiservercontainer is not starting. - I can’t find any logs under

/var/log/containersor withcrictl ps -a | grep api. - I’ve checked the syntax of the audit file and the manifest, but nothing stands out.

I suspect the API server might be crashing before it even starts the container properly.

Has anyone encountered this before?

- How can I debug this when I don’t get any logs?

Any help would be appreciated!