I have set up a Kubernetes HA cluster following the steps in the GitHub repository mmumshad/kubernetes-the-hard-way (Bootstrap Kubernetes the hard way on Vagrant on Local Machine. No scripts.).

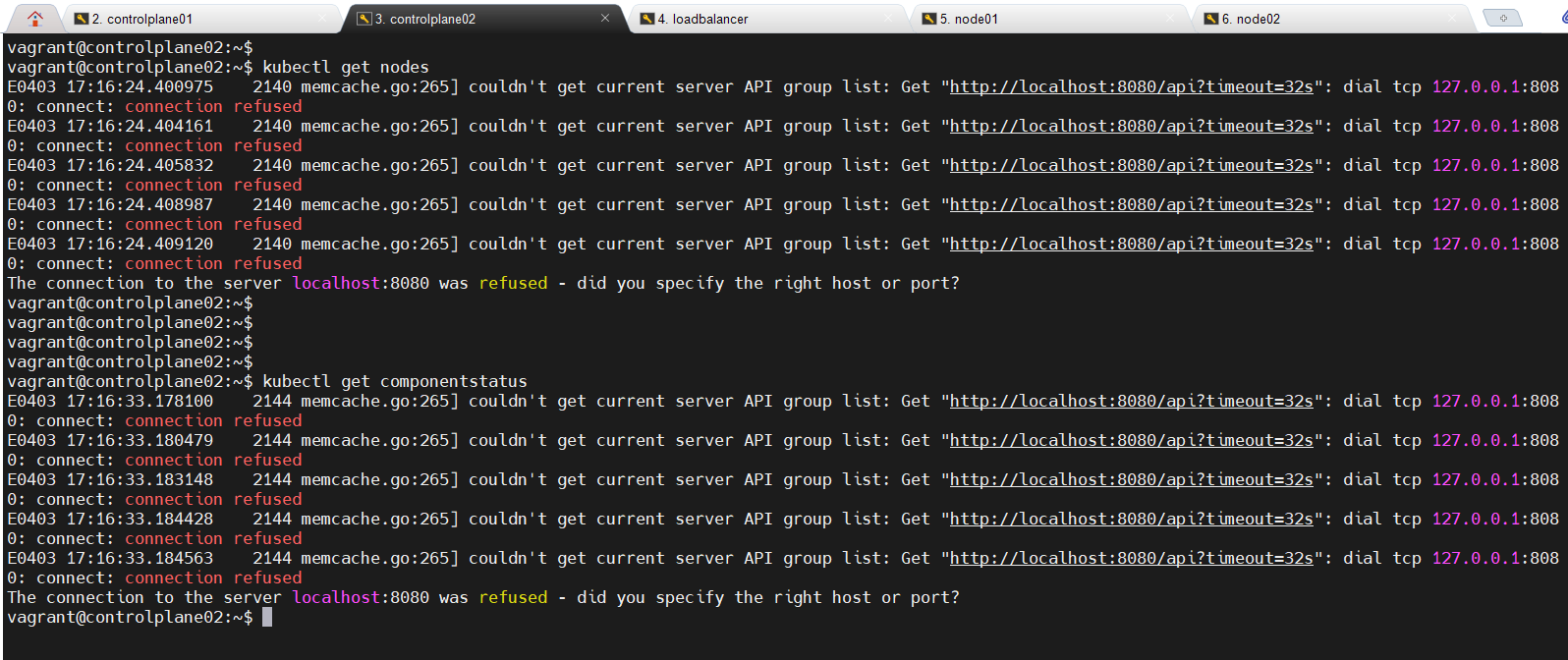

After performing Step 12 (Configuring kubectl for Remote Access), I get an error when running kubectl get nodes and kubectl get componentstatus on controlplane02.

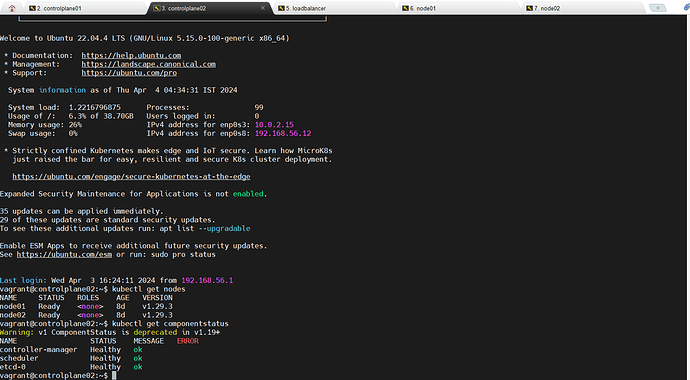

There is no issue on controlplane01: the cluster is OK and healthy.

Can anyone help resolve this issue?

From the error in your image, it appears that you have not set up a kubeconfig file on that host: the “8080” error indicates that kubectl cannot work out where to contact kube-apiserver.
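To make the “8080” symptom concrete, here is a minimal Python sketch of the lookup, not kubectl's actual code. It assumes the usual behaviour: kubectl reads the paths in the `KUBECONFIG` environment variable if it is set, otherwise `~/.kube/config`, and with no usable file it tries `localhost:8080`. The function names are made up for illustration, and the model ignores the `--kubeconfig` flag and in-cluster configuration.

```python
import os
from pathlib import Path

# Address kubectl has traditionally tried when it finds no configuration.
FALLBACK_SERVER = "localhost:8080"


def candidate_kubeconfigs(env, home):
    """List the kubeconfig paths this simplified model checks, in order."""
    raw = env.get("KUBECONFIG", "")
    if raw:
        # KUBECONFIG may hold several paths joined by the OS path separator.
        return [Path(p) for p in raw.split(os.pathsep) if p]
    return [Path(home) / ".kube" / "config"]


def describe_target(env, home):
    """Say where kubectl would get its API server address from."""
    for path in candidate_kubeconfigs(env, home):
        if path.is_file():
            return f"kubeconfig at {path}"
    return f"no kubeconfig found, falling back to {FALLBACK_SERVER}"


if __name__ == "__main__":
    # Run on the affected host to see which case applies there.
    print(describe_target(os.environ, Path.home()))
```

If this reports the fallback on controlplane02, that matches the error in the screenshot: nothing on that host tells kubectl where the API server is.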

The lab sets up the correct kubeconfig on controlplane01 if you have correctly followed the steps. Run kubectl commands from that node.

What about controlplane02? My cluster is working perfectly except for HA. I am unable to execute commands there (they terminate with an error), as shown in the attached snapshot.

When controlplane01 is powered off, controlplane02 should work as the master, but it does not.

The issue was resolved after performing the following steps on controlplane02:

- Generating admin.crt and admin.key on controlplane02

- Executing Step 12 on controlplane02

Yes, that works because you have now configured a kubeconfig there.
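For reference, a kubeconfig of this kind has roughly the shape below. This is a sketch under assumptions, not the lab's exact output: the cluster name, the `ca.crt` file name and the `API_ENDPOINT` placeholder are illustrative, while `admin.crt` and `admin.key` are the files mentioned above. The result is printed as JSON, which is also valid YAML.

```python
import json


def build_kubeconfig(server, cluster="kubernetes-the-hard-way", user="admin"):
    """Build a kubeconfig as a plain dict (names are illustrative)."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [
            {
                "name": cluster,
                "cluster": {
                    "server": server,
                    # Assumed file name for the cluster CA certificate.
                    "certificate-authority": "ca.crt",
                },
            }
        ],
        "users": [
            {
                "name": user,
                "user": {
                    "client-certificate": "admin.crt",
                    "client-key": "admin.key",
                },
            }
        ],
        "contexts": [
            {"name": cluster, "context": {"cluster": cluster, "user": user}}
        ],
        "current-context": cluster,
    }


if __name__ == "__main__":
    # API_ENDPOINT is a placeholder for the address kubectl should contact.
    print(json.dumps(build_kubeconfig("https://API_ENDPOINT:6443"), indent=2))
```

The `server` field is the part that matters for this thread: without it, kubectl has no address to contact.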

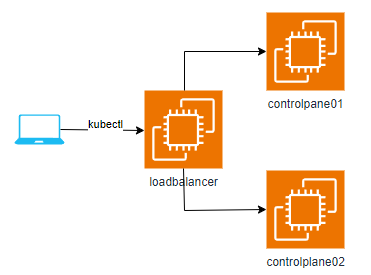

The point of HA is not that you can run commands from the control planes themselves. If this were a production cluster, you would not be running kubectl commands from any cluster node. You would be using a workstation remote from the cluster.

What makes it highly available to a user is the load balancer component. You set up the kubeconfig file to use the IP of the load balancer on port 6443 as the server. The load balancer forwards the connection directly to the API server on one or other of the control planes and accounts for any of them being offline. The request fails if all control planes are offline.
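The forwarding behaviour can be modelled in a few lines of Python. This is a toy sketch, not how a real load balancer is implemented: it simply takes the first backend that a health check reports as up, whereas a real one typically balances across all healthy backends. The function name and the health table are made up for illustration.

```python
def pick_backend(backends, is_healthy):
    """Return the first backend that passes the health check."""
    for backend in backends:
        if is_healthy(backend):
            return backend
    # Mirrors the case where every control plane is offline.
    raise ConnectionError("no control plane is reachable")


if __name__ == "__main__":
    backends = ["controlplane01:6443", "controlplane02:6443"]
    # Simulated health state: controlplane01 is powered off.
    up = {"controlplane01:6443": False, "controlplane02:6443": True}
    print(pick_backend(backends, up.get))  # prints controlplane02:6443
```

The client only ever talks to the load balancer address, so powering off controlplane01 changes which backend is picked but not the kubeconfig.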
