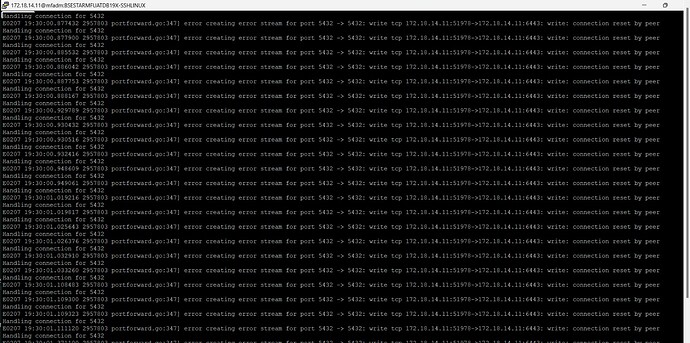

I have an on-premises Kubernetes cluster running a PostgreSQL database, and I am using Pentaho to migrate old data to the new database. From the application's perspective, the database connection works fine and the application functions properly. However, when I transfer a large volume of data via Pentaho, I encounter the following error:

kubectl port forward error creating error stream

I understand that using a NodePort or an NGINX proxy pass could be alternatives, but I am looking for a different approach using port forwarding. I also created a script to kill and restart the port forward, but this disrupts the data migration process, causing Pentaho scripts to stop working.

Thank you

If you're using kubectl port-forward for this, I found an issue in the K8s issue queue that may be relevant. It appears that port-forwarding can get into an error state when it carries large amounts of data. Does that issue match what you're seeing?

Hi @sujoy-bhattacharya

I've run into this problem before, when we needed to export a database with a lot of data and import it into another cluster or database. Port-forward is not a good fit for this: it is meant for development and testing, and it does not keep a connection stable while large amounts of data are transferred. I recommend building a Docker image with a Python script that handles the export and import. For the export, the pod dumps the database and uploads the dump to S3 or MinIO. For the import, the pod downloads the dump from storage and loads it into the target database. You can control which step runs, and against which database and bucket, with environment variables. I hope this helps.
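To make the idea concrete, here is a minimal sketch of what such a script could look like. It is not the actual image described above, just one way to do it under some assumptions: the `pg_dump` and `pg_restore` binaries and the `boto3` package are installed in the image, the database password is passed through the standard `PGPASSWORD` variable, and S3 credentials come from boto3's usual environment variables. The names `MODE`, `DUMP_BUCKET`, `DUMP_KEY`, `DUMP_PATH`, and `S3_ENDPOINT_URL` are made up for this example.

```python
import os
import subprocess


def load_config(env=os.environ):
    """Read settings from environment variables.

    MODE, DUMP_* and S3_ENDPOINT_URL are hypothetical names chosen for this
    sketch; PGHOST, PGPORT, PGUSER and PGDATABASE mirror libpq's variables.
    """
    return {
        "mode": env.get("MODE"),  # "export" or "import"
        "host": env.get("PGHOST", "localhost"),
        "port": env.get("PGPORT", "5432"),
        "user": env.get("PGUSER", "postgres"),
        "dbname": env.get("PGDATABASE", "postgres"),
        "bucket": env.get("DUMP_BUCKET", "db-dumps"),
        "key": env.get("DUMP_KEY", "dump.pgcustom"),
        "path": env.get("DUMP_PATH", "/tmp/dump.pgcustom"),
        "endpoint": env.get("S3_ENDPOINT_URL"),  # set this for MinIO
    }


def build_dump_cmd(cfg):
    """pg_dump in custom format, which pg_restore can read back."""
    return [
        "pg_dump", "--format=custom",
        "--host", cfg["host"], "--port", cfg["port"],
        "--username", cfg["user"], "--dbname", cfg["dbname"],
        "--file", cfg["path"],
    ]


def build_restore_cmd(cfg):
    """pg_restore of the downloaded dump into the target database."""
    return [
        "pg_restore", "--no-owner",
        "--host", cfg["host"], "--port", cfg["port"],
        "--username", cfg["user"], "--dbname", cfg["dbname"],
        cfg["path"],
    ]


def s3_client(cfg):
    """Create an S3 client; endpoint_url=None means plain AWS S3."""
    import boto3  # imported lazily so the helpers above work without it
    return boto3.client("s3", endpoint_url=cfg["endpoint"])


def main():
    cfg = load_config()
    if cfg["mode"] == "export":
        # The subprocess inherits PGPASSWORD from the pod's environment.
        subprocess.run(build_dump_cmd(cfg), check=True)
        s3_client(cfg).upload_file(cfg["path"], cfg["bucket"], cfg["key"])
    elif cfg["mode"] == "import":
        s3_client(cfg).download_file(cfg["bucket"], cfg["key"], cfg["path"])
        subprocess.run(build_restore_cmd(cfg), check=True)
    else:
        raise SystemExit("MODE must be 'export' or 'import'")


# Only run when MODE is set explicitly, so importing or dry-running is harmless.
if __name__ == "__main__" and "MODE" in os.environ:
    main()
```

Run as a Kubernetes Job inside each cluster, the script talks to PostgreSQL over the cluster network, so no port-forward is involved. You would run it once with `MODE=export` next to the source database and once with `MODE=import` next to the target.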