gopi m:

I tried another exercise, described at https://kubernetes.io/docs/tasks/administer-cluster/declare-network-policy/, and here too, after I apply the network policy, busybox (without the access=true label) still gets a proper response instead of timing out. @unnivkn, any suggestions? I'm not sure where it is going wrong. The network policy itself looks fine:

$ kubectl describe networkpolicy access-nginx

Name:         access-nginx
Namespace:    default
Created on:   2021-12-30 07:37:21 +0000 UTC
Labels:       <none>
Annotations:  <none>
Spec:
  PodSelector: app=nginx
  Allowing ingress traffic:
    To Port: <any> (traffic allowed to all ports)
    From:
      PodSelector: access=true
  Not affecting egress traffic
  Policy Types: Ingress
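For comparison, here is what a policy like the one above is supposed to do, as a minimal Python sketch. It is a simplified, hypothetical model (the names `IngressPolicy`, `matches` and `ingress_allowed`, and the busybox labels, are made up for illustration; namespaces, ports and ipBlock rules are ignored): a pod selected by a policy's podSelector becomes isolated for ingress, and only sources matching one of its `from` selectors get through. Nothing enforces this unless the cluster's network plugin implements NetworkPolicy.

```python
from dataclasses import dataclass
from typing import Dict, List

Labels = Dict[str, str]


@dataclass
class IngressPolicy:
    # Hypothetical, simplified model: one namespace, podSelector rules only.
    pod_selector: Labels          # spec.podSelector.matchLabels
    from_selectors: List[Labels]  # spec.ingress[].from[].podSelector.matchLabels


def matches(selector: Labels, labels: Labels) -> bool:
    # matchLabels semantics: every key/value pair in the selector must be on the pod.
    # An empty selector matches every pod.
    return all(labels.get(key) == value for key, value in selector.items())


def ingress_allowed(policies: List[IngressPolicy], src: Labels, dst: Labels) -> bool:
    selecting = [p for p in policies if matches(p.pod_selector, dst)]
    if not selecting:
        # No policy selects the destination pod, so it is not isolated for ingress.
        return True
    # The destination is isolated: the source must match a "from" rule of some selecting policy.
    return any(matches(sel, src) for p in selecting for sel in p.from_selectors)


# Model of the access-nginx policy from the describe output.
access_nginx = IngressPolicy(pod_selector={"app": "nginx"}, from_selectors=[{"access": "true"}])
nginx_pod = {"app": "nginx"}

print(ingress_allowed([access_nginx], {"run": "busybox"}, nginx_pod))                    # False
print(ingress_allowed([access_nginx], {"run": "busybox", "access": "true"}, nginx_pod))  # True
```

The model only describes the intended outcome; a policy that looks correct but is not enforced by the network plugin behaves exactly like no policy at all.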

Ayush Jain:

Do you have a network plugin that supports NetworkPolicies?

unnivkn:

Hi @gopi m, as Ayush mentioned, which network CNI plugin are you using?

gopi m:

Thanks @Ayush Jain and @unnivkn. Initially there was none, so I installed Weave and even reloaded the systemd daemon and restarted kubelet:

systemctl daemon-reload && systemctl restart kubelet

However, that had no effect. When I grep as below, I see --network-plugin=kubenet rather than cni (as seen in the video). The Weave binary is present in the folder given by --cni-bin-dir=/home/kubernetes/bin, and the Weave pod is running.

ps -aux | grep kubelet | grep -i network
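When the full `ps` line is long, pulling out just the kubelet flags makes it easier to compare against the video. A minimal Python sketch, assuming flags are written as `--name=value` or `--name value`; the helper `kubelet_flags` and the sample command line are hypothetical, and only `--network-plugin=kubenet` and `--cni-bin-dir=/home/kubernetes/bin` come from this thread:

```python
import shlex
from typing import Dict


def kubelet_flags(cmdline: str) -> Dict[str, str]:
    """Parse --name=value and --name value flags from a kubelet command line."""
    tokens = shlex.split(cmdline)  # respects quoted values
    flags: Dict[str, str] = {}
    i = 0
    while i < len(tokens):
        token = tokens[i]
        if token.startswith("--"):
            name, sep, value = token[2:].partition("=")
            if sep:
                flags[name] = value              # --name=value
            elif i + 1 < len(tokens) and not tokens[i + 1].startswith("-"):
                flags[name] = tokens[i + 1]      # --name value
                i += 1
            else:
                flags[name] = "true"             # bare boolean flag
        i += 1
    return flags


# Hypothetical, abbreviated kubelet command line (only the two flags are from the thread).
line = "kubelet --network-plugin=kubenet --cni-bin-dir=/home/kubernetes/bin"
flags = kubelet_flags(line)
if flags.get("network-plugin") != "cni":
    print(f"kubelet uses --network-plugin={flags.get('network-plugin')}, not cni")
print(flags.get("cni-bin-dir"))
```

The `--name value` branch is a heuristic: it would misread a positional argument that happens to follow a boolean flag.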

Anything else I should inspect?

unnivkn:

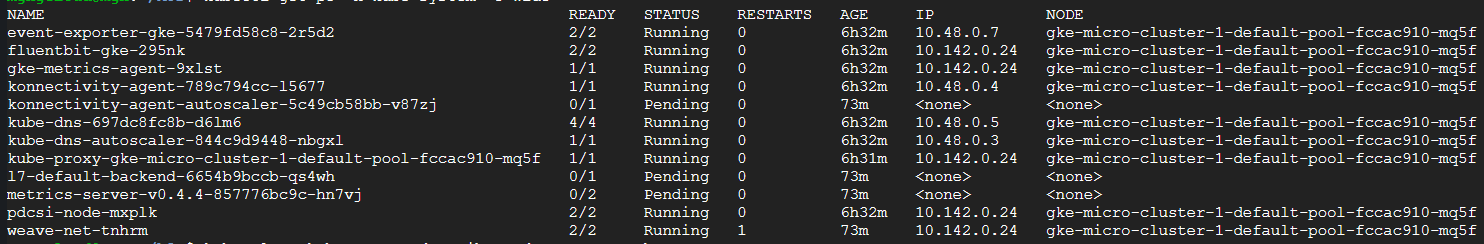

k get po -n kube-system -o wide

gopi m:

$ kubectl get po -n kube-system -o wide

NAME                                                        READY   STATUS    RESTARTS   AGE     IP            NODE                                             NOMINATED NODE   READINESS GATES
event-exporter-gke-5479fd58c8-2r5d2                         2/2     Running   0          6h32m   10.48.0.7     gke-micro-cluster-1-default-pool-fccac910-mq5f   <none>           <none>
fluentbit-gke-295nk                                         2/2     Running   0          6h32m   10.142.0.24   gke-micro-cluster-1-default-pool-fccac910-mq5f   <none>           <none>
gke-metrics-agent-9xlst                                     1/1     Running   0          6h32m   10.142.0.24   gke-micro-cluster-1-default-pool-fccac910-mq5f   <none>           <none>
konnectivity-agent-789c794cc-l5677                          1/1     Running   0          6h32m   10.48.0.4     gke-micro-cluster-1-default-pool-fccac910-mq5f   <none>           <none>
konnectivity-agent-autoscaler-5c49cb58bb-v87zj              0/1     Pending   0          73m     <none>        <none>                                           <none>           <none>
kube-dns-697dc8fc8b-d6lm6                                   4/4     Running   0          6h32m   10.48.0.5     gke-micro-cluster-1-default-pool-fccac910-mq5f   <none>           <none>
kube-dns-autoscaler-844c9d9448-nbgxl                        1/1     Running   0          6h32m   10.48.0.3     gke-micro-cluster-1-default-pool-fccac910-mq5f   <none>           <none>
kube-proxy-gke-micro-cluster-1-default-pool-fccac910-mq5f   1/1     Running   0          6h31m   10.142.0.24   gke-micro-cluster-1-default-pool-fccac910-mq5f   <none>           <none>
l7-default-backend-6654b9bccb-qs4wh                         0/1     Pending   0          73m     <none>        <none>                                           <none>           <none>
metrics-server-v0.4.4-857776bc9c-hn7vj                      0/2     Pending   0          73m     <none>        <none>                                           <none>           <none>
pdcsi-node-mxplk                                            2/2     Running   0          6h32m   10.142.0.24   gke-micro-cluster-1-default-pool-fccac910-mq5f   <none>           <none>
weave-net-tnhrm                                             2/2     Running   1          73m     10.142.0.24   gke-micro-cluster-1-default-pool-fccac910-mq5f   <none>           <none>
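Reading the listing: every pod that has been scheduled runs on the same node, gke-micro-cluster-1-default-pool-fccac910-mq5f, and three pods (konnectivity-agent-autoscaler, l7-default-backend, metrics-server) are Pending with no node assigned. A small Python sketch that extracts those two facts from saved `kubectl get po -o wide` text; the helper `summarize_pods` is hypothetical, and it assumes every column value is a single whitespace-free token, as in this output:

```python
from collections import Counter
from typing import Dict, List, Tuple


def summarize_pods(output: str) -> Tuple[Dict[str, int], List[Tuple[str, str]]]:
    """Return (pod count per node, [(pod, status)] for pods that are not Running)."""
    per_node: Counter = Counter()
    not_running: List[Tuple[str, str]] = []
    for line in output.strip().splitlines():
        tokens = line.split()
        if not tokens or tokens[0] == "NAME":
            continue  # skip blank lines and the header row
        # Columns: NAME READY STATUS RESTARTS AGE IP NODE NOMINATED-NODE READINESS-GATES
        name, status, node = tokens[0], tokens[2], tokens[6]
        if node != "<none>":
            per_node[node] += 1  # count only pods already scheduled onto a node
        if status != "Running":
            not_running.append((name, status))
    return dict(per_node), not_running


# A few rows copied from the output in this thread.
SAMPLE = """
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
kube-dns-697dc8fc8b-d6lm6 4/4 Running 0 6h32m 10.48.0.5 gke-micro-cluster-1-default-pool-fccac910-mq5f <none> <none>
l7-default-backend-6654b9bccb-qs4wh 0/1 Pending 0 73m <none> <none> <none> <none>
weave-net-tnhrm 2/2 Running 1 73m 10.142.0.24 gke-micro-cluster-1-default-pool-fccac910-mq5f <none> <none>
"""

nodes, pending = summarize_pods(SAMPLE)
print(len(nodes), "node(s):", nodes)
print("not running:", pending)
```

On a live cluster, `kubectl get nodes` answers the node-count question directly; a parser like this only helps when all you have is pasted output.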

unnivkn:

How many nodes are in this cluster?