Salathiel Genèse YIMGA YIMGA:

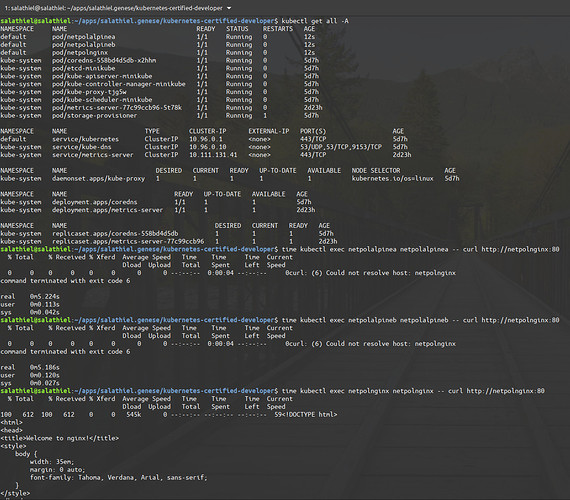

I fail to see why I cannot access the pod netpolnginx from the pod netpolalpinea.

Hinodeya:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: netpol
spec:
  policyTypes:
    - Ingress
  podSelector:
    matchLabels:
      pod: netpolnginx
  ingress:
    - ports:
        - port: 80
      from:
        - podSelector:
            matchLabels:
              pod: netpolalpinea
        - podSelector:
            matchLabels:
              pod: netpolalpineb
```

Try this, and put the IP addresses of the pods into your curl command: you have a DNS issue, probably caused by your command.

Why do you have the name of the pod twice in your command?

As noted, it's not mandatory to set policyTypes in this context, so you can drop the policyTypes line as well.

Another approach is to check the DNS entry, or to add port 53 on the service for both TCP and UDP.
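Port 53 only comes into play once a policy restricts egress from the client pods; at that point DNS lookups must be allowed over both UDP and TCP, otherwise name resolution fails even though HTTP would be permitted. A minimal sketch, assuming the client pod carries the label pod: netpolalpinea and the server carries pod: netpolnginx as in the policy above; the policy name netpol-egress is hypothetical:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: netpol-egress          # hypothetical name
spec:
  podSelector:
    matchLabels:
      pod: netpolalpinea       # applies to the client pod
  policyTypes:
    - Egress
  egress:
    # DNS: port 53 over both UDP and TCP, to any destination
    - ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
    # HTTP to the nginx pod only
    - to:
        - podSelector:
            matchLabels:
              pod: netpolnginx
      ports:
        - protocol: TCP
          port: 80
```

Allowing port 53 to any destination keeps the sketch independent of how the cluster DNS pods are labelled; it can be narrowed later with a namespaceSelector and podSelector for the DNS pods.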

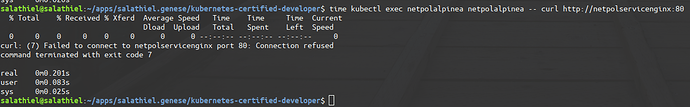

Check DNS in the pod as well:

```
kubectl exec -it netpolalpinea -- cat /etc/resolv.conf
nameserver 10.96.0.10
search default.svc.cluster.local svc.cluster.local cluster.local
```

In your screenshot I don't see any service exposed.

You can create one at the same time as the pod with this command:

```shell
kubectl run netpolnginx --image=nginx --port 80 --expose
```
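Alternatively, if the nginx pod already exists, a Service can be declared for it directly. A minimal sketch, assuming the pod carries the label pod: netpolnginx that the NetworkPolicy selects on, and that everything runs in the default namespace; the Service name is also an assumption:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: netpolnginx            # assumed name; this becomes the DNS name
spec:
  selector:
    pod: netpolnginx           # must match the nginx pod's label
  ports:
    - protocol: TCP
      port: 80                 # port exposed by the Service
      targetPort: 80           # port nginx listens on in the pod
```

From netpolalpinea, curl http://netpolnginx should then resolve through the default.svc.cluster.local search domain listed in /etc/resolv.conf.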

Correct that as well:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: netpolalpineb
  labels:
    pod: netpolalpineb
spec:
  containers:
    - name: netpolalpineb
      image: alpine
      command:
        - /bin/sh   # add /bin/sh here
        - -c
        - apk add --no-cache curl && sleep 3600
```

You can also follow this guide for another test:

https://kubernetes.io/docs/tasks/administer-cluster/declare-network-policy/

Salathiel Genèse YIMGA YIMGA:

I'm not sure I understand what the problem is or how to solve it.

Salathiel Genèse YIMGA YIMGA:

[UPDATE]

I tried a lot of things, starting with minikube start --network-plugin=cni --enable-default-cni, because they say the minikube environment doesn't have a network plugin enabled by default.

With that, I could enforce the NetworkPolicy, but only when addressing the nginx pod by its IP address.

Salathiel Genèse YIMGA YIMGA:

So my problem has shifted to a different area.

Salathiel Genèse YIMGA YIMGA:

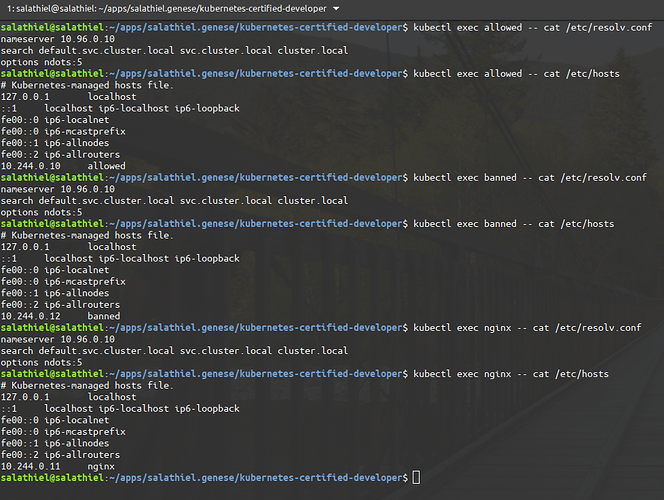

Checking the /etc/hosts of my pods, I noticed they only have an entry for themselves:

• nginx -> nginx

• banned -> banned

• allowed -> allowed

And there is no entry such as nginx.pod.cluster.local or any of its variants.
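Pods do not get DNS records under their own names by default; a pod's A record, where the DNS server serves one, uses the dashed-IP form (for example 10-244-0-5.default.pod.cluster.local). One documented way to give a pod a stable name is a headless Service combined with the pod's hostname and subdomain fields, where the subdomain matches the Service name. A sketch, assuming the default namespace and the pod: netpolnginx label; the Service name netpol-sub is hypothetical:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: netpol-sub             # hypothetical; must equal the pod's subdomain
spec:
  clusterIP: None              # headless: DNS returns pod IPs directly
  selector:
    pod: netpolnginx
  ports:
    - port: 80
---
apiVersion: v1
kind: Pod
metadata:
  name: netpolnginx
  labels:
    pod: netpolnginx           # matched by the NetworkPolicy and the Service
spec:
  hostname: netpolnginx
  subdomain: netpol-sub        # ties the pod to the headless Service above
  containers:
    - name: nginx
      image: nginx
      ports:
        - containerPort: 80
```

With both applied, the pod should be reachable as netpolnginx.netpol-sub.default.svc.cluster.local. An existing pod has to be recreated for this, since hostname and subdomain cannot be changed on a running pod.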

Salathiel Genèse YIMGA YIMGA:

I've gone through a lot of GitHub issues about this; most of them are dead ends.

Salathiel Genèse YIMGA YIMGA:

But a few do suggest that, from some version onward, minikube has been using kube-dns AND NOT CoreDNS.

Salathiel Genèse YIMGA YIMGA:

https://coredns.io/2017/04/28/coredns-for-minikube/

https://github.com/kubernetes/minikube/issues/2302

https://medium.com/@atsvetkov906090/enable-network-policy-on-minikube-f7e250f09a14

Salathiel Genèse YIMGA YIMGA:

https://kubernetes.io/docs/tasks/administer-cluster/dns-debugging-resolution/

Hinodeya:

@Salathiel Genèse YIMGA YIMGA OK, cool. Next time, don't forget to mention that you're using minikube when you describe a problem; it's really useful for people who are trying to help you, like me today.

Salathiel Genèse YIMGA YIMGA:

Well noted, thank you.