How does Helm attach pods to deployments and services?

In the Kubernetes beginner course, we learned that deployments and services use labels to identify their pods. In the Helm course, we create templates for deployments and services with constant label values; that is, those values are not templated with something like `{{ .Release.Name }}`.

When we create different releases of the same chart, how are the pods attached to their respective deployments and services? Looking at the labs, I didn’t see any additional label in the selector of the services or deployments. Am I missing something? Is this explained later in the course? I just finished the “Writing a helm chart” lab.

Helm doesn’t “attach pods to services”. It merely renders the templates into complete manifests and sends those to Kubernetes.

You can see what the rendered templates look like by running:

```
helm template chart > rendered.yaml
```

Replace `chart` with the path to the chart in question, then examine the `rendered.yaml` file that is created.

For example, in the Helm lab you refer to:

```
controlplane ~ ➜ helm template ./hello-world > rendered.yaml

controlplane ~ ➜ cat rendered.yaml
---
# Source: hello-world/templates/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: release-name-hello-world
spec:
  type: ClusterIP
  ports:
    - port: 80
      targetPort: http
      protocol: TCP
      name: http
  selector:
    app: hello-world
---
# Source: hello-world/templates/deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: release-name-hello-world
  labels:
    app: hello-world
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hello-world
  template:
    metadata:
      labels:
        app: hello-world
    spec:
      containers:
        - name: hello-world
          image: "nginx:1.16.0"
          imagePullPolicy: IfNotPresent
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /
              port: http
          readinessProbe:
            httpGet:
              path: /
              port: http
```

If you were now to run

```
kubectl create -f rendered.yaml
```

then the same resources would be created. They just wouldn’t be managed by Helm.

Hi Alistair, thanks for your quick response!

Let me try to explain my question better.

Let’s say I run `helm install release1 chart`, which creates deployment1 and pod1. Then I run `helm install release2 chart`, which creates deployment2 and pod2.

If I run `kubectl describe` on the deployments and look at the selectors, they are the same for deployment1 and deployment2, something like `label: myapp`.

My question is: how does Kubernetes know that deployment1 manages pod1 and deployment2 manages pod2 if the labels and selectors seem to be the same?

Is it clearer now? Thanks in advance.

If you installed both into the same namespace and the pods of both installations have the same labels, then both services will attach to all the pods from both deployments, which is not what you want.

Templates should also be used to generate labels that are unique to each deployment.

Thanks, Alistair! I did the lab again and indeed the services attach to all the pods from both deployments, which is unwanted but explicable behaviour.

However, when it comes to the deployments, I tried scaling up and down and it worked as “wanted”: when I changed the release1 deployment, only the pods belonging to that release were created or destroyed, and the same for release2.

I was expecting random behaviour, for instance, scaling down the release2 deployment and Kubernetes destroying a pod from the release1 deployment, since the selectors are the same.

I recorded a video if you want to see it: Screen Recording 2024-04-01 at 17.58.26.mov - Google Drive

Recall that a deployment operates by managing a replicaset.

If you look at the underlying replicaset for each of those deployments, you will see that the replicaset’s selector, which is what actually selects the pods, includes the `pod-template-hash` label.

The deployment’s selector actually selects the replicaset, which in turn controls the pods.

Now, in practice you would not normally deploy two instances of the same chart in the same namespace, so you wouldn’t get this clash on the service. However, if you wanted to make the two instances completely separate from each other, you would have to add a computed label to both the pod template in the deployment and the service’s selector. A good choice for the label value is the Helm release name. The label name is of no consequence, as long as it’s the same in the deployment and service manifests. For example,

```yaml
instance: {{ .Release.Name }}
```

added to the labels and selectors would fix it.

This would give the label the values webapp1 and webapp2, one per release, and ensure each service connects to the correct pods.
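A minimal sketch of what the two templates might look like with the computed label added. It assumes the chart follows the `hello-world` layout rendered earlier; the label name `instance` and the `{{ .Release.Name }}-{{ .Chart.Name }}` naming pattern are illustrative choices, not necessarily what the lab’s chart uses.

```yaml
# templates/deployment.yaml (sketch; the label name "instance" is an arbitrary choice)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}-{{ .Chart.Name }}
  labels:
    app: hello-world
    instance: {{ .Release.Name }}
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hello-world
      instance: {{ .Release.Name }}   # must match the pod template labels below
  template:
    metadata:
      labels:
        app: hello-world
        instance: {{ .Release.Name }} # each release's pods get their own value
    spec:
      containers:
        - name: hello-world
          image: "nginx:1.16.0"
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
---
# templates/service.yaml (sketch)
apiVersion: v1
kind: Service
metadata:
  name: {{ .Release.Name }}-{{ .Chart.Name }}
spec:
  type: ClusterIP
  ports:
    - port: 80
      targetPort: http
      protocol: TCP
      name: http
  selector:
    app: hello-world
    instance: {{ .Release.Name }}     # only pods from this release match
```

Charts generated by `helm create` follow the same idea using the `app.kubernetes.io/instance: {{ .Release.Name }}` label. Note that a Deployment’s `spec.selector` is immutable once created, so adding the label to `matchLabels` of an already installed release would make `helm upgrade` fail; it is easiest to include it from the first install.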

Thanks again, Alistair! I understand that this is not usual practice, but to be honest, I still don’t understand how it works.

Looking at the pod description, I can see the “Controlled By” property with the name of the right replica set. Looking at the description of the replica set, I see the pod-template-hash label. But when I create multiple replica sets, the hash value is the same for all pods.

I’m wondering if Kubernetes uses another property to link the pod to the replica set, such as this “Controlled By” in the pod description.

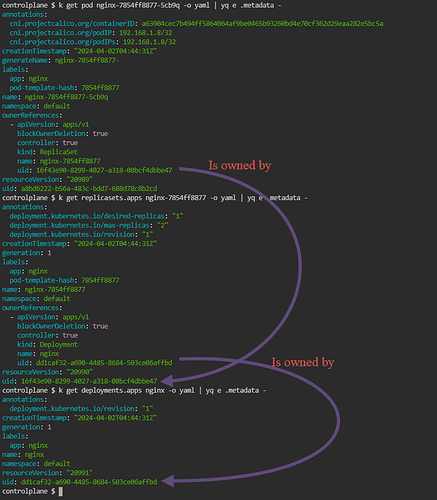

Any resource that is “owned” by another resource has an `ownerReferences` entry in its metadata that refers to the resource that owns it; that is what appears as “Controlled By” in the `describe` output.

Here is the ownership chain:

When a deployment is created, it creates a replicaset, and the replicaset then creates pods. When you delete the deployment (or any resource that “controls” something), the controlled objects are deleted as well. This is a “cascading delete”: deleting the deployment deletes the replicaset, which deletes the pods.
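As an illustration of the first link in that chain, the replicaset’s own metadata carries an `ownerReferences` entry pointing at the deployment, roughly as it would appear in `kubectl get rs <name> -o yaml`. The names and UID below are hypothetical placeholders. Kubernetes’ garbage collector follows these references, matched by `uid`, to carry out the cascading delete.

```yaml
# ReplicaSet metadata (fragment; names and uid are hypothetical)
metadata:
  name: release1-hello-world-5d8f9c7b6d   # <deployment name>-<pod-template-hash>
  ownerReferences:
    - apiVersion: apps/v1
      kind: Deployment
      name: release1-hello-world
      uid: 33333333-3333-3333-3333-333333333333
      controller: true          # the deployment is this replicaset's managing controller
      blockOwnerDeletion: true
```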

A service does not control anything. The relationship is entirely selector → labels, so a service will connect to ALL pods that have labels matching the service’s selector.

Note the selector for the replicaset:

```yaml
matchLabels:
  app: nginx
  pod-template-hash: 7854ff8877
```

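The `pod-template-hash` label is added by the deployment controller to the replicaset’s selector and pod template, so every pod the replicaset creates carries it. However, as you noticed, two releases of the same chart render identical pod templates and therefore get the same hash, so labels alone still cannot tell their pods apart. What keeps each replicaset to its own pods is the ownerReference: a replicaset only counts, and scales down, the pods whose controlling ownerReference points at it, and it ignores matching pods that are already controlled by another replicaset. That is why scaling the deployment works the way you have seen.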
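To make this concrete, here is a sketch of the relevant metadata of two pods, one from each release, as it might appear in `kubectl get pod <name> -o yaml`. The pod names, hash and UIDs are hypothetical placeholders. The labels are identical, so no selector can tell the pods apart; only the `ownerReferences` entry (shown as “Controlled By” in `kubectl describe`) differs.

```yaml
# Pod created for release1 (fragment; names, hash and uid are hypothetical)
metadata:
  name: release1-hello-world-5d8f9c7b6d-abcde
  labels:
    app: hello-world
    pod-template-hash: 5d8f9c7b6d      # same template, so same hash in both releases
  ownerReferences:
    - apiVersion: apps/v1
      kind: ReplicaSet
      name: release1-hello-world-5d8f9c7b6d
      uid: 11111111-1111-1111-1111-111111111111
      controller: true                 # the replicaset that manages this pod
      blockOwnerDeletion: true
---
# Pod created for release2 (fragment)
metadata:
  name: release2-hello-world-5d8f9c7b6d-fghij
  labels:
    app: hello-world
    pod-template-hash: 5d8f9c7b6d      # identical labels to the release1 pod
  ownerReferences:
    - apiVersion: apps/v1
      kind: ReplicaSet
      name: release2-hello-world-5d8f9c7b6d
      uid: 22222222-2222-2222-2222-222222222222
      controller: true
      blockOwnerDeletion: true
```

When release2’s replicaset is scaled down, it only considers pods whose controlling reference carries its own `uid`, so the release1 pod is never a candidate. A Service has no such owner relationship, which is why it selects both pods.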

Everything that is happening is 100% to do with Kubernetes and 0% to do with Helm.

Helm is just a way of applying a lot of resources all together, much like `kubectl apply`.

Great! Now I understand, Alistair! Thank you so much!