Sameer Bhatia:

Hi, can someone help answer which controller or control plane component actually assigns a ClusterIP to a Service object from the service-cluster-ip-range parameter of the kube-apiserver? Likewise, which controller actually creates the Endpoints object when the following YAML is applied:

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
    spec:
      selector:
        app.kubernetes.io/name: nginx-proxy
      ports:
        - name: service-port
          protocol: TCP
          port: 8080
          targetPort: 80
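
As a rough illustration of what building the endpoint list for this Service involves, here is a minimal Python sketch of equality-based selector matching. The `Pod` type, pod names and pod IPs are hypothetical stand-ins, not Kubernetes API types or real cluster values. It keeps ready pods whose labels contain every selector key/value pair and pairs each pod IP with `targetPort`. Dropping not-ready pods is a simplification: Kubernetes tracks them separately rather than discarding them.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


# Hypothetical, simplified stand-in for pod data (NOT the Kubernetes API type).
@dataclass
class Pod:
    name: str
    labels: Dict[str, str]
    ip: Optional[str]  # None until the pod has been given an address
    ready: bool = True


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based match: every selector key must be present with the same value."""
    return all(labels.get(k) == v for k, v in selector.items())


def build_endpoints(selector: Dict[str, str], target_port: int,
                    pods: List[Pod]) -> List[Tuple[str, int]]:
    """Return (pod IP, targetPort) pairs for ready, addressed pods matching the selector."""
    return [
        (pod.ip, target_port)
        for pod in pods
        if pod.ip is not None and pod.ready and matches(selector, pod.labels)
    ]


if __name__ == "__main__":
    selector = {"app.kubernetes.io/name": "nginx-proxy"}  # selector from the Service above
    pods = [
        Pod("nginx-a", {"app.kubernetes.io/name": "nginx-proxy"}, "10.244.1.5"),
        Pod("nginx-b", {"app.kubernetes.io/name": "nginx-proxy", "tier": "edge"}, "10.244.2.7"),
        Pod("other", {"app.kubernetes.io/name": "something-else"}, "10.244.1.9"),
        Pod("nginx-pending", {"app.kubernetes.io/name": "nginx-proxy"}, None),
    ]
    print(build_endpoints(selector, 80, pods))
    # [('10.244.1.5', 80), ('10.244.2.7', 80)]
```

Extra labels on a pod (like `tier` above) do not prevent a match; only the selector's own keys are checked.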

Ajay Shahi:

kube-proxy, I guess…

Nathan Perkins:

It’s weird that I can’t find any documentation to back this up.

Nathan Perkins:

I would have thought the CNI plugin does it, but none of the major CNIs mention it.

Nathan Perkins:

It sounds like it might be the kube-apiserver? You set service-cluster-ip-range there. That’s weird, though.

Nathan Perkins:

It looks like that’s only used for validation.

Nathan Perkins:

I searched the Kubernetes code for ServiceClusterIPRange and only saw it in validation code.
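
For context on the range itself, here is a minimal sketch of handing out ClusterIPs from a service CIDR like the one passed via service-cluster-ip-range. The `ClusterIPAllocator` class is hypothetical and is not kube-apiserver code: it keeps state in memory and picks the first free address, whereas a real allocator has to persist its state and may pick addresses randomly. The range check on a requested address shows where validation against the range fits in.

```python
import ipaddress
from typing import Optional


class ClusterIPAllocator:
    """Hypothetical first-free allocator over one service CIDR (illustration only).

    State lives in memory here; a real control plane has to persist
    allocations so they survive restarts and stay consistent.
    """

    def __init__(self, service_cidr: str) -> None:
        self.network = ipaddress.ip_network(service_cidr)  # e.g. "10.96.0.0/12"
        self.allocated = set()  # addresses currently handed out

    def allocate(self, requested: Optional[str] = None) -> str:
        """Reserve `requested` (a user-chosen clusterIP) or the first free host address."""
        if requested is not None:
            ip = ipaddress.ip_address(requested)
            # Range check: a requested address must fall inside the service CIDR.
            if ip not in self.network:
                raise ValueError(f"{ip} is not in {self.network}")
            if ip in self.allocated:
                raise ValueError(f"{ip} is already allocated")
            self.allocated.add(ip)
            return str(ip)
        # For a typical IPv4 range, hosts() skips the network and broadcast addresses.
        for ip in self.network.hosts():
            if ip not in self.allocated:
                self.allocated.add(ip)
                return str(ip)
        raise RuntimeError(f"service range {self.network} is exhausted")

    def release(self, ip: str) -> None:
        """Return an address to the pool, e.g. when its Service is deleted."""
        self.allocated.discard(ipaddress.ip_address(ip))


if __name__ == "__main__":
    alloc = ClusterIPAllocator("10.96.0.0/12")  # example range, not a required value
    print(alloc.allocate())              # 10.96.0.1
    print(alloc.allocate("10.96.0.10"))  # 10.96.0.10
    print(alloc.allocate())              # 10.96.0.2
```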

unnivkn:

Hi @Sameer Bhatia, FYR:

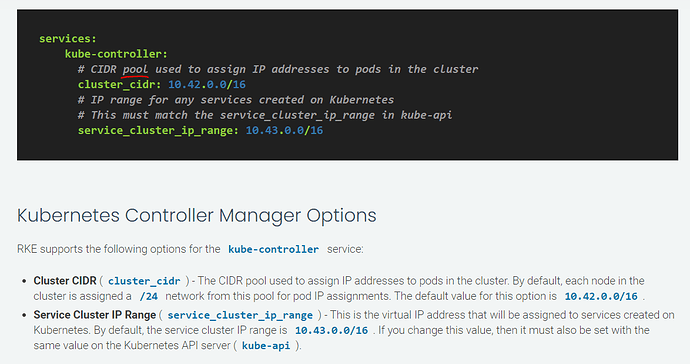

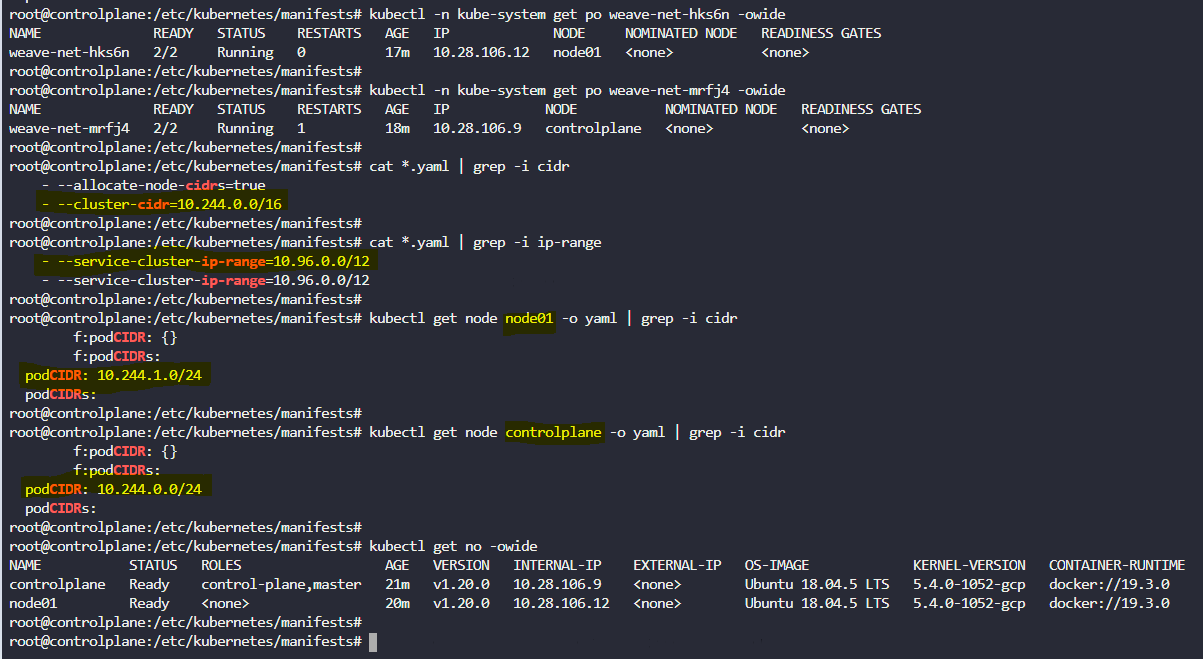

bushniel ashraf:

This is my understanding. The service CIDR is configured when creating the kube-apiserver static pod. One of the controllers in the controller manager is responsible for creating the ClusterIP when the manifest is applied. The kube-proxy is what sets up the iptables rules on each node for this setup to work, and any time a pod comes up or is deleted, kube-proxy is responsible for updating the iptables rules. The cluster CIDR is different from the service CIDR range: the cluster CIDR decides which IPs the pods get, and those pod IPs are assigned by the CNI.

In a nutshell, the controller manager is the brain, but the actual work is done by kube-proxy every time.
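
To make the kube-proxy part concrete, here is a hypothetical Python model of the per-node translation from ClusterIP:port to podIP:targetPort. It is not kube-proxy code. It mimics the cascading-probability idea that iptables-mode kube-proxy uses to spread traffic evenly: with n backends tried in order, rule i matches with probability 1/(n - i). The addresses are made up, with the ClusterIP taken from a service range and the pod IPs from a separate pod (cluster) CIDR; other proxy modes such as IPVS balance differently.

```python
import random
from typing import Dict, List, Optional, Tuple

Addr = Tuple[str, int]  # (IP, port)


def rule_probabilities(n: int) -> List[float]:
    """Match probability of each of n rules evaluated in order.

    Rule i (0-based) fires with probability 1/(n - i) for the packets that
    reach it, which gives every backend the same overall share of 1/n.
    """
    return [1.0 / (n - i) for i in range(n)]


def translate(service_map: Dict[Addr, List[Addr]], dst: Addr,
              rng: random.Random) -> Optional[Addr]:
    """DNAT-style rewrite of ClusterIP:port to podIP:targetPort (None if no endpoints)."""
    backends = service_map.get(dst, [])
    for backend, p in zip(backends, rule_probabilities(len(backends))):
        if rng.random() < p:  # the last rule has p == 1.0, so it always fires
            return backend
    return None  # unknown destination or a Service with no endpoints


if __name__ == "__main__":
    rng = random.Random(42)
    # Made-up addresses: ClusterIP from the service range, pod IPs from the pod CIDR.
    service_map = {("10.96.0.20", 8080): [("10.244.1.5", 80), ("10.244.2.7", 80)]}
    counts: Dict[Optional[Addr], int] = {}
    for _ in range(10_000):
        backend = translate(service_map, ("10.96.0.20", 8080), rng)
        counts[backend] = counts.get(backend, 0) + 1
    print(counts)  # an approximately even split between the two pod IPs
```

When a pod is added or removed, only the backend list for that ClusterIP changes, which is why the node-level rules have to be rewritten as pods come and go.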