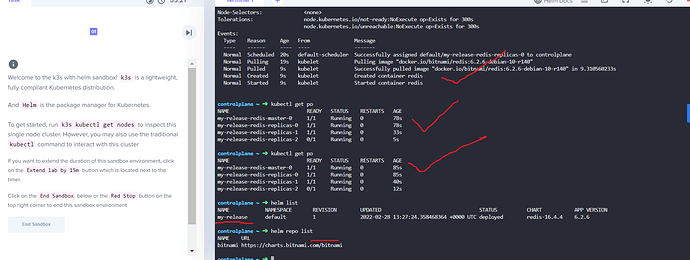

I started the Helm lab and tried to deploy a Helm chart. The pods deployed by the Helm chart cannot start and fail with the following error message:

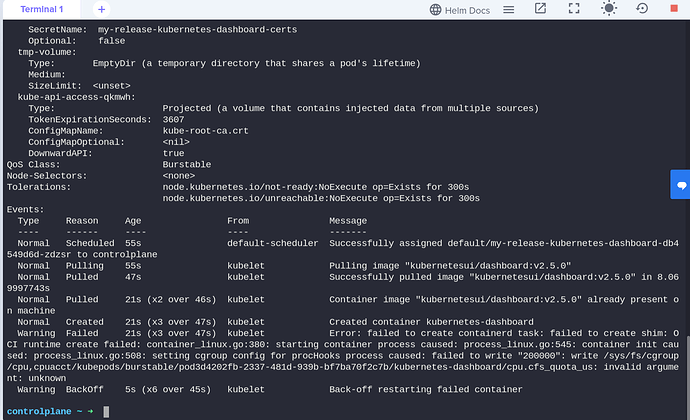

```
Normal Created 17s (x3 over 34s) kubelet Created container kubernetes-dashboard

Warning Failed 16s (x3 over 34s) kubelet Error: failed to create containerd task: failed to create shim: OCI runtime create failed: container_linux.go:380: starting container process caused: process_linux.go:545: container init caused: process_linux.go:508: setting cgroup config for procHooks process caused: failed to write "200000": write /sys/fs/cgroup/cpu,cpuacct/kubepods/burstable/pod591f08a1-c306-4cda-9d4a-4a564a5e8ed6/kubernetes-dashboard/cpu.cfs_quota_us: invalid argument: unknown

Warning BackOff 11s (x5 over 32s) kubelet Back-off restarting failed container
```

It was just a first test to get into the labs. Unfortunately, one of the first things I tried already failed.

How can I work around this?

Hello, @v.hoebel

Can you please tell me the lab name?

@Tej_Singh_Rana I just went to Labs → Helm and created a new one. It is destroyed automatically after 15 minutes, so I can't tell you the lab name because it is already gone. But the error is reproducible.

So it’s happening with all the Helm labs? @v.hoebel

Yes, that was the case for me when I tried it multiple times.

What do you mean by “gone already”?

Do you mean that the session has expired?

I'm not sure we are talking about the same “Lab”.

Maybe I should have written “Playgrounds”. Is it clearer now?

Yes, it’s clear now. I thought you were talking about the Helm course’s labs.

Thanks for the clarification.

Uf, I mixed things up. Thanks for looking into this issue!

We will check and update you.

Thanks for your patience.

Can you please tell me the chart name as well? @v.hoebel

I just deployed one chart and it’s working as expected.

Thanks for your feedback.

I tried this chart here:

Hey, @v.hoebel

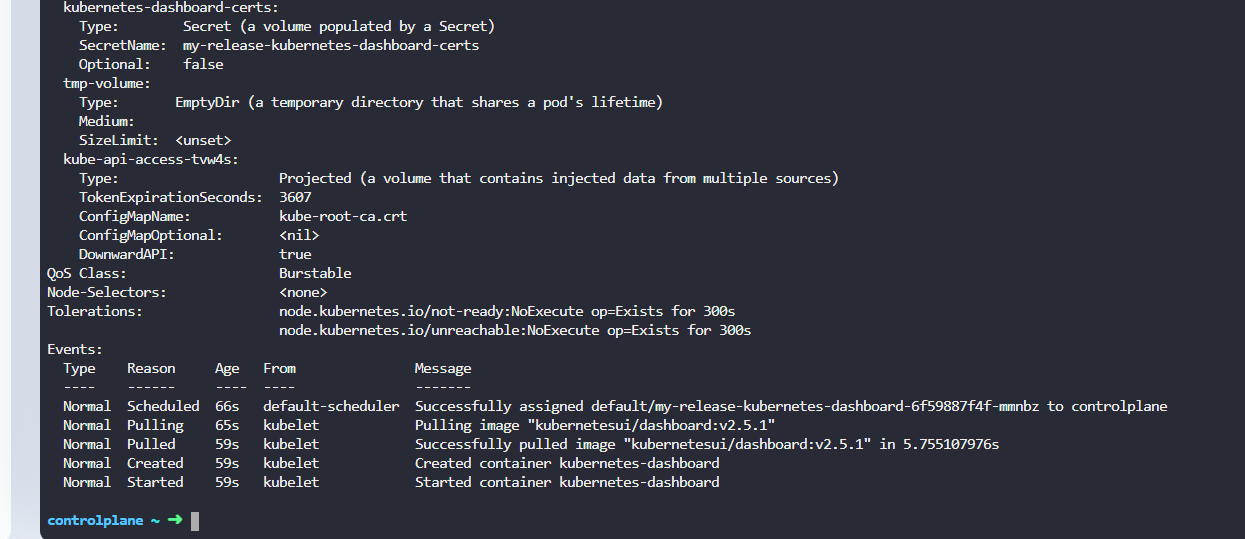

Please check now; we have fixed that issue.

Thanks for your patience.

Regards,

Thank you, @Tej_Singh_Rana! I will try this soon.