Hello, I have one more question.

I ran an etcd backup on my test cluster.

controlplane $ ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key snapshot save /var/lib/backup/etcd-snapshot.db

{"level":"info","ts":1735034490.8487248,"caller":"snapshot/v3_snapshot.go:68","msg":"created temporary db file","path":"/var/lib/backup/etcd-snapshot.db.part"}

{"level":"info","ts":1735034490.8639803,"logger":"client","caller":"v3/maintenance.go:211","msg":"opened snapshot stream; downloading"}

{"level":"info","ts":1735034490.8642037,"caller":"snapshot/v3_snapshot.go:76","msg":"fetching snapshot","endpoint":"https://127.0.0.1:2379"}

{"level":"info","ts":1735034491.159216,"logger":"client","caller":"v3/maintenance.go:219","msg":"completed snapshot read; closing"}

{"level":"info","ts":1735034491.1787996,"caller":"snapshot/v3_snapshot.go:91","msg":"fetched snapshot","endpoint":"https://127.0.0.1:2379","size":"7.6 MB","took":"now"}

{"level":"info","ts":1735034491.1788964,"caller":"snapshot/v3_snapshot.go:100","msg":"saved","path":"/var/lib/backup/etcd-snapshot.db"}

Snapshot saved at /var/lib/backup/etcd-snapshot.db

controlplane $ ETCDCTL_API=3 etcdctl --write-out=table snapshot status /var/lib/backup/etcd-snapshot.db

Deprecated: Use `etcdutl snapshot status` instead.

+----------+----------+------------+------------+
|   HASH   | REVISION | TOTAL KEYS | TOTAL SIZE |
+----------+----------+------------+------------+
| 70bf6bd2 |     3085 |       1368 |     7.6 MB |
+----------+----------+------------+------------+

Then I created a new test pod.

controlplane $ k run test-pod --image=nginx --restart=Never -- /bin/sh -c "sleep 3600"

pod/test-pod created

controlplane $ k get po

NAME       READY   STATUS    RESTARTS   AGE
test-pod   1/1     Running   0          13s

Then I restored the etcd backup, but I still see my test pod. I don't think this is right: if the backup had really been restored, a pod created after the snapshot should no longer exist.

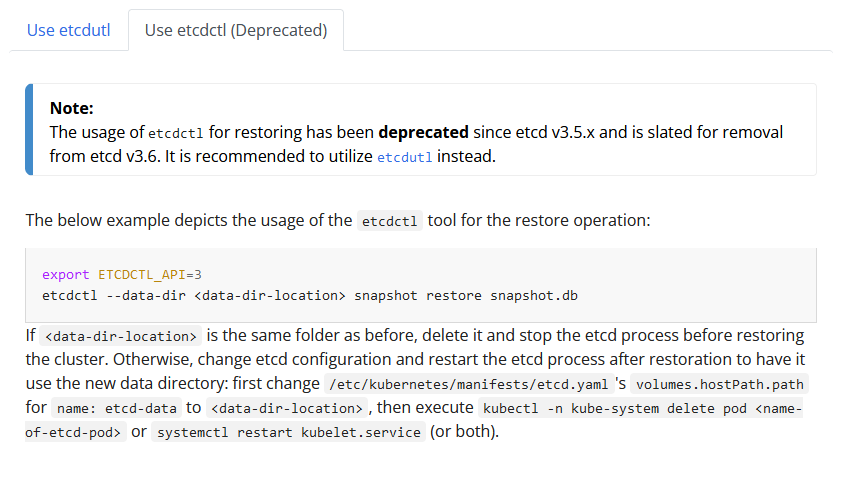

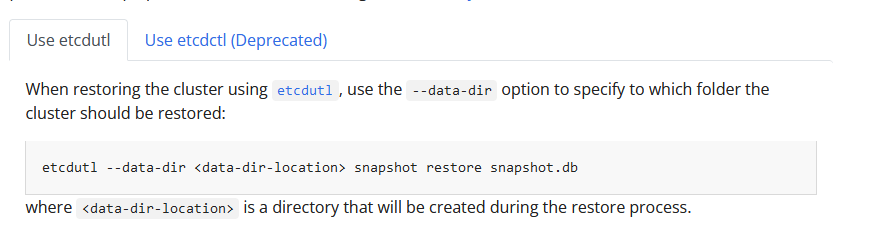

controlplane $ ETCDCTL_API=3 etcdctl snapshot restore /var/lib/backup/etcd-snapshot.db

Deprecated: Use `etcdutl snapshot restore` instead.

2024-12-24T10:05:28Z info snapshot/v3_snapshot.go:251 restoring snapshot {"path": "/var/lib/backup/etcd-snapshot.db", "wal-dir": "default.etcd/member/wal", "data-dir": "default.etcd", "snap-dir": "default.etcd/member/snap", "stack": "go.etcd.io/etcd/etcdutl/v3/snapshot.(*v3Manager).Restore\n\t/tmp/etcd-release-3.5.0/etcd/release/etcd/etcdutl/snapshot/v3_snapshot.go:257\ngo.etcd.io/etcd/etcdutl/v3/etcdutl.SnapshotRestoreCommandFunc\n\t/tmp/etcd-release-3.5.0/etcd/release/etcd/etcdutl/etcdutl/snapshot_command.go:147\ngo.etcd.io/etcd/etcdctl/v3/ctlv3/command.snapshotRestoreCommandFunc\n\t/tmp/etcd-release-3.5.0/etcd/release/etcd/etcdctl/ctlv3/command/snapshot_command.go:128\ngithub.com/spf13/cobra.(*Command).execute\n\t/home/remote/sbatsche/.gvm/pkgsets/go1.16.3/global/pkg/mod/github.com/spf13/[email protected]/command.go:856\ngithub.com/spf13/cobra.(*Command).ExecuteC\n\t/home/remote/sbatsche/.gvm/pkgsets/go1.16.3/global/pkg/mod/github.com/spf13/[email protected]/command.go:960\ngithub.com/spf13/cobra.(*Command).Execute\n\t/home/remote/sbatsche/.gvm/pkgsets/go1.16.3/global/pkg/mod/github.com/spf13/[email protected]/command.go:897\ngo.etcd.io/etcd/etcdctl/v3/ctlv3.Start\n\t/tmp/etcd-release-3.5.0/etcd/release/etcd/etcdctl/ctlv3/ctl.go:107\ngo.etcd.io/etcd/etcdctl/v3/ctlv3.MustStart\n\t/tmp/etcd-release-3.5.0/etcd/release/etcd/etcdctl/ctlv3/ctl.go:111\nmain.main\n\t/tmp/etcd-release-3.5.0/etcd/release/etcd/etcdctl/main.go:59\nruntime.main\n\t/home/remote/sbatsche/.gvm/gos/go1.16.3/src/runtime/proc.go:225"}

2024-12-24T10:05:28Z info membership/store.go:119 Trimming membership information from the backend...

2024-12-24T10:05:28Z info membership/cluster.go:393 added member {"cluster-id": "cdf818194e3a8c32", "local-member-id": "0", "added-peer-id": "8e9e05c52164694d", "added-peer-peer-urls": ["http://localhost:2380"]}

2024-12-24T10:05:28Z info snapshot/v3_snapshot.go:272 restored snapshot {"path": "/var/lib/backup/etcd-snapshot.db", "wal-dir": "default.etcd/member/wal", "data-dir": "default.etcd", "snap-dir": "default.etcd/member/snap"}

controlplane $ k get po

NAME       READY   STATUS    RESTARTS   AGE
test-pod   1/1     Running   0          2m12s

What is wrong with my sequence of steps? Thanks again.
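One observation, judging only from the restore log above: it reports `"data-dir": "default.etcd"`, a relative path, so the snapshot seems to have been unpacked into the current working directory while the running etcd kept using its own data directory. A minimal sketch of a restore that targets an explicit directory follows. It assumes a kubeadm-style control plane where etcd runs as a static pod; `/var/lib/etcd-restore` is a hypothetical directory name and `/etc/kubernetes/manifests/etcd.yaml` is an assumed manifest location, neither of which is confirmed by the output above. The script only prints the command (a dry run) so it can be reviewed before anything is executed.

```shell
#!/bin/sh
# Sketch only: all paths except SNAPSHOT are assumptions about a kubeadm setup.
SNAPSHOT=/var/lib/backup/etcd-snapshot.db      # snapshot path used in the post
RESTORE_DIR=/var/lib/etcd-restore              # hypothetical new data directory
MANIFEST=/etc/kubernetes/manifests/etcd.yaml   # assumed static pod manifest path

# Print the restore command instead of running it, so it can be checked first.
# --data-dir tells the restore where to write, instead of ./default.etcd.
restore_cmd() {
  echo "ETCDCTL_API=3 etcdctl snapshot restore $SNAPSHOT --data-dir=$RESTORE_DIR"
}

restore_cmd
# The running etcd would still need to be pointed at the restored directory.
echo "# next: set the etcd data hostPath in $MANIFEST to $RESTORE_DIR"
```

If the assumptions hold, running the printed command and then editing the hostPath in the manifest should cause the kubelet to recreate the etcd pod on the restored data, after which `k get po` would be expected to no longer list `test-pod`.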