I’m operating a Kubernetes cluster on three VMs within Google Cloud Platform (GCP), all of which are connected via a private network. This setup assigns internal IPs to the VMs. My objective is to make backend services, currently running as pods within the cluster, accessible from the public internet through a web browser. Given that MetalLB is not a viable option due to its dependency on external IPs and the fact that in my setup, external IPs are actually within the VMs’ internal IP address range, what methodologies are recommended to facilitate external access to these services?

I don’t know GCP so I cannot give you the exact steps.

However, there should be a package you can install in the cluster (likely with Helm) that provisions cloud load balancers for Ingress resources and for Services of type LoadBalancer.

When properly configured, the creation of ingress or loadbalancer service resources will trigger the creation of a cloud loadbalancer with endpoints in a public network and backends being your cluster nodes in the private network.

In GKE, creating a Service of type LoadBalancer automatically triggers the creation of a cloud load balancer. But here I am running my own cluster on plain VMs in GCP.

Yes, in GKE this functionality is enabled by default.

For non-GKE clusters, a load balancer controller should exist that can be installed into the cluster to perform the same function.

Alternatively, you should be able to install a standard ingress-nginx and set the ingress controller’s service to type NodePort. Then manually deploy a TCP load balancer and set its backend to connect to the cluster instances on the node port of the ingress service.

I was able to do this as below.

Created a Service of type NodePort with a defined port.

Created a GCP HTTP load balancer and configured its backend with the private-network VMs, using the port defined in the NodePort service.
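For anyone following along, the NodePort step above could look roughly like the sketch below. It builds the Service manifest as a Python dict and prints it as JSON, which `kubectl apply -f -` accepts. The service name `backend`, the `app: backend` selector, and the ports 80/8080/30080 are placeholders I made up, not values from this thread; the 30000-32767 check assumes the cluster uses the default node port range.

```python
import json


def nodeport_service(name, app_label, port, target_port, node_port):
    """Build a Service manifest of type NodePort as a plain dict."""
    # Assumes the default Kubernetes node port range (30000-32767).
    if not 30000 <= node_port <= 32767:
        raise ValueError("nodePort must be within 30000-32767")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            # Selects the backend pods by label (hypothetical label).
            "selector": {"app": app_label},
            "ports": [
                {
                    "protocol": "TCP",
                    "port": port,                # port inside the cluster
                    "targetPort": target_port,   # container port
                    "nodePort": node_port,       # port opened on every node
                }
            ],
        },
    }


# Placeholder values for illustration only.
manifest = nodeport_service("backend", "backend", 80, 8080, 30080)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be piped into `kubectl apply -f -`. The load balancer backend then points at port 30080 on each VM.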

This is how I am able to expose the services externally.

As you mentioned, I need to try this out with an ingress controller.

Yes you do, or it will get very expensive very quickly if you have a LB for every service!

With an ingress controller, you have only one NodePort service and therefore only one load balancer, and every Ingress you set up for other services will use it. Make sure you connect the load balancer to the node port of the ingress controller on all cluster nodes.
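To illustrate the "one load balancer, many services" idea, here is a minimal sketch that generates one Ingress per hostname, all handled by the same ingress controller. The hostnames (`api.example.com`, `shop.example.com`), the service names, and the `nginx` ingress class name are assumptions for the example; check what your ingress-nginx installation actually registers as its class.

```python
import json


def ingress_for(host, service_name, service_port, ingress_class="nginx"):
    """Build an Ingress that routes one hostname to one ClusterIP service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": f"{service_name}-ingress"},
        "spec": {
            # Assumed class name; it must match the installed controller.
            "ingressClassName": ingress_class,
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {
                                        "name": service_name,
                                        "port": {"number": service_port},
                                    }
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


# Hypothetical hostname -> (service, port) routes.
routes = {
    "api.example.com": ("api", 80),
    "shop.example.com": ("shop", 80),
}

# Wrap all Ingress objects in a single List so they can be applied together.
bundle = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [ingress_for(h, s, p) for h, (s, p) in routes.items()],
}
print(json.dumps(bundle, indent=2))
```

The backend services themselves can stay as plain ClusterIP services here; only the ingress controller's own service needs a node port.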

If you do it with a load balancer plus an ingress controller, the ingress controller has to handle any HTTPS certificates, so the load balancer must be a TCP (not HTTP) load balancer that passes the TLS traffic through unmodified.

If you can find the GCP load balancer ingress controller for non-GKE clusters (there must be one, because AWS has one), then that would automatically deploy HTTP load balancer and handle the certificates, and you would not require ingress-nginx. Additionally if you add or remove nodes from the cluster, connection/disconnection of these nodes with the load balancer is handled automatically.

Route all DNS names for services in the cluster to the load balancer public endpoints.

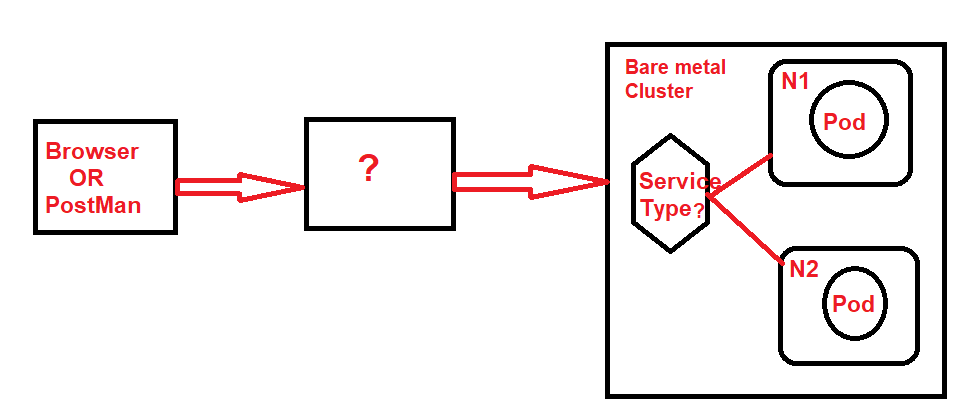

For now I have solved it with a GCP load balancer whose backend is the VMs, plus a service of type NodePort. There is one thing in your solution that confuses me: the ingress is part of the cluster, so how does external traffic reach the ingress controller?

Which resource do I need to use to route traffic from external sources to the ingress?

- “service” should be the service created by the installation of ingress-nginx, and that service should be of type NodePort. There is a setting in the ingress-nginx Helm chart to make the service be NodePort.

- “?” should be a cloud TCP load balancer, and its backend should be connected to the node port of the ingress controller’s service on all 3 nodes (that is, if the control plane is itself a node, which it is if you are running kubeadm).
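As a rough sketch of the two points above: to my understanding the ingress-nginx Helm chart exposes the service type as `controller.service.type` and fixed node ports as `controller.service.nodePorts.http` / `controller.service.nodePorts.https`, but please verify these keys against the chart version you install. The node IPs (`10.0.0.x`) and the ports 30080/30443 are made-up placeholders. The script prints a values document as JSON (which Helm should accept with `-f`, since JSON is valid YAML) and lists the backend targets the TCP load balancer would need.

```python
import json


def ingress_nginx_values(http_node_port, https_node_port):
    """Helm values that expose the ingress controller via fixed node ports."""
    return {
        "controller": {
            "service": {
                "type": "NodePort",
                "nodePorts": {
                    "http": http_node_port,
                    "https": https_node_port,
                },
            }
        }
    }


def backend_targets(node_ips, node_port):
    """Every node must be a load balancer backend on the same node port."""
    return [f"{ip}:{node_port}" for ip in node_ips]


# Hypothetical internal IPs of the three VMs and hypothetical node ports.
nodes = ["10.0.0.2", "10.0.0.3", "10.0.0.4"]
values = ingress_nginx_values(30080, 30443)

print(json.dumps(values, indent=2))
print("HTTP backends: ", backend_targets(nodes, 30080))
print("HTTPS backends:", backend_targets(nodes, 30443))
```

Pinning the node ports keeps the load balancer configuration stable; otherwise Kubernetes picks random ports from the node port range whenever the service is recreated.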