Hi There,

I’m facing an issue while running this command:

kubectl apply -f https://reweave.azurewebsites.net/k8s/v1.29/net.yaml

error: error validating “https://reweave.azurewebsites.net/k8s/v1.29/net.yaml”: error validating data: Get “http://localhost:8080/openapi/v2?timeout=32s”: dial tcp 127.0.0.1:8080: connect: connection refused; if you choose to ignore these errors, turn validation off with --validate=false

Why is it trying to connect to localhost:8080? If anyone has faced this issue, please help me understand what is happening here.

This is typically the error message you get when you have not set up a kubeconfig file. kubectl is telling you it doesn’t know where the kube-apiserver is and, having no better info, it falls back to localhost:8080. So you need to install a kubeconfig file.
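A quick way to check whether kubectl has a kubeconfig to read is sketched below. It assumes the usual lookup of the `KUBECONFIG` environment variable followed by `$HOME/.kube/config`; the function name `find_kubeconfig` is made up for illustration, and if `KUBECONFIG` holds a colon-separated list only the first entry is checked.

```shell
# Hypothetical helper: report the kubeconfig file kubectl would look for first.
find_kubeconfig() {
  # Fall back to the default path when KUBECONFIG is unset or empty.
  cfg="${KUBECONFIG:-$HOME/.kube/config}"
  # KUBECONFIG may be a colon-separated list; this sketch keeps only the first entry.
  cfg="${cfg%%:*}"
  if [ -r "$cfg" ]; then
    echo "found: $cfg"
  else
    echo "missing: $cfg"
    return 1
  fi
}
```

If the file is missing on a kubeadm control plane node, the usual fix is the one printed at the end of kubeadm init: copy /etc/kubernetes/admin.conf to $HOME/.kube/config and chown it to your own user.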

vagrant@controlplane:~/.kube$ cat config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUyZ0F3SUJBZ0lJRXdhYUJkZ0NkQkl3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TkRBM01ERXhOelEwTURCYUZ3MHpOREEyTWpreE56UTVNREJhTUJVeApFekFSQmdOVkJBTVRDbXQxWW1WeWJtVjBaWE13Z2dFaU1BMEdDU3FHU0liM0RRRUJBUVVBQTRJQkR3QXdnZ0VLCkFvSUJBUUN1SDBrN1phQzN6U3lMZzFFcTVvRnpPbzRpWWNpbmxSSnJkZnpYd1Z5Mlh5S2JERHFZNmUycDFLTE8KNVc0YTIxQWhOTzVKQm9hM2VnR2pMZDJZNThtd3U0MnNSQUd5cjBMWnNjOFVvbWhITlVrU2JMS3JWbjdsTUFYcwplRlVmWVdPeVZNVWdDTHphSnp6eTUzbEk2TjRKT0NKVk9UU3NKTzBTdzhJaGg2em9QMm56VUFMQ0t4T09wdVlDClNJZGU0SXRDdTNSR205b1ZpZDFmMXYzVE5xYmhDb3RYNzFuRVpJVHpsSlNveDkzNjFZTStSbkxXcTd0UWFvSGsKeU1wUTQxNndDbEIwanFFMUs0WGVpNGVHR3NBcURiWjhoV3BJRC8yeDB3akphL0ZKVGlGbmRpclk4VlhYOWlxYQpSaVhMbm9MQXdvZnY0OUZ3QlhWVDRYUklPNUkzQWdNQkFBR2pXVEJYTUE0R0ExVWREd0VCL3dRRUF3SUNwREFQCkJnTlZIUk1CQWY4RUJUQURBUUgvTUIwR0ExVWREZ1FXQkJTdW9ORWtUczB2RDFVeEltbHVCdmQ2WjNlNWlUQVYKQmdOVkhSRUVEakFNZ2dwcmRXSmxjbTVsZEdWek1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRQlZBNnlvUkkvagoycUJ2V2FnTHBiL2JWQitjR2laT1l1M2RZeDhmV0hGWE51NVZFbEdvUDNhaTlTN2FPcnNuN1p2SUFjTGN4YkRECm5Vb3FMeFplRFdOMDBwTE0vRzJnVmxVYXpRVW1QVkxmUlFhbEI1VDU3NUljcDczdXZvdVI3YWN2WXNKaURGSG8KTkExTnFkbFRSWTdEVDJ1dFdIVHFkNVE5eG9BRk4zNlFhTUxvZndwWTJDYUY4WkVBQlRqcm9FSTgweXI1WDdmaApja3VZNUJyem9hLzFzYzlyTzdDN3huU0dJdHJMVklCV28xZU4yZWxtcDZGR21xVTdrai90bndrZlpVZ09xSFhyClYzQ1dPelp2MnR3RGhVL1JUK0luQ0QwYzFEK1dYOHhSZTRnZitSaEFQQjJvSHQxc0tPV0JjYk9lNXZhYTZXZEQKekdaN1VyWElXUjFHCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

server: https://192.168.0.103:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kubernetes-admin

name: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURLVENDQWhHZ0F3SUJBZ0lJU1RKdXVSSEpqRm93RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TkRBM01ERXhOelEwTURCYUZ3MHlOVEEzTURFeE56UTVNRE5hTUR3eApIekFkQmdOVkJBb1RGbXQxWW1WaFpHMDZZMngxYzNSbGNpMWhaRzFwYm5NeEdUQVhCZ05WQkFNVEVHdDFZbVZ5CmJtVjBaWE10WVdSdGFXNHdnZ0VpTUEwR0NTcUdTSWIzRFFFQkFRVUFBNElCRHdBd2dnRUtBb0lCQVFDN0JISkIKTjRQTGV2dkdyTzh0K0JnWnlYemxwcmdyNWFGMk9DdVVzYjIrdVA5VVFXNUlGeU9QKzd0MHpTTXo5QmEzdWNtQgpvOWxjVXhrTFJkaUk4QWlrcExtSEJXRXJ2YTdNWDJIUzhQVnRucnRNT0JxSllTeFVHaGNMZXJFUk02YXJlWmR1CllvSzllemd2c3B2TkV0T2hvSDRnUUNBdHRGMElzVWNqMitRd2FNckNQWldQWWduTkNLUzRhQzRsVS95Q21zbGIKMFN6UlBRZGNqKytaZWpBb2Nzdkh4ODJnZGtqTnJTTHpDMnFFTUg3dGc0dXhXazFPUDBtT2xTdllwckZqa09XMgoyV2lZMXQrek1WbVlxQUN4a2paN2RVNkJKVlJBeVVqU2FnWkZ6RWV1KzFvU0VHZng3dVdEWnNwTzU3STdLYnZiCkd6eVp2NURFcG5GYXhNQTVBZ01CQUFHalZqQlVNQTRHQTFVZER3RUIvd1FFQXdJRm9EQVRCZ05WSFNVRUREQUsKQmdnckJnRUZCUWNEQWpBTUJnTlZIUk1CQWY4RUFqQUFNQjhHQTFVZEl3UVlNQmFBRks2ZzBTUk96UzhQVlRFaQphVzRHOTNwbmQ3bUpNQTBHQ1NxR1NJYjNEUUVCQ3dVQUE0SUJBUUI1VnZwSE5jM2ZnMmVkNTlmaldZOHpxUW03Ck52alQ3elRpcHBqdHhnNERXdnVTK2o2YVp1N052ZExWMjRQU0w0Y0VLbkdmQXJvRWhsdzJQUGNHQUtJSlg1L0oKeGhjRFVWUE44WXVZaDdrdEk4MWlheHc4bHFvbzBYcUd2NDl5UDZDbGhKYjA2SGcyN0FlOVNTbXRJVWI1cStxeQpZTXFBOG9nbzR0K05UckRVNTRzNzRqRXA2M09kcGR2WmM1cXo4dG02ajZySWRmQVc4MmR0WTVXU2MzWWYwRGRuClFjUWJvQWF2Y2FyVGVCaThsNlBHWml6bDBkbUduejhoY3pUQmJ0UWs4RmExOEVaNWtmOVg2OGtHbkJUUmZ6N1kKZlduUE95SkFlaHhPVmFMZFlCV2UwRjUvQWR0OGozSG9WTFYrdFJZYWxPSndDeFNJRThYd1JFWnJlL1BmCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBdXdSeVFUZUR5M3I3eHF6dkxmZ1lHY2w4NWFhNEsrV2hkamdybExHOXZyai9WRUZ1ClNCY2pqL3U3ZE0wak0vUVd0N25KZ2FQWlhGTVpDMFhZaVBBSXBLUzVod1ZoSzcydXpGOWgwdkQxYlo2N1REZ2EKaVdFc1ZCb1hDM3F4RVRPbXEzbVhibUtDdlhzNEw3S2J6UkxUb2FCK0lFQWdMYlJkQ0xGSEk5dmtNR2pLd2oyVgpqMklKelFpa3VHZ3VKVlA4Z3BySlc5RXMwVDBIWEkvdm1Yb3dLSExMeDhmTm9IWkl6YTBpOHd0cWhEQis3WU9MCnNWcE5UajlKanBVcjJLYXhZNURsdHRsb21OYmZzekZabUtnQXNaSTJlM1ZPZ1NWVVFNbEkwbW9HUmN4SHJ2dGEKRWhCbjhlN2xnMmJLVHVleU95bTcyeHM4bWIrUXhLWnhXc1RBT1FJREFRQUJBb0lCQUdBa0lnUnE0S01MZjBHYwpoM3pQVEx4OCsyc244UWdJRGFBenNodkgzKzZiTmc5L2I0MDU3L3RHQXhGQm4vWkdaaU5mTERzc0cwSytLV0xGCkxsTC9hc0lST0pzejVjZFJ4UG1sa3ltWTIrTFZ0aDJ4dmRxZ0RPVFRZTU55K0hJS1pvVkNoZG1tWk9XNTRhR0wKcFZLZ2VoRmQ2MWp5L2xmZ24rOG5DNVpncFlkSWh1SkR2Rm9PT0xaVFlLK1Zjc1JzVWViMG9sQ016VzFtZXVZWApLZ0dzQld1bXY5TENyRkFTK3dTaW42MmhxVEhxN01IYS9HZE1LcEFXOURuSkgvOXFBbnY2V2xCeWViSHcwMnllCmUrRXp3UFpHZjFOYTdLZlZIUTJkdUtXbGo1R25SSHBoRVZZNTlVYVAvRy92MzdMb29mOStSU1FHaUw3OWxhdFUKdTE1VkdUMENnWUVBNERDREl4TndYZWxVVGpldnJXWFY1QTBGS05JT0djRm5zQ0tXaUk5cGZ5bHUwVUpzakhweApkanNGUnI3bk9sbDNnb25QOG1VQW1BTDVWeTBGaVJiU1IzU0l3ZFNCeDJhV2JFUU1kWmhCbGFldmN2Zkl3ZnNFClFDSXBDZjhkRHhnZTVOUnE2UFpINEhkbGNadjV5azBnd1RlcGRFY1ZqSmxZRzgvZ1RzS3VFTjhDZ1lFQTFZMncKVmlpZFoveERFellJeXhQRU9oaDc1WW5XVEh0Z0RHcEsyM3JBRWlrR3MrMWlJV3RTZms4TUdNYlhxRjdKVVVZTAo4NndhSEN6a0FBbWJMQUVTWFdsYWFnYnlHdjhnRkNOdEVvR3U1ZzYrelo4V1gybm5GSkhyVXVtMjdLN3RzT1NKCmNDM2xWTWtLMVBXM1ZMMDJyMnc0blJiUkx3OG1kazh2WWtEcVdlY0NnWUVBc0ljQ2UxZ1BIcU5mY3NkK1dUYmEKbU50M3VKRE44WkhNcDNCVXYrck0wd2c0N2lVemU5bmVCWTZydE8wVS9XajlKWmlHV1FNVzJKdGU0am5kSmZrRwpVcVY4R2NQTy9NZldvaUZpL2lXSlh1SE8wT1F5L0NzL1NaQ2NaL2F0VnZsVE9qUFlpdCtCOFVtU1kwYkNCWDE5CjBTYVhFNnYxVitSVzhHOWEzQ21IRGxrQ2dZQmpHUmtYSWtuSkUxcmM4MW9wUXNid1hxUS81REs1MHhiRExDQUkKc1hHbis3bk1qUC9ZbWtEeHRDVGM2b2p3N090bTk2WmNNU1Q4cGlnM2pEMmhzZTJmdzEvZGk5T1ZpNGFMVWRVdgpuVlpZRThlZkM1QUtQczZvMFAzdXY5M08vMjMxaEZmeTRwbGxPdFgzOVA1YUtHUFVDOWhKcldqcytZN1RuL05SCkdPYW5TUUtCZ0JRNHRML2tFcXgwWmY1cmJ
NVFFvVnEzR1l6UnU4UHdXWXdQTzVBQkt3b21tMHJnaisrRWFxbFoKbDBxRkFsUVFvanlueFBVTlM0SGJuYVNrY3RESkRmcU1oYXM2SmhqYlVDbkNhOVZZazFZb0pZKzZteU9wK0NCbApJa1BGUWhmaWx2V3pqenNtY2c3MGRtcEF3TEcyeWFET2NPVEFXb0pobUhEcFd3WlpaN0M1Ci0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

This is my sample config file on the master node. Please let me know what I need to add to this config file.

It seems like you are trying to deploy it from the worker node.

I should have run this:

kubectl apply -f https://github.com/rajch/weave/releases/latest/download/weave-daemonset-k8s-1.11.yaml

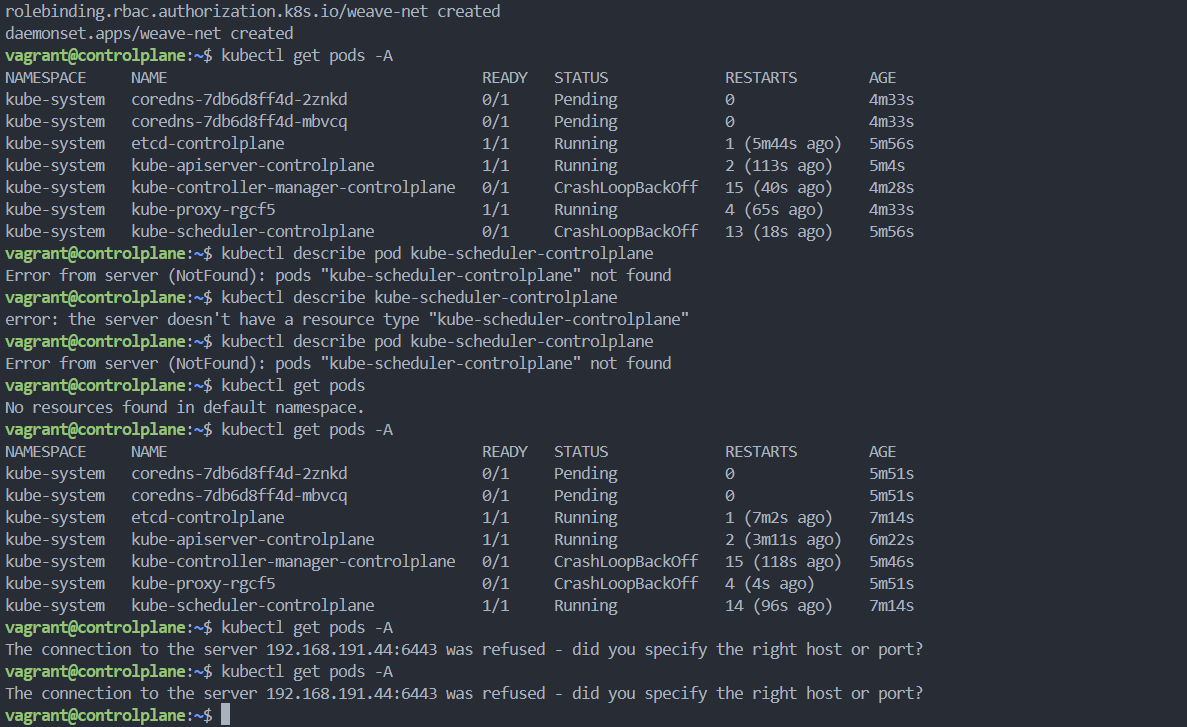

The URL was wrong. I can see in the output that Weave Net is created, but when I run kubectl get pods -A it works for some time and then shows connection refused. Can someone help me understand what I am doing wrong?

In addition, I cannot see the Weave Net pod running, and some pods belonging to the k8s components are not in the Running state.

I ran sudo systemctl restart kubelet; it then worked for a few minutes and again displayed the same connection refused error. Is there a problem with the kubelet config?

@rob_kodekloud Could you please help me figure out this intermittent failure of the kubelet? It works for some time and then hits these issues. Screenshot attached for your reference.

I assume you are doing a kubeadm install. Some questions about how you’re doing that:

- Are you using the tutorial from the CKA course repo? People who follow the course video instead of this tutorial tend to get into trouble – use the repo’s tutorial if you’re not already.

- Small errors in doing the tutorial tend to cause the problem you’re describing. The kubelet problem tends to relate to misconfiguration of containerd, via its config.toml file. That needs to be exactly right, or pods go in and out of service in a somewhat random way. The part of the tutorial people get wrong tends to be on the Node Setup page of the tutorial; that’s where you need to concentrate your attention.

- Once you’ve seen this problem, you need to “back out” the cluster install you did with kubeadm init. You do that by doing kubeadm reset, which removes the install files and lets you start over. So do that, and do the tasks in the Node Setup page again; doing this and doing it right is how you fix your problem.
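Before redoing the Node Setup page, it may help to check containerd's config.toml. The thread does not say which setting goes wrong; one that is commonly involved on systemd-based hosts is `SystemdCgroup` in the runc runtime options, so the sketch below rests on that assumption. The function name `check_containerd_cgroup` is hypothetical.

```shell
# Hypothetical helper: check whether containerd's config enables the systemd cgroup driver.
# Assumption: SystemdCgroup is the setting at fault; the thread does not confirm this.
check_containerd_cgroup() {
  conf="${1:-/etc/containerd/config.toml}"
  if grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$conf" 2>/dev/null; then
    echo "SystemdCgroup enabled"
  else
    echo "SystemdCgroup not enabled in $conf"
    return 1
  fi
}
```

If the check fails, correct the file as the tutorial describes, run sudo systemctl restart containerd, then sudo kubeadm reset, and start again from kubeadm init.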

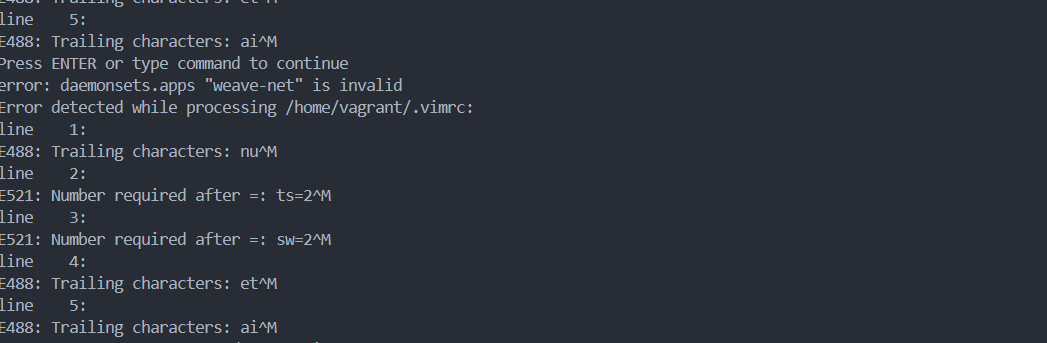

I was not getting this error previously. Now I’m getting this error as well.

Sounds like your .vimrc file (on your Mac, I assume) is invalid. Not sure what you have in it, although the “line 52” part suggests “quite a bit”. Not sure what your “error loading config file” error is about either. Weird stuff. You may be doing your commands fancier than you need to; I’m guessing your .kube/config file might belong to root and not user vagrant. If you mean chown vagrant:vagrant, say that.
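The ownership guess can be checked with something like the sketch below. The function name `kubeconfig_ownership` is made up for illustration, and it relies on the shell's `-O` file test, which bash and dash both provide.

```shell
# Hypothetical helper: report whether the kubeconfig belongs to the current user.
kubeconfig_ownership() {
  cfg="${1:-$HOME/.kube/config}"
  if [ ! -e "$cfg" ]; then
    echo "missing"
  elif [ -O "$cfg" ]; then
    # -O is true when the file's owner matches the effective user ID.
    echo "owned by current user"
  else
    echo "owned by someone else"
  fi
}
```

If it reports someone else (typically root, after copying the file with sudo), sudo chown $(id -u):$(id -g) $HOME/.kube/config hands the file to the logged-in user.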

@rob_kodekloud

FYI, I am using a Windows machine with VirtualBox installed on top of it. Actually, vim shows an error in the weave-net file when we edit the DaemonSet as mentioned in the course. The changes are not accepted and it shows an error. The kube-apiserver keeps going down. I tried flannel as well; that did not help either. The issue is with the kubelet service. I uninstalled k8s and installed it from the beginning. Still I’m getting the same issue: it works for some time and then goes into CrashLoopBackOff.

Still, the likely cause is a containerd problem. It’s not clear to me what exactly you’ve done, but most of the development of the tutorial was done on Windows by an engineer who’s very good with it. I don’t have a lot more advice as to what you need to do beyond what I’ve given: do the tutorial as carefully and exactly as you can.

@rob_kodekloud I have clearly followed the steps in the given tutorial. If the engineer is good with it, that’s fine, but I and a lot of other students in the community are facing this issue. It would be very helpful if we could have a 1-1 session to debug it further. The ideal outcome is to understand the underlying problem.

@mmumshad Expecting your response as well. Thanks in advance


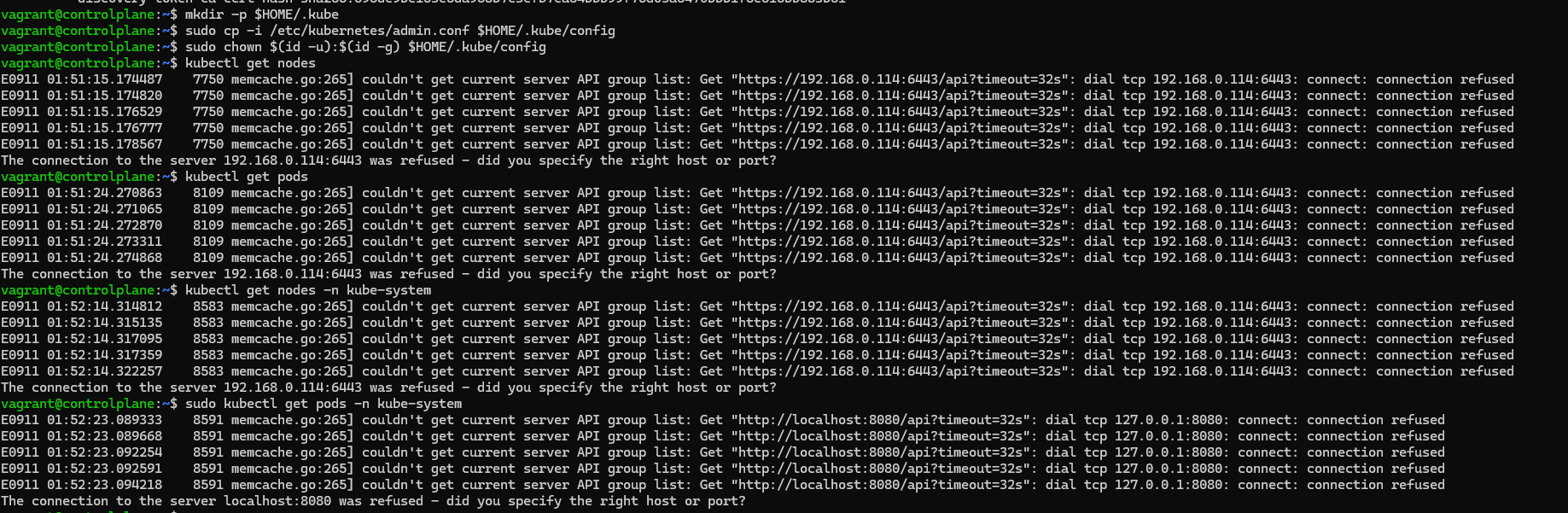

I am also facing the same error, and I can see my kubeconfig file as well.