Hello everyone,

I am running into errors when trying to configure auditing for the CKS certification.

After I save the file, the kube-apiserver pod won't spin up.

When I run "sudo journalctl -u kubelet | grep kuberuntime", I get the following errors:

Feb 12 13:28:03 controlplane kubelet[4669]: E0212 13:28:03.494561 4669 kuberuntime_sandbox.go:72] "Failed to create sandbox for pod" err="rpc error: code = Unknown desc = failed to setup network for sandbox \"9913a23eac8e1aed94f032af0d2be7020dba6c92ee356123c5963e4780634a52\": plugin type=\"flannel\" failed (add): open /run/flannel/subnet.env: no such file or directory" pod="kube-system/coredns-5d78c9869d-f57mn"

Feb 12 13:28:03 controlplane kubelet[4669]: E0212 13:28:03.494579 4669 kuberuntime_manager.go:1122] "CreatePodSandbox for pod failed" err="rpc error: code = Unknown desc = failed to setup network for sandbox \"9913a23eac8e1aed94f032af0d2be7020dba6c92ee356123c5963e4780634a52\": plugin type=\"flannel\" failed (add): open /run/flannel/subnet.env: no such file or directory" pod="kube-system/coredns-5d78c9869d-f57mn"

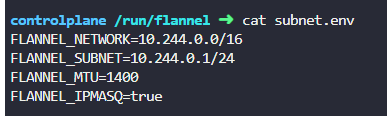

The file /run/flannel/subnet.env does exist.

My configuration is the following:

/etc/kubernetes/prod-audit.yaml

    apiVersion: audit.k8s.io/v1
    kind: Policy
    rules:
    - level: Metadata
      namespaces: ["prod"]
      verbs: ["delete"]
      resources:
      - group: ""
        resources: ["secrets"]
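To spell out what I expect this policy to do, here is a minimal Python sketch of my understanding of rule matching: rules are checked in order, the first match sets the level, and an empty or missing field matches everything. The helpers `rule_matches` and `audit_level` are hypothetical names of my own, and this is a simplified model, not the real kube-apiserver logic.

```python
# Simplified model of audit rule matching as I understand it.
# rule_matches and audit_level are illustrative helpers, not a Kubernetes API.
RULES = [
    {
        "level": "Metadata",
        "namespaces": ["prod"],
        "verbs": ["delete"],
        "resources": [{"group": "", "resources": ["secrets"]}],
    }
]


def rule_matches(rule, namespace, verb, group, resource):
    """Return True if the request attributes satisfy every field of the rule."""
    if rule.get("namespaces") and namespace not in rule["namespaces"]:
        return False
    if rule.get("verbs") and verb not in rule["verbs"]:
        return False
    kinds = rule.get("resources")
    if kinds:
        return any(
            k.get("group", "") == group
            and (not k.get("resources") or resource in k["resources"])
            for k in kinds
        )
    return True


def audit_level(rules, namespace, verb, group, resource):
    """Return the level of the first matching rule, or "None" if nothing matches."""
    for rule in rules:
        if rule_matches(rule, namespace, verb, group, resource):
            return rule["level"]
    return "None"


print(audit_level(RULES, "prod", "delete", "", "secrets"))
print(audit_level(RULES, "prod", "get", "", "secrets"))
```

Under these assumptions, only deletions of secrets in the prod namespace would be logged, at the Metadata level.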

kube-apiserver.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 192.41.14.6:6443
      creationTimestamp: null
      labels:
        component: kube-apiserver
        tier: control-plane
      name: kube-apiserver
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-apiserver
        - --advertise-address=192.41.14.6
        - --allow-privileged=true
        - --authorization-mode=Node,RBAC
        - --client-ca-file=/etc/kubernetes/pki/ca.crt
        - --enable-admission-plugins=NodeRestriction
        - --enable-bootstrap-token-auth=true
        - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
        - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
        - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
        - --etcd-servers=https://127.0.0.1:2379
        - --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt
        - --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
        - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
        - --requestheader-allowed-names=front-proxy-client
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
        - --requestheader-extra-headers-prefix=X-Remote-Extra-
        - --requestheader-group-headers=X-Remote-Group
        - --requestheader-username-headers=X-Remote-User
        - --secure-port=6443
        - --service-account-issuer=https://kubernetes.default.svc.cluster.local
        - --service-account-key-file=/etc/kubernetes/pki/sa.pub
        - --service-account-signing-key-file=/etc/kubernetes/pki/sa.key
        - --service-cluster-ip-range=10.96.0.0/12
        - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
        - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
        - --audit-policy-file=/etc/kubernetes/prod-audit.yaml
        - --audit-log-path=/var/log/prod-secrets.log
        - --audit-log-maxage=30
        image: registry.k8s.io/kube-apiserver:v1.27.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 192.41.14.6
            path: /livez
            port: 6443
            scheme: HTTPS
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 15
        name: kube-apiserver
        readinessProbe:
          failureThreshold: 3
          httpGet:
            host: 192.41.14.6
            path: /readyz
            port: 6443
            scheme: HTTPS
          periodSeconds: 1
          timeoutSeconds: 15
        resources:
          requests:
            cpu: 250m
        startupProbe:
          failureThreshold: 24
          httpGet:
            host: 192.41.14.6
            path: /livez
            port: 6443
            scheme: HTTPS
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 15
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certs
          readOnly: true
        - mountPath: /etc/ca-certificates
          name: etc-ca-certificates
          readOnly: true
        - mountPath: /etc/kubernetes/pki
          name: k8s-certs
          readOnly: true
        - mountPath: /usr/local/share/ca-certificates
          name: usr-local-share-ca-certificates
          readOnly: true
        - mountPath: /usr/share/ca-certificates
          name: usr-share-ca-certificates
          readOnly: true
        - mountPath: /etc/kubernetes/prod-audit.yaml
          name: audit
          readOnly: true
        - mountPath: /var/log/prod-secrets.log
          name: audit-log
          readOnly: false
      hostNetwork: true
      priority: 2000001000
      priorityClassName: system-node-critical
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      volumes:
      - hostPath:
          path: /etc/ssl/certs
          type: DirectoryOrCreate
        name: ca-certs
      - hostPath:
          path: /etc/ca-certificates
          type: DirectoryOrCreate
        name: etc-ca-certificates
      - hostPath:
          path: /etc/kubernetes/pki
          type: DirectoryOrCreate
        name: k8s-certs
      - hostPath:
          path: /usr/local/share/ca-certificates
          type: DirectoryOrCreate
        name: usr-local-share-ca-certificates
      - hostPath:
          path: /usr/share/ca-certificates
          type: DirectoryOrCreate
        name: usr-share-ca-certificates
      - name: audit
        hostPath:
          path: /etc/kubernetes/prod-audit.yaml
          type: File
      - name: audit-log
        hostPath:
          path: /var/log/prod-secrets.log
          type: FileOrCreate
    status: {}

I have also tried configuring the volumes as:

    - hostPath:
        path: /etc/kubernetes/prod-audit.yaml
        type: File
      name: audit
    - hostPath:
        path: /var/log/prod-secrets.log
        type: FileOrCreate
      name: audit-log
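As far as I can tell, the two volume layouts should be equivalent, since key order inside a YAML mapping does not matter. A small Python sketch of that check, with the volumes written out by hand as dicts (so it assumes the YAML parses into exactly these structures); `unmatched_mounts` is a hypothetical helper of my own that lists volumeMounts with no matching volume name.

```python
# The two ways I wrote the audit volumes, transcribed by hand as Python dicts.
name_first = [
    {"name": "audit",
     "hostPath": {"path": "/etc/kubernetes/prod-audit.yaml", "type": "File"}},
    {"name": "audit-log",
     "hostPath": {"path": "/var/log/prod-secrets.log", "type": "FileOrCreate"}},
]
host_path_first = [
    {"hostPath": {"path": "/etc/kubernetes/prod-audit.yaml", "type": "File"},
     "name": "audit"},
    {"hostPath": {"path": "/var/log/prod-secrets.log", "type": "FileOrCreate"},
     "name": "audit-log"},
]

# The audit-related volumeMounts from the kube-apiserver container.
mounts = [
    {"mountPath": "/etc/kubernetes/prod-audit.yaml", "name": "audit", "readOnly": True},
    {"mountPath": "/var/log/prod-secrets.log", "name": "audit-log", "readOnly": False},
]


def unmatched_mounts(mounts, volumes):
    """Return the names of mounts that have no volume with the same name."""
    names = {v["name"] for v in volumes}
    return [m["name"] for m in mounts if m["name"] not in names]


print(name_first == host_path_first)              # key order is ignored by dict equality
print(unmatched_mounts(mounts, host_path_first))  # empty list if every mount has a volume
```

This only compares structure and names; it says nothing about whether the files exist on the host or whether the indentation in the real manifest is correct.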

I have followed the lab instructions, and I have also tried the exact steps from the Kubernetes documentation (Auditing | Kubernetes).

Any help is appreciated, thanks!!