I developed the following network policy to fulfill the requirements from this question, but I am not sure what I got wrong here.

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-app1-app2
  namespace: apps-xyz
spec:
  podSelector:
    matchLabels:
      role: db
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
      - matchLabels:
          kubernetes.io/metadata.name: apps-xyz
      podSelector:
      - matchLabels:
          name: app1
    - namespaceSelector:
      - matchLabels:
          kubernetes.io/metadata.name: apps-xyz
      podSelector:
      - matchLabels:
          name: app2
    ports:
    - protocol: TCP
      port: 6379

In the “apps-xyz” namespace there should be exactly one app with label “name: app1” and one app with label “name: app2”.

Although my solution is a bit different from the official solution because I use the AND logic indicated by the lecture, I think it should still work.

What did I do wrong here?

Let’s look at the problem:

A few pods have been deployed in the apps-xyz namespace. There is a pod called redis-backend which serves as the backend for the apps app1 and app2. The pod called app3, on the other hand, does not need access to this redis-backend pod. Create a network policy called allow-app1-app2 that will only allow incoming traffic from app1 and app2 to the redis-pod.

**Make sure that all the available labels are used correctly to target the correct pods.** Do not make any other changes to these objects.

The key thing your solution is missing is the portion I put in bold. You need to use all the labels for purposes of the problem.

You can find the solution in the course repo, and you’ll see that the tier: frontend is included in the sub-rule for both permitted pods.
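As a rough illustration of that point, here is a sketch of the policy from the question with tier: frontend added to both pod selectors. It is not the official solution from the course repo. It assumes the redis-backend pod is still selected by role: db as in the original attempt (check the real labels with kubectl get pods -n apps-xyz --show-labels), and the file name is made up for the example. It also writes matchLabels as a plain mapping rather than a list item, which is the form the selector fields expect.

```shell
# Write a sketch of the policy to a local file (hypothetical file name).
cat > allow-app1-app2.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-app1-app2
  namespace: apps-xyz
spec:
  podSelector:
    matchLabels:
      role: db            # assumption: carried over from the question
  policyTypes:
  - Ingress
  ingress:
  - from:
    # namespaceSelector and podSelector in the same list item are ANDed
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: apps-xyz
      podSelector:
        matchLabels:
          name: app1
          tier: frontend
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: apps-xyz
      podSelector:
        matchLabels:
          name: app2
          tier: frontend
    ports:
    - protocol: TCP
      port: 6379
EOF

# Then apply it on the cluster:
#   kubectl apply -f allow-app1-app2.yaml
```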

Hi @rob_kodekloud - Thank you for the feedback. Your explanation makes a lot of sense. However, I would still argue that the solution checker should probably verify actual accessibility between the pods rather than the exact labels defined in the rule.

One side question: besides running kubectl describe networkpolicies <policy_name> to see which pods the policy selects, do you know of any other way to verify that my policy is actually working as expected (e.g. something like kubectl auth can-i)?

I have seen people mention “curl”, but most of the containers from the exam that I exec into don’t have “curl” installed.

You create a pod that either should or should not have access according to the problem description, and you choose the image of that pod so that it has the tools you need. I actually prefer to use the “netcat” tool (nc) since it’s a bit easier to use for this purpose than curl, and it’s included in a busybox image.

So the steps are:

- Create a busybox pod that fits the case you’re trying to test. Say it’s called test-pod.

- Use kubectl exec for that pod:

kubectl exec test-pod -- nc -w2 -v target-pod.svc 80

where -w2 means “time out after 2 seconds”, -v means “verbose output”, target-pod.svc is a service that points to the pod you want to test the netpol against, and 80 is the port you want to test.
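The two steps can also be folded into one small helper. This is only a sketch: the function name check_access, the generated pod name, and the argument order are made up for illustration, and it assumes the busybox image can be pulled in the cluster. It starts a throwaway pod with the labels you pass in, runs nc from it, and lets kubectl delete the pod afterwards.

```shell
# Hypothetical helper: run nc from a temporary busybox pod.
#   $1 = namespace, $2 = labels for the test pod (may be empty),
#   $3 = target host or service name, $4 = target port
check_access() {
  ns="$1"; labels="$2"; host="$3"; port="$4"
  # --rm with -i removes the pod once nc exits;
  # --restart=Never creates a bare pod rather than restarting it.
  kubectl run "netpol-test-$$" -n "$ns" \
    --image=busybox --restart=Never --rm -i \
    --labels="$labels" \
    -- nc -w2 -v "$host" "$port"
}

# Example calls (service name and port are placeholders):
#   check_access apps-xyz "name=app1,tier=frontend" target-pod.svc 80
#   check_access apps-xyz "" target-pod.svc 80
```

A pod that the policy allows should report the port as open, while a pod that is blocked should hit the 2-second timeout.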

Thank you @rob_kodekloud - I just tested out the “netcat” approach you suggested using mock exam 2 question 2 again. For people who are interested, here are the steps and results:

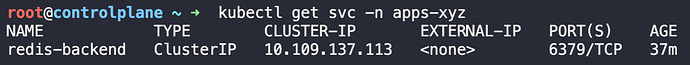

- Review the redis-backend service

- Create a test busybox pod that does NOT HAVE the required labels and run the netcat tool

root@controlplane ~ kubectl run -n apps-xyz -it test-busybox-1 --image=busybox -- sh

If you don't see a command prompt, try pressing enter.

/ #

/ #

/ # nc -w2 -v 10.109.137.113 6379

nc: 10.109.137.113 (10.109.137.113:6379): Connection timed out

/ #

- Create a test busybox pod that does HAVE the required labels and run the netcat tool

root@controlplane ~ kubectl run -n apps-xyz -it test-busybox-2 --image=busybox --labels="name=app1,tier=frontend" -- sh

If you don't see a command prompt, try pressing enter.

/ #

/ #

/ # nc -w2 -v 10.109.137.113 6379

10.109.137.113 (10.109.137.113:6379) open

/ #

As we can see, the connection from the pod without the required labels times out, while the connection from the pod with the required labels is reported as open. Cheers!

netcat rocks and so do you! Great to hear.