Can anyone help me understand what's wrong with my configuration, or whether there are playground limits I am not aware of?

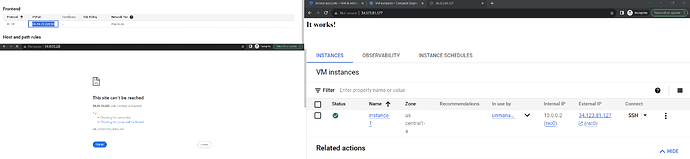

I am launching a simple infrastructure on GCP with Terraform: a network, an instance, and a Classic load balancer. I have deployed the Apache Docker container on the instance, and in the browser I can see the "It works" message at the instance's reserved static external IP.

However, after I create a backend service, unmanaged instance group, health check, URL map, and HTTP proxy, I get a timeout on http://:80

The health checks pass and the instance is healthy, and the firewall logs show that the health check traffic is allowed too. I think I misconfigured something in the network configuration for the forwarding rule.
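For context, the instance carries an `lb-health-check` tag, but none of the firewall rules in network.tf (below) targets it; the probes presumably get through because of the broad `allow-all-in-subnet` rule. A narrower rule scoped to the source ranges Google documents for health checks and load balancer proxies (130.211.0.0/22 and 35.191.0.0/16) might look like the sketch below. The resource name `allow_lb_health_check` is hypothetical and is not part of the current configuration.

```hcl
# Hypothetical rule: a sketch, not part of the original configuration.
resource "google_compute_firewall" "allow_lb_health_check" {
  name      = "allow-lb-health-check"
  network   = google_compute_network.application_private_vpc.name
  direction = "INGRESS"

  allow {
    protocol = "tcp"
    ports    = ["80"]
  }

  # Source ranges Google documents for health check probes and
  # for proxied traffic from external Application Load Balancers.
  source_ranges = ["130.211.0.0/22", "35.191.0.0/16"]

  # Matches the tag already set on the instances in instance.tf.
  target_tags = ["lb-health-check"]
}
```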

I also tried to create the same setup with the default network, but it failed in the same way.

I would appreciate any help and can attach additional info or logs if needed.

main.tf

    variable "project_id" {
      description = "The ID of the project in which resources will be provisioned."
      default     = "clgcporg8-014" # Enter the project ID locally until we have an account.
    }

    variable "compute_service_account_email" {
      description = "An email of the Compute Engine default service account"
      default     = "[email protected]" # Enter the compute service account email locally until we have an account.
    }

    variable "resource_count" {
      description = "Number of instances and IPs to create"
      default     = "1"
    }

    provider "google" {
      project = var.project_id
      region  = "us-central1"
    }

    module "network" {
      source   = "./01-network"
      ip_count = var.resource_count
    }

    module "instance" {
      source                = "./02-instance"
      private_vpc_id        = module.network.private_vpc_id
      private_subnet_id     = module.network.private_subnet_id
      service_account_email = var.compute_service_account_email
      instance_count        = var.resource_count
      static_ips            = module.network.static_ips
    }

    module "load-balancer" {
      source           = "./03-load-balancer"
      public_vpc_id    = module.network.public_vpc_id
      public_subnet_id = module.network.public_subnet_id
      traffic_lb_ip    = module.network.traffic_lb_ip
      instance_group   = module.instance.unmanaged_instance_group
    }

load-balancer.tf

    variable "public_vpc_id" {
      description = "The ID of the vpc where the lb will be placed."
    }

    variable "public_subnet_id" {
      description = "The ID of the subnet where the lb will be placed."
    }

    variable "traffic_lb_ip" {
      description = "Static external IP address for traffic load balancer from network module."
    }

    variable "instance_group" {
      description = "Unmanaged Instance Group"
    }

    resource "google_compute_health_check" "http_health_check" {
      name               = "http-health-check"
      check_interval_sec = 30
      timeout_sec        = 5

      tcp_health_check {
        port = "80"
      }

      healthy_threshold   = 2
      unhealthy_threshold = 2

      log_config {
        enable = true
      }
    }

    resource "google_compute_backend_service" "backend_svc" {
      name        = "http-backend"
      port_name   = "http"
      protocol    = "HTTP"
      timeout_sec = 10

      log_config {
        enable = true
      }

      enable_cdn = false

      backend {
        group           = var.instance_group
        balancing_mode  = "UTILIZATION"
        capacity_scaler = 1
        max_utilization = 1
      }

      health_checks = [google_compute_health_check.http_health_check.id]
    }

    resource "google_compute_url_map" "url_map" {
      name            = "traffic-load-balancer"
      default_service = google_compute_backend_service.backend_svc.self_link
    }

    resource "google_compute_target_http_proxy" "http_proxy" {
      name    = "traffic-load-balancer-target-proxy"
      url_map = google_compute_url_map.url_map.self_link
    }

    resource "google_compute_global_forwarding_rule" "forwarding_rule" {
      name                  = "http-proxy"
      load_balancing_scheme = "EXTERNAL"
      target                = google_compute_target_http_proxy.http_proxy.self_link
      port_range            = "80-80"
      ip_protocol           = "TCP"
    }
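One detail that may matter when testing: the forwarding rule above does not set `ip_address`, so GCP assigns it an ephemeral address, and the `traffic_lb_ip` variable passed into this module is never referenced. The `traffic_lb_ip` address in network.tf is also regional, whereas a global forwarding rule takes a global address. A minimal sketch of reserving a global address and pinning the rule to it, assuming that is the intended setup; the resource names `lb_ip` and `forwarding_rule_with_ip` and the output name `lb_ip_address` are hypothetical:

```hcl
# Hypothetical names throughout: a sketch, not the original configuration.
resource "google_compute_global_address" "lb_ip" {
  name = "traffic-lb-global-ip"
}

resource "google_compute_global_forwarding_rule" "forwarding_rule_with_ip" {
  name                  = "http-proxy"
  load_balancing_scheme = "EXTERNAL"
  target                = google_compute_target_http_proxy.http_proxy.self_link
  port_range            = "80"
  ip_protocol           = "TCP"

  # Pin the rule to the reserved global address instead of an ephemeral one.
  ip_address = google_compute_global_address.lb_ip.address
}

# Expose the address the load balancer actually listens on.
output "lb_ip_address" {
  value = google_compute_global_forwarding_rule.forwarding_rule_with_ip.ip_address
}
```

With an output like this, `terraform output lb_ip_address` would show which address to open in the browser, which removes any ambiguity between the instance IP, the reserved regional IP, and the address the forwarding rule actually received.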

network.tf

    variable "ip_count" {
      description = "Number of IPs to create"
      type        = string
    }

    # a VPC for private resources
    resource "google_compute_network" "application_private_vpc" {
      name                    = "application-private-vpc"
      auto_create_subnetworks = "false"
    }

    resource "google_compute_subnetwork" "private_subnet" {
      name          = "private-subnet"
      ip_cidr_range = "10.0.0.0/16"
      network       = google_compute_network.application_private_vpc.id
      region        = "us-central1"
    }

    resource "google_compute_address" "static" {
      count        = var.ip_count
      name         = "static-ip-address-${count.index+1}"
      region       = "us-central1"
      address_type = "EXTERNAL"
      network_tier = "PREMIUM"
    }

    # a VPC for public resources
    resource "google_compute_network" "traffic_public_vpc" {
      name                    = "traffic-public-vpc"
      auto_create_subnetworks = "false"
    }

    resource "google_compute_subnetwork" "public_subnet" {
      name          = "public-subnet"
      ip_cidr_range = "10.1.0.0/16"
      network       = google_compute_network.traffic_public_vpc.id
      region        = "us-central1"
    }

    resource "google_compute_address" "traffic_lb_ip" {
      name   = "traffic-lb-ip"
      region = "us-central1"
    }

    locals {
      joined_ips = join(",", google_compute_address.static.*.address)
    }

    resource "google_compute_firewall" "allow-ssh-to-static-ips" {
      name    = "allow-ssh-to-static-ips"
      network = google_compute_network.application_private_vpc.name

      allow {
        protocol = "tcp"
        ports    = ["22"]
      }

      source_ranges      = ["0.0.0.0/0"]
      destination_ranges = [local.joined_ips]
      direction          = "INGRESS"
      priority           = 1000
      target_tags        = ["ssh"]
    }

    resource "google_compute_firewall" "allow-all-in-subnet" {
      name    = "allow-all-in-subnet"
      network = google_compute_network.application_private_vpc.name

      allow {
        protocol = "all"
      }

      source_ranges      = ["0.0.0.0/0"]
      destination_ranges = [google_compute_subnetwork.private_subnet.ip_cidr_range]
    }

    resource "google_compute_firewall" "application-allow-egress" {
      name               = "application-allow-egress"
      network            = google_compute_network.application_private_vpc.name
      direction          = "EGRESS"
      destination_ranges = ["0.0.0.0/0"]

      allow {
        protocol = "all"
      }
    }

    # Output for created external static IPs
    output "static_ips" {
      description = "The static IPs for the instances"
      value       = google_compute_address.static.*.address
    }

    output "traffic_lb_ip" {
      description = "Static external IP address for traffic load balancer."
      value       = google_compute_address.traffic_lb_ip.address
    }

    # Output for Application Private VPC
    output "private_vpc_id" {
      value       = google_compute_network.application_private_vpc.id
      description = "The ID of the Private VPC network"
    }

    # Output for Private Subnet
    output "private_subnet_id" {
      value       = google_compute_subnetwork.private_subnet.id
      description = "The ID of the private subnet"
    }

    output "private_subnet_cidr" {
      value       = google_compute_subnetwork.private_subnet.ip_cidr_range
      description = "The IP CIDR range of the private subnet"
    }

    # Output for Traffic Public VPC
    output "public_vpc_id" {
      value       = google_compute_network.traffic_public_vpc.id
      description = "The ID of the public VPC network"
    }

    # Output for Public Subnet
    output "public_subnet_id" {
      value       = google_compute_subnetwork.public_subnet.id
      description = "The ID of the public subnet"
    }

    output "public_subnet_cidr" {
      value       = google_compute_subnetwork.public_subnet.ip_cidr_range
      description = "The IP CIDR range of the public subnet"
    }

instance.tf

    variable "private_vpc_id" {
      description = "Application VPC ID from network module"
      type        = string
    }

    variable "private_subnet_id" {
      description = "Private subnet ID from network module"
      type        = string
    }

    variable "service_account_email" {
      description = "An email of the Compute Engine default service account"
      type        = string
    }

    variable "instance_count" {
      description = "Number of instances to create"
    }

    variable "static_ips" {
      description = "Static IPs from network module"
      type        = list(string)
    }

    resource "google_compute_instance" "instance" {
      count        = var.instance_count
      name         = "instance-${count.index+1}"
      machine_type = "e2-micro"
      zone         = "us-central1-a"
      tags         = ["http-server", "https-server", "lb-health-check", "ssh"]

      boot_disk {
        auto_delete = true
        device_name = "instance-${count.index+1}"

        initialize_params {
          image = "projects/ubuntu-os-cloud/global/images/ubuntu-2310-mantic-amd64-v20231031"
          size  = 10
          type  = "pd-standard"
        }

        mode = "READ_WRITE"
      }

      network_interface {
        subnetwork = var.private_subnet_id

        access_config {
          nat_ip = var.static_ips[count.index]
        }
      }

      can_ip_forward      = false
      deletion_protection = false
      enable_display      = false

      labels = {
        goog-ec-src = "vm_add-tf"
      }

      scheduling {
        automatic_restart   = true
        on_host_maintenance = "MIGRATE"
        preemptible         = false
        provisioning_model  = "STANDARD"
      }

      shielded_instance_config {
        enable_integrity_monitoring = true
        enable_secure_boot          = false
        enable_vtpm                 = true
      }

      service_account {
        email = var.service_account_email
        scopes = [
          "https://www.googleapis.com/auth/sqlservice.admin",
          "https://www.googleapis.com/auth/compute",
          "https://www.googleapis.com/auth/servicecontrol",
          "https://www.googleapis.com/auth/service.management.readonly",
          "https://www.googleapis.com/auth/logging.admin",
          "https://www.googleapis.com/auth/monitoring",
          "https://www.googleapis.com/auth/trace.append",
          "https://www.googleapis.com/auth/devstorage.read_only",
        ]
      }
    }

    resource "google_compute_instance_group" "unmanaged_instance_group" {
      name        = "unmanaged-instance-group-1"
      description = "An unmanaged instance group created with Terraform"
      network     = var.private_vpc_id
      zone        = "us-central1-a"

      named_port {
        name = "http"
        port = 80
      }

      depends_on = [google_compute_instance.instance]
    }

    resource "null_resource" "add_instance_to_group" {
      count      = var.instance_count
      depends_on = [google_compute_instance.instance, google_compute_instance_group.unmanaged_instance_group]

      provisioner "local-exec" {
        command = "gcloud compute instance-groups unmanaged add-instances ${google_compute_instance_group.unmanaged_instance_group.name} --instances=${google_compute_instance.instance[count.index].name} --zone=us-central1-a"
      }
    }

    output "unmanaged_instance_group" {
      description = "Unmanaged Instance Group"
      value       = google_compute_instance_group.unmanaged_instance_group.self_link
    }

    output "internal_ips" {
      description = "Internal IP addresses of the instances"
      # Full splat ([*]) so that [0] applies to each instance's interfaces.
      value = google_compute_instance.instance[*].network_interface[0].network_ip
    }
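As an aside on the `null_resource` above: `google_compute_instance_group` accepts an `instances` argument, so group membership could be declared in Terraform itself rather than through a `local-exec` call to `gcloud`. That would keep membership in state and remove the dependency on a locally authenticated CLI. A sketch under that assumption, standing in for the group definition shown earlier; the resource label `unmanaged_instance_group_alt` is hypothetical:

```hcl
# Hypothetical alternative to the instance group plus null_resource pair.
resource "google_compute_instance_group" "unmanaged_instance_group_alt" {
  name        = "unmanaged-instance-group-1"
  description = "An unmanaged instance group created with Terraform"
  zone        = "us-central1-a"

  # Members are tracked in Terraform state. The network argument is left
  # unset so that it defaults to the network the instances are in.
  instances = google_compute_instance.instance[*].self_link

  named_port {
    name = "http"
    port = 80
  }
}
```

Because `instances` references the instance resources directly, Terraform orders creation on its own and no explicit `depends_on` is needed in this variant.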