As a developer, you've probably heard of application containerization. Containers provide a lightweight and isolated runtime that ensures applications inside them run consistently across different environments.

But as the number and complexity of containerized applications grow, so do the challenges of managing them. How do you ensure that your containers are running smoothly with high availability and can handle increased traffic without a negative impact on performance?

This is where container orchestration comes in. Container orchestration is the process of automating the deployment, management, and coordination of containers across multiple hosts or clusters. Container orchestration tools help you simplify and streamline the tasks involved in running containerized applications at scale.

In this article, we'll focus on two of the most popular and widely used tools for containerization and container orchestration: Docker and Kubernetes. We'll explore how Kubernetes works with Docker to provide a powerful and flexible platform for deploying and managing your applications.

Understanding Docker Containers

Before we delve into Kubernetes, let's take a brief look at Docker and its key components. Docker lets you bundle an application and everything it needs into a portable unit, known as a container, that can run anywhere. Because the dependencies travel with the application, it behaves the same way on every machine instead of producing different results in different environments.

Key Docker Components

Docker consists of several components that work together to create and run containers. Some of the main components are:

- Docker images: A Docker image is a self-contained, runnable software bundle that has everything required to run an application, such as the code, runtime, system tools, and libraries. Images are built from a series of instructions specified in a Dockerfile, which defines the environment and dependencies of the application.

- Docker containers: A Docker container is an instance of a Docker image. It provides an isolated runtime environment for running the application. Containers are portable and can be easily moved between different environments, ensuring consistency and reproducibility. You can start, stop, restart, and remove containers using the Docker CLI or API. You can also attach to a running container and execute commands inside it.

- Docker registries: Docker registries are repositories where Docker images are stored and distributed. The most popular Docker registry is Docker Hub, a public registry that hosts a large catalog of pre-built images. You can push and pull images from registries using the Docker CLI or API. You can also use private registries, such as Docker Trusted Registry (now Mirantis Secure Registry), which is a secure and enterprise-grade registry solution.
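To make the relationship between registries, repositories, and tags concrete, here is a minimal Python sketch of how an image reference such as nginx:1.25 can be split into its parts. The `ImageRef` class and `parse_image_ref` function are hypothetical names introduced for illustration, and the parsing is deliberately simplified (digests such as @sha256:... are not handled). It assumes Docker's usual defaults: the docker.io registry, the library/ namespace for official images, and the latest tag.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageRef:
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into registry, repository, and tag.

    Simplified for illustration: digests ('@sha256:...') are not handled.
    """
    first, slash, rest = ref.partition("/")
    # The first path component is treated as a registry host only if it
    # looks like one (contains a dot or a port, or is 'localhost').
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref

    # A tag, if present, follows the last ':' in the remaining path.
    name, colon, tag = remainder.rpartition(":")
    if not colon or "/" in tag:
        name, tag = remainder, "latest"

    # Official Docker Hub images live under the implicit 'library/' namespace.
    if registry == "docker.io" and "/" not in name:
        name = f"library/{name}"
    return ImageRef(registry, name, tag)


if __name__ == "__main__":
    for ref in ("nginx", "nginx:1.25", "ghcr.io/acme/api:v2", "localhost:5000/team/app"):
        print(ref, "->", parse_image_ref(ref))
```

The image names in the loop are only examples. The point is that a short name like nginx is shorthand for a fully qualified location in a registry, which is what `docker pull` resolves before downloading anything.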


Docker Tools

Docker also provides a number of tools that enhance its user experience and functionality. Below are some of its tools:

- Docker Desktop: An easy-to-use application that allows you to run Docker on your Windows, Mac, or Linux machine. It includes the Docker Engine, the Docker CLI, Docker Compose, and other tools that make it easy to develop and test your applications locally. It also integrates with popular IDEs and cloud platforms, such as Visual Studio Code and AWS.

- Docker Hub: This is the world's largest online repository of Docker images. It allows you to browse, search, and download millions of images for various applications and use cases. It also allows you to create and manage your repositories, where you can store and share your images with others. You can also use Docker Hub to automate your image builds and tests as well as to scan your images for security vulnerabilities.

- Docker Scout: A tool that analyzes your container images to help you secure your software supply chain. It builds an inventory of the packages in an image (a software bill of materials), matches that inventory against a continuously updated vulnerability database, and gives recommendations for fixing the issues it finds. You can use it from Docker Desktop, Docker Hub, the Docker CLI, and the Docker Scout Dashboard.

- Docker Extensions: These are add-ons that extend the functionality of Docker Desktop. They allow you to integrate Docker with other tools and services, such as logging, monitoring, networking, storage, and security tools. You can find and install Docker Extensions from the Extensions Marketplace in Docker Desktop, which lists both reviewed and community extensions.

Introduction to Kubernetes

While Docker simplifies the process of creating and running containers, Kubernetes takes containerization to the next level by providing a robust and scalable container orchestration platform. Kubernetes, also known as K8s, automates the deployment, scaling, and management of containerized applications.

To learn more about Kubernetes core concepts, check out this blog: Kubernetes Concepts Explained!

Key Kubernetes Components

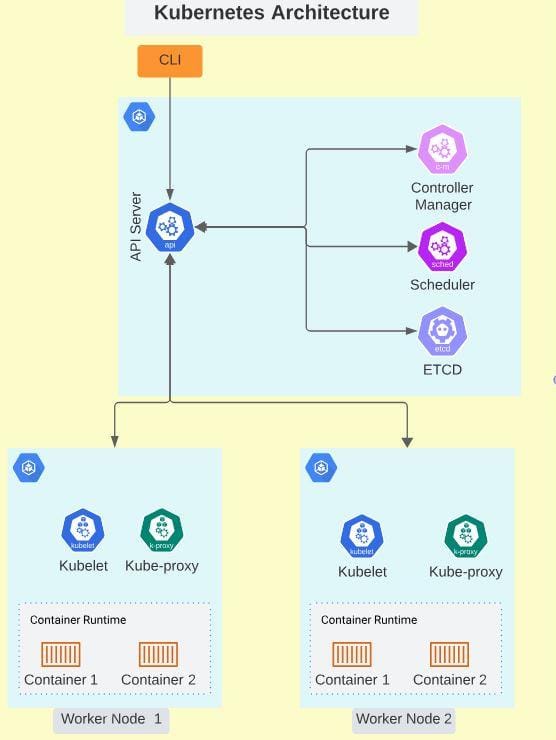

To understand how Kubernetes works, let's look at its key components:

- Pods: They are the smallest and most basic units of computation in Kubernetes. A Pod is a group of one or more containers that share the same network and storage resources and are deployed on the same host. Pods are ephemeral, meaning that they can be created and destroyed at any time. They are usually managed by higher-level controllers, such as deployments, which ensure that their desired number and state are maintained.

- Nodes: These are the physical or virtual machines that run your Pods. Each node has a kubelet, which is an agent that communicates with the control plane and manages the Pods on the node. Nodes also run a container runtime and kube-proxy, which handles the network routing for the Pods. DNS for Pods is provided by a cluster add-on (CoreDNS in current releases) that runs as Pods in the cluster rather than as a per-node component.

- Master node: This is the older name for a control plane node, a machine that hosts the control plane components. It oversees the entire cluster's operation and manages the scheduling and deployment of Pods. It coordinates communication between nodes and maintains the desired state of the cluster.

- Control plane: The control plane is the brain of the Kubernetes cluster. It consists of the API server, scheduler, etcd, and controller manager, which together handle the orchestration and management of the cluster.

- API server: The API server is the main entry point for all the communications between the nodes and the control plane. It exposes the Kubernetes API, which allows you to interact with your cluster using the kubectl CLI, the Kubernetes dashboard, or other tools and clients.

- Scheduler: The scheduler is responsible for assigning Pods to nodes based on the resource availability and requirements of the Pods.

- Controller manager: The controller manager runs various controllers that monitor and manage the state of your cluster. For example, the ReplicaSet controller ensures that the desired number of Pods are running for a given Deployment, the node controller tracks node health, and, in cloud environments, the service controller (part of the cloud controller manager) creates and updates the load balancers for your services.

- Etcd: Etcd is a distributed key-value store that stores the configuration and state data of your cluster. It is used by the API server and the other control plane components to store and retrieve the cluster information.
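The scheduler's job of matching Pods to nodes by resource availability can be sketched in a few lines of Python. This is not the real kube-scheduler algorithm, which uses many more filters and scoring plugins. The `Node`, `PodRequest`, and `pick_node` names are hypothetical, and the scoring rule (prefer the node with the most CPU left, then the most memory) is an assumption chosen for simplicity.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int   # unallocated CPU, in millicores
    free_memory_mib: int   # unallocated memory, in MiB


@dataclass
class PodRequest:
    name: str
    cpu_millis: int
    memory_mib: int


def pick_node(pod: PodRequest, nodes: list[Node]) -> Optional[Node]:
    """Return the node chosen for the Pod, or None if no node fits."""
    # Filter step: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_millis >= pod.cpu_millis
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # the Pod would stay pending until capacity appears

    # Score step (assumed rule): most CPU left after placement, then most memory.
    return max(
        feasible,
        key=lambda n: (
            n.free_cpu_millis - pod.cpu_millis,
            n.free_memory_mib - pod.memory_mib,
        ),
    )


if __name__ == "__main__":
    cluster = [
        Node("node-a", free_cpu_millis=500, free_memory_mib=1024),
        Node("node-b", free_cpu_millis=2000, free_memory_mib=4096),
        Node("node-c", free_cpu_millis=4000, free_memory_mib=256),
    ]
    chosen = pick_node(PodRequest("web", cpu_millis=1000, memory_mib=512), cluster)
    print(chosen.name if chosen else "unschedulable")
```

The two-phase shape, filtering out nodes that cannot run the Pod and then ranking the rest, mirrors how the scheduler is described, even though the real scoring is far richer.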

The diagram at the beginning of this section shows how these components fit together in the Kubernetes architecture.

Check out our Kubernetes for Beginners courses if you want to learn more about Kubernetes and how to use it to orchestrate and manage your containerized applications.

How Kubernetes Works Together with Docker

Now that you have a basic understanding of Docker and Kubernetes, let's see how they work together to provide efficient containerization and seamless container orchestration.

Containerization with Docker

Docker builds images using the Open Container Initiative (OCI) image format. The OCI image format is designed to be platform-agnostic, meaning that images built in this format can be run by any container runtime that supports the OCI standard.

Role of Kubernetes in Managing Docker Containers

Docker focuses on the creation and packaging of containers, while Kubernetes takes on the responsibility of managing and orchestrating these containers at scale. Kubernetes provides a powerful set of features that simplify the deployment and management of containers, ensuring high availability, scalability, and fault tolerance.

Note: Kubernetes removed dockershim, the built-in component that let it use Docker Engine as a container runtime, in v1.24. Clusters now typically use containerd or CRI-O as their container runtime. Both run containers from images that follow the OCI format, and this includes images built with Docker.

To learn more about the change in Kubernetes runtime, check out this article: Kubernetes Dropping Docker: What Happens Now?

Container Orchestration Features of Kubernetes

Below are some Kubernetes features that make it an ideal tool for container orchestration:

- Scaling: Kubernetes allows you to scale your application by adding or removing Pods based on the workload. This ensures that your application can handle increased traffic while still maintaining optimal performance. You can use the kubectl CLI or the Kubernetes dashboard to manually scale your Pods. Alternatively, you can automate scaling: the Horizontal Pod Autoscaler (HPA) adjusts the number of Pods based on observed metrics such as CPU utilization, the Vertical Pod Autoscaler (VPA) adjusts the resource requests of your Pods, and the Cluster Autoscaler (CA) adds or removes nodes when Pods cannot be scheduled or nodes are underused.

- Load balancing: Kubernetes automatically distributes incoming network traffic among your Pods using services and ingresses. This helps prevent the overloading of some Pods.

- Service discovery: Kubernetes provides a built-in cluster DNS service (CoreDNS in current releases, kube-dns in older ones) that allows containers within the cluster to discover and communicate with each other using Pod or Service names. It allows you to use simple and human-readable names, such as my-service.my-namespace.svc.cluster.local, to access your services.

- Rolling updates: Kubernetes supports rolling updates, allowing you to update your application without downtime. It works by gradually replacing old Pods with new ones, ensuring a smooth transition and minimizing any disruption to users. You can use the kubectl CLI or the Kubernetes dashboard to perform rolling updates, or you can use the Deployment object to automate and manage the rolling updates, using parameters such as replicas, maxUnavailable, and maxSurge.
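The arithmetic behind two of the features above can be sketched in Python. The first function follows the scaling formula given in the Kubernetes HPA documentation, desired = ceil(current replicas × current metric / target metric), clamped to a minimum and maximum; it leaves out details such as the tolerance band and stabilization windows. The second shows how replicas, maxSurge, and maxUnavailable bound the Pod count during a rolling update, assuming both limits are given as absolute numbers rather than percentages. The function names are hypothetical.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int,
    max_replicas: int,
) -> int:
    """Simplified HPA calculation (no tolerance or stabilization)."""
    desired = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured replica range.
    return max(min_replicas, min(max_replicas, desired))


def rolling_update_bounds(
    replicas: int, max_surge: int, max_unavailable: int
) -> tuple[int, int]:
    """Return (minimum available Pods, maximum total Pods) during a rollout."""
    return replicas - max_unavailable, replicas + max_surge


if __name__ == "__main__":
    # 4 Pods averaging 90% CPU against a 50% target
    print(hpa_desired_replicas(4, 90, 50, min_replicas=2, max_replicas=10))
    # 10 replicas, at most 2 extra Pods and 1 missing Pod during the update
    print(rolling_update_bounds(10, max_surge=2, max_unavailable=1))
```

With these example numbers, the autoscaler would ask for 8 Pods, and the rollout would keep between 9 and 12 Pods running at any moment.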

Benefits of Using Kubernetes with Docker

Using Kubernetes with Docker has a lot of benefits for your application development and deployment. Here are some of these benefits:

- Enhanced scalability: Kubernetes enables you to scale applications containerized using Docker easily and efficiently using autoscaling, load balancing, and service discovery features. As long as the cluster has capacity, you can absorb growth in traffic and demand without compromising the performance of your application.

- Improved resource utilization: Kubernetes optimizes resource utilization by efficiently scheduling and managing containers across nodes. It ensures that containers are placed on nodes with available resources, preventing resource bottlenecks and maximizing cluster efficiency. Kubernetes also allows for the optimization of resources utilized by Pods using horizontal or vertical autoscaling features.

- Automated deployment and management: Kubernetes simplifies the deployment and management of containerized applications. It provides a declarative approach to defining the desired state of your applications, allowing Kubernetes to handle the complex tasks of deploying, scaling, and managing containers.
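As an illustration of the declarative approach, the following Python sketch builds a Deployment manifest as a plain dictionary and prints it as JSON. The field names (apiVersion, kind, spec.replicas, spec.strategy.rollingUpdate, and so on) are those of the apps/v1 Deployment API, while the `deployment_manifest` helper, the web name, and the nginx:1.25 image are assumptions made up for this example.

```python
import json


def deployment_manifest(
    name: str,
    image: str,
    replicas: int,
    max_surge: int = 1,
    max_unavailable: int = 0,
) -> dict:
    """Describe the desired state: how many copies of which image should run."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {
                    "maxSurge": max_surge,
                    "maxUnavailable": max_unavailable,
                },
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Example values only; replace with your own application name and image.
    print(json.dumps(deployment_manifest("web", "nginx:1.25", replicas=3), indent=2))
```

Since kubectl accepts JSON as well as YAML, the printed output could be saved to a file and submitted with kubectl apply -f. You state what should exist, and the cluster's controllers work out how to get there.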

Learning Path for a Kubernetes Administrator

If you're interested in becoming a Kubernetes administrator, you can follow our Kubernetes Administrator learning path. This learning path will equip you with the practical and theoretical knowledge you need to set up, operate, and troubleshoot a Kubernetes cluster. You will learn everything from the fundamentals of DevOps to how you can get career ready.

Conclusion

The combination of Kubernetes and Docker provides a powerful solution for container creation and orchestration. Docker simplifies the process of creating and running containers, while Kubernetes takes containerization to the next level by providing advanced features for scaling, load balancing, self-healing, and more. The seamless integration between Kubernetes and Docker allows you to leverage the strengths of both technologies to build scalable, resilient, and manageable containerized applications.

Whether you're a developer, a DevOps engineer, or an IT professional, understanding the synergy between Kubernetes and Docker is essential for modern application deployment and management in today's containerized world.

If you're interested in learning more about DevOps, you can sign up for a free account on KodeKloud. As a member, you’ll have access to 70+ courses, labs, quizzes, and projects that will help you master different DevOps skills.

We hope you enjoyed this article and found it useful. If you have any comments or questions, feel free to leave them below.
