As per the Kubernetes documentation quoted below, a sidecar container (an init container whose restart policy is Always) should be shut down last, as long as the termination grace period (30 seconds) has not expired. But what I’m seeing is that it is terminated at the same time as all the other containers. Do you know the reason for this, please?

> Upon Pod termination, the kubelet postpones terminating sidecar containers until the main application container has fully stopped. The sidecar containers are then shut down in the opposite order of their appearance in the Pod specification. This approach ensures that the sidecars remain operational, supporting other containers within the Pod, until their service is no longer required.
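As a rough illustration of the ordering described in the quoted passage, here is a minimal Python sketch. It is only a model of the documented behavior, not kubelet code. The function name `expected_termination_order` and the dict layout are assumptions made for this example, and the container names are the ones from the manifest posted in this thread.

```python
def expected_termination_order(init_containers, containers):
    """Return the shutdown phases the quoted documentation describes.

    Each container is a dict with a "name" key; init containers may also
    carry "restartPolicy". Hypothetical helper: it models the documented
    ordering only and does not talk to a cluster.
    """
    # Sidecars are init containers whose restartPolicy is "Always".
    sidecars = [c["name"] for c in init_containers
                if c.get("restartPolicy") == "Always"]
    # Phase 1: the main application containers are stopped first.
    phases = [[c["name"] for c in containers]]
    # Later phases: sidecars, one at a time, in reverse spec order.
    phases.extend([name] for name in reversed(sidecars))
    return phases


pod_init = [{"name": "init-myservice", "restartPolicy": "Always"}]
pod_main = [{"name": "nginx-container"}, {"name": "second-container"}]
print(expected_termination_order(pod_init, pod_main))
# [['nginx-container', 'second-container'], ['init-myservice']]
```

Under this reading of the documentation, the stop of init-myservice would be expected only after both application containers have stopped.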

It will help to see the YAML for this pod to determine what is happening.

Be sure to post it as a code block, or it will be illegible.

Hi Alistair,

Please find the code block below. Thanks.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-prd-dpd
  labels:
    name: myapp-prd-dpd
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      restartPolicy: Always
      terminationGracePeriodSeconds: 60
      initContainers:
        - name: init-myservice
          image: busybox
          restartPolicy: Always  # here restart policy is Always; we can't set OnFailure or Never
          command: ['sh', '-c', 'tail -F /var/tmp/logs.txt']
          volumeMounts:
            - name: app-config
              mountPath: /var/tmp
      containers:
        - name: nginx-container
          image: nginx:latest
          ports:
            - containerPort: 80
          volumeMounts:
            - name: app-config
              mountPath: /var/tmp
          command: ['sh', '-c', 'while true; do echo "logging" >> /var/tmp/logs.txt; sleep 1; done']
        - name: second-container
          image: busybox:latest
          command: ["sh", "-c", "while true; do echo 'Secondary container running'; sleep 3600; done"]
      volumes:
        - name: app-config
          emptyDir: {}
```

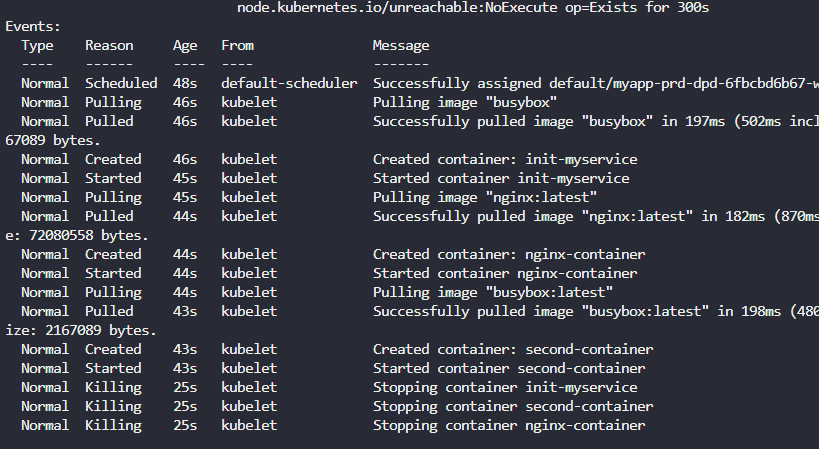

I have checked this further, and my observation stands: the Pod is not following the termination sequence described in the Kubernetes documentation.

Please see the output of kubectl describe event podname below.

```
Name: myapp-prd-dpd-6566cf5b84-mxqmt.181b231edc759fdf
Namespace: default
Labels: <none>
Annotations: <none>
API Version: v1
Count: 1
Event Time: <nil>
First Timestamp: 2025-01-16T09:44:56Z
Involved Object:
  API Version: v1
  Field Path: spec.containers{second-container}
  Kind: Pod
  Name: myapp-prd-dpd-6566cf5b84-mxqmt
  Namespace: default
  Resource Version: 3986
  UID: 1a35d3dc-0ce2-47da-8956-cb9ae7a44a76
Kind: Event
Last Timestamp: 2025-01-16T09:44:56Z
Message: Started container second-container
Metadata:
  Creation Timestamp: 2025-01-16T09:44:56Z
  Resource Version: 4138
  UID: 21bc353c-13ca-4953-a99d-114d6694d394
Reason: Started
Reporting Component: kubelet
Reporting Instance: node01
Source:
  Component: kubelet
  Host: node01
Type: Normal
Events: <none>
```

```
Name: myapp-prd-dpd-6566cf5b84-mxqmt.181b2404323d09d7
Namespace: default
Labels: <none>
Annotations: <none>
API Version: v1
Count: 1
Event Time: <nil>
First Timestamp: 2025-01-16T10:01:21Z
Involved Object:
  API Version: v1
  Field Path: spec.initContainers{init-myservice}
  Kind: Pod
  Name: myapp-prd-dpd-6566cf5b84-mxqmt
  Namespace: default
  Resource Version: 3986
  UID: 1a35d3dc-0ce2-47da-8956-cb9ae7a44a76
Kind: Event
Last Timestamp: 2025-01-16T10:01:21Z
Message: Stopping container init-myservice
Metadata:
  Creation Timestamp: 2025-01-16T10:01:21Z
  Resource Version: 5673
  UID: 25b15cb4-5189-4a3e-bd5c-fa3e2ef08db0
Reason: Killing
Reporting Component: kubelet
Reporting Instance: node01
Source:
  Component: kubelet
  Host: node01
Type: Normal
Events: <none>
```

```
Name: myapp-prd-dpd-6566cf5b84-mxqmt.181b2404323d3065
Namespace: default
Labels: <none>
Annotations: <none>
API Version: v1
Count: 1
Event Time: <nil>
First Timestamp: 2025-01-16T10:01:21Z
Involved Object:
  API Version: v1
  Field Path: spec.containers{second-container}
  Kind: Pod
  Name: myapp-prd-dpd-6566cf5b84-mxqmt
  Namespace: default
  Resource Version: 3986
  UID: 1a35d3dc-0ce2-47da-8956-cb9ae7a44a76
Kind: Event
Last Timestamp: 2025-01-16T10:01:21Z
Message: Stopping container second-container
Metadata:
  Creation Timestamp: 2025-01-16T10:01:21Z
  Resource Version: 5674
  UID: 3143749b-84c7-4e60-b8e6-1ae910c1c1ad
Reason: Killing
Reporting Component: kubelet
Reporting Instance: node01
Source:
  Component: kubelet
  Host: node01
Type: Normal
Events: <none>
```

```
Name: myapp-prd-dpd-6566cf5b84-mxqmt.181b2404323dd47b
Namespace: default
Labels: <none>
Annotations: <none>
API Version: v1
Count: 1
Event Time: <nil>
First Timestamp: 2025-01-16T10:01:21Z
Involved Object:
  API Version: v1
  Field Path: spec.containers{nginx-container}
  Kind: Pod
  Name: myapp-prd-dpd-6566cf5b84-mxqmt
  Namespace: default
  Resource Version: 3986
  UID: 1a35d3dc-0ce2-47da-8956-cb9ae7a44a76
Kind: Event
Last Timestamp: 2025-01-16T10:01:21Z
Message: Stopping container nginx-container
Metadata:
  Creation Timestamp: 2025-01-16T10:01:21Z
  Resource Version: 5675
  UID: 91479118-6b9a-46a0-8b02-753e84b5f501
Reason: Killing
Reporting Component: kubelet
Reporting Instance: node01
Source:
  Component: kubelet
  Host: node01
Type: Normal
Events: <none>
```

I’m not sure I get why you expect different behavior from what you’re getting. The key characteristic of your initContainer is that it never exits, nor does it ever hit an error. So it will be running for the life of the pod. If you instead run a task that is limited in time and succeeds (say, “sleep 30”), how does that change the behavior?

Thanks for your response, Rob. According to the documentation, init sidecar containers have more characteristics than the ones you have stated here. Since they are classified as init containers with an extended life due to the restart policy, they are started in sequence like regular init containers (one after another), and on termination they are stopped last, in the reverse of the order defined in the init container list. My question is basically why they are being stopped together with the application containers when, in practice, they should terminate last.

Given that your container does not terminate (but is sent a signal and killed), why is that valid?

Regarding the 30-second sleep you mentioned, I tried that. When the task completes (a 30-second sleep), the container will terminate. Because of the Always restart policy, it will spawn a new container. However, the restart will follow an exponential back-off delay, with a maximum of 5 minutes (default).
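To put numbers on that back-off, here is a small Python sketch. It assumes the defaults described in the Kubernetes Pod lifecycle documentation (a 10-second initial delay that doubles on each restart, capped at 300 seconds); the function name `backoff_delays` and its parameters are made up for this illustration, and the actual values on a given cluster may differ.

```python
def backoff_delays(restarts, initial=10, cap=300):
    """Delay in seconds before each of the first `restarts` restarts.

    Assumed defaults: start at 10s, double each time, cap at 300s (5 minutes).
    """
    return [min(initial * 2 ** i, cap) for i in range(restarts)]


print(backoff_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

So a container that keeps exiting after its 30-second sleep would hit the 5-minute ceiling from the sixth restart onward.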

Yes, I thought about that. I am deleting the pod or deployment with the kubectl delete command, but that is still a graceful termination (SIGTERM), not a forceful one (SIGKILL). So I think my observation is still valid: all the containers in the pod should be terminated gracefully, respecting the termination sequence.