Hi!

I have used this lab a few times (around 4 times this week) to try to understand the KodeKloud AWS playground limitations. (My own issue is here: Exposing ArgoCD on K8s in AWS playground.)

The lab works, but sometimes there are issues… did you try to redo it?

Edit:

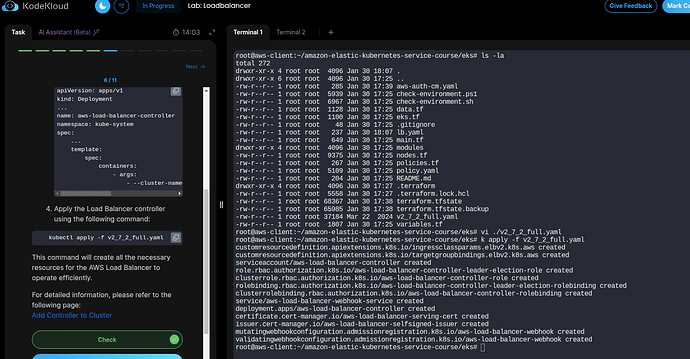

The lab doesn't say it specifically, but the first steps (cloning the repo) must be done in the root folder, without specifying a target folder. At some point, the lab itself copies this YAML file into that cloned folder. Don't ask me why, but that's how it works (if the folder doesn't exist… you don't get the file).

Was my answer not helpful?

The file you are looking for is:

https://github.com/kubernetes-sigs/aws-load-balancer-controller/releases/download/v2.7.2/v2_7_2_full.yaml

It's a YAML manifest for installing the load balancer controller for EKS on AWS.

The file is copied in the background by the lab you are working on (the lab will only copy the file IF the directory of the cloned repo exists in the root folder).
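For anyone who wants to check this before reaching the controller question, here is a minimal sketch in Python of the condition described above: the cloned directory has to exist before the lab can drop the manifest into it. The repo and file names come from this thread; the base directory, the `eks` subdirectory as the copy target, and the helper name `manifest_status` are assumptions for illustration, so adjust them to wherever your lab terminal actually cloned the repo.

```python
from pathlib import Path

# Names taken from this thread; the exact copy target is an assumption.
REPO_DIR = "amazon-elastic-kubernetes-service-course"
MANIFEST = "v2_7_2_full.yaml"


def manifest_status(base_dir: str) -> str:
    """Report whether the cloned repo and the lab-copied manifest are present."""
    eks_dir = Path(base_dir) / REPO_DIR / "eks"
    if not eks_dir.is_dir():
        # Without the cloned folder the lab has nowhere to copy the file.
        return "repo-missing"
    if not (eks_dir / MANIFEST).is_file():
        return "manifest-missing"
    return "ok"


if __name__ == "__main__":
    # Assumes the repo was cloned into the current user's home directory.
    print(manifest_status(str(Path.home())))
```

A result of `repo-missing` would point to the clone step, while `manifest-missing` would suggest the lab has not copied the file yet.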

Hi @narendra-jatti

You need the file v2.7.2/v2_7_2_full.yaml in the eks subdir, which is available when you clone the repo in Q3.

Sorry to jump in, but if you are talking about cloning this repo:

kodekloudhub/amazon-elastic-kubernetes-service-course/tree/main/eks

There is no v2 YAML file there… the lab copies this file from an AWS repo (this is a standard YAML for adding a load balancer controller to Kubernetes on AWS).

I hope I'm not misleading anyone, as I have experienced this issue myself… just wanted to help.

Yes!

The file will be available when the previous questions are completed.

Hi @adamlevi87, I will surely check soon and let you know! Thanks for jumping in!


What I mean is… the lab copies that file behind the scenes; it has nothing to do with the actual repo cloning…

True!

I could have phrased it more aptly.

What I mean is that the file named v2.7.2/v2_7_2_full.yaml would be available in the eks directory when Q6 is presented.

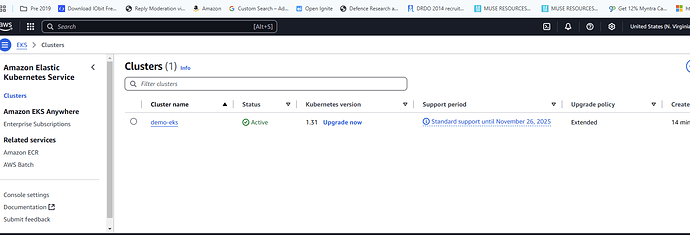

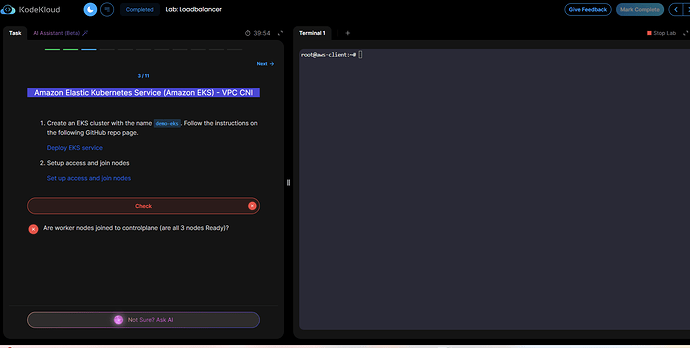

That CRD manifest file would be useful if Q3 results in a pass. The screenshot by @narendra-jatti shows Q3 as failed. Q3 contains steps to check the environment, along with setting up the cluster, configuring the context, and joining the nodes.

Any misconfiguration in that step could affect Q6 and subsequent questions.

@Santosh_KodeKloud,

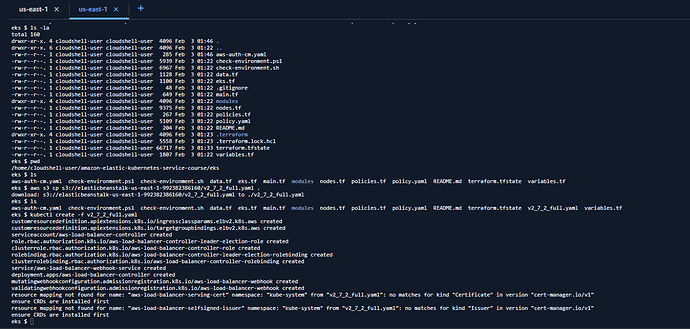

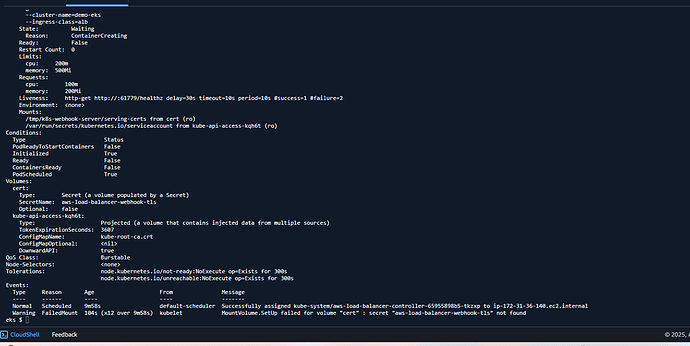

I am getting a CRD error now… I still don't see the controller YAML file even after Q6. I downloaded it manually from Adam's link, updated only the cluster name, and tried to create the controller… and got this error. Kindly help…

Everything else is fine, so why am I getting an error for the 3rd answer… can you help?
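For reference, the manual "update only the cluster name" step can be sketched like this in Python. It assumes the downloaded manifest still contains the `your-cluster-name` placeholder that the upstream install instructions ask you to replace; `my-cluster` and the helper name `set_cluster_name` are hypothetical. It only covers the text substitution and does not by itself explain the CRD error.

```python
def set_cluster_name(manifest_text: str, cluster_name: str,
                     placeholder: str = "your-cluster-name") -> str:
    """Return the manifest text with the cluster-name placeholder replaced."""
    if placeholder not in manifest_text:
        # Fail loudly instead of silently applying an unchanged manifest.
        raise ValueError(f"placeholder {placeholder!r} not found in manifest")
    return manifest_text.replace(placeholder, cluster_name)


if __name__ == "__main__":
    # A single illustrative line, not the full manifest.
    sample = "        - --cluster-name=your-cluster-name\n"
    print(set_cluster_name(sample, "my-cluster"), end="")
```

Raising an error when the placeholder is absent is a deliberate choice here: it avoids applying a manifest that was never actually edited.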

Are you actually doing the lab rather than using the lab to do your own thing?

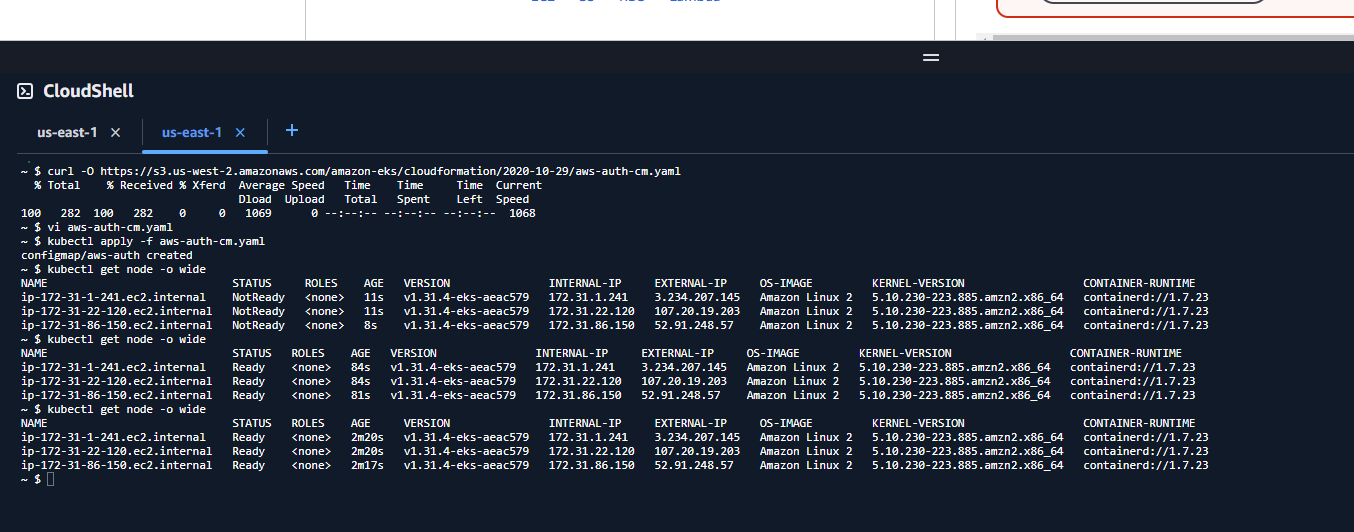

If you are following the lab questions, you must execute the commands from the lab terminal, not CloudShell, as that is where the grader runs. If you haven't got a working kubeconfig on the lab terminal, then the grader can't successfully run kubectl get nodes.
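A quick way to confirm this from the lab terminal is to run the same check the grader is described as running. Below is a minimal Python sketch under that assumption; the helper names `nodes_command` and `can_list_nodes` are hypothetical, and the optional `--kubeconfig` path is only needed if your config is not in the default location.

```python
import shutil
import subprocess


def nodes_command(kubeconfig=None):
    """Build the kubectl invocation, optionally with an explicit kubeconfig."""
    cmd = ["kubectl", "get", "nodes"]
    if kubeconfig:
        cmd += ["--kubeconfig", kubeconfig]
    return cmd


def can_list_nodes(kubeconfig=None, timeout=30):
    """Return True only if kubectl exists here and can list the cluster nodes."""
    if shutil.which("kubectl") is None:
        return False
    try:
        result = subprocess.run(nodes_command(kubeconfig), capture_output=True,
                                text=True, timeout=timeout)
    except subprocess.TimeoutExpired:
        return False
    return result.returncode == 0


if __name__ == "__main__":
    print("kubeconfig OK" if can_list_nodes() else "kubectl get nodes failed here")
```

If this fails on the lab terminal but the same command works in CloudShell, the kubeconfig was set up in the wrong place.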

@Alistair_KodeKloud, thanks for your help.

Yes, things are fine when I use the lab terminal instead of CloudShell.

May I know why things were not fine with CloudShell?

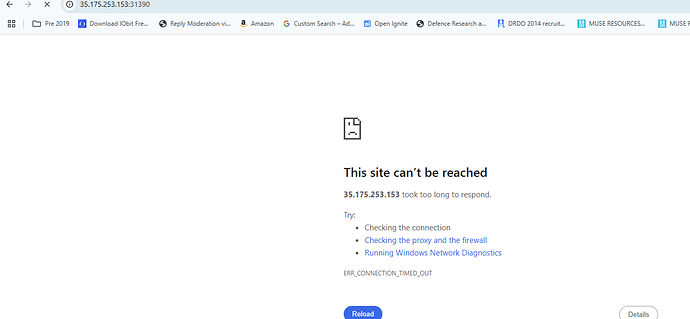

Now I am also trying to access the app via the node port, like above… why am I not able to access it via the exposed port with the public IP?

@Alistair_KodeKloud,

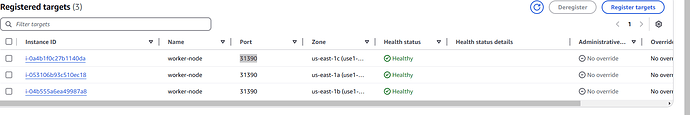

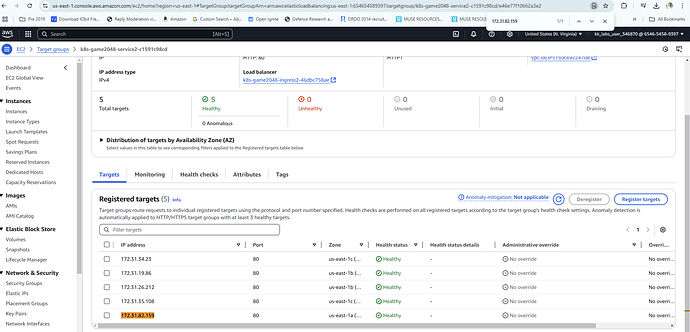

These are the pod targets… can't we access the app in the browser using these IPs?

I already told you in the post above this one. If you are doing the lab, the grader runs through the lab terminal. It cannot access CloudShell.

If you have done the lab correctly, you access the app through the public DNS name of the load balancer. In a real production cluster, the nodes would absolutely not be accessible via public IPs. The load balancer is the only way in from the outside world.