CI/CD with Docker for Beginners

Modern application development often follows agile methodologies, which allow for faster and more efficient development cycles. This approach emphasizes collaboration and communication between developers, testers, and other stakeholders throughout the entire development process. It also commonly incorporates continuous integration and continuous delivery (CI/CD), which help ensure that each new feature or update is automatically tested before it is released to users.

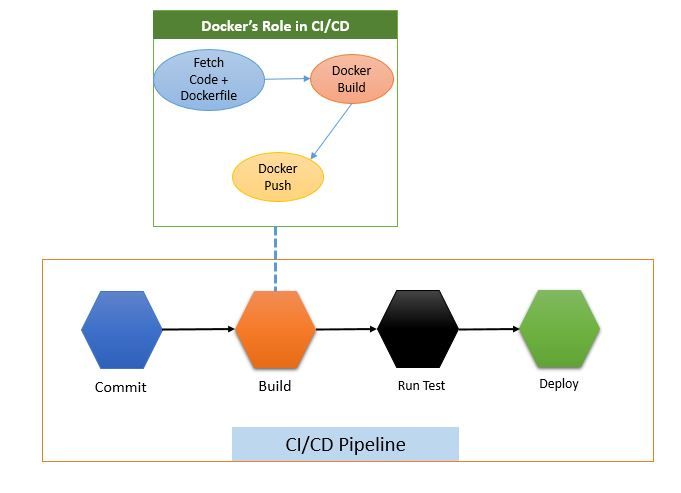

This article focuses on continuous integration and, more specifically, on the role of Docker in a CI/CD pipeline.

What is Docker?

Docker is a platform that allows developers to package applications, together with their dependencies, into containers. A container can run on any host that has a compatible container runtime, without the application's dependencies having to be installed or configured on that host. Docker's portability, efficiency, and ease of use have made it a popular choice for organizations.

Learn more about Docker from this blog: What Is Docker in DevOps & How Does It Work?

What is CI/CD?

A CI/CD pipeline is an automated sequence of steps that lets teams deliver code changes to production rapidly and reliably. It is an essential component of modern software development that combines continuous integration (CI) and continuous delivery/deployment (CD) to streamline the software delivery process. With a CI/CD pipeline, every code change is automatically built and tested, which makes it easier to release changes to end users and to catch problems before they reach production.

Learn more about CI/CD from this blog: How CI/CD Pipeline Works.

Several tools can be used to implement CI/CD pipelines. One widely used tool is Jenkins.

Role of Docker in DevOps

Docker sits between developers and operations personnel. With Docker, developers can hand the operations team an application packaged as an image, which runs the same way in any environment (testing, staging, or production). This reduces friction between the two teams and makes it easier to automate steps such as testing, staging, and deployment.
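To illustrate the hand-over, here is a minimal sketch of a separate, operations-side Jenkins pipeline that runs an image CI has already built. Everything in it is an assumption for illustration: the `<...>` image placeholders, the hypothetical `TARGET_ENV` and `IMAGE_TAG` parameters, the `app-<env>` container names, and an `APP_ENV` variable the application is assumed to read. It also assumes Docker is installed on the Jenkins agent, that the agent is the host where the container should run, and that the image is public or the agent is already logged in to the registry.

```groovy
// Hypothetical operations-side pipeline: deploys an image that CI has already
// built and pushed. Replace the <...> placeholders before use.
pipeline {
    agent any
    parameters {
        choice(name: 'TARGET_ENV', choices: ['testing', 'staging', 'production'],
               description: 'Environment to deploy to')
        string(name: 'IMAGE_TAG', defaultValue: 'latest',
               description: 'Image tag produced by the CI pipeline')
    }
    environment {
        imageName = "<dockerhub-username>/<repo-name>:${params.IMAGE_TAG}"
    }
    stages {
        stage('Run container') {
            steps {
                // The same image is used in every environment; only the
                // run-time settings (container name, APP_ENV) change.
                sh "docker pull ${env.imageName}"
                // Remove this environment's previous container, if one exists.
                sh "docker rm -f app-${params.TARGET_ENV} || true"
                sh "docker run -d --name app-${params.TARGET_ENV} -e APP_ENV=${params.TARGET_ENV} ${env.imageName}"
            }
        }
    }
}
```

The point of the design is that nothing environment-specific is baked into the image; the environment is chosen only when the container starts.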

Role of Docker in CI/CD

Docker plays a key role in CI/CD pipelines. It is supported by most CI/CD tools, such as Jenkins, Bamboo, and Travis CI.

The first step in integrating Docker into a CI/CD pipeline is to create a Dockerfile and add it to the source code repository. A Dockerfile is a text file that contains a set of instructions for building a Docker image. Below is an example of a Dockerfile:

```dockerfile
# Use the official lightweight Node.js 16 image.
# https://hub.docker.com/_/node
FROM node:16-slim

# Create and change to the app directory.
WORKDIR /usr/src/app

# Copy application dependency manifests to the container image.
COPY package*.json ./

# Install production dependencies only.
RUN npm install --omit=dev

# Copy local code to the container image.
COPY . ./

# Run the web service on container startup.
CMD [ "npm", "start" ]
```

Each time a new commit is made to the source code, the CI/CD tool (for instance, Jenkins) downloads the source code from a remote repository such as GitHub or GitLab. Docker then builds an image of the application from the Dockerfile included in that code.

After the image is built, it is tagged and pushed to an image registry, such as Docker Hub. From there, operations teams can pull the image for testing, staging, or deploying to production.

Below is an example of a Jenkinsfile that automates the steps above:

```groovy
pipeline {
    environment {
        dockerRepo = "<dockerhub-username>/<repo-name>"
        registryCredential = '<dockerhub-credential-name>'
    }
    agent any
    stages {
        // Get the source code, including the Dockerfile
        stage('Getting Source Code From GitHub') {
            steps {
                checkout([
                    $class: 'GitSCM',
                    branches: [[name: 'master']],
                    userRemoteConfigs: [[
                        url: 'https://github.com/*****.git',
                        credentialsId: 'github-key'
                    ]]
                ])
            }
        }
        // Build an image using the Dockerfile from the source code above.
        // env.BUILD_ID tags the image with a unique build number.
        stage("Building docker image") {
            steps {
                script {
                    dockerImage = docker.build(dockerRepo + ":${env.BUILD_ID}")
                }
            }
        }
        // Push the image built in the previous step to Docker Hub
        stage('Push Image') {
            steps {
                script {
                    // An empty registry URL means Docker Hub.
                    docker.withRegistry('', registryCredential) {
                        dockerImage.push("latest")
                        dockerImage.push("${env.BUILD_ID}")
                    }
                }
            }
        }
    }
}
```

In the pipeline above, Jenkins first checks out the latest code, including the Dockerfile, from the repository. The `docker.build` step then runs `docker build`, which instructs Docker to build an image of the application tagged with the build number. Finally, the image is pushed to an image registry, in this case Docker Hub, under both the build-number tag and `latest`. The Jenkinsfile itself does not define what starts the pipeline; typically the job is configured to run on each commit to the source code repository, for example through a GitHub webhook.
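The Jenkinsfile in this article has no `triggers` directive, so the commit trigger has to come from the job configuration, commonly a webhook from GitHub or GitLab. If webhooks are not an option, one alternative, sketched below as an assumption rather than the setup used in this article, is to let Jenkins poll the repository. The five-minute schedule is an arbitrary example. Triggers declared in a Jenkinsfile take effect only after the pipeline has run once, and polling checks only the repositories that a previous build checked out.

```groovy
pipeline {
    agent any
    triggers {
        // Check the repository for new commits about every five minutes.
        // 'H' makes Jenkins pick a per-job hashed offset to spread the load.
        pollSCM('H/5 * * * *')
    }
    stages {
        stage('Build') {
            steps {
                // Placeholder: the checkout, build, and push stages from the
                // Jenkinsfile above would go here.
                echo "Running build ${env.BUILD_ID}"
            }
        }
    }
}
```

If the GitHub plugin is installed, `githubPush()` can be declared in the same `triggers` block instead, so that builds start from GitHub webhook pushes rather than from polling.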

The example above demonstrates the role of Docker in a CI/CD pipeline.
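The pipeline above pushes every image it builds without running any tests. A common refinement, sketched here under assumptions, is a test stage placed between the build and push stages that runs the test suite in a throwaway container started from the new image. It assumes the project's `package.json` defines a `test` script that works with the production dependencies installed by the Dockerfile; if the tests need dev dependencies, the image or the command would have to change. The stage name is hypothetical.

```groovy
// Hypothetical stage: insert between 'Building docker image' and 'Push Image'.
// dockerRepo comes from the pipeline's environment block.
stage('Test image') {
    steps {
        // Run the test script in a container created from this build's image;
        // --rm deletes the container when the tests finish.
        // A non-zero exit code fails the stage, so the push stage never runs.
        sh "docker run --rm ${env.dockerRepo}:${env.BUILD_ID} npm test"
    }
}
```

Testing the image itself, rather than the checked-out source, means the artifact that gets pushed is exactly the one that passed the tests.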

Implementing a complete CI/CD pipeline requires configuring many components. To learn how to implement a full CI/CD pipeline step by step, enroll in our Jenkins course.

Conclusion

Docker enables us to package applications and their dependencies into containers that can run on any host with a compatible container runtime, without additional setup on that host. This reduces friction between developers and operations and makes CI/CD pipelines easier to implement.

This blog has covered a very basic example of a CI/CD pipeline. Advance your knowledge of how CI/CD pipelines work by reading the posts below, and subscribe to get more CI/CD and Docker blogs.

More on Docker and CI/CD: