Highlights

- Kubernetes explained simply: Automates how containers run, scale, and recover.

- Origin: Created by Google to manage containerized workloads at scale.

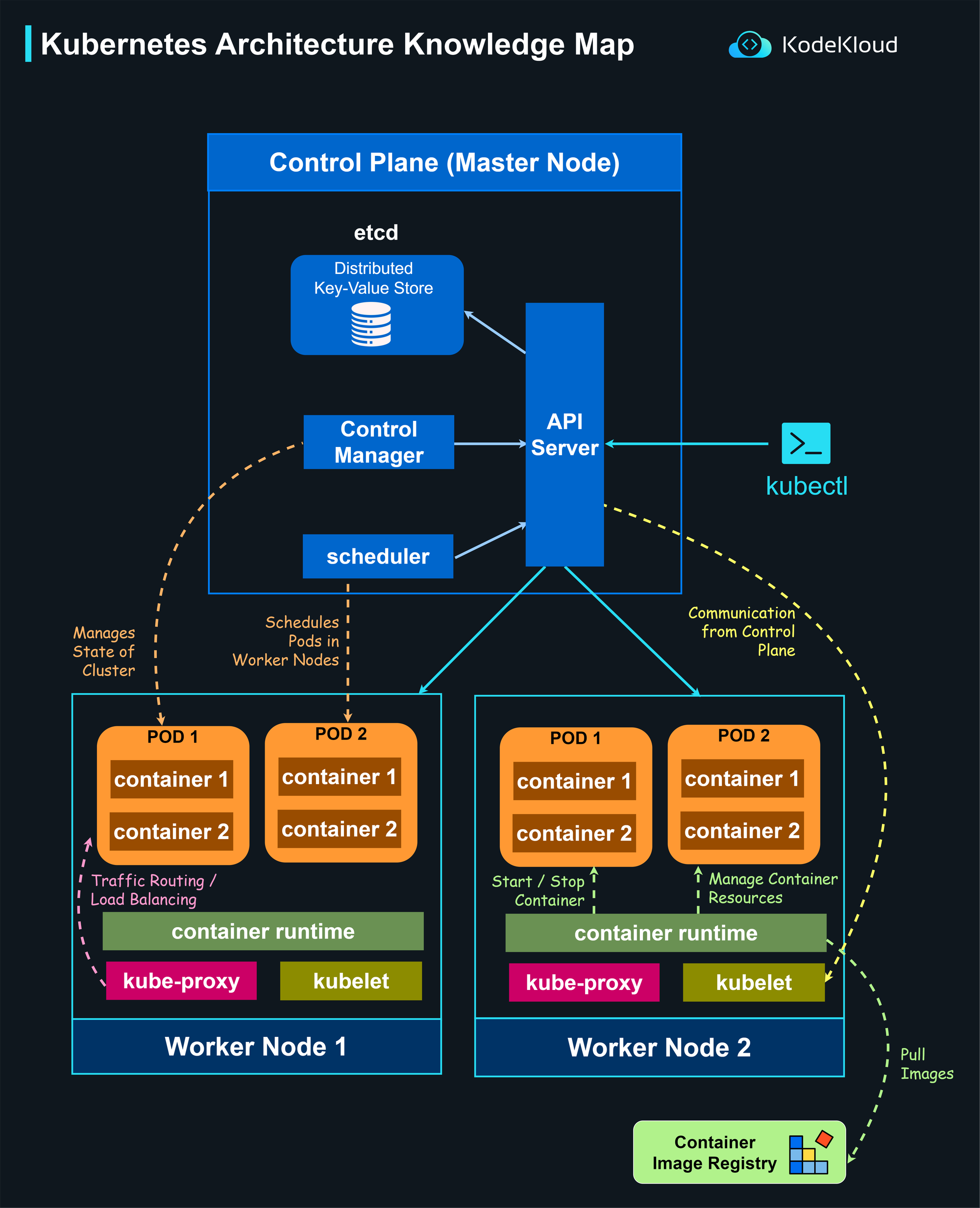

- Core components: Control Plane (API Server, etcd, Scheduler, Controller Manager) and Worker Nodes (Kubelet, Kube-Proxy, Runtime).

- Key concepts: Pods, Deployments, ReplicaSets, Services, ConfigMaps, Secrets, and Namespaces.

- Why it matters: Provides auto-scaling, self-healing, seamless updates, and cross-cloud portability.

- Real-world use: Powers systems at Netflix, Spotify, and Airbnb.

- Learn by doing: Practice Kubernetes instantly in KodeKloud’s free labs - no installation required.

- Certifications path: KCNA → CKA → CKAD → CKS → KCSA (covered in KodeKloud’s Kubestronaut Journey).

- Free resources: Kubernetes Made Easy eBook, YouTube Channel, and Open DevOps 101 visual guides.

- Takeaway: Kubernetes isn’t just a tool - it’s the foundation of modern DevOps and cloud-native engineering.

Introduction: Why Everyone’s Talking About Kubernetes

If there’s one word that keeps popping up in every DevOps conversation today, it’s Kubernetes.

From small startups deploying microservices to tech giants managing thousands of containers, Kubernetes has quietly become the backbone of modern infrastructure.

But why has it gained such massive popularity? To answer that, let’s rewind for a second.

A few years ago, developers started packaging applications into containers - lightweight, portable environments that run the same way on any system. Containers solved a huge problem: software that worked on one machine but broke on another.

However, with great power came great complexity. As teams adopted containers widely, they suddenly had to manage hundreds or even thousands of them - running across different servers, regions, and cloud platforms.

That’s when chaos began.

And developers began asking how to manage containers at that scale.

The answer was Kubernetes - the system that makes container management effortless, scalable, and automatic.

Kubernetes (often shortened as K8s) is an open-source platform that automates the deployment, scaling, and operation of containerized applications. Think of it as the brain of your infrastructure - orchestrating how, when, and where your applications run, so you don’t have to manually manage every container.

Originally built by Google and now maintained by the Cloud Native Computing Foundation (CNCF), Kubernetes has evolved into the industry standard for container orchestration. It’s used by nearly every major cloud provider and powers thousands of enterprise systems around the world.

In today’s cloud-native era, knowing Kubernetes isn’t just optional - it’s a foundational skill for every DevOps, Cloud, and Software Engineer. It enables you to deploy complex applications faster, scale them efficiently, and make your infrastructure resilient by design.

In this beginner’s guide, we’ll break down Kubernetes from the ground up.

You’ll learn what containers really are, why Kubernetes was created, how it works behind the scenes, and how you can start practicing it hands-on using KodeKloud’s beginner-friendly labs and courses - no prior experience required.

Let’s start by understanding the foundation Kubernetes is built on: containers.

Understanding Containers - The Foundation of Kubernetes

Before we dive into Kubernetes, we need to understand what it was built to manage: containers.

Imagine you’re developing an application on your laptop - everything works perfectly. Then you deploy it to a server, and suddenly, it breaks. Missing dependencies. Wrong environment. Different OS versions.

Sound familiar?

That’s the exact problem containers were invented to solve.

What Are Containers?

A container is a lightweight, portable unit that packages an application together with everything it needs to run - its code, libraries, dependencies, and runtime.

Think of a container as a mini environment that travels with your app. Whether it runs on your laptop, a testing server, or in the cloud - it behaves exactly the same.

What a container packages: 📦 App Code, ⚙️ Libraries, 🔗 Dependencies, 🧩 Runtime

Runs the same on: 💻 Laptop → 🧪 Test Server → ☁️ Cloud

Containers ensure consistency, isolation, and portability, which makes them perfect for modern software delivery.

Containers vs. Virtual Machines (VMs)

At first glance, containers and virtual machines might look similar - both run applications in isolated environments.

But under the hood, they work very differently.

| Aspect | Virtual Machines (VMs) | Containers |

|---|---|---|

| Weight | Heavy (each VM includes an OS) | Lightweight (share host OS kernel) |

| Startup Time | Minutes | Seconds |

| Resource Usage | High | Very efficient |

| Isolation Level | Full hardware-level isolation | Process-level isolation |

| Portability | Limited | Extremely portable |

| Use Case | Ideal for full OS separation | Ideal for running microservices |

Containers are like tiny, efficient packages compared to bulky VMs.

They share the same host operating system, which means you can run hundreds of containers on a single machine - where you might only fit a handful of VMs.

Why Containers Changed Everything

Containers revolutionized software development because they:

- Enable consistent environments across development, testing, and production.

- Simplify continuous integration and delivery (CI/CD).

- Allow applications to be broken into microservices, each deployed independently.

- Scale easily - you can spin up or shut down containers within seconds.

- Reduce costs by using resources more efficiently.

In short, containers made DevOps faster, more reliable, and cloud-ready.

But… as teams began using containers at scale, another problem emerged.

Imagine trying to manage hundreds of containers manually - ensuring each one is running, scaling, updating, and talking to others correctly. It’s like trying to conduct an orchestra with hundreds of instruments, all playing on their own.

That’s exactly why Kubernetes was born - to become the orchestrator of containers.

Learn Containers the Hands-On Way

The best way to understand containers is to use them.

At KodeKloud, you can learn Docker - the world’s most popular container tool - through guided, interactive labs that run directly in your browser.

Start here:

- Docker for Absolute Beginners - Learn how containers work, step by step.

- Docker Labs (Free) - Practice building, running, and managing containers instantly.

Next, we’ll look at the challenge that led to Kubernetes’ creation - what happens when containers multiply and things get complicated.

The Problem Before Kubernetes - When Containers Got Out of Hand

Containers were a breakthrough for developers and operations teams alike.

They made applications portable, lightweight, and easy to run anywhere.

But with that success came a new challenge - scale.

At first, managing a few containers manually wasn’t hard. You could start them with simple commands like docker run, stop them when not needed, and redeploy when necessary.

But what happens when your application grows from 3 containers to 300 - or even 3,000?

That’s when the real chaos begins.

When Containers Multiply

Imagine your application is built using a microservices architecture - one container for the frontend, another for the backend, one for the database, and many others for supporting services.

Now imagine:

- A container crashes - who restarts it automatically?

- Traffic spikes - how do you spin up more containers instantly to handle the load?

- You release an update - how do you replace containers safely without downtime?

- You need to deploy across multiple servers or even clouds - how do you coordinate that?

Manually managing all of this with scripts or Docker commands quickly becomes impossible. The more containers you add, the harder it is to track, monitor, and keep them running smoothly.

You’re no longer managing applications - you’re managing infrastructure chaos.

The Need for Orchestration

This is where the concept of container orchestration was born.

Just like a music conductor coordinates dozens of instruments to play in harmony, an orchestrator coordinates all your containers - deciding when to start, stop, scale, or move them around based on demand and system health.

An orchestration system should be able to:

- Automatically deploy containers across multiple machines.

- Restart failed containers (self-healing).

- Distribute traffic evenly (load balancing).

- Scale up or down automatically based on usage.

- Manage configurations, secrets, and updates safely.

Developers and DevOps teams needed something that could automate container management so they could focus on building, not babysitting, applications.

The Birth of Kubernetes

To solve these growing pains, Google - the company that had already been running containers at massive scale internally - open-sourced its internal orchestration system in 2014.

That system became Kubernetes (pronounced koo-ber-net-ees), often abbreviated as K8s. The name comes from the Greek word for helmsman or pilot - the one who steers the ship.

And that’s exactly what Kubernetes does:

It steers your containers, ensuring they run efficiently, reliably, and automatically across your entire infrastructure.

Today, Kubernetes is maintained by the Cloud Native Computing Foundation (CNCF) and has become the industry standard for container orchestration - used by companies like Netflix, Spotify, Shopify, and nearly every major cloud provider.

Before and After Kubernetes (At a Glance)

| Challenge | Before Kubernetes | With Kubernetes |

|---|---|---|

| Container crashes | Manual restarts | Automatic self-healing |

| Scaling demand | Manual scripting | Auto-scaling |

| Updates | Downtime during redeployments | Rolling updates and rollbacks |

| Load balancing | Manual setup | Built-in Service abstraction |

| Multi-server management | Complex and error-prone | Cluster-level orchestration |

Kubernetes became the answer to one question every DevOps engineer faced:

“How do I manage containers at scale without losing my sanity?”

Now that we know why Kubernetes exists, let’s understand what it actually is and how it orchestrates containers behind the scenes.

What is Kubernetes?

By now, we’ve seen why containers revolutionized how applications are built and why managing them at scale became nearly impossible without orchestration.

That’s where Kubernetes comes in - the system designed to make managing containers automatic, scalable, and reliable.

Kubernetes in Simple Terms

Kubernetes (K8s) is an open-source platform that automates the deployment, scaling, and management of containerized applications.

In simpler words:

Kubernetes is the manager that ensures all your containers are running exactly how and where they should - automatically.

You tell Kubernetes what you want (for example, “run three copies of this web app”), and it figures out how to make that happen.

- If one container fails, Kubernetes restarts it.

- If traffic increases, it adds more.

- If a node crashes, it reschedules containers elsewhere.

It’s like a self-driving system for your infrastructure - constantly monitoring and adjusting to keep your applications healthy and available.

Kubernetes as a Declarative System

Kubernetes works on a declarative model, meaning you describe the desired state, and Kubernetes makes sure your system matches it.

For example, you might define:

“I need 3 Pods of my frontend app running at all times.”

Kubernetes continuously checks - and if only 2 are running, it automatically creates a third to match your defined state.

This principle of desired vs. current state is what makes Kubernetes powerful and resilient.
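To make the desired vs. current state idea concrete, here is a minimal Python sketch of a reconciliation pass. It is a toy model, not Kubernetes code: the `Cluster` class, the `reconcile` function, and the Pod names are all made up for illustration.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Cluster:
    """Toy stand-in for cluster state; not a real Kubernetes API."""
    running_pods: list[str] = field(default_factory=list)
    _ids: count = field(default_factory=count, repr=False)

    def start_pod(self, app: str) -> None:
        self.running_pods.append(f"{app}-{next(self._ids)}")

    def stop_pod(self) -> None:
        self.running_pods.pop()


def reconcile(cluster: Cluster, app: str, desired: int) -> None:
    """Run one pass that moves the current state toward the desired state."""
    current = len(cluster.running_pods)
    for _ in range(desired - current):   # too few Pods: create the missing ones
        cluster.start_pod(app)
    for _ in range(current - desired):   # too many Pods: remove the extras
        cluster.stop_pod()


cluster = Cluster()
reconcile(cluster, "frontend", desired=3)   # initial rollout: 0 -> 3 Pods
cluster.running_pods.pop(0)                 # simulate one Pod crashing
reconcile(cluster, "frontend", desired=3)   # the next pass repairs the gap
print(cluster.running_pods)
```

The important part is that `reconcile` never needs to know why a Pod disappeared. It only compares two numbers and closes the gap, which is roughly how the declarative model stays resilient.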

Core Building Blocks of Kubernetes

At its core, Kubernetes is made up of a few key components that work together inside a cluster - a collection of machines (physical or virtual) managed as one unit.

Cluster

The cluster is the full Kubernetes environment. It’s divided into two main parts:

- The Control Plane - makes decisions about scheduling, scaling, and overall cluster management.

- The Worker Nodes - where your applications actually run.

Node

A node is a machine (or virtual machine) inside your cluster. Each node runs the services needed to host and manage containers - including the Kubelet (which talks to the control plane) and the Kube-Proxy (which handles networking).

Pod

A Pod is the smallest deployable unit in Kubernetes - a wrapper around one or more containers that work together as a single unit. If you think of containers as rooms, a Pod is like the apartment that holds them together.

Each Pod gets its own IP address and is scheduled by Kubernetes onto a node.

Deployment

A Deployment defines how many Pods you want, what version of your app to run, and how updates should happen. It makes scaling and rolling updates effortless.

Service

A Service ensures your Pods are accessible - even if they’re replaced or rescheduled. It acts as a stable connection point for communication between users or other apps.

A Quick Example

Let’s say you have a simple web app. You define in Kubernetes:

- A Deployment to run 3 replicas of your app.

- A Service to make the app reachable via a fixed address.

From there, Kubernetes automatically:

- Creates and places Pods on available nodes.

- Restarts them if one fails.

- Balances traffic between them.

- Scales them up or down as needed.

You never have to manually log into a server or restart a container again - Kubernetes handles it all.
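As a rough illustration of what those two definitions contain, the Python sketch below builds a Deployment and a Service as plain dictionaries and prints them as JSON, a format kubectl accepts alongside YAML. The app name `web` and the image `nginx:1.27` are placeholder assumptions, not values from this guide.

```python
import json

APP = "web"            # hypothetical app name
IMAGE = "nginx:1.27"   # hypothetical container image
labels = {"app": APP}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": APP},
    "spec": {
        "replicas": 3,                          # desired number of Pods
        "selector": {"matchLabels": labels},    # which Pods this Deployment owns
        "template": {                           # blueprint for each Pod
            "metadata": {"labels": labels},
            "spec": {
                "containers": [
                    {"name": APP, "image": IMAGE, "ports": [{"containerPort": 80}]}
                ]
            },
        },
    },
}

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": APP},
    "spec": {
        "selector": labels,                          # route to Pods with these labels
        "ports": [{"port": 80, "targetPort": 80}],   # Service port -> container port
    },
}

# A "List" object lets both resources live in one JSON document.
manifest = {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}
print(json.dumps(manifest, indent=2))
```

Saved to a file, output like this could be handed to kubectl apply -f. Note that the Service finds its Pods purely through the shared label, not through any direct reference to the Deployment.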

How Kubernetes Works - The Big Picture

Now that we know what Kubernetes is, let’s look at how it actually works behind the scenes. Kubernetes isn’t a single program - it’s a system of coordinated components that talk to each other to keep your applications running smoothly.

At a high level, a Kubernetes cluster consists of two main parts:

- The Control Plane, which makes all the decisions.

- The Worker Nodes, which do all the work.

Think of it like a company:

- The Control Plane is management - planning, monitoring, and deciding what needs to happen.

- The Worker Nodes are the employees - doing the actual work of running your applications.

The Control Plane - The Brain of Kubernetes

The Control Plane runs the components that make global decisions about the cluster. It ensures everything is in sync with the desired state you defined (remember: declarative model).

Here are its key parts:

1. API Server

The API Server is the main entry point into Kubernetes - it’s how you (and the system) communicate with the cluster. Every command you run (like kubectl get pods) talks to the API Server.

Think of it as the “front desk” - every request goes through here before being passed to the right department.
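Under the hood, a command like kubectl get pods becomes an HTTP request to a REST path on the API Server. The small Python sketch below shows how those paths are laid out for namespaced resources; the helper `resource_path` is a hypothetical name used only for this example.

```python
def resource_path(resource: str, namespace: str = "default",
                  group: str = "", version: str = "v1") -> str:
    """Build the REST path for a namespaced resource collection.

    Core resources (Pods, Services) live under /api, while grouped
    resources (for example Deployments in the "apps" group) live under /apis.
    """
    prefix = f"/apis/{group}/{version}" if group else f"/api/{version}"
    return f"{prefix}/namespaces/{namespace}/{resource}"


print(resource_path("pods"))
# /api/v1/namespaces/default/pods
print(resource_path("deployments", group="apps"))
# /apis/apps/v1/namespaces/default/deployments
```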

2. etcd

etcd is the database of Kubernetes - it stores the entire cluster state, including configurations, nodes, and workloads. If Kubernetes were a brain, etcd would be its memory.

3. Scheduler

The Scheduler decides where each Pod should run. It looks at available nodes, their resources, and requirements, then assigns Pods accordingly.

Example: If Node A is overloaded, the Scheduler will place the next Pod on Node B.
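The Scheduler's decision can be pictured as two phases: filter out nodes that cannot fit the Pod, then score the rest. The Python sketch below is a simplified model under that assumption. The `Node` class, the node sizes, and the "most free CPU wins" scoring rule are illustrative choices, not the real scheduler's algorithm.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_free: float   # free CPU cores
    mem_free: int     # free memory in MiB


def pick_node(nodes: list[Node], cpu_request: float, mem_request: int) -> Optional[Node]:
    # Filter: drop nodes that cannot fit the Pod's resource requests.
    feasible = [n for n in nodes
                if n.cpu_free >= cpu_request and n.mem_free >= mem_request]
    if not feasible:
        return None  # no node fits, so the Pod would stay Pending
    # Score: prefer the node with the most CPU left after placement,
    # using leftover memory as a tie-breaker (an illustrative rule).
    return max(feasible,
               key=lambda n: (n.cpu_free - cpu_request, n.mem_free - mem_request))


nodes = [
    Node("node-a", cpu_free=0.2, mem_free=512),    # overloaded
    Node("node-b", cpu_free=3.0, mem_free=4096),
    Node("node-c", cpu_free=1.5, mem_free=8192),
]
chosen = pick_node(nodes, cpu_request=0.5, mem_request=1024)
print(chosen.name if chosen else "Pending")   # node-b
```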

4. Controller Manager

The Controller Manager is the “automation engine” that ensures the cluster always matches the desired state. If a Pod fails or a node goes offline, it reacts immediately to fix the issue - like a supervisor making sure every job is done.

The Worker Nodes - The Hands of Kubernetes

Worker Nodes are where your applications actually run. Each node hosts Pods, but also runs a few key components that help Kubernetes manage those Pods effectively.

1. Kubelet

The Kubelet is an agent that runs on every node. It constantly communicates with the Control Plane, reporting what’s happening locally and making sure Pods are running as instructed.

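If a container crashes, the Kubelet restarts it according to the Pod’s restart policy. If a whole Pod or node is lost, the Control Plane sees it through these status reports - and the Controller Manager steps in to create a replacement.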

2. Kube-Proxy

The Kube-Proxy handles network communication inside the cluster. It ensures traffic is properly routed between Pods and Services, no matter which node they’re running on.

3. Container Runtime

This is the engine that actually runs your containers - usually containerd or CRI-O. Docker Engine used to be the common choice, but Kubernetes removed its built-in Docker integration (dockershim) in version 1.24 and now talks to runtimes through the Container Runtime Interface (CRI). The runtime pulls images, starts containers, and manages their lifecycle under the Kubelet’s direction.

How It All Works Together (Simplified Flow)

Here’s what happens when you deploy an app in Kubernetes:

- You apply a Deployment (YAML or kubectl) - telling Kubernetes your desired state.

- The API Server receives your request.

- The Scheduler decides which node(s) should run the required Pods.

- The Kubelet on those nodes creates the Pods and starts containers.

- The Controller Manager and etcd continuously monitor and store the system’s state.

- The Kube-Proxy routes Service traffic to the right Pods so everything can communicate efficiently.

If something fails, Kubernetes detects it - and automatically heals the system.

Think of it like air traffic control: the system runs continuously, ensuring flights (containers) are always in the air and on schedule.

Now that you understand how Kubernetes works behind the scenes, let’s explore its core concepts - the building blocks that define how apps are deployed and managed inside a cluster.

Core Kubernetes Concepts (Explained Simply)

Now that you understand the big picture of how Kubernetes works behind the scenes, it’s time to explore the core building blocks that you interact with as a user. These are the objects you define, deploy, and manage to bring your applications to life inside a Kubernetes cluster.

1. Pods - The Smallest Unit of Work

A Pod is the smallest deployable object in Kubernetes - the building block of everything you run. Each Pod wraps one or more containers that need to work together (for example, an app container and a helper container).

Think of a Pod as a single room where all containers inside share the same space, network, and storage.

Pods are ephemeral - if one fails, Kubernetes automatically replaces it. You never manage Pods directly for scaling or updates; other controllers handle that for you.

🧠 Try it: Launch your first Pod in the Kubernetes Labs and see it come alive instantly in your browser.

2. ReplicaSets - Keeping the Right Number of Pods Alive

A ReplicaSet ensures that the specified number of Pods is always running. If a Pod crashes or is deleted, the ReplicaSet creates a new one automatically.

Think of it as a safety officer - always checking and restoring the correct number of workers (Pods) in your system.

While ReplicaSets are useful, you rarely create them manually - they are managed automatically through Deployments (which we’ll cover next).

3. Deployments - The Smart Way to Manage Pods

A Deployment is one of the most common Kubernetes objects. It defines what to run, how many copies to run, and how to update them safely.

You describe your app’s desired state in a YAML file - for example, “Run 3 replicas of my web app” - and Kubernetes ensures that reality matches your request.

If you release a new version, Deployments perform rolling updates without downtime.

Think of a Deployment as your app manager - handling new releases, restarts, and scaling with zero manual effort.

🎓 Learn hands-on: Practice creating Deployments in the Kubernetes for Absolute Beginners Course.

4. Services - Connecting Everything Together

Pods are temporary - they can come and go at any time. That means their IP addresses constantly change. So, how do other apps or users connect to them?

That’s where Services come in. A Service provides a stable network endpoint that routes traffic to the right Pods, no matter how many times they restart or move.

Imagine a Service as a front desk that always knows which rooms (Pods) are currently available.

There are different types of Services - like ClusterIP (internal only), NodePort (external, via a port opened on every node), and LoadBalancer (external, via a cloud provider’s load balancer).
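A Service finds its Pods by label, not by IP address. The Python sketch below models that idea with made-up Pod names and IPs. The round-robin distribution is a simplification, since how traffic is actually spread depends on how the cluster's proxy is configured.

```python
from itertools import cycle

# Hypothetical Pods; names, IPs, and labels are illustrative only.
pods = [
    {"name": "web-1", "ip": "10.0.0.11", "labels": {"app": "web"}},
    {"name": "web-2", "ip": "10.0.0.12", "labels": {"app": "web"}},
    {"name": "db-1", "ip": "10.0.0.21", "labels": {"app": "db"}},
]


def endpoints(selector: dict[str, str], pods: list[dict]) -> list[str]:
    """Return the IPs of Pods whose labels contain every selector pair."""
    return [p["ip"] for p in pods
            if all(p["labels"].get(k) == v for k, v in selector.items())]


backends = endpoints({"app": "web"}, pods)
rr = cycle(backends)                       # simple round-robin over backends
targets = [next(rr) for _ in range(4)]     # where four requests would land
print(targets)
```

If a Pod is replaced and comes back with a new IP, only the endpoint list changes. Callers keep using the same Service address.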

🧩 Practice Tip: Try exposing your first Deployment with a Service using kubectl expose in KodeKloud’s labs.

5. ConfigMaps and Secrets - Managing App Configurations

Applications often need environment variables, API keys, or configurations. Kubernetes helps manage these cleanly using ConfigMaps and Secrets.

- ConfigMaps store non-sensitive data like URLs or environment variables.

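- Secrets store sensitive data like passwords or tokens. By default their values are only base64-encoded, not encrypted, so access controls and encryption at rest need to be set up separately.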

These keep your container images clean and your configurations dynamic.

Think of them as notebooks - one for general notes (ConfigMaps) and one locked in a safe (Secrets).
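One detail worth seeing in practice: values in a Secret's data field are base64-encoded, which is an encoding and not encryption. The Python sketch below shows that anyone who can read the Secret can decode it. The Secret name and password are hypothetical.

```python
import base64

password = "s3cr3t-pass"   # hypothetical value, for illustration only

# Values under "data" in a Secret manifest are base64-encoded strings.
encoded = base64.b64encode(password.encode("utf-8")).decode("ascii")

secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-credentials"},   # hypothetical name
    "type": "Opaque",
    "data": {"password": encoded},
}

# Decoding needs no key at all, which is why base64 is not encryption.
decoded = base64.b64decode(secret["data"]["password"]).decode("utf-8")
print(decoded == password)   # True
```

This is why restricting who can read Secrets, and enabling encryption at rest, matters in real clusters.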

6. Namespaces - Organizing Your Cluster

A Namespace acts like a folder or workspace inside your cluster, helping you organize resources logically. You might have separate namespaces for development, testing, and production - all inside the same cluster.

Namespaces also allow you to apply resource quotas, control access, and avoid name conflicts between teams or environments.

Think of a Namespace as a mini-cluster within your larger cluster - isolated but connected.

7. Ingress (Bonus for Beginners)

While Services expose apps within the cluster, Ingress allows you to expose them to the outside world using clean URLs (like https://myapp.com). It’s a layer that routes traffic based on hostnames or paths, often combined with TLS for HTTPS.

Think of Ingress as a smart gatekeeper - directing users to the right service through well-defined routes.
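The routing idea can be sketched in a few lines of Python. The hostnames, paths, and Service names below are hypothetical, and plain string-prefix matching is a simplification of how real Ingress controllers match paths.

```python
from typing import Optional

# (host, path prefix, backend Service) - all hypothetical examples.
RULES = [
    ("myapp.com", "/api", "api-service"),
    ("myapp.com", "/", "web-service"),
    ("admin.myapp.com", "/", "admin-service"),
]


def route(host: str, path: str) -> Optional[str]:
    """Pick the backend whose host matches and whose prefix is the longest match."""
    matches = [(prefix, svc) for h, prefix, svc in RULES
               if h == host and path.startswith(prefix)]
    if not matches:
        return None   # no rule applies
    return max(matches, key=lambda m: len(m[0]))[1]


print(route("myapp.com", "/api/users"))    # api-service
print(route("myapp.com", "/about"))        # web-service
print(route("admin.myapp.com", "/login"))  # admin-service
```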

Now that you understand the fundamental building blocks of Kubernetes, let’s explore why it’s become the go-to platform for modern DevOps - and the real-world benefits it brings to teams and businesses.

Why Use Kubernetes? (Key Benefits Explained Simply)

By now, you know what Kubernetes is and how it organizes your applications into Pods, Deployments, and Services. But what truly makes Kubernetes so valuable - and why is it considered the backbone of modern DevOps?

The answer lies in how Kubernetes automates nearly every painful part of running software at scale.

Let’s break down the key reasons teams and companies rely on it every day.

1. Automated Scaling - Grow or Shrink on Demand

Traffic spikes are unpredictable - and so are quiet periods. With a Horizontal Pod Autoscaler (HPA) configured, Kubernetes automatically scales your applications up or down based on CPU, memory, or custom metrics.

That means:

- When traffic surges, Kubernetes spins up more Pods.

- When demand drops, it scales them down to save resources.

Think of it as your infrastructure’s auto-pilot - always matching capacity to real-time needs.

This ensures efficiency, performance, and cost savings - especially in cloud environments where you pay for what you use.
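The scaling decision itself is simple arithmetic. The Horizontal Pod Autoscaler documentation describes the core rule as desired replicas = ceil(current replicas × current metric / target metric). The Python sketch below applies that rule; the function name, the min/max bounds, and the sample numbers are assumptions for illustration, and details such as tolerance and stabilization windows are left out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Apply the basic autoscaling ratio, clamped to the allowed range."""
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


# 3 Pods averaging 90% CPU against a 60% target -> scale up.
print(desired_replicas(3, current_metric=90, target_metric=60))   # 5
# 5 Pods averaging 20% CPU against a 60% target -> scale down.
print(desired_replicas(5, current_metric=20, target_metric=60))   # 2
```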

2. Self-Healing - Applications That Fix Themselves

In traditional environments, when a server or container fails, someone has to fix it manually. Kubernetes changes that.

It continuously monitors Pods and automatically:

- Restarts failed containers,

- Replaces unresponsive Pods, and

- Reschedules workloads on healthy nodes.

Kubernetes is like a 24/7 system engineer that never sleeps, always keeping your apps alive.

This makes applications highly resilient - even in the face of hardware or network failures.

3. Seamless Updates and Rollbacks

Deploying updates without downtime is a common DevOps challenge.

Kubernetes simplifies this with rolling updates - gradually replacing old Pods with new ones while keeping your application available.

And if something goes wrong? A single command rolls everything back to the previous stable version.

It’s like a time machine for your deployments - one click to move forward or back.

This reliability makes continuous delivery pipelines much safer and faster.
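To see why a rolling update avoids downtime, here is a small Python simulation. It is a toy model that assumes new Pods become ready instantly and uses absolute counts for the surge and unavailability limits; the function name and defaults are illustrative, not Kubernetes defaults.

```python
def rolling_update(replicas: int, max_surge: int = 1,
                   max_unavailable: int = 0) -> list[tuple[int, int]]:
    """Return (old Pods, new Pods) after each step of a simulated rollout."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Start new Pods without exceeding replicas + max_surge in total.
        start = min(replicas - new, replicas + max_surge - (old + new))
        new += max(0, start)
        # Retire old Pods while keeping at least replicas - max_unavailable.
        stop = min(old, (old + new) - (replicas - max_unavailable))
        old -= max(0, stop)
        steps.append((old, new))
    return steps


steps = rolling_update(3)
print(steps)   # [(3, 0), (2, 1), (1, 2), (0, 3)]
```

At every recorded step the total stays at three Pods, so the application keeps serving traffic while versions are swapped one at a time.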

4. Portability Across Any Environment

Kubernetes runs anywhere - on your laptop, on-premise servers, or any major cloud provider. You can move workloads freely between environments without changing your app’s configuration.

Think of Kubernetes as a universal adapter - it abstracts away the underlying infrastructure, so your app runs consistently everywhere.

This freedom is especially valuable for hybrid or multi-cloud setups.

5. Improved Resource Efficiency and Cost Control

Kubernetes intelligently schedules workloads based on available CPU, memory, and node conditions. Instead of overprovisioning servers, it ensures every node is used optimally.

It’s like a smart city planner - placing workloads in the best possible location for performance and efficiency.

As a result, companies reduce waste, cut cloud costs, and improve system performance.

6. Consistent Environments for Dev, Test, and Production

Every environment in Kubernetes is defined as code - meaning your development, staging, and production setups are identical.

No more “it works on my machine” problems - your app behaves the same everywhere.

This consistency strengthens CI/CD pipelines and builds confidence during releases.

7. Ecosystem and Extensibility

Kubernetes isn’t just a platform - it’s an ecosystem. You can extend it with hundreds of plugins, operators, and integrations for networking, storage, security, and observability.

From Helm for package management to Prometheus for monitoring, Kubernetes seamlessly fits into every DevOps workflow.

It’s like a modular control center - powerful on its own, and limitless when customized.

At a Glance: What Kubernetes Solves

| Challenge | Without Kubernetes | With Kubernetes |

|---|---|---|

| Container failures | Manual restarts | Automatic self-healing |

| Scaling | Scripting required | Auto-scaling based on demand |

| Updates | Downtime during releases | Rolling updates & rollbacks |

| Resource usage | Static allocation | Smart scheduling |

| Multi-cloud portability | Complex | Runs anywhere |

Next, let’s look at how Kubernetes is used in the real world and why it has become the engine behind today’s most scalable, cloud-native systems.

Kubernetes in Action - Real-World Use Cases

You’ve learned what Kubernetes does and why it’s powerful. But what does it actually look like in action?

Let’s explore how teams and organizations use Kubernetes every day - from automating deployments to managing global-scale systems - and why it’s become the backbone of the cloud-native world.

1. Continuous Integration and Continuous Delivery (CI/CD)

One of the most common uses of Kubernetes is in automating software delivery pipelines.

When developers push code:

- A CI/CD system (like Jenkins, GitHub Actions, or GitLab) builds and tests the app.

- Kubernetes then deploys it automatically to a test or production environment.

It ensures smooth rollouts, rollbacks, and environment consistency - all without manual intervention.

In short: Kubernetes makes continuous delivery actually continuous.

Try it yourself:

KodeKloud’s CI/CD, Docker & Kubernetes Learning Paths teach you how these tools work together in real environments.

2. Microservices Architecture

Kubernetes was practically built for microservices - applications broken into small, independent components.

Each microservice runs as its own Deployment or Pod, communicating through Services and APIs. This architecture makes scaling and updates painless - update one service without touching the rest.

Example: A retail app can run separate Pods for checkout, inventory, user management, and recommendations - all coordinated seamlessly by Kubernetes.

KodeKloud Lab Tip:

Experiment with microservices deployment in the Kubernetes Hands-On Labs - you’ll see how Services keep everything connected.

3. Cloud-Native and Hybrid Deployments

Kubernetes runs anywhere - in the cloud, on-premise, or both. This flexibility allows companies to adopt hybrid and multi-cloud strategies combining AWS, Azure, and GCP workloads into a single manageable platform.

Example: A company might keep sensitive workloads on-prem while running web services in the cloud - all under one Kubernetes cluster.

This portability and standardization make Kubernetes a favorite among enterprises moving toward hybrid and edge computing.

4. Machine Learning and AI Workloads

Kubernetes isn’t just for web apps - it’s also used to train, deploy, and manage machine learning (ML) models at scale. It provides isolation, scalability, and GPU scheduling for data science workloads.

Example: ML engineers use Kubernetes to spin up multiple training jobs across GPU nodes - saving time and optimizing cost.

Kubernetes tools like Kubeflow and Ray are now standard for ML pipelines - giving teams DevOps-level control over AI workflows.

5. Infrastructure as Code (IaC) and Automation

Kubernetes reinforces the DevOps philosophy of infrastructure as code - everything, from deployments to networking, is defined in YAML files. That means your entire application setup can be version-controlled, reviewed, and redeployed anywhere.

Think of it as GitOps in action - where your code repository defines both your app and the infrastructure it runs on.

KodeKloud’s Terraform and Kubernetes labs are perfect for practicing this automation end-to-end.

6. Multi-Tenant and SaaS Platforms

For SaaS companies, Kubernetes enables multi-tenancy - hosting multiple customers or environments within a single cluster using Namespaces and resource quotas.

Each customer’s environment stays isolated but runs on shared infrastructure, cutting costs while maintaining control.

Example: A SaaS CRM platform might run separate Namespaces per client, each with its own resources and services - all orchestrated under Kubernetes.

7. Monitoring and Observability

Kubernetes integrates seamlessly with modern monitoring tools like Prometheus, Grafana, and ELK stacks. These tools collect real-time metrics from Pods and nodes, helping teams visualize system health and catch issues before they escalate.

With Kubernetes, monitoring becomes proactive - not reactive.

Try setting up Prometheus and Grafana in KodeKloud’s observability labs to see your cluster metrics live.

8. Real-World Adoption

Kubernetes isn’t just a trend - it’s powering the world’s biggest digital platforms:

- Spotify runs workloads across multiple clusters to manage millions of concurrent users.

- Airbnb uses Kubernetes for elastic scaling during high-traffic seasons.

- Netflix relies on Kubernetes for consistent deployments across thousands of services.

From small startups to global enterprises, Kubernetes is the invisible engine behind the apps we use every day.

Now that you’ve seen how Kubernetes is used in the real world, let’s talk about how you can start learning it step by step - even if you’re brand new to the ecosystem.

How to Learn Kubernetes (Step-by-Step Path for Beginners)

Kubernetes can look intimidating at first - YAML files, clusters, Pods, controllers - there’s a lot to take in. But with the right plan and the right platform, it becomes one of the most rewarding skills you’ll ever master.

Here’s a step-by-step roadmap that thousands of learners have successfully followed through KodeKloud to go from complete beginner to certified Kubernetes professional.

Step 1: Learn the Prerequisites

Before diving into Kubernetes, build a solid foundation in:

- Linux basics - working with the terminal, file systems, and permissions.

- Networking fundamentals - IPs, DNS, ports, and load balancing.

- Containers (Docker) - how to build, run, and manage containers.

KodeKloud Resources:

These are short, hands-on courses with built-in labs - perfect to get comfortable before touching Kubernetes.

Step 2: Start with Kubernetes Fundamentals

Once you’re ready, start with the basics - understanding Pods, Deployments, Services, and Namespaces through guided examples.

🎯 Goal: Get familiar with how applications run inside a Kubernetes cluster.

KodeKloud Course:

👉 Kubernetes for the Absolute Beginners (Hands-On)

You’ll learn through live environments - not slides - with instant feedback after every lab.

Step 3: Practice Daily Using Interactive Labs

The secret to mastering Kubernetes isn’t memorization - it’s muscle memory.

Practice real scenarios in browser-based labs where you can break, fix, and experiment safely.

💻 KodeKloud Studio:

Explore free labs like:

- Launch your first Pods and Services.

- Deployments & Scaling Labs - Practice auto-scaling and rollouts.

- Rolling & Rollback Updates Labs - Practice deploying updates and rolling them back.

You’ll start thinking like a Kubernetes engineer - not just a learner.
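For a flavour of what the scaling labs involve, here is a minimal HorizontalPodAutoscaler sketch. It assumes a Deployment named `web` already exists (a hypothetical name), that its containers declare CPU requests, and that a metrics source such as metrics-server is installed in the cluster. The replica counts and the 70% target are arbitrary example values.

```yaml
# hpa.yaml - illustrative sketch; names and thresholds are assumptions
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-hpa
spec:
  scaleTargetRef:              # the workload to scale
    apiVersion: apps/v1
    kind: Deployment
    name: web                  # hypothetical Deployment name
  minReplicas: 2
  maxReplicas: 5
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # add Pods when average CPU exceeds ~70% of requests
```

Applying it with `kubectl apply -f hpa.yaml` and watching `kubectl get hpa` while generating load is a simple way to see auto-scaling react.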

Step 4: Move to Real-World Scenarios

Once the basics feel natural, start applying them in real-world challenges - just like a DevOps professional.

🎓 Recommended Practice Path:

- KodeKloud Engineer Platform (KKE) - handle real tasks in production-like systems.

This step bridges the gap between “learning Kubernetes” and “using Kubernetes like a pro.”

Step 5: Get Certified - The Kubeastronaut Journey

KodeKloud has structured the complete Kubernetes certification path, known as the Kubeastronaut Journey, which covers the five CNCF Kubernetes certifications:

| Level | Certification | Goal |
|---|---|---|
| Beginner | KCNA (Kubernetes and Cloud Native Associate) | Understand Kubernetes concepts & ecosystem |
| Administrator | CKA (Certified Kubernetes Administrator) | Learn to manage and troubleshoot clusters |
| Developer | CKAD (Certified Kubernetes Application Developer) | Design and deploy apps on Kubernetes |
| Security | CKS (Certified Kubernetes Security Specialist) | Secure clusters and workloads |
| Security basics | KCSA (Kubernetes and Cloud Native Security Associate) | Understand Kubernetes security frameworks |

Each course includes:

- Real exam-style labs and mock tests

- Visual explainers and step-by-step breakdowns

- Certification preparation tips from industry experts

🎓 Pro Tip: Don’t rush certifications. Instead, master the concepts through labs - the exams will then feel natural.

Step 6: Learn Visually and Stay Updated

Kubernetes evolves fast, with roughly three minor releases a year.

Stay ahead with KodeKloud’s free educational resources:

- 📘 Kubernetes Made Easy eBook - beginner-friendly reference guide.

- 🧾 Open DevOps 101 Repository - visual diagrams, cheat sheets, and infographics.

- ✉️ Bite-Sized Email Courses - like Kubernetes Made Easy and Linux Simplified for DevOps Engineers.

Consistency is key - even 30 minutes of practice a day will make you confident faster than you think.

Step 7: Build Your Portfolio and Apply Your Skills

Start showcasing what you’ve learned through small projects and GitHub repos.

For example:

- Deploy a sample web app using Pods, Deployments, and Services.

- Add monitoring using Prometheus and Grafana.

- Document your setup with screenshots and YAML files.
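A starter manifest for the first project idea might look like the sketch below. The names (`web`), the replica count, and the `nginx:1.27` image are placeholders chosen for illustration; swap in your own application image.

```yaml
# web.yaml - illustrative sketch of a Deployment plus a Service
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 2                  # run two identical Pods
  selector:
    matchLabels:
      app: web                 # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.27    # placeholder image
          ports:
            - containerPort: 80
          resources:
            requests:
              cpu: 100m        # a CPU request lets autoscalers compute utilization
---
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  selector:
    app: web                   # route traffic to Pods carrying this label
  ports:
    - port: 80                 # port exposed by the Service
      targetPort: 80           # port the container listens on
```

Running `kubectl apply -f web.yaml` creates both objects, and `kubectl get pods,svc` shows whether they came up. Committing a file like this alongside a short README is the kind of artifact that works well in a portfolio repo.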

Share your progress on LinkedIn or in the KodeKloud community - you’ll be surprised how many recruiters and peers notice!

Your Next Step

You don’t have to master Kubernetes overnight. Start small, practice often, and let KodeKloud guide you one lab at a time.

“The best way to learn Kubernetes is not to read about it - it’s to use it.”

Start your Kubernetes journey today:

👉 Join KodeKloud and unlock your first interactive Kubernetes lab in minutes.

Common Myths About Kubernetes

Kubernetes can seem complex when you first hear about it. There’s a lot of buzz around containers, clusters, and automation, and that can lead to a few misconceptions. Let’s clear up some of the most common ones.

Myth 1: Kubernetes is only for large companies

Many beginners assume Kubernetes is something only big tech companies like Netflix or Google can use.

In reality, Kubernetes can work well for small teams and projects too - especially when scalability, automation, and microservices are part of the plan. You can start small with Minikube or Kind and grow over time.

Note: Kubernetes isn’t automatically the right choice for every product. If your app is small or simple, it might introduce unnecessary complexity and cost. The key is understanding your workload patterns, traffic scale, and growth goals before adopting it.

Myth 2: You need to be a developer to use Kubernetes

You don’t need to be a programmer to learn Kubernetes. It’s a platform for developers, DevOps engineers, and system administrators alike. Most tasks involve configuration and automation, not coding.

Note: A basic understanding of how applications run and how containers work is enough to get started. Many successful learners come from networking or operations backgrounds - not software engineering.

Myth 3: Kubernetes replaces DevOps

Kubernetes doesn’t replace DevOps - it enables it. It’s a tool that helps teams practice DevOps principles like automation, continuous delivery, and monitoring. Kubernetes makes DevOps smoother, but it doesn’t replace the culture or collaboration that defines DevOps itself.

Note: DevOps is the mindset. Kubernetes is just one of the best tools to make that mindset work in real systems.

Myth 4: Kubernetes is only for cloud environments

Kubernetes was designed to run anywhere - in the cloud, on-premises, or even on your laptop. This flexibility lets teams mix environments, manage hybrid setups, or gradually migrate to the cloud.

Note: Cloud-native doesn’t always mean “cloud-only.” Many organizations run Kubernetes in hybrid or edge environments, blending cloud speed with on-prem control.

Myth 5: Kubernetes is too difficult to learn

Kubernetes looks complex until you see it in action. The challenge usually comes from trying to learn without structure or practical labs. When you follow a clear, hands-on learning path, everything becomes easier to understand.

Note: The best way to learn Kubernetes is to practice daily, even for 20-30 minutes. KodeKloud’s guided labs and beginner-friendly courses make the process interactive and approachable.

Kubernetes mastery comes from doing, not memorizing.

Now that we’ve cleared up the myths, let’s wrap up with a short summary and a few frequently asked questions to help you take the next step with confidence.

Summary: Why Kubernetes is the Future of Cloud-Native

Kubernetes started as a solution to a simple problem - how to manage containers at scale - but it has grown into the foundation of modern software delivery.

Today, many organizations that build and ship applications at speed rely on Kubernetes to keep their systems reliable, scalable, and efficient. It has become the engine behind the cloud-native movement, transforming how teams deploy and operate applications across the world.

If you’ve followed this guide, you now understand:

- What containers are and why they changed everything

- The challenges that led to Kubernetes

- How Kubernetes works behind the scenes

- The core objects like Pods, Deployments, and Services

- The benefits that make it indispensable in DevOps

- And how to start learning it step by step

In short, Kubernetes brings together everything DevOps stands for - automation, scalability, reliability, and collaboration. It helps teams build faster, recover automatically, and adapt easily to change.

The Real Value of Learning Kubernetes

Learning Kubernetes is more than learning a tool. It’s understanding how modern systems run in the cloud, how microservices communicate, and how automation shapes the future of software. Whether you are a developer, sysadmin, or aspiring DevOps engineer, Kubernetes is the skill that connects it all.

Once you understand Kubernetes, you understand how the modern cloud works.

Your Next Step

Start your Kubernetes journey today with KodeKloud. With guided courses, interactive labs, and hands-on challenges, you’ll go beyond theory and learn how to build, deploy, and manage applications like a real engineer.

Start here:

Every expert started with their first Pod. Your journey begins today - one command at a time.

FAQs

Is Kubernetes the same as Docker?

No.

Docker is used to build and run containers. Kubernetes is used to manage many containers across multiple systems efficiently.

In short: Docker creates containers; Kubernetes organizes them.
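To make the split concrete, here is a minimal Pod sketch. It assumes an image called `my-app:1.0` (a hypothetical name) has already been built with Docker and is available to the cluster's nodes, for example through a registry. Kubernetes never builds the image; the manifest only references it and asks the cluster to run it.

```yaml
# pod.yaml - illustrative sketch; image name is a placeholder
apiVersion: v1
kind: Pod
metadata:
  name: my-app
spec:
  containers:
    - name: my-app
      image: my-app:1.0      # built elsewhere (e.g. with Docker); Kubernetes only pulls and runs it
      ports:
        - containerPort: 8080   # assumed application port
```

In practice a bare Pod like this is usually wrapped in a Deployment, so that Kubernetes can replace it if it fails.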

Is Kubernetes only for large companies?

No.

You can start locally using Minikube or Kind. That said, Kubernetes should fit your product's needs - if your app is small or simple, it might add unnecessary complexity and cost.

Can I run Kubernetes on my laptop?

Yes, you can.

Tools like Minikube, Kind, or Docker Desktop let you create a small local cluster and experiment without cloud resources.
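As one possible starting point with Kind, the config below describes a small two-node cluster. The file name and node layout are assumptions for illustration; a single-node cluster works without any config file at all.

```yaml
# kind-config.yaml - illustrative two-node local cluster
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
  - role: control-plane      # runs the API server, scheduler, and etcd
  - role: worker             # runs your application Pods
```

Assuming Docker and Kind are installed, `kind create cluster --config kind-config.yaml` brings the cluster up, and `kind delete cluster` removes it again.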

However, if you want to skip the setup and start learning instantly, the KodeKloud Kubernetes Playgrounds are even better. Everything is already configured and ready to use - no installation, no configuration, no dependencies. You can launch real clusters in your browser, break things, fix them, and truly learn how Kubernetes behaves in production-like environments.

👉 Try it here: KodeKloud Kubernetes Playgrounds

Is Kubernetes worth learning in 2025?

Absolutely.

It’s at the heart of DevOps, cloud-native infrastructure, and modern software engineering. Kubernetes is one of the most in-demand skills across DevOps, Cloud, and Platform Engineering roles.
