Highlights

- AI is now a competitive mandate, not an innovation experiment

- Talent gaps, not technology, are the #1 blocker to AI adoption

- 68% of organizations lack AI-ready skills, slowing cloud and platform initiatives

- AI transformation success is 70% driven by people and process

- Upskilling existing teams is faster, cheaper, and more sustainable than hiring

- AI-native DevAIOps teams deliver faster time-to-market and higher ROI

- Kubernetes and cloud-native platforms are foundational to GenAI at scale

- Enterprises must move from AI pilots to production-ready systems

- Multi-cloud strategy requires standardized platforms, not siloed teams

- Continuous learning is now a core business capability, not an HR initiative

Podcast Version Available

This article is also available as a concise, executive-level podcast episode exploring AI-native DevAIOps teams, multi-cloud strategy, and workforce transformation. The episode covers:

- Why AI + cloud-native convergence is a leadership mandate

- What prevents enterprises from scaling AI to production

- How AI-native DevOps teams outperform traditional models

- Why upskilling is faster and more effective than hiring

The convergence of artificial intelligence and cloud-native infrastructure is no longer a future vision; it's the competitive reality reshaping enterprise technology teams in every sector. As AI and cloud technologies become pervasive, organizations are moving toward AI-native teams aligned with DevAIOps, which brings DevOps practices together with AI-driven workflows. Industry research underscores why this transformation is imperative. According to the Linux Foundation's 2025 State of Tech Talent report, 94% of organizations expect AI to add significant value to their operations, yet fewer than half have the necessary AI skills in-house. In fact, 68% of companies lack AI/ML-skilled employees, contributing to a wider tech talent gap in areas like cloud and platform engineering. This skills deficit is now a major barrier to technology adoption for 44% of firms.

Forward-looking organizations recognize that building AI capabilities is not just a tech initiative; it’s a people initiative. “70% of AI transformation is determined by the people and processes supporting it,” says the Linux Foundation’s Clyde Seepersad. The AI revolution is a catalyst for human capital transformation: rather than simply acquiring new tools, companies must upskill and empower their workforce to use AI effectively. This has given rise to new roles (over half of organizations are expanding AI-specific roles, hiring AI/ML operations leads and AI product managers) and new workflows. In two-thirds of organizations, AI has “significantly changed how teams work”: developers now validate AI-generated code, AI fluency is expected of new hires, and many entry-level tasks are being automated.

As organizations navigate multi-cloud DevOps environments while integrating generative AI and agentic systems, a critical question emerges: How do you build teams capable of thriving in this increasingly complex landscape? The answer lies in creating AI-native, high-performing teams equipped with both cloud-native expertise and AI fluency, a transformation that demands strategic upskilling, hands-on experience, and a learning infrastructure designed for the era of DevAIOps.

Why AI-Native Dev Teams Matter Now

The data is unmistakable. According to the World Economic Forum's Future of Jobs Report 2025, 86% of businesses expect AI and automation to transform their operations by 2030, with 39% of existing skill sets becoming outdated between 2025 and 2030. Yet the gap between AI ambition and execution remains stark: over 85% of AI projects fail to reach production, and fewer than 10% of companies extract significant business value from AI investments.

The root cause? Organizations lack AI-literate technical leadership and teams capable of bridging the chasm between AI possibilities and production-ready implementations. As InfoQ's 2025 Cloud and DevOps Trends Report reveals, AI agents for cloud engineering show tremendous promise, but enterprise adoption is slowed by compliance and security concerns and by the absence of teams trained to deploy these systems safely and effectively.

Key takeaways from KubeCon NA 2025 and AI Native DevCon Fall 2025:

- The convergence of cloud-native + AI + agentic workflows is now mainstream.

- Multi-cloud orchestration must include intelligent/agentic workload delivery, not just containers.

- Teams must evolve: developer + agent, platform engineer + AI-ops, SRE + model monitoring.

- Upskilling your workforce in agentic workflows, spec-driven development, and multi-cloud AI orchestration is no longer optional.

In the words of the KubeCon NA 2025 wrap-up: “Organisations that embrace AI-first DevOps and build out intelligence engines that deliver adaptability, reliability, governance and speed will define the next decade.” For technology and talent leaders, the mandate is clear: now is the time to nurture your talent and infuse AI and multi-cloud expertise across your engineering organization. Treat upskilling and continuous learning as core business strategies, and partner with platforms that can accelerate this journey.

The DevAIOps Reality: Where AI Meets Cloud-Native Infrastructure

DevAIOps, the fusion of DevOps practices with AI-powered automation and intelligent operations, represents the next evolution of software delivery. According to recent industry research, 99% of organizations implementing DevOps report positive effects, with 74% experiencing enhanced software delivery speed and 70% reporting improved ROI within the first year.

Now, generative AI amplifies these gains. Organizations adopting AI in DevOps pipelines report 40-60% reductions in infrastructure setup time, 30% fewer failed deployments, and 20% improvements in build pipeline speeds. Gartner predicts that by 2025, AI-driven DevOps will reduce downtime costs by 40%, while 60% of teams already report real productivity gains from AI-augmented tools.

Yet these benefits remain out of reach for teams lacking practical AI and cloud-native fluency. As the Linux Foundation emphasizes, success with AI workloads hinges not only on data science expertise but also on cloud-native fluency: the ability to architect, deploy, orchestrate, monitor, secure, and operate distributed infrastructure at scale.

Cloud-native technologies power GenAI scalability, with 65% of organizations relying on cloud infrastructure to build and train models, and 50% using Kubernetes to manage GenAI inference tasks. The CNCF's State of Cloud Native Development report reveals that 36% of professional developers are already running ML/AI workloads on Kubernetes, with an additional 18% planning to.

This convergence demands teams skilled across the entire stack: Kubernetes orchestration, Infrastructure as Code (Terraform, Pulumi), GitOps workflows (ArgoCD, Flux), observability (Prometheus, Grafana, OpenTelemetry), service mesh architectures (Istio, Cilium), CI/CD automation, and AI model deployment frameworks (Kubeflow, KServe), all while maintaining security, compliance, and cost optimization across multi-cloud environments.

To harness multi-cloud’s benefits, modern IT and AI leaders must design teams and processes with a cloud-agnostic mindset. Best practices include using centralized delivery platforms and common tooling across clouds so that teams are not siloed by vendor. It’s vital to implement cloud-agnostic automation, for instance by standardizing on Kubernetes, container registries, and IaC for provisioning, so that moving between clouds or scaling to new regions doesn’t require retooling or duplicated effort. Site Reliability Engineering (SRE) practices further ensure that reliability and performance are maintained across this distributed landscape. High-performing DevOps/MLOps teams also treat AI/ML workloads as first-class citizens in the cloud: they version ML models and pipelines just like code and integrate them into a unified delivery workflow. In a multi-cloud world, this unified approach to architecture and automation is what separates agile organizations from those bogged down in complexity.
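A minimal Python sketch of the "standardize once, deploy to any cloud" idea, assuming the team keeps a single model-serving Deployment definition in Git and renders it for each target cluster. The registry URL, model name, version, replica counts, and cluster names are all hypothetical placeholders. In practice, tools such as Helm, Kustomize, or Argo CD ApplicationSets usually fill this role rather than a hand-written script.

```python
import json

# Hypothetical values for illustration only: the registry, model name,
# version, and cluster names are placeholders, not real resources.
REGISTRY = "registry.example.com/ml"
MODEL_NAME = "sentiment-model"
MODEL_VERSION = "1.4.2"  # pinned and reviewed in Git, like any code change

# Per-cluster differences are limited to a few parameters; everything else
# comes from the single shared template below.
CLUSTERS = {
    "aws-us-east": {"replicas": 3},
    "azure-west-europe": {"replicas": 2},
    "gcp-asia-southeast": {"replicas": 2},
}


def render_deployment(cluster: str, replicas: int) -> dict:
    """Render a standard Kubernetes apps/v1 Deployment for one cluster."""
    labels = {"app": MODEL_NAME, "model-version": MODEL_VERSION}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {
            "name": f"{MODEL_NAME}-server",
            "labels": {**labels, "target-cluster": cluster},
        },
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "inference",
                            # The image tag carries the model version, so the
                            # same artifact is deployed to every cloud.
                            "image": f"{REGISTRY}/{MODEL_NAME}:{MODEL_VERSION}",
                            "ports": [{"containerPort": 8080}],
                        }
                    ]
                },
            },
        },
    }


def render_all() -> dict:
    """Return one manifest per target cluster, keyed by cluster name."""
    return {name: render_deployment(name, cfg["replicas"]) for name, cfg in CLUSTERS.items()}


if __name__ == "__main__":
    for cluster, manifest in render_all().items():
        # kubectl accepts JSON manifests as well as YAML.
        print(f"# {cluster}")
        print(json.dumps(manifest, indent=2))
```

Because the model version lives in one place, promoting a new model to every cloud becomes a single reviewed change, which is the practical payoff of versioning models like code in a multi-cloud setup.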

What High-Performing AI-Native Dev Teams Look Like

High-performing teams in this landscape possess three critical capabilities:

1. Cloud-Native Mastery Across Multi-Cloud Platforms

Elite teams demonstrate expertise in container orchestration, Kubernetes cluster management (single and multi-cluster), platform engineering, and infrastructure automation. They deploy applications seamlessly across AWS, Azure, GCP, on-premises data centers, and edge locations while maintaining consistent governance, security policies, and observability.

2. AI-First Development and Operations Fluency

Beyond traditional DevOps, AI-native teams understand LLM architectures, prompt engineering, Retrieval Augmented Generation (RAG), Model Context Protocol (MCP), AI agents, vector databases, and MLOps pipelines. They integrate AI into code reviews, automated testing, deployments, monitoring, and incident response, transforming reactive operations into proactive, intelligent systems.

3. Production-Ready, Hands-On Experience

Theory alone doesn't build competence. High-performing teams gain fluency through real-world scenario practice: provisioning infrastructure, debugging production incidents, optimizing CI/CD pipelines, implementing GitOps at scale, and deploying AI agents in sandbox environments that mirror actual enterprise complexity.

Skilling Up for DevAIOps: Culture, Training, and High-Performance Teams

Building an AI-native, multi-cloud DevOps team is as much about people and culture as it is about tools. Research consistently shows that investing in talent yields outsized returns in the digital era. The 2025 State of Tech Talent report found that organizations making the biggest strides in AI are “treating upskilling as a core capability, not a side initiative.” In practical terms, this means fostering continuous learning, encouraging experimentation, and giving teams hands-on experience with emerging tech. Executives and engineering leaders must champion a culture where DevOps and AI skills development is ongoing; this is now a business strategy, not just an HR strategy.

Key focus areas for skilling high-performing DevAIOps teams include:

- Cloud & Kubernetes Mastery: Teams should be fluent in cloud-native architectures, Kubernetes orchestration, container security, and Infrastructure-as-Code across all major clouds. This provides the foundation for multi-cloud agility. It’s no coincidence that companies with well-trained cloud/K8s teams see results like 2× faster container deployments and dramatically reduced downtime.

- CI/CD, Automation & SRE Practices: Emphasize advanced CI/CD pipeline skills, automated testing, and SRE methodologies (monitoring, chaos engineering, performance tuning). With the right DevOps skills and training, organizations have reported 50% less downtime and 65% faster time-to-market when adopting modern platforms like Kubernetes.

- AI/ML and Data Literacy: Even if not every team member is a data scientist, understanding how to leverage AI/ML services is crucial. This ranges from using AI-driven analytics and AIOps tools to collaborating effectively with data science teams on MLOps. As AI becomes woven into products and processes, AI literacy is becoming a core competency (indeed, many companies now expect basic AI knowledge from incoming talent).

- DevSecOps & Governance: High-performing teams integrate security and compliance from the start. In AI-augmented pipelines, this includes knowing how to manage responsible-AI concerns such as hallucinations and bias, data governance, and the secure use of open-source AI models. Open collaboration is a strength: 40% of organizations now leverage open-source AI tools to accelerate adoption, and those with strong open-source cultures report higher retention and innovation.
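One concrete practice behind the secure use of open-source AI models is pinning each downloaded model artifact to a known checksum and refusing to load anything that doesn't match. The Python sketch below assumes the expected SHA-256 digest is published by the model provider and recorded in version control; the file name and contents in the demo are stand-ins, not a real model.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large model weights never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_artifact(path: Path, expected_sha256: str) -> None:
    """Raise ValueError if the file does not match the pinned digest."""
    actual = sha256_of(path)
    if actual != expected_sha256.lower():
        raise ValueError(
            f"Checksum mismatch for {path.name}: expected {expected_sha256}, got {actual}"
        )


if __name__ == "__main__":
    # Demo with a stand-in file; a real pipeline would compare against the
    # digest recorded alongside the model reference in version control.
    with tempfile.TemporaryDirectory() as tmp:
        model = Path(tmp) / "model.safetensors"
        model.write_bytes(b"example weights")
        pinned = hashlib.sha256(b"example weights").hexdigest()
        verify_model_artifact(model, pinned)  # matches, so no exception

        model.write_bytes(b"tampered weights")
        try:
            verify_model_artifact(model, pinned)
        except ValueError as err:
            print("Blocked:", err)
```

This is only one control: it catches tampered or corrupted files, but it says nothing about whether the model itself behaves safely, which is where governance and review processes come in.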

Perhaps the most encouraging finding is that organizations are doubling down on upskilling their existing people. In 2025, 72% of companies prioritized upskilling current staff (up from 48% just a year prior). This approach is not only faster (upskilling is 62% faster than hiring new talent) but also more effective, boosting retention and ensuring hard-won domain knowledge stays in-house. Certifications and structured learning paths play an important role here: 71% of organizations consider certifications important in hiring to validate skills. Building a high-performance team thus involves providing clear skill development roadmaps and incentives for continuous education (e.g., achieving cloud or Kubernetes certifications). It’s about enabling your people to grow with technology. As Frank Nagle of Harvard notes, “the AI revolution is not just a technology race, but a catalyst for human capital transformation; organizations need to build their AI workforce from within.” In practice, that means creating an environment where engineers constantly learn, experiment, and push the envelope, supported by leadership every step of the way.

The ROI of Building AI-Native DevOps Teams

Investing in AI-native, cloud-skilled teams delivers measurable, transformative ROI:

Productivity Gains - Organizations achieve up to 40% productivity improvements and 10% overall workforce efficiency gains from upskilled technical leadership.

Faster Time-to-Market - Elite-performing DevOps teams deploy 208 times more frequently than low performers, with 106 times faster lead time from commit to deploy, shortening the path from idea to revenue.

Cost Reduction - Mature DevOps practices yield 20-30% reductions in development and operational costs through automation, reduced downtime, and optimized resource utilization.

Quality and Reliability - 61% of organizations report enhanced deliverable quality after DevOps adoption, while AI-driven systems reduce deployment errors by 35% and increase release velocity by 50%.

Talent Retention - Upskilling slashes attrition costs (replacing an employee costs 50-200% of annual salary) while future-proofing teams against skill obsolescence.

AI Transformation Success - Product development teams following AI best practices achieve a median ROI of 55% on generative AI initiatives, compared to just 5.9% for unprepared organizations.
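Deployment frequency and lead time for changes, the two measures behind the deployment-frequency and lead-time comparisons above, are DORA (DevOps Research and Assessment) metrics, and a team can baseline its own numbers before and after upskilling. A rough Python sketch, assuming each production deployment is logged with the timestamp of its commit and of its release; the records are invented sample data, and real tooling typically measures lead time per change across many commits.

```python
from datetime import datetime, timedelta
from statistics import median

# Invented sample data: (commit time, deploy time) for each production deployment.
DEPLOYMENTS = [
    (datetime(2025, 11, 3, 9, 0), datetime(2025, 11, 3, 15, 0)),
    (datetime(2025, 11, 4, 10, 0), datetime(2025, 11, 5, 10, 0)),
    (datetime(2025, 11, 6, 8, 30), datetime(2025, 11, 6, 12, 30)),
    (datetime(2025, 11, 7, 13, 0), datetime(2025, 11, 7, 17, 0)),
]


def deployment_frequency(deploys, window_days: int) -> float:
    """Average number of production deployments per day over the window."""
    return len(deploys) / window_days


def median_lead_time(deploys) -> timedelta:
    """Median time from commit to running in production."""
    return median(deploy - commit for commit, deploy in deploys)


if __name__ == "__main__":
    print(f"Deployments per day: {deployment_frequency(DEPLOYMENTS, 7):.2f}")
    print(f"Median lead time: {median_lead_time(DEPLOYMENTS)}")
```

Tracking these two numbers over time gives a team its own evidence of whether training is paying off, rather than relying only on industry-wide comparisons.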

How KodeKloud Enables AI-Native DevOps Team Transformation

Building these capabilities at enterprise scale requires a comprehensive, hands-on learning ecosystem, which is precisely what KodeKloud delivers. Transforming your workforce for this AI + multi-cloud era may sound daunting, but platforms like KodeKloud are purpose-built to accelerate the process. KodeKloud has emerged as a leading enabler for organizations aiming to build high-performing cloud, DevOps, and AI teams. Its offerings address the very needs discussed above: hands-on skill development across DevOps, Cloud, Kubernetes, SRE, Platform Engineering, and emerging AI technologies.

With over 1.6 million active learners worldwide, 165+ technical courses, 1250+ hands-on labs, and production-ready playgrounds spanning AI, DevOps, Cloud, Kubernetes, SRE, Platform Engineering and more, KodeKloud provides the infrastructure enterprises need to transform their technical teams.

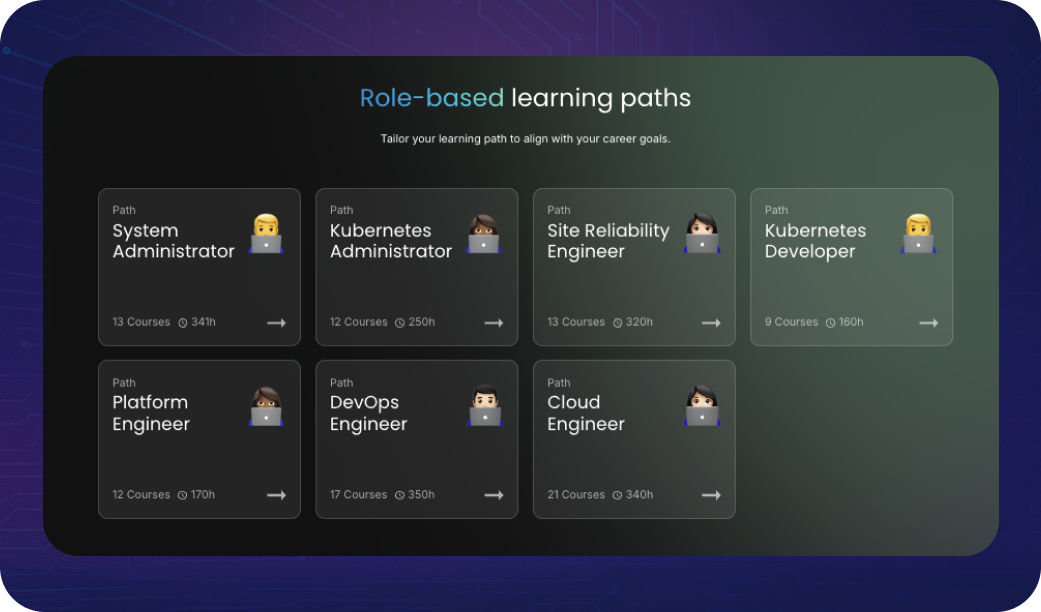

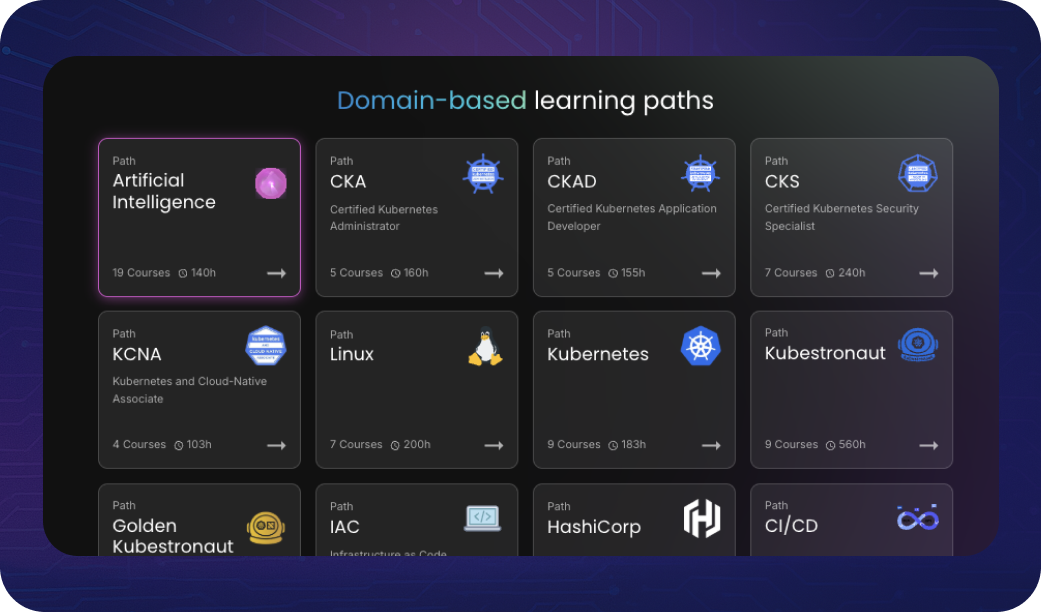

1. Extensive Learning Paths and Role-Based Skilling:

KodeKloud provides curated learning paths spanning DevOps, Cloud (AWS, Azure, GCP), Kubernetes (from KCNA to CKA/CKAD and beyond), Infrastructure-as-Code, Automation, and even AI/ML fundamentals. These paths are designed to take learners from foundational concepts to advanced, real-world proficiency. For example, the Kubestronaut learning path covers the core Kubernetes certifications (CKA, CKAD, CKS, etc.), while the Golden Kubestronaut program goes further, guiding professionals to master all CNCF certifications, including those for related tech like service mesh, CI/CD, and observability. These programs have created a community of Kubernetes experts recognized by the industry. Similarly, role-based paths (e.g., for Site Reliability Engineer, Platform Engineer, DevOps Engineer) ensure that training aligns with the team’s actual job roles. This role-focused, continuous learning approach helps enterprises build skills in a targeted way, whether you need your ops team to learn SRE practices or your developers to pick up Terraform and cloud security.

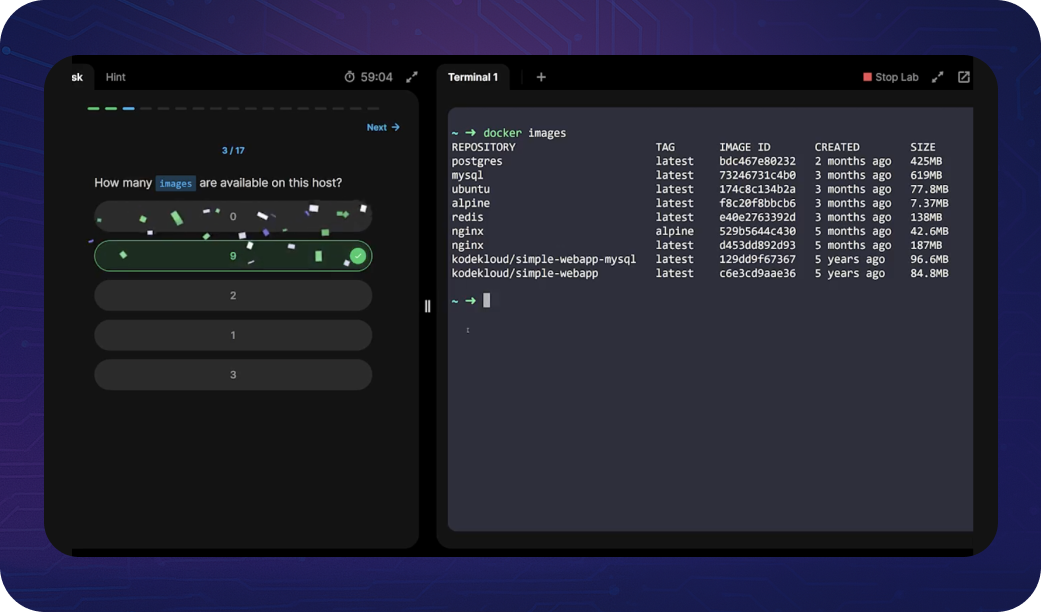

2. Hands-On Labs, Real Environments, and Playground Sandboxes:

A distinguishing feature of KodeKloud is its emphasis on learning by doing. The platform offers a rich array of interactive labs and cloud playgrounds that replicate real-world environments. Teams can experiment with Kubernetes clusters, spin up cloud instances on AWS/Azure/GCP, practice CI/CD pipelines with Jenkins, or manage infrastructure with Terraform, all in a safe, browser-based sandbox. These KodeKloud Playgrounds cover major tech stacks (containers, Kubernetes, Linux, DevOps tools, IaC, databases, etc.) and major cloud vendors, allowing learners to practice scenarios they’ll encounter on the job. Crucially, this hands-on experience builds true competency and confidence. As one user, a Director of Solutions Architecture, attested: “KodeKloud helped my staff upskill across Kubernetes, Git, Terraform, and Ansible. We’ve seen exponential uptake thanks to the excellent quality of the materials”. Another technology leader noted that after using KodeKloud, “our K8s talent pool is growing exponentially… Kubernetes certified in 3 weeks. My advice: take KodeKloud courses”. These testimonials highlight KodeKloud’s ability to rapidly turn education into tangible outcomes, fast-tracking teams to certification and on-the-job proficiency.

3. AI Integration and KodeKey Playground:

True to the AI-native theme, KodeKloud has introduced KodeKey, an all-in-one AI development playground. KodeKey lets developers and learners experiment with multiple cutting-edge large language models (LLMs), such as GPT variants, Anthropic’s Claude, and Google’s Gemini, using a single unified API key. This eliminates the friction of managing multiple AI providers and billing accounts. For an enterprise building AI into its DevOps processes, KodeKey serves as a “personal launchpad into the world of AI development and experimentation”. Teams can prototype AI-driven tools, compare model outputs, and practice prompt engineering in a secure sandbox. Importantly, KodeKey is designed for learning and prototyping (not production), aligning perfectly with upskilling goals. It’s one more way KodeKloud ensures your team is not only cloud- and DevOps-savvy but also comfortable integrating AI/LLM capabilities into projects (think LLM-powered integrations, AI-based code assistants, etc.). In combination with KodeKloud’s AI courses and labs, it lays the groundwork for adopting DevAIOps workflows.

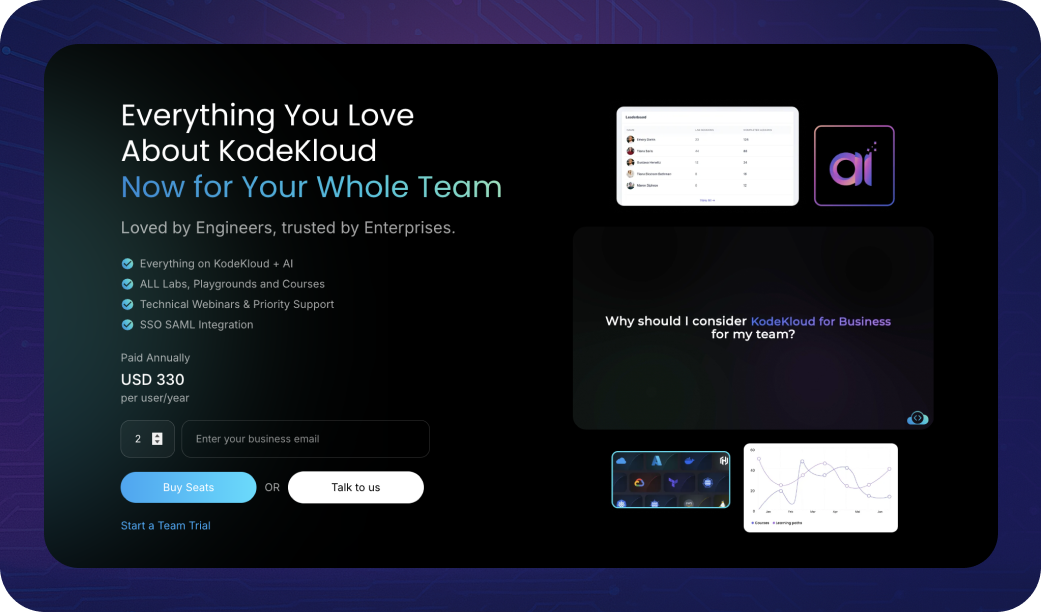

4. Enterprise-Ready Platform (KodeKloud for Enterprise):

For organizations and CXOs, KodeKloud offers enterprise features that make managing team skilling at scale straightforward. The KodeKloud Business plan provides an enterprise dashboard for tracking team progress and learning metrics, SSO/SAML integration for easy user management, centralized billing, and the ability to assign seats or transfer licenses as team membership changes. There’s also a KodeKloud Team Trial option and dedicated account support, so you can pilot the platform with your team and get guidance on the best learning plans. Additionally, KodeKloud’s content keeps pace with the fast-changing tech landscape, with new courses on technologies like ArgoCD, Backstage, Cilium, and policy-as-code in development. This ensures your talent is learning current practices, from GitOps to agentic AI tools. By partnering with KodeKloud, enterprises gain a one-stop skilling solution covering the full stack of competencies needed for cloud, DevOps, and AI excellence. It’s a platform built by experts (including CNCF ambassadors and Golden Kubestronauts) who deeply understand cloud-native tech and how adults learn best: through hands-on immersion and real-world projects.

5. Proven Results and Certifications:

KodeKloud’s success stories and learner outcomes speak to the impact. As mentioned, teams have achieved Kubernetes certifications in weeks and cut cloud deployment times significantly. The platform prepares learners for top industry certifications (AWS, Azure, Google Cloud, Docker, Kubernetes, Linux Foundation, and more). Notably, KodeKloud has collaborated with the CNCF on initiatives like the Kubestronaut program, underscoring its credibility in the cloud native community. By enrolling your team in these programs and encouraging them to earn certifications (e.g., becoming a Kubestronaut or even a coveted Golden Kubestronaut), you create a baseline of excellence and shared achievement. This not only validates skills through internationally recognized certifications but also boosts morale and retention, because employees see tangible growth in their careers. Given that 71% of organizations value certifications when recruiting, according to the LF report, investing in your existing team’s credentials can also save on hiring costs and fill roles internally. And remember, the same report finds that upskilling from within is 62% faster than hiring externally. The ROI of such training is clear: a Puppet State of DevOps report found that well-trained teams enjoy significantly higher reliability and lower downtime, while other studies have linked DevOps skill maturity to millions in savings from avoided failures. In essence, skilling up is not a cost but an investment that pays back in operational performance.

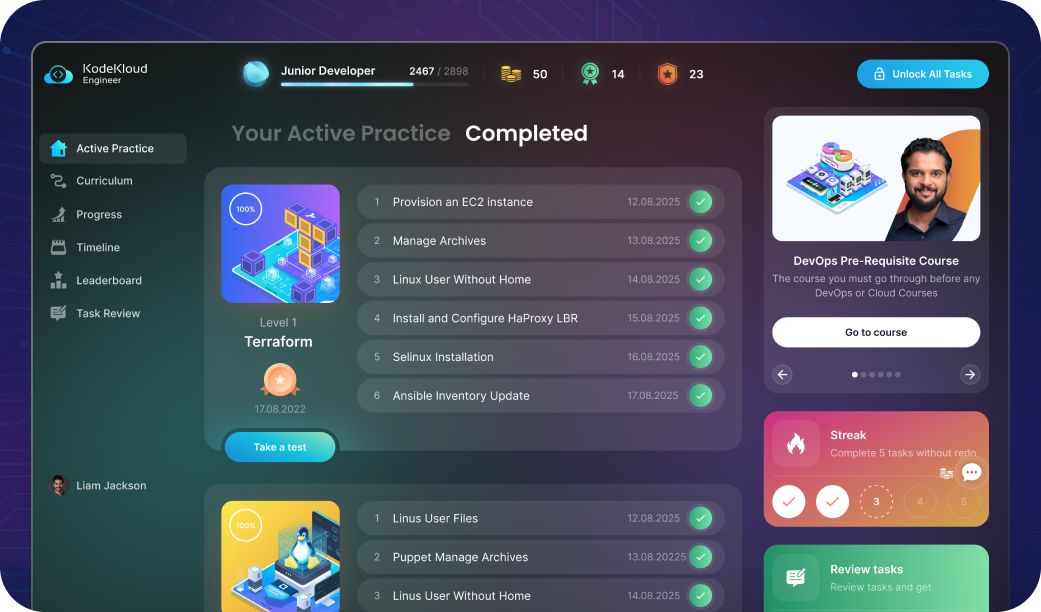

KodeKloud Engineer: Job-Simulation Platform for Real Project Experience

KodeKloud Engineer takes hands-on learning to the next level by simulating real project tasks on real systems. Learners join a virtual organization, receive daily scenario-based tasks mirroring production incidents, and solve challenges across Linux administration, Kubernetes troubleshooting, AWS/Azure/GCP operations, CI/CD pipeline debugging, and Infrastructure as Code.

This platform replicates the daily workflow of AI-native DevOps, SRE, and Platform Engineering roles, providing:

- Real-world scenarios - Not theoretical exercises, but actual tasks engineers face in production environments

- Role-specific leaderboards - Gamification and peer competition to drive engagement

- Timeline tracking - Detailed activity logs and task history to demonstrate progress

- Peer collaboration and task reviews - Learning from others' solutions and sharing knowledge

- Streak-based incentives - Building consistency and daily practice habits

This job-simulation experience directly addresses the interview readiness gap, equipping engineers to confidently answer scenario-based questions and explain real projects.

The Path Forward: From AI Pilots to Production at Scale

The era of AI experimentation is over. 2025 marks the transition from AI pilots to production-ready, enterprise-scale implementations. Across North America, EMEA, APAC and beyond, forward-thinking enterprises are realizing that building AI-native, high-performing teams is the key to thriving in a multi-cloud, DevAIOps world. The rapid advances in AI, the ubiquity of cloud, and the pressure for digital agility mean that yesterday’s approaches won’t suffice. Organizations need teams that are visionary and versatile: adept at cloud-native engineering, fluent in AI-augmented operations, and continually learning. The payoff is enormous: faster time-to-market, higher resilience, improved innovation, and ultimately a stronger competitive position. As research from the World Economic Forum and others suggests, those who invest in people now will be the ones defining the next decade of technology leadership.

Organizations that succeed will be those that invest decisively in building AI-native, cloud-fluent, high-performing technical teams capable of architecting, deploying, and operating intelligent systems across multi-cloud DevOps environments.

KodeKloud provides the comprehensive learning infrastructure enterprises need to execute this transformation: structured AI learning paths, cloud-native certification roadmaps, production-like playgrounds, real-world job simulations, and customizable corporate training, all grounded in a hands-on, learn-by-doing methodology that builds practical competence, not just theoretical knowledge. With its hands-on labs, guided paths, and enterprise features, KodeKloud can help turn your DevOps practitioners into DevAIOps rockstars, your cloud engineers into multi-cloud experts, and your organisation into an AI-savvy innovator.

Ready to build your high-performing, AI-native DevOps teams? We invite you to explore KodeKloud’s offerings and see the impact for yourself. Whether it’s mastering Kubernetes through our role-based paths, experimenting with AI in the KodeKey playground, or onboarding your entire department onto a tailored learning plan, KodeKloud has the tools and experience to make it happen. Empower your team with the skills of tomorrow and position your enterprise to thrive today.

Take the next step: Visit KodeKloud’s website to learn more, request a demo or free team trial, and read our customer success stories.

The future belongs to teams that can seamlessly navigate Kubernetes at scale, orchestrate multi-cloud infrastructure through code, deploy autonomous AI agents safely, and continuously deliver innovation at the speed dictated by business needs. KodeKloud makes that future achievable, starting today.
