Are Universities Asking the Right AI Question?

Most universities today are asking some version of:

- “How do we teach our students about AI?”

It is a reasonable question, but an incomplete one.

The question employers are actually answering on the other side is much closer to:

- “Which graduates can build, deploy, and maintain AI-enabled systems in real environments?”

That is the gap between AI literacy and AI readiness.

The Literacy-to-Readiness Gap

You can think of it this way:

- AI literacy is knowing what AI, machine learning, and large language models are, understanding core concepts and use cases, and being able to talk about them in exams or presentations.

- AI readiness is being able to design and build AI-enabled features in real systems, work with cloud, data, DevOps, and AI tooling as they exist in practice, and collaborate with product and platform teams to keep those systems running.

An AI-ready engineering graduate is someone who can realistically be onboarded into a team and contribute in months, not years.

What an AI-Ready Graduate Can Actually Do

Instead of only answering theory questions, an AI-ready graduate can, for example:

- Build and test AI agents and RAG workflows using frameworks such as LangChain and LangGraph and protocols such as MCP, connecting models via APIs and managing prompts, tools and evaluation.

- Deploy AI-enabled services by containerising applications, deploying to Kubernetes or managed cloud services, and configuring CI/CD pipelines.

- Work with data, cost and reliability by understanding inference cost, latency and throughput, observing behaviour in production-like environments, and iterating based on metrics.

- Collaborate with DevOps and platform teams using Git workflows, respecting security and infra guardrails, and taking part in incident simulations and post-mortems.
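
As a rough illustration of the cost, latency and throughput reasoning in the list above, here is a minimal Python sketch. The token counts, per-million-token prices and concurrency figures are made-up assumptions, not any provider's real pricing, and the function names are hypothetical. The throughput estimate uses Little's law (requests in flight divided by average latency).

```python
def cost_per_request(input_tokens, output_tokens, price_in_per_m, price_out_per_m):
    """Estimate the cost of one model call under per-million-token pricing."""
    return (input_tokens * price_in_per_m + output_tokens * price_out_per_m) / 1_000_000


def sustained_throughput(concurrent_requests, avg_latency_s):
    """Little's law: requests per second = requests in flight / average latency."""
    return concurrent_requests / avg_latency_s


# Hypothetical workload: 1,200 input tokens and 300 output tokens per request,
# priced at $0.50 and $1.50 per million tokens (illustrative values only).
per_request = cost_per_request(1200, 300, price_in_per_m=0.50, price_out_per_m=1.50)
monthly = per_request * 100_000  # assumed 100,000 requests per month

# Hypothetical serving setup: 8 requests in flight, 2 seconds average latency.
rps = sustained_throughput(concurrent_requests=8, avg_latency_s=2.0)

print(f"Cost per request: ${per_request:.5f}")
print(f"Monthly cost at 100k requests: ${monthly:.2f}")
print(f"Sustained throughput: {rps:.1f} requests/s")
```

A student who can reason through numbers like these, and then check them against what a production-like environment actually reports, is working at the readiness level rather than the literacy level.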

When employers say, “Show me what you have built,” this is the level they are hoping to see.

What This Means for Curricula, Labs and Assessment

For universities, this does not mean abandoning theory. It means changing the balance and the level of realism.

A modern AI-aware engineering curriculum typically needs:

- More hands-on density

  - A higher proportion of contact hours spent building, debugging, and deploying in labs or sandboxes.

  - Fewer 'toy' examples and more tasks that resemble production scenarios.

- Realistic lab environments

  - Labs that mimic actual stacks: Linux, containers, Kubernetes, cloud services, CI/CD, monitoring.

  - Safe access to AI models and APIs, with controlled credentials and cost.

- Project- and portfolio-based assessment

  - Marks linked to projects, lab performance, and job-simulation exercises.

  - Students graduating with evidence: GitHub repositories, write-ups, demos, not only exam scores.

- Alignment with OBE (Outcome-Based Education) and accreditation

  - Mapping hands-on AI labs and projects to Course Outcomes (COs) and Program Outcomes (POs).

  - Using lab and project performance as direct assessment evidence for attributes like:

    - Problem analysis

    - Design and development of solutions

    - Modern tool usage

    - Individual and teamwork

In other words: the AI-ready curriculum is still an OBE curriculum. It simply treats labs and projects as first-class citizens rather than add-ons.

The Employability Problem

Around the world, a few signals are becoming clear:

- Employers continue to value degrees from strong universities, but:

  - They increasingly ask: “What can this candidate actually do in month one?”

  - They pay attention to portfolios, GitHub activity, project discussions, and job-simulation performance.

- AI, cloud, and DevOps adoption is accelerating:

  - Global surveys show AI-related roles among the fastest-growing job categories.

  - Many organisations report shortages of AI-related skills, even while they invest heavily in AI tools.

- At the same time, graduates often report:

  - Feeling under-prepared for technical interviews.

  - Limited exposure to real cloud environments and modern workflows.

  - Difficulty explaining a complete system they have designed and deployed.

This is no longer limited to software companies. Banks, healthcare providers, manufacturers, logistics providers and even public-sector agencies are hiring engineers who can make AI work safely inside their existing systems.

AI readiness is now a cross-industry expectation, not just a tech-sector nice-to-have.

The Risk of Moving Too Slowly

The window for universities to adapt is shorter than most academic leaders think.

The global data paints a stark picture:

- The World Economic Forum's Future of Jobs Report 2025 finds that 86% of employers expect AI to transform their business by 2030, and that roughly 40% of core skills will change over the same period.

- Randstad’s 2024 survey finds 75% of companies use AI, but only about 35% of workers received any AI training last year, widening the skills gap.

- Bain’s global analysis shows demand for AI skills is growing at over 20% per year, and the shortage of AI-literate engineers will likely persist beyond 2027.

- NASSCOM-BCG estimate India already has 400,000+ people in AI roles, with talent demand expected to grow around 15% annually to 2027, forcing aggressive GenAI skilling.

- Across APAC, studies show most enterprises plan to ramp up hiring for AI roles, including data scientists, AI configurators, and ML engineers.

- An IBM–e& 2024 study finds 65% of MENA CEOs are accelerating AI adoption faster than their organisations can absorb, revealing a clear talent and capability gap.

- Gartner’s 2024 survey of US and UK software leaders reports AI/ML engineers are the most in-demand role, and applying AI/ML to real applications is now the biggest skills gap.

The 2026 Playbook: A Practical Roadmap for Universities

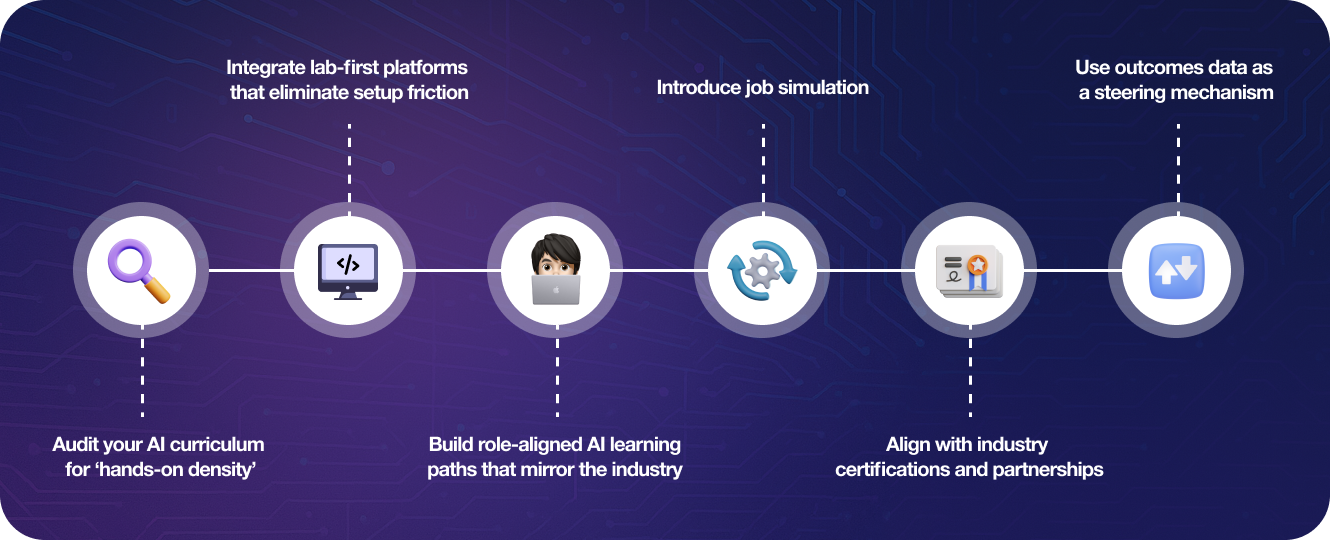

What can universities realistically do over the next academic cycle to move from AI literacy to AI readiness?

Here is a six-month roadmap you can adapt.

Month 1: Audit Your Reality

- Estimate the split between lecture ("listen") time and hands-on ("build") time in AI and cloud courses.

- Ask: “Are we close to 60-70% hands-on time in these subjects?”
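
One way to run this audit is a few lines of Python over the timetable. The sketch below is only an illustration: the course names and hour counts are invented, and the `Course` class and helper functions are hypothetical names, not part of any existing tool.

```python
from dataclasses import dataclass


@dataclass
class Course:
    name: str             # hypothetical course title
    lecture_hours: float  # "listen" time per term
    lab_hours: float      # "build" time per term


def hands_on_share(course: Course) -> float:
    """Return the fraction of contact hours spent building (0.0 to 1.0)."""
    total = course.lecture_hours + course.lab_hours
    return course.lab_hours / total if total else 0.0


def below_target(courses: list[Course], target: float = 0.60) -> list[str]:
    """List the courses whose hands-on share falls below the target."""
    return [c.name for c in courses if hands_on_share(c) < target]


# Illustrative numbers only, not real data.
courses = [
    Course("Intro to Machine Learning", lecture_hours=30, lab_hours=15),
    Course("Cloud and DevOps Lab", lecture_hours=12, lab_hours=28),
]

for c in courses:
    print(f"{c.name}: {hands_on_share(c):.0%} hands-on")
print("Below 60% target:", below_target(courses))
```

The 60% default mirrors the lower end of the 60-70% range in the question above; a department could adjust it per subject.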

Month 2: Fix the Infrastructure

- Partner with lab-first, browser-based platforms so students can practise without complex setup or billing risk.

- Ensure students get both structured labs and open playground time across AI, cloud, containers, and DevOps.

- Shift from 'install this tool' tasks to 'deploy this model / connect this service' style work.

Month 3: Align with Roles and Outcomes

- Pick a few anchor roles, for example: Cloud + AI Engineer, MLOps Engineer, AI Platform Engineer, Data Engineer with AI.

- Map key courses to clear Course Outcomes (COs) tied to those roles.

- For each important CO, define one lab or project as direct assessment evidence.
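
A simple way to keep this mapping honest is to store it as data and check it for gaps. The following is a minimal sketch under stated assumptions: the outcome wording and lab titles are invented examples, and `missing_evidence` is a hypothetical helper.

```python
from typing import Dict, List, Optional

# Hypothetical Course Outcomes (COs) mapped to their direct assessment evidence.
# None marks a CO that still has no lab or project attached.
co_evidence: Dict[str, Optional[str]] = {
    "CO1: Containerise and deploy a model-serving API": "Lab 4: deploy to Kubernetes",
    "CO2: Build a CI/CD pipeline for an AI service": "Project 1: pipeline with tests",
    "CO3: Monitor latency and cost of inference": None,
}


def missing_evidence(mapping: Dict[str, Optional[str]]) -> List[str]:
    """Return the COs that have no lab or project as assessment evidence."""
    return [co for co, evidence in mapping.items() if not evidence]


for co in missing_evidence(co_evidence):
    print("No direct evidence yet:", co)
```

Running a check like this before each curriculum review makes it obvious which outcomes are still assessed only by exams.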

Month 4: Use Certifications Smartly

- Combine degrees with a small, realistic certification stack, for example:

  - Foundational: AWS Cloud Practitioner, Azure AI Fundamentals, KCNA.

  - Optional advanced: CKA / CKAD, AWS Solutions Architect Associate.

- Decide where these fit: recommended milestones, electives, capstone or break-time bootcamps, backed by your existing labs.

Month 5: Introduce Job Simulation

- Adopt a job-simulation environment where students work as junior engineers would.

- Let students handle incidents, debug with limited information, and make trade-offs (cost vs performance, speed vs stability).

- Encourage them to turn simulations into short portfolio entries (scenario, actions, outcome, learning).

Month 6: Track What Actually Matters

- Track outcomes by role, not just 'placed / not placed' (Cloud + AI, MLOps, Data, AI Platform, etc.).

- Where possible, watch early-career signals: starting salary bands, time to first promotion.

- Collect simple employer feedback and feed it into curriculum review and CO/PO discussions.
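
Tracking by role does not need special tooling to start. As a minimal sketch, assuming placement records are available as simple (student, role) pairs, the count per role could look like this. The records and the `outcomes_by_role` helper are hypothetical.

```python
from collections import Counter

# Hypothetical placement records: (student id, role at first job, or None if unplaced).
placements = [
    ("s01", "Cloud + AI"),
    ("s02", "MLOps"),
    ("s03", None),
    ("s04", "Cloud + AI"),
    ("s05", "Data"),
]


def outcomes_by_role(records):
    """Count graduates per role, grouping unplaced graduates under 'Not placed'."""
    return Counter(role or "Not placed" for _, role in records)


summary = outcomes_by_role(placements)
for role, count in summary.most_common():
    print(f"{role}: {count}")
```

The same structure extends naturally to salary bands or time to first promotion once those signals are collected.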

Faculty: The Make-or-Break Factor

None of this works without empowered faculty. Real change depends on faculty confidence.

Universities can increase impact by:

- Providing faculty sandboxes for experimentation with AI-native tools and lab environments.

- Running train-the-trainer programs so instructors can adapt labs, not just adopt them.

- Supporting faculty in designing project-based assessments that align AI practice with program outcomes.

When faculty are equipped and supported, AI readiness becomes a property of the institution, not of individual enthusiasts.

How Lab-First Platforms Enable This Shift

Universities do not need to build all of this infrastructure themselves. Lab-first platforms designed for academia can accelerate the move from AI literacy to AI readiness.

Platforms like KodeKloud offer role-based learning paths (DevOps Engineer, SRE, Cloud Engineer, AI-native DevOps, and more) that combine:

- Short conceptual lessons

- Hands-on labs after almost every step

- Cloud and AI playgrounds where students practise in production-grade environments

For AI-native skills, students can work through hundreds of courses and labs and multiple AI playgrounds to build RAG and agent workflows with tools such as LangChain, LangGraph, MCP, and Gemini CLI. Everything runs in the browser with zero setup: no API keys, no credit cards, no billing risk.

Job-simulation environments such as KodeKloud Engineer let students solve realistic cloud and AI-native DevOps challenges under time pressure and turn that work into portfolio projects.

This lab-first model is already being used by universities such as the University of Copenhagen, Université de Bordeaux, Université de Montpellier, and Maastricht University, where it integrates with existing programs.

The result: Students graduate with portfolios as evidence of capability rather than only course marks, while faculty gain continuous data on lab completion, project performance, and simulation outcomes to support curriculum review and accreditation work.

Your Move

The gap between AI literacy and AI readiness will shape which universities graduate confident, employable engineers in 2026 and beyond.

By then, most employers may not be asking whether a candidate can explain how a transformer works. They may care whether that candidate can build, deploy, and maintain AI systems in production.

The playbook is clear and simple:

- Audit hands-on density (aim high, not theory-heavy).

- Integrate lab-first platforms (zero setup, instant practice).

- Align learning to real engineering roles.

- Simulate real work, not toy problems.

- Review and refine regularly, not every five years.

The universities that act now will graduate students who are confident, capable, and competitive. Those that wait may find their students at a disadvantage compared to learners who trained in more practical environments.

If you are a university leader, faculty member, or head of department looking to modernize your AI, cloud, and DevOps programs, explore how lab-first platforms like KodeKloud can help your institution make students real-world ready, not just hello-world ready. Visit KodeKloud for Education to learn more and schedule a conversation with the team.

The 2026 job market will move quickly. Your students deserve to be ready for it.

Explore KodeKloud resources

- Browse domain-based and role-based learning paths:
  KodeKloud Learning Paths

- Let students experience real job simulations:
  KodeKloud Engineer: Job Simulation Platform

- Partner with us for your university’s AI, Cloud & DevOps programs:
  KodeKloud for Education
