In the past, standalone applications were the most common way to deliver software. These applications were installed and run on the user's local computer without requiring external resources.

However, over the years, there has been a significant increase in the use of web technologies, leading to the delivery of software primarily over the Internet. As a result, web applications and SaaS (Software as a Service) solutions have become popular, especially for managing complex business processes in large enterprises.

When developing web applications or SaaS solutions, several critical factors must be considered to ensure success. One crucial factor is designing the application with flexibility in mind, as application requirements may change over time, and new features may be added. Scalability is also crucial, as the number of users may increase over time.

Furthermore, it's essential to ensure that the application can be deployed across different environments. With the popularity of cloud platforms, it's vital for web applications to be deployable on popular cloud providers such as AWS, GCP, and Azure.

In today's fast-paced development environment, the ability to bring an application to market quickly is more important than ever. Automation can play a significant role in this, and compatibility with CI/CD processes can facilitate efficient deployment.

Are you looking for a methodology to develop scalable and maintainable enterprise-level web applications or SaaS solutions? Look no further than the Twelve-Factor App. This widely accepted methodology provides a set of best practices for designing and building robust, portable applications.

After reading this blog, we recommend checking out our KodeKloud crash course on the twelve-factor app methodology. It's a great way to deepen your knowledge and skills in developing robust, scalable, and maintainable applications that follow the best practices of cloud-native computing. Enroll now to gain practical insights, hands-on experience, and expert guidance from seasoned industry professionals.

Twelve-Factor App Methodology Explained

The Twelve-Factor App Methodology is specifically designed for developing software-as-a-service (SaaS) applications that scale with ease, remain portable, and run reliably. The methodology outlines twelve principles to keep in mind when building a SaaS application:

- Codebase

- Dependencies

- Config

- Backing services

- Build, Release, and Run

- Processes

- Port Binding

- Concurrency

- Disposability

- Dev-Prod Parity

- Logs

- Admin Processes

By adhering to these principles, you can create a SaaS application that is easy to maintain, scale, and release consistently. To illustrate the methodology, we will develop a simple Python web application using Flask. This will help you understand how to incorporate each principle in practice.

Overall, the Twelve-Factor App Methodology is an invaluable resource for any developer who wants to develop scalable and maintainable SaaS solutions or web applications.

To demonstrate a basic example, let's use Flask to display a simple message in your browser. Before we begin, we need to install Flask on our local machine. To do this, please enter the following pip command:

    pip install Flask

Once the installation is successful, the next step is to create an "app.py" Python file in the "simple-flask-app" directory. This file handles incoming requests and serves as the entry point for our simple Flask app.

    from flask import Flask

    app = Flask(__name__)

    @app.route('/')
    def welcomeToKodeKloud():
        return "Welcome to KODEKLOUD!"

    if __name__ == "__main__":
        app.run(host="0.0.0.0", debug=True)

With these steps complete, running our Flask application is straightforward. It's time to execute the application and see it in action.

    python app.py

By opening http://localhost:5000 (Flask's default port) in your browser, you can view the message "Welcome to KODEKLOUD!".


CODEBASE PRINCIPLE

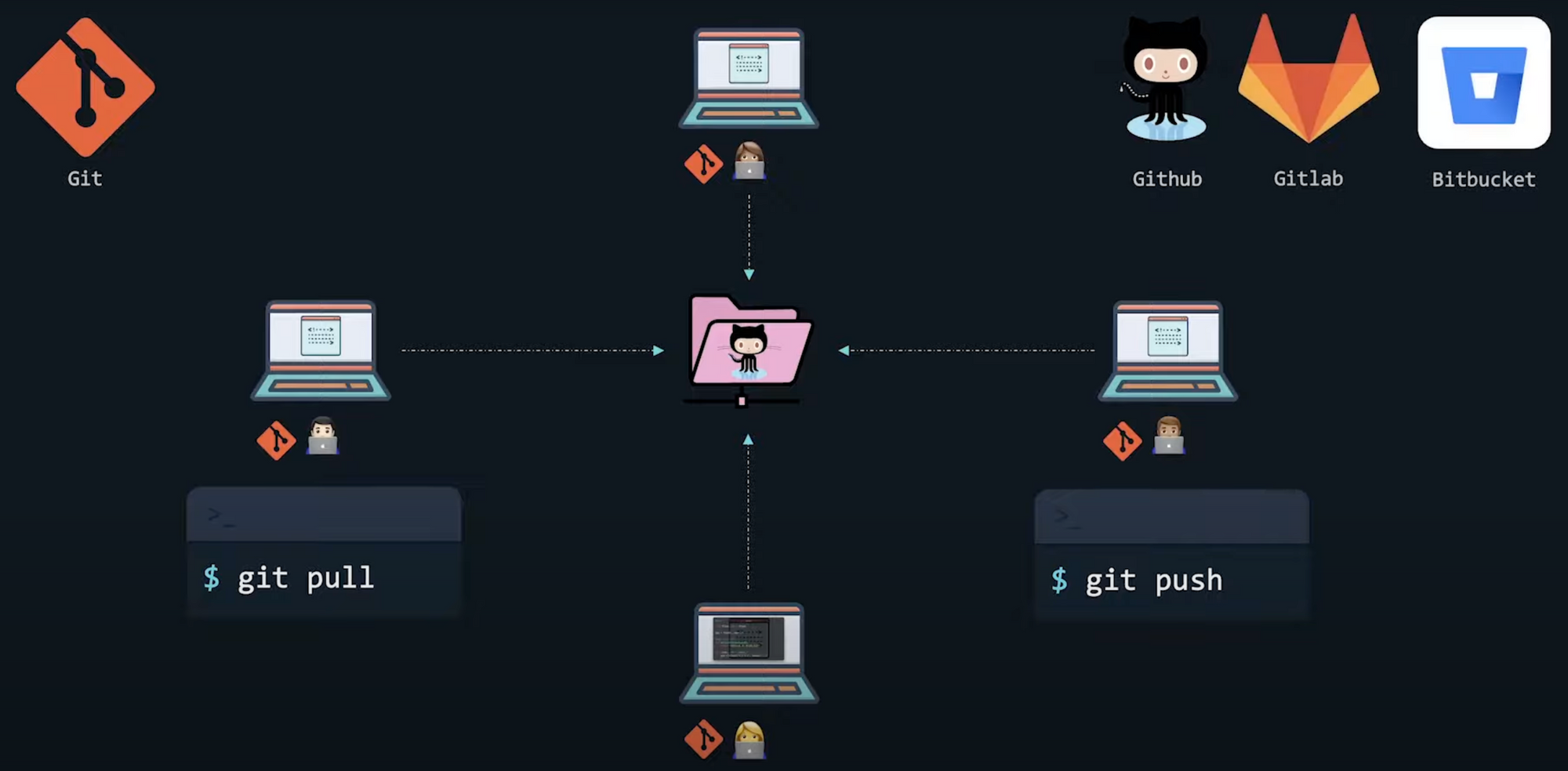

As our Flask web application grows in complexity with added features, collaboration among multiple developers becomes essential. To facilitate this, we will use a revision control system such as Git to share and manage our code. This approach will enable each developer to share their code changes and collaborate effectively. We have chosen GitHub as our code repository to provide a centralized location for all code-related activity.

To ensure consistency and streamline our workflow, we will use a single repository for our Flask application. This approach is cost-effective and eliminates any potential issues that may arise from maintaining multiple codebases. It also provides us with a central, definitive source of truth for our application, which is crucial for effective development and operations.

To simplify the maintenance of our application and make the development process more efficient, we will automate the build and deployment phases. We can easily automate these processes by using a single repository to ensure consistency. Having multiple repositories for each application can lead to complexity and make automation difficult. Therefore, we will work on our Flask app locally, push commits to a single remote repository, and use CI/CD to automatically build, test, and deploy the app from that single source code repository.

Because each application has its own repository, each can have its own versions of dependencies, configuration, and settings. This approach ensures that changes to one application do not inadvertently affect other applications.

The first principle of the twelve-factor app recommends using a single codebase per app, tracked in version control, and multiple deploys from this single codebase. This principle emphasizes the separation of concerns between different SaaS applications by using separate codebases for each app.

With this principle in mind, we will now create a GitHub repository and push the code for our Flask application, as previously mentioned. We will be using Git as our version control system.

To begin, we will create a new repository on GitHub to store our Python Flask web application codebase. Once the repository has been created, we can proceed by pushing our code to it.

The repository we have created is solely dedicated to this specific web application and will be used to manage different branches in the future. We can create multiple deployments using various branches, all of which will be done within the same repository. Furthermore, this same repository will be used to deploy different versions of our application to dev, prod, and staging environments.

DEPENDENCIES PRINCIPLE

As you may have noticed, we installed the 'Flask' framework, a Python web application framework, before writing our code. Our Python code requires the Flask module, which is included in the Flask Python package.

Therefore, it is essential to install the Flask Python framework in our local development environment before beginning development. This is just one of the dependencies for our Flask web application, but as the application grows, we may end up with many additional third-party dependencies.

Most applications, like ours, have multiple dependencies that must be installed before running the application. In Python, these dependencies are typically listed in a file named "requirements.txt" in a specific format, as shown in the example provided.

    flask==2.0.0

It is worth noting that specifying the version number after the package name is crucial. Failure to do so can result in inconsistencies when running the program in different environments because developers may install different versions. For instance, a developer might have installed one version of Flask during development, but upon deployment, the operations team may install a newer version, causing the app to malfunction.
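As a rough illustration of the pinning rule above, the following sketch flags requirement lines that do not specify an exact version. The function name `unpinned_requirements` is hypothetical, and the pattern only covers simple `name==version` lines (environment markers and URLs would be reported as unpinned); it is not a replacement for pip's own parser.

```python
import re

# Matches "name==version", optionally with extras such as "name[extra]==1.2".
PINNED = re.compile(r"^[A-Za-z0-9._-]+(\[[A-Za-z0-9,._-]+\])?==[A-Za-z0-9.*+!_-]+$")


def unpinned_requirements(text):
    """Return the requirement lines that do not pin an exact version."""
    unpinned = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options
            continue
        if not PINNED.match(line):
            unpinned.append(line)
    return unpinned


if __name__ == "__main__":
    sample = "flask==2.0.0\nredis\n"
    print(unpinned_requirements(sample))  # ['redis']
```

A check like this could run in CI so that an unpinned dependency is caught before it reaches a build.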

During the build process, the pip install command will install all the dependencies specified in the requirements.txt file, usually located in the project's root directory.

Creating an isolated environment for our Flask application that includes all necessary dependencies is considered best practice. This approach ensures that our application is shielded from external dependencies and that the same explicit dependency package is consistently applied across all environments - development, staging, and production.

We have two options available to achieve this: Python Virtualenv and Docker Containers. Both offer reliable and efficient ways of managing dependencies and creating isolated environments for our application.

To guarantee robust and consistent dependency isolation, we will use Docker to containerize our Flask application. This process, commonly known as "dockerizing," enables us to run our application in a self-contained environment that is isolated from the host system. This approach is more efficient and reliable in managing dependencies. Therefore, we will proceed to create a Docker container for our Flask application.

    FROM python:3.10-alpine
    WORKDIR /kodekloud-twelve-factor-app
    COPY requirements.txt /kodekloud-twelve-factor-app
    RUN pip install -r requirements.txt --no-cache-dir
    COPY . /kodekloud-twelve-factor-app
    CMD python app.py

The following illustration provides an overview of our app's current design.

As mentioned earlier, the second principle of the twelve-factor app is to "Explicitly declare and isolate dependencies." We have adhered to this principle by listing our dependencies declaratively in the requirements.txt file and isolating them in a Docker container. Additionally, we have used the Dockerfile to declare all the dependencies required by our Flask app, further following this principle.

BACKING SERVICES PRINCIPLE

As previously demonstrated, our Flask application operates within a Docker container. The basic program displays the message "Welcome to KODEKLOUD!" on the user's browser. However, we have a new requirement to enhance our Flask application by incorporating a visitor count feature. We plan to increment the count each time a user visits or refreshes the webpage, providing a more interactive experience.

To achieve this, we intend to use the Redis key-value database to persist the visitor count. We will connect a Redis database as a service to our Flask application. Since our web app is already running inside a Docker container, we will also run the Redis database within another container as a service.

This approach lets us treat the database as a resource attached to our main web application. Consequently, our database service becomes loosely coupled and portable. The following diagram illustrates this concept. We can attach as many backing services to our Flask application as we need using this method, which makes the app easier to extend and scale.

Docker Compose is a powerful tool that allows the definition and execution of multi-container applications and services. It enables us to manage dependencies and create isolated environments for our Flask application, ensuring consistency across all development, staging, and production environments.

    services:
      redis:
        image: redislabs/redismod
        container_name: redis-db
        ports:
          - "6379:6379"
      flask:
        build: .
        container_name: flask-twelve-factor-web-app
        ports:
          - "5001:5000"
        volumes:
          - .:/kodekloud-twelve-factor-app
        depends_on:
          - redis

Note that depends_on refers to the service name (redis), not the container name (redis-db). Using the "docker-compose up" command, Docker Compose starts all of the containers together.

    docker-compose up

With the necessary infrastructure set up, we can now interact with our Redis database and store the visitor count. To achieve this, we need to add a few more lines to our app.py file. These lines will handle the storage, retrieval, and display of the visitor count.

    from flask import Flask
    from redis import Redis

    app = Flask(__name__)

    # Redis listens on port 6379 in the redis-db container (see docker-compose.yml).
    redisDb = Redis(host='redis-db', port=6379)

    @app.route('/')
    def welcomeToKodeKloud():
        # Increment the counter, then read it back (Redis returns bytes).
        redisDb.incr('visitorCount')
        visitCount = str(redisDb.get('visitorCount'), 'utf-8')
        return "Welcome to KODEKLOUD! Visitor Count: " + visitCount

    if __name__ == "__main__":
        app.run(host="0.0.0.0", debug=True)

Because the code now imports the redis package, that package also needs to be listed in requirements.txt. The twelve-factor app recommends keeping backing services, such as our Redis database in this case, as attached resources. This practice enhances flexibility since it allows us to replace any service at any time without affecting the main application.

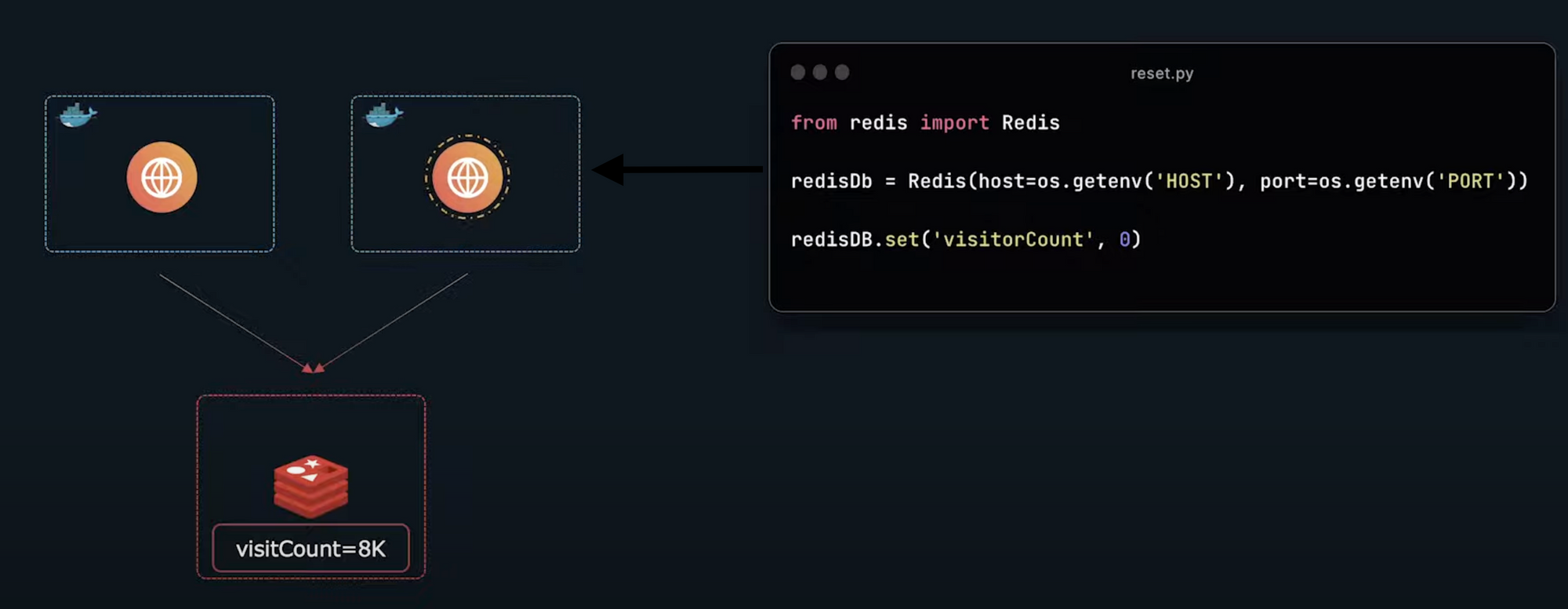

CONFIGS PRINCIPLE

As you may have noticed, our Python code currently contains hard-coded Redis host and port values. This can cause issues when deploying the application to different environments, such as production, staging, and development, as each environment may use a different Redis instance, requiring changes to the host and port values. This practice is not considered ideal, as it can lead to inconsistencies and errors when deploying to different environments.

To address this concern, we will separate the environment configuration from the main application code, so that the code tracked in version control contains no environment-specific values. This approach allows us to deploy our code to different environments without modifying the main application code. Furthermore, it simplifies the management and maintenance of different environment configurations.

To get started, create a new file named ".env" in the root directory.

    HOST=redis-db
    PORT=6379

In order to make use of environment variables in our Flask application, it's necessary to add an "env_file: .env" entry in the docker-compose.yml file. By doing so, we ensure that the environment variables declared in the ".env" file are available to the containers defined in the docker-compose.yml file.

Why is this important? By using environment variables, we can keep our sensitive information separate from our main codebase. This allows us to deploy our code to different environments without the need for modification. Furthermore, it makes it easier to manage and maintain the configuration of different environments.

So, when creating a docker-compose.yml file for your Flask application, don't forget to add the "env_file" entry with the path to your ".env" file. This simple step can save you a lot of time and headaches in the future!

    services:
      redis:
        image: redislabs/redismod
        container_name: redis-db
        ports:
          - '6379:6379'
      flask:
        build: .
        container_name: flask-twelve-factor-web-app
        ports:
          - '5001:5000'
        volumes:
          - .:/kodekloud-twelve-factor-app
        depends_on:
          - redis
        env_file: .env
        environment:
          HOST: ${HOST}
          PORT: ${PORT}

To make use of the host and port information from environment variables, we need to modify the code in app.py accordingly.

    from flask import Flask
    from redis import Redis
    import os

    app = Flask(__name__)

    # Host and port now come from the environment instead of being hard-coded.
    redisDb = Redis(host=os.getenv("HOST"), port=int(os.getenv("PORT")))

    @app.route('/')
    def welcomeToKodeKloud():
        redisDb.incr('visitorCount')
        visitCount = str(redisDb.get('visitorCount'), 'utf-8')
        return "Wecome to KODEKLOUD! Visitor Count: " + visitCount

    if __name__ == "__main__":
        app.run(host="0.0.0.0", debug=True)

We then start the containers again:

    docker-compose up

This approach enables us to separate the configuration settings of our Python Flask application from its logic. As a result, we can use different configurations for various deployments, including testing, staging, or production. Moreover, the project can be open-sourced at any time without any code-level changes and without exposing sensitive configuration information to the public.

With the configuration file in place, our Flask web application architecture takes the following form.

The twelve-factor app methodology emphasizes the importance of "storing configuration in the environment." In line with this principle, we have utilized environment variables and .env files to separate our configuration from the codebase. This approach not only simplifies configuration management but also enables us to maintain it more efficiently.

By keeping our configuration separate, we can easily modify it based on different deployment environments, including testing, staging, and production. Additionally, it ensures that our sensitive configuration information is not exposed to the public in the event that we decide to open-source the project.
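One way to make environment-based configuration more robust is to validate it once at startup, so that a missing variable fails immediately instead of on the first request. The sketch below assumes the same HOST and PORT variable names used in the ".env" file; the `load_redis_config` helper and the `ConfigError` class are hypothetical names introduced for illustration.

```python
import os


class ConfigError(RuntimeError):
    """Raised when a required environment variable is missing or invalid."""


def load_redis_config(env=None):
    """Read the Redis host and port from environment variables."""
    env = os.environ if env is None else env
    host = env.get("HOST")
    port = env.get("PORT")
    if not host:
        raise ConfigError("HOST is not set")
    if not port or not port.isdigit():
        raise ConfigError("PORT must be set to a number")
    return {"host": host, "port": int(port)}
```

The returned dictionary could then be passed to the client as `Redis(**load_redis_config())`, which keeps every environment-specific value out of app.py.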

BUILD, RELEASE, RUN PRINCIPLE

So far, we have developed and deployed our Flask application successfully, and it is now accessible to the public, including a new visitor count feature. However, during the previous step, we accidentally introduced a typo in the welcome message and pushed it to our GitHub repository. Because every visitor sees this message, we need to correct the mistake immediately.

Ensuring a seamless user experience is crucial for any application, and addressing bugs and issues promptly is essential in achieving this goal. However, making changes to code in production can be risky and lead to unwanted downtime. So, what can we do to quickly and efficiently address issues without affecting our users?

One solution is to have a plan in place to revert to a previous version of our application until a fix for the identified bug can be implemented. This is where the twelve-factor app methodology comes into play. By separating the build and run phases, we can effectively manage our build artifacts and deployments.

During the build phase, we create a distinct build artifact and store it in a designated location. This allows us to easily roll back to previous releases or redeploy a specific release as needed. This level of control over our software improves our overall ability to manage and maintain it, giving us the flexibility to address issues efficiently and effectively.

In summary, separating our build and run phases is a best practice that can help us quickly and efficiently address critical bugs while minimizing user disruption.

In our example, we have adopted the practice of creating a Docker image for every update made to our Python code. These images, commonly known as build artifacts, are designed to be used across various environments such as development, staging, or production. By leveraging these images, we can effortlessly launch our web application, a process that is commonly referred to as the "run phase."

The clear separation of the build and run stages is a fundamental principle of the twelve-factor app methodology. By separating these stages, an application's development and deployment processes become more distinct and manageable. The aim is to increase the scalability, maintainability, and portability of the application. The example presented above illustrates this principle in action and can help clarify its importance in practice.
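The relationship between build artifacts, releases, and rollbacks can be modeled in a few lines. This is only a conceptual sketch: the `Release` and `ReleaseHistory` classes and the image tags are hypothetical, and a real pipeline would store images in a registry rather than in a Python list.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Release:
    """An immutable pairing of a build artifact with its configuration."""
    version: int
    image_tag: str   # hypothetical tag of the Docker image produced by the build
    config: tuple    # (name, value) pairs captured at release time


@dataclass
class ReleaseHistory:
    releases: list = field(default_factory=list)
    current: int = -1  # index of the release that is currently running

    def release(self, image_tag, config):
        """Combine a build with its config and make it the running release."""
        rel = Release(len(self.releases) + 1, image_tag, tuple(sorted(config.items())))
        self.releases.append(rel)
        self.current = len(self.releases) - 1
        return rel

    def rollback(self):
        """Switch back to the previous release without rebuilding anything."""
        if self.current <= 0:
            raise RuntimeError("no earlier release to roll back to")
        self.current -= 1
        return self.releases[self.current]
```

Because each release is frozen, rolling back means running an artifact that already exists, which is why the typo in the welcome message can be reverted quickly while a fix goes through the build stage.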

Processes and Concurrency Principles

Our Flask web application has experienced exponential traffic growth recently, with the user base increasing from 10 to 1000 within a month. To cater to this surge in demand and ensure a seamless user experience, we have devised a scaling strategy that leverages additional Docker containers. This approach enables us to handle the increased workload effectively and ensure optimal performance, even as the demand for our application continues to grow.

Although scaling our Flask application by providing more CPU and memory is an option, it can be expensive, especially if our user base continues to increase rapidly. This method of scaling is known as vertical scaling. To tackle this challenge more efficiently, we can add another instance of our web application and use a load balancer to distribute traffic between the two instances. This not only addresses the immediate increase in traffic but also provides a scalable solution for future growth. This approach is known as horizontal scaling.

Figure: horizontal scaling versus vertical scaling.

Vertical scaling is a popular technique, but it can be expensive and is limited by how much CPU and memory a single machine can hold. Therefore, we've opted to implement horizontal scaling for our application. This involves adding another instance of our web application, which is straightforward since our application is already containerized with Docker. Creating a new container from the Flask app's Docker image (build artifact) is all that is required.

This method aligns with the Concurrency principle, as described in the twelve-factor app methodology. Additionally, this approach allows for easy downscaling when the load decreases, resulting in cost savings. We will use load balancers to distribute the load effectively among the app instances.

To ensure future growth of our application, we have a plan to scale horizontally by adding more instances. However, if we had used a global static variable within the application code to manage the visitor count, horizontal scaling would not have been possible.

This is because the load balancer could route a request to either of the two containers, and each instance would have its own static variable that stores the visitor count, resulting in inaccurate visitor count data. This is a drawback of stateful processes: they keep state within the application, which makes horizontal scaling difficult.

In contrast, our Flask web application manages visitor count in a backend Redis database. This database can be accessed by any number of application instances at any time and shares the same data value among them, making it more scalable.

This design aligns with the stateless processes principle of the twelve-factor app methodology, which states that an app should be executed as one or more stateless processes in the execution environment. Because the visitor count lives in Redis rather than inside the application process, we can restart application instances at any time without losing it.
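The difference between the two designs can be simulated without Docker or Redis. In this sketch a plain dictionary stands in for the Redis database and `itertools.cycle` stands in for a round-robin load balancer; the class and function names are hypothetical.

```python
from itertools import cycle


class StatefulInstance:
    """Keeps the visitor count in process memory (the problematic design)."""
    def __init__(self):
        self.count = 0

    def visit(self):
        self.count += 1
        return self.count


class StatelessInstance:
    """Keeps no state of its own; the count lives in a shared backing store."""
    def __init__(self, store):
        self.store = store  # stand-in for the Redis client

    def visit(self):
        self.store["visitorCount"] = self.store.get("visitorCount", 0) + 1
        return self.store["visitorCount"]


def serve(instances, requests):
    """Round-robin the requests across instances, as a simple load balancer would."""
    balancer = cycle(instances)
    return [next(balancer).visit() for _ in range(requests)]


if __name__ == "__main__":
    print(serve([StatefulInstance(), StatefulInstance()], 4))  # [1, 1, 2, 2]
    shared = {}
    print(serve([StatelessInstance(shared), StatelessInstance(shared)], 4))  # [1, 2, 3, 4]
```

With in-memory counters, each instance reports only its own share of the traffic, while the shared store yields a single consistent count. Unlike this dictionary, Redis performs the increment atomically with INCR, which matters when instances handle requests concurrently.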

By using this approach, we can effectively scale our application horizontally without sacrificing accuracy or data integrity. We look forward to seeing the benefits of this implementation in practice.

Port Binding Principle

In earlier steps, you may have observed that accessing our Flask web application was straightforward: you entered the URL and port number in a web browser on your local machine.

    localhost:5000

We have expanded our Flask application by creating an additional instance that can be accessed through localhost:5001. Similarly, our Redis database is up and running, and you can connect to it via localhost:6379. This demonstrates our ability to run multiple instances of the application and services on the same host machine.

Unlike traditional web applications, our app does not rely on a specific web server to function. Instead, it exports HTTP as a service by binding to a specific port and listening for incoming requests on that port. To illustrate this, we can create another instance of our Flask web application, bind it to port 5051, and publish the container port to port 5052 on the host machine. This approach highlights how our app exports HTTP as a service.

As our application grows and evolves, we may need to add more resources such as mail servers and databases to support its operation. The port binding principle enables us to register these types of backing services to a specific port and then use the same URL and port to access the services from anywhere within our application services. This simplifies the process of attaching new resources and allows for easy access in the future.

By implementing this approach, we are following one of the key principles of the twelve-factor app methodology, which is to "Export services via port binding." This principle dictates that an application should expose its services through a well-defined port, making it easy to discover and access the service.

Our approach of creating multiple instances of the application and services, each accessible via specific ports, aligns with this principle. It is worth noting that our Redis database is currently running on port 6379, and we have the flexibility to set up another instance of a Redis database on a different port, such as 6380, in the future to meet any specific requirements.

The principle of port binding advocates for the use of a consistent port number as the most effective way to enable network access to a process.
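To show what "exporting HTTP by binding to a port" means independently of Flask, here is a minimal sketch that uses only Python's standard library. The APP_PORT variable name is an assumption made for this example (it is not part of the Flask app above), and the handler simply returns the welcome message.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class WelcomeHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = b"Welcome to KODEKLOUD!"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the sketch quiet; a real app would log to stdout


def make_server(port=None):
    """Bind to the given port, or to APP_PORT from the environment (default 5000)."""
    if port is None:
        port = int(os.environ.get("APP_PORT", "5000"))
    return HTTPServer(("0.0.0.0", port), WelcomeHandler)
```

Calling `make_server().serve_forever()` starts the service. No external web server is injected into the process: the app itself listens on the port, and the execution environment (here, Docker's port publishing) decides which host port maps to it.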

Disposability Principle

We have updated the welcome message of our Flask web application and now need to release the changes quickly. To do so, we must gracefully shut down the current application while preserving any stored data, such as the visitor count. Thankfully, since we are using Redis as our backing database service, our data is safe.

When a container is stopped (for example, with docker stop), Docker sends a SIGTERM signal to the main process running in the container, and follows up with SIGKILL if the process has not exited within a grace period. The purpose of the SIGTERM signal is to request the process to terminate gracefully, allowing it to perform any necessary cleanup tasks and release any resources it has been using.

For example, when terminating a Flask application running in a container, the application's main process will receive the SIGTERM signal and can handle it by shutting down any active connections, releasing any resources it has been using, and then exiting the container gracefully. Handling the SIGTERM signal is critical to avoid unexpected data loss or resource leaks that can occur if the process is forcibly terminated with a SIGKILL signal.
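A generic way to handle SIGTERM in a Python process is to record the request in a handler and let the main loop finish its current unit of work before exiting. This is a sketch of that pattern using the standard signal module, not a description of what Flask's development server does internally; the function names are hypothetical, and it assumes a Unix-like environment such as a Linux container.

```python
import signal
import threading

shutdown_requested = threading.Event()


def handle_sigterm(signum, frame):
    """Record the shutdown request; the main loop decides when to exit."""
    shutdown_requested.set()


def install_handlers():
    # Must be called from the main thread of the process.
    signal.signal(signal.SIGTERM, handle_sigterm)


def run_worker(do_work, poll_seconds=0.1):
    """Call do_work repeatedly until SIGTERM arrives, then return for cleanup."""
    while not shutdown_requested.is_set():
        do_work()
        shutdown_requested.wait(poll_seconds)
```

After `run_worker` returns, the process can close connections and exit on its own, well within the grace period and before a SIGKILL would be sent.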

The following log snippet provides an example of a SIGTERM signal being received during the termination of the Flask web application running in the Docker container:

To update our web application with the latest changes, we need to follow a few steps. First, we need to gracefully shut down the current Docker container running the application.

Next, we will create a new Docker image with the updated code changes. Finally, we will launch a new instance of the application based on the latest image. This approach ensures a smooth and efficient update process for our application.

Excellent; we have successfully terminated the previous process and deployed a new instance of the application with the updated code quickly and efficiently. This aligns with one of the key principles of the twelve-factor app methodology, which emphasizes the importance of rapid startup and graceful shutdown of web applications, enabling the application or service instances to be disposable.

Dev/Prod Parity Principle

As we continue developing our Flask web application, it's crucial to establish separate environments for testing updated code and promptly observing any changes. While the development environment is ideal for testing, it may contain unresolved bugs or disruptive modifications and isn't suitable for end-users.

Therefore, our team of developers should use it exclusively. To ensure a seamless experience for our valued customers, we must maintain a separate, reliable, and error-free environment, known as the production environment.

To ensure proper development and deployment of our Flask application, it's crucial to have both a development and a production environment. The accompanying figure illustrates the importance of these environments, which allow us to test and make necessary adjustments in the development environment before implementing them in the production environment for end users.

It's clear that our development and production environments aren't adequately synchronized, as evidenced by a typographical error in the production environment's welcome message that is absent in the development environment. Additionally, the production environment's welcome message is several weeks old, while the development environment has the latest version.

Such misalignments between the two environments are not good practice, and it's crucial to minimize any discrepancies between them. This includes ensuring that backing services like Redis databases are as similar as possible, following the Twelve-Factor App methodology.

Now, imagine being able to deploy codebase changes within hours. By adopting a process where a typo in the Flask application's welcome message can be fixed, committed to the GitHub repository, and subjected to automated testing with a passing result, we can generate a new Docker image on the fly. This enables seamless integration of updates into the existing Flask app Docker container in the production environment with a simple restart.

By implementing Continuous Integration and Continuous Deployment (CI/CD), a practice that fits well with the Twelve-Factor App methodology, we can keep our development and production environments almost constantly in sync. This greatly reduces the potential for errors and bugs in either environment.

Logs Principle

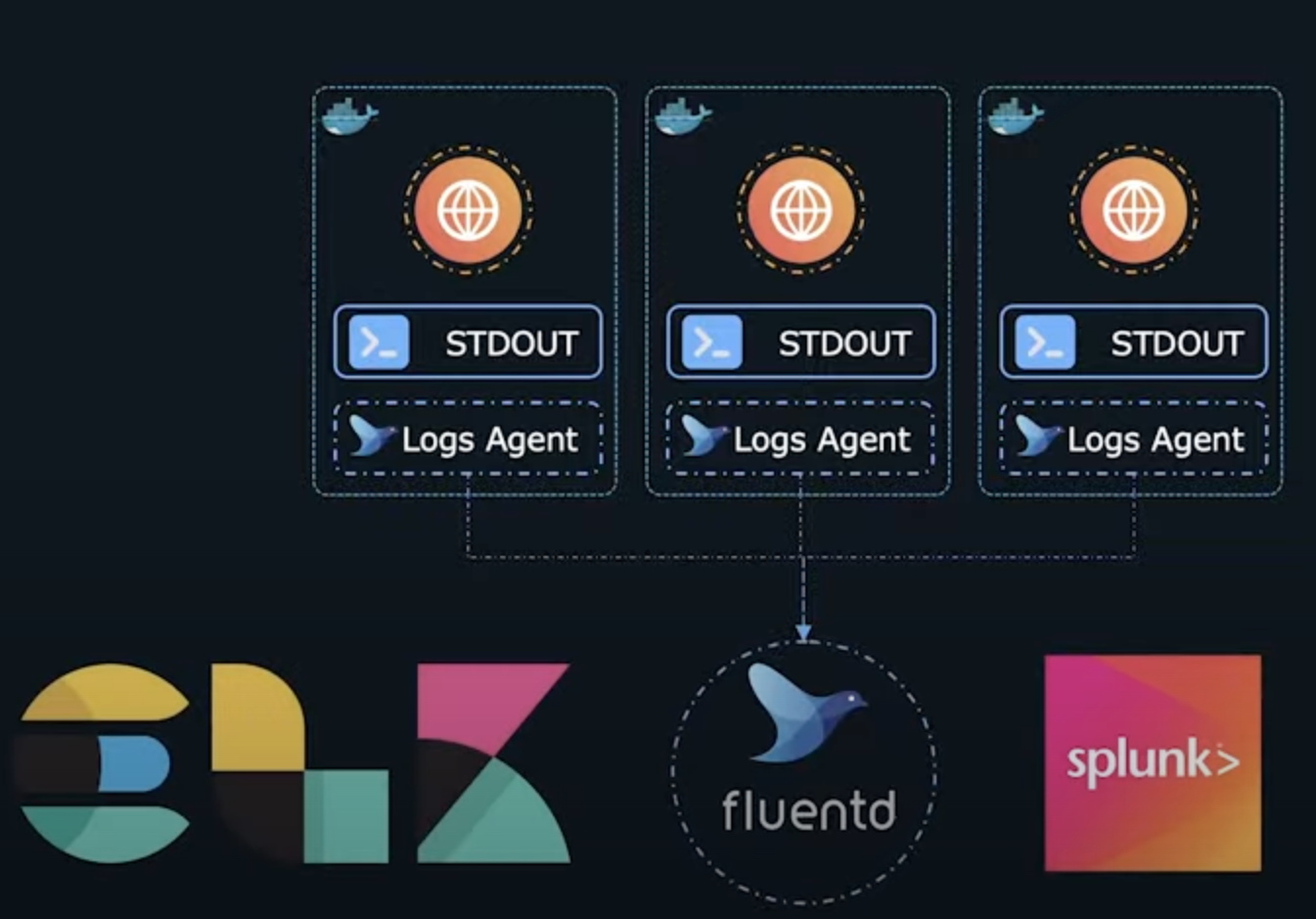

Recently, we've noticed a decrease in our Flask web application's loading speed, and despite our troubleshooting efforts, we haven't identified the cause. One solution to this issue is to implement an application log, which would enable us to track and identify potential problems more easily.

Instead of having the application write and manage log files, we can configure it to log all information to the output stream. This approach enables application administrators to view the stream within a terminal while the execution environment captures and stores the logs for long-term viewing or archiving.

Moreover, the streamed log data can be sent to data warehousing systems such as Hive or Hadoop to identify usage trends and specific past events.

This logging approach aligns with the "Treat logs as event streams" principle described in the Twelve-Factor App methodology.
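In Python, writing logs to the output stream takes only a stream handler from the standard logging module. The sketch below is one possible setup; the logger name "flask-app", the `build_logger` helper, and the log format are assumptions made for illustration.

```python
import logging
import sys


def build_logger(name="flask-app", stream=None):
    """Return a logger that writes one line per event to stdout (or `stream`)."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    if not logger.handlers:  # avoid adding duplicate handlers on repeated calls
        target = sys.stdout if stream is None else stream
        handler = logging.StreamHandler(target)
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
        )
        logger.addHandler(handler)
    return logger
```

The application never opens or rotates a log file. When it runs in a container, the lines written to stdout can be read with docker logs or collected by whatever log router the execution environment provides.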

Admin Process Principle

As our app is used by a vast number of end users, the visitor count has surpassed eight figures, and to ensure accurate tracking, we need to reset the visitor count to 0. This can be achieved by running a one-time database script. This is just one example of several one-off processes that are typical in modern web applications, including data migrations, data cleanups, and schema changes. These processes are usually short-lived and should be easy to execute against the same codebase and configuration as the app.

We can use the docker exec command to execute such one-off scripts or commands against a running container. This approach conforms to the "Run admin/management tasks as one-off processes" principle outlined in the Twelve-Factor App methodology.
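A one-off reset script for the visitor count could look like the following sketch. The helper takes any client object with `get` and `set` methods, which matches the redis-py client used in app.py; the function names and the idea of saving it as a file called reset_count.py are hypothetical.

```python
import os


def reset_visitor_count(client, key="visitorCount"):
    """Set the counter back to zero and return the value it had before."""
    previous = client.get(key)  # redis-py returns bytes, or None if the key is unset
    client.set(key, 0)
    return int(previous) if previous is not None else 0


def main():
    # Imported here so the helper above can be used without redis installed.
    from redis import Redis

    client = Redis(host=os.environ["HOST"], port=int(os.environ["PORT"]))
    print("previous count:", reset_visitor_count(client))
```

Saved alongside app.py with a call to `main()` at the bottom, the script could be run with a command such as `docker exec flask-twelve-factor-web-app python reset_count.py`, so it uses the same image, dependencies, and environment variables as the running application.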

Check out our Twelve-Factor App course.

Conclusion

In conclusion, the 12-factor app methodology provides a clear and concise framework for building modern, scalable, and maintainable applications. By adhering to these principles, developers can ensure that their applications are portable, easily deployable, and can run on various infrastructures.

The 12-factor app methodology also emphasizes stateless processes, enabling developers to scale their applications horizontally as needed. Additionally, it encourages the use of modern technologies and tools, such as containerization, continuous delivery, and cloud platforms, to streamline the development and deployment process.

Overall, the 12-factor app methodology represents a best practice for building and deploying applications in the modern era. Its principles can help developers build applications that are resilient, scalable, and easy to manage, while also promoting collaboration, transparency, and agility.

By following these principles, developers can create applications that are ready for the challenges of the future and that can meet the evolving needs of their users and stakeholders.

Please share your suggestions and feedback for the blog by leaving a comment. Your input is valuable and will help us improve our content. Thank you!
